HUMAnN 3.0 overview:

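HUMAnN 3.0 is a metagenomic analysis tool that infers the metabolic functions of a microbial community from metagenomic sequencing data. It identifies the metabolic pathways present in the community and quantifies their abundance. To do this, HUMAnN 3.0 relies on several tools and databases, including MetaPhlAn 3, DIAMOND and UniRef90.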

Official site: humann3 – The Huttenhower Lab (harvard.edu)

Repository: github.com

HUMAnN 3.0 installation steps:

Installing with conda or mamba

1. Create and activate a new environment (optional)

First, create a new environment for HUMAnN 3, in which HUMAnN 3 and its dependencies can be managed independently.

# Installing humann through conda also sets up MetaPhlAn by default, so the environment is named after bioBakery

conda create --name biobakery3 python=3.7

# or

mamba create --name biobakery3 python=3.7

Next, activate the newly created environment:

conda activate biobakery3

# or

mamba activate biobakery3

Set up the conda channels:

conda config --add channels defaults

conda config --add channels bioconda

conda config --add channels conda-forge

conda config --add channels biobakery

2. Install HUMAnN 3

Now install HUMAnN 3 with conda or mamba. HUMAnN 3 is also published as a Python package, so it can be installed either with pip or with conda/mamba.

conda install -c bioconda humann

# or

mamba install -c bioconda humann

This installs HUMAnN 3 and its dependencies from the bioconda channel.
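Since every step here can use either conda or mamba, a small helper can pick whichever one is installed. This is a minimal sketch, not part of HUMAnN: `pick_installer`, `install_humann` and the `DRY_RUN` switch are hypothetical names, and passing all three channels with `-c` is an assumption based on the channels configured above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of HUMAnN): prefer mamba, fall back to conda.
pick_installer() {
  if command -v mamba >/dev/null 2>&1; then
    echo mamba
  else
    echo conda
  fi
}

# Hypothetical helper: install humann into the active environment,
# naming every channel explicitly. With DRY_RUN=1 the command is only printed.
install_humann() {
  local installer
  installer="$(pick_installer)"
  local cmd=("$installer" install -y humann -c biobakery -c bioconda -c conda-forge)
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Usage (inside the activated biobakery3 environment):
#   DRY_RUN=1 install_humann   # preview the command
#   install_humann             # actually install
```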

If the installation reports missing dependencies, install them manually:

# if bowtie2 is reported missing

mamba install bowtie2 -c bioconda

# if diamond is reported missing

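mamba install diamond -c bioconda

In my case, installing humann3 with mamba or conda failed. I tried installing the dependencies from bioconda, but MetaPhlAn still would not install, so in the end I installed HUMAnN successfully from PyPI; the version is currently 3.8.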

Install HUMAnN 3.0 from PyPI with the following command:

# officially recommended method:

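pip install humann --no-binary :all:

### pip downloads the humann package, then builds and installs it automatically. This worked for me, but after installing with pip you get an error saying MetaPhlAn cannot be found, so MetaPhlAn has to be installed and configured separately; otherwise humann fails at run time: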

CRITICAL ERROR: The metaphlan executable can not be found. Please check the install.
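This error means humann could not find the `metaphlan` executable on `PATH`. To check all external tools before starting a long run, something like the sketch below works; `check_humann_deps` is a hypothetical helper, and the tool list (metaphlan, bowtie2, diamond) is taken from the errors mentioned in this post.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which of the given executables are missing from PATH.
check_humann_deps() {
  local missing=0 tool
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found:   $tool -> $(command -v "$tool")"
    else
      echo "missing: $tool" >&2
      missing=$((missing + 1))
    fi
  done
  # Exit status is non-zero when at least one tool is missing
  [ "$missing" -eq 0 ]
}

# Usage: check_humann_deps metaphlan bowtie2 diamond
```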

This is really a sign of an incomplete installation: the channels set for mamba/conda earlier did not take effect. The correct installation is:

### Pass all the required channels so that the dependencies are fully resolved.

mamba install humann -c biobakery -c bioconda -c conda-forge

##### Unbelievable: bioBakery's own channel does not carry a complete set of dependencies by itself. What a huge pitfall!

3. Download the databases

HUMAnN 3.0 uses several databases, and their files have to be downloaded:

First, list the available databases:

humann_databases --available

HUMAnN Databases ( database : build = location )

chocophlan : full = http://huttenhower.sph.harvard.edu/humann_data/chocophlan/full_chocophlan.v201901_v31.tar.gz

chocophlan : DEMO = http://huttenhower.sph.harvard.edu/humann_data/chocophlan/DEMO_chocophlan.v201901_v31.tar.gz

uniref : uniref50_diamond = http://huttenhower.sph.harvard.edu/humann_data/uniprot/uniref_annotated/uniref50_annotated_v201901b_full.tar.gz

uniref : uniref90_diamond = http://huttenhower.sph.harvard.edu/humann_data/uniprot/uniref_annotated/uniref90_annotated_v201901b_full.tar.gz

uniref : uniref50_ec_filtered_diamond = http://huttenhower.sph.harvard.edu/humann_data/uniprot/uniref_ec_filtered/uniref50_ec_filtered_201901b_subset.tar.gz

uniref : uniref90_ec_filtered_diamond = http://huttenhower.sph.harvard.edu/humann_data/uniprot/uniref_ec_filtered/uniref90_ec_filtered_201901b_subset.tar.gz

uniref : DEMO_diamond = http://huttenhower.sph.harvard.edu/humann_data/uniprot/uniref_annotated/uniref90_DEMO_diamond_v201901b.tar.gz

utility_mapping : full = http://huttenhower.sph.harvard.edu/humann_data/full_mapping_v201901b.tar.gz

### Why are these still 2019 builds? Have the databases stopped being updated?

Download the selected databases:

humann_databases --download chocophlan full $DIR_TO_STORE_DB

humann_databases --download uniref uniref90_diamond $DIR_TO_STORE_DB

# where $DIR_TO_STORE_DB is the path where you want to store the database files, for example:

humann_databases --download chocophlan full /path/to/databases --update-config yes

humann_databases --download uniref uniref90_diamond /path/to/databases --update-config yes

humann_databases --download utility_mapping full /path/to/databases --update-config yes

To download the databases manually, use the corresponding links listed by humann_databases above and extract each archive into its target folder:

wget -c http://huttenhower.sph.harvard.edu/humann_data/chocophlan/full_chocophlan.v201901_v31.tar.gz

wget -c http://huttenhower.sph.harvard.edu/humann_data/uniprot/uniref_annotated/uniref90_annotated_v201901b_full.tar.gz

wget -c http://huttenhower.sph.harvard.edu/humann_data/full_mapping_v201901b.tar.gz

mkdir chocophlan_v296_201901

mkdir uniref90_v201901

mkdir mapping_v201901

tar -zxvf full_chocophlan.v201901_v31.tar.gz -C ./chocophlan_v296_201901/

tar -zxvf uniref90_annotated_v201901b_full.tar.gz -C ./uniref90_v201901/

tar -zxvf full_mapping_v201901b.tar.gz -C ./mapping_v201901/

Database settings: first check the current configuration:

# list the existing databases

humann_databases --list

### That command is wrong... ### Better to just look at the data directory.

# Default database directory (if you set a custom directory earlier, look there instead)

/miniconda3/envs/biobakery3/lib/python3.7/site-packages/humann

### The right command is humann_config:

humann_config

HUMAnN Configuration ( Section : Name = Value )

database_folders : nucleotide = /path/to/databases/chocophlan_v296_201901

database_folders : protein = /path/to/databases/uniref90_v201901/

database_folders : utility_mapping = /path/to/databases/mapping_v201901/

run_modes : resume = False

run_modes : verbose = False

run_modes : bypass_prescreen = False

run_modes : bypass_nucleotide_index = False

run_modes : bypass_nucleotide_search = False

run_modes : bypass_translated_search = False

run_modes : threads = 1

alignment_settings : evalue_threshold = 1.0

alignment_settings : prescreen_threshold = 0.01

alignment_settings : translated_subject_coverage_threshold = 50.0

alignment_settings : translated_query_coverage_threshold = 90.0

alignment_settings : nucleotide_subject_coverage_threshold = 50.0

alignment_settings : nucleotide_query_coverage_threshold = 90.0

output_format : output_max_decimals = 10

output_format : remove_stratified_output = False

output_format : remove_column_description_output = False
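The configuration is printed as one `section : name = value` entry per line, so a single value can be pulled out with awk. A minimal sketch, assuming the print format stays exactly as shown above; `get_humann_setting` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "section : name = value" lines (humann_config
# print format) from stdin and print the value of one section/name pair.
get_humann_setting() {
  local section="$1" name="$2"
  awk -v s="$section" -v n="$name" '
    {
      # split "section : name = value" into the key part and the value
      split($0, lhs_rhs, " = ")
      split(lhs_rhs[1], sec_name, " : ")
      if (sec_name[1] == s && sec_name[2] == n) { print lhs_rhs[2]; exit }
    }'
}

# Usage: humann_config | get_humann_setting database_folders nucleotide
```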

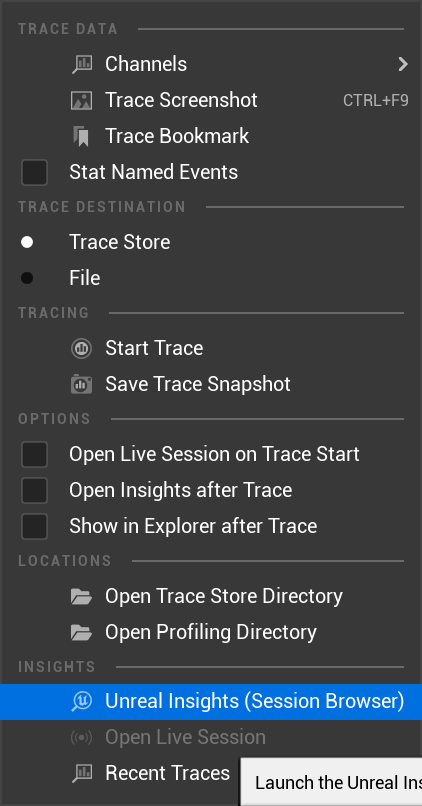

humann_config --help

usage: humann_config [-h] [--print] [--update <section> <name> <value>]

HUMAnN Configuration

optional arguments:
  -h, --help            show this help message and exit
  --print               print the configuration
  --update <section> <name> <value>
                        update the section : name to the value provided
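Every change goes through `--update <section> <name> <value>`, so several settings can be applied in one pass. A minimal sketch: `apply_humann_settings` and the `HUMANN_CONFIG` override (handy as `HUMANN_CONFIG=echo` for a dry run) are hypothetical, not part of HUMAnN.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "<section> <name> <value>" lines from stdin and
# apply each one with humann_config --update. Blank lines and # comments are skipped.
apply_humann_settings() {
  local cfg="${HUMANN_CONFIG:-humann_config}" section name value
  while read -r section name value; do
    case "$section" in ''|'#'*) continue ;; esac
    # </dev/null keeps the command from consuming the settings list
    "$cfg" --update "$section" "$name" "$value" </dev/null || return 1
  done
}

# Usage:
#   apply_humann_settings <<'EOF'
#   database_folders nucleotide /path/to/databases/chocophlan_v296_201901
#   database_folders protein /path/to/databases/uniref90_v201901/
#   database_folders utility_mapping /path/to/databases/mapping_v201901/
#   run_modes threads 30
#   EOF
```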

Point the configuration at the databases you have prepared:

## Update syntax: humann_config --update <section> <name> <value>

humann_config --update database_folders nucleotide /path/to/databases/chocophlan_v296_201901

humann_config --update database_folders protein /path/to/databases/uniref90_v201901/

humann_config --update database_folders utility_mapping /path/to/databases/mapping_v201901/

## View the settings after updating

humann_config

# You can also change other defaults yourself

# For example, my servers all have 30 or more threads, so I set the default number of threads to 30; adjust this to your own server

humann_config --update run_modes threads 30

#######################

# HUMAnN configuration file updated: run_modes : threads = 30

Running HUMAnN 3.0

View the full help for all parameters:


(biobakery3) [root@mgmt ~]# humann --help

usage: humann [-h] -i <input.fastq> -o <output> [--threads <1>] [--version]
              [-r] [--bypass-nucleotide-index] [--bypass-nucleotide-search]
              [--bypass-prescreen] [--bypass-translated-search]
              [--taxonomic-profile <taxonomic_profile.tsv>]
              [--memory-use {minimum,maximum}]
              [--input-format {fastq,fastq.gz,fasta,fasta.gz,sam,bam,blastm8,genetable,biom}]
              [--search-mode {uniref50,uniref90}] [-v]
              [--metaphlan <metaphlan>]
              [--metaphlan-options <metaphlan_options>]
              [--prescreen-threshold <0.01>] [--bowtie2 <bowtie2>]
              [--bowtie-options <bowtie_options>]
              [--nucleotide-database <nucleotide_database>]
              [--nucleotide-identity-threshold <0.0>]
              [--nucleotide-query-coverage-threshold <90.0>]
              [--nucleotide-subject-coverage-threshold <50.0>]
              [--diamond <diamond>] [--diamond-options <diamond_options>]
              [--evalue <1.0>] [--protein-database <protein_database>]
              [--rapsearch <rapsearch>]
              [--translated-alignment {usearch,rapsearch,diamond}]
              [--translated-identity-threshold <Automatically: 50.0 or 80.0, Custom: 0.0-100.0>]
              [--translated-query-coverage-threshold <90.0>]
              [--translated-subject-coverage-threshold <50.0>]
              [--usearch <usearch>] [--gap-fill {on,off}] [--minpath {on,off}]
              [--pathways {metacyc,unipathway}]
              [--pathways-database <pathways_database.tsv>] [--xipe {on,off}]
              [--annotation-gene-index <3>] [--id-mapping <id_mapping.tsv>]
              [--remove-temp-output]
              [--log-level {DEBUG,INFO,WARNING,ERROR,CRITICAL}]
              [--o-log <sample.log>] [--output-basename <sample_name>]
              [--output-format {tsv,biom}] [--output-max-decimals <10>]
              [--remove-column-description-output]
              [--remove-stratified-output]

HUMAnN : HMP Unified Metabolic Analysis Network 3

optional arguments:
  -h, --help            show this help message and exit

[0] Common settings:
  -i <input.fastq>, --input <input.fastq>
                        input file of type {fastq,fastq.gz,fasta,fasta.gz,sam,bam,blastm8,genetable,biom}
                        [REQUIRED]
  -o <output>, --output <output>
                        directory to write output files
                        [REQUIRED]
  --threads <1>         number of threads/processes
                        [DEFAULT: 1]
  --version             show program's version number and exit

[1] Workflow refinement:
  -r, --resume          bypass commands if the output files exist
  --bypass-nucleotide-index
                        bypass the nucleotide index step and run on the indexed ChocoPhlAn database
  --bypass-nucleotide-search
                        bypass the nucleotide search steps
  --bypass-prescreen    bypass the prescreen step and run on the full ChocoPhlAn database
  --bypass-translated-search
                        bypass the translated search step
  --taxonomic-profile <taxonomic_profile.tsv>
                        a taxonomic profile (the output file created by metaphlan)
                        [DEFAULT: file will be created]
  --memory-use {minimum,maximum}
                        the amount of memory to use
                        [DEFAULT: minimum]
  --input-format {fastq,fastq.gz,fasta,fasta.gz,sam,bam,blastm8,genetable,biom}
                        the format of the input file
                        [DEFAULT: format identified by software]
  --search-mode {uniref50,uniref90}
                        search for uniref50 or uniref90 gene families
                        [DEFAULT: based on translated database selected]
  -v, --verbose         additional output is printed

[2] Configure tier 1: prescreen:
  --metaphlan <metaphlan>
                        directory containing the MetaPhlAn software
                        [DEFAULT: $PATH]
  --metaphlan-options <metaphlan_options>
                        options to be provided to the MetaPhlAn software
                        [DEFAULT: "-t rel_ab"]
  --prescreen-threshold <0.01>
                        minimum percentage of reads matching a species
                        [DEFAULT: 0.01]

[3] Configure tier 2: nucleotide search:
  --bowtie2 <bowtie2>   directory containing the bowtie2 executable
                        [DEFAULT: $PATH]
  --bowtie-options <bowtie_options>
                        options to be provided to the bowtie software
                        [DEFAULT: "--very-sensitive"]
  --nucleotide-database <nucleotide_database>
                        directory containing the nucleotide database
                        [DEFAULT: /path/to/databases/chocophlan_v296_201901]
  --nucleotide-identity-threshold <0.0>
                        identity threshold for nuclotide alignments
                        [DEFAULT: 0.0]
  --nucleotide-query-coverage-threshold <90.0>
                        query coverage threshold for nucleotide alignments
                        [DEFAULT: 90.0]
  --nucleotide-subject-coverage-threshold <50.0>
                        subject coverage threshold for nucleotide alignments
                        [DEFAULT: 50.0]

[3] Configure tier 2: translated search:
  --diamond <diamond>   directory containing the diamond executable
                        [DEFAULT: $PATH]
  --diamond-options <diamond_options>
                        options to be provided to the diamond software
                        [DEFAULT: "--top 1 --outfmt 6"]
  --evalue <1.0>        the evalue threshold to use with the translated search
                        [DEFAULT: 1.0]
  --protein-database <protein_database>
                        directory containing the protein database
                        [DEFAULT: /path/to/databases/uniref90_v201901/]
  --rapsearch <rapsearch>
                        directory containing the rapsearch executable
                        [DEFAULT: $PATH]
  --translated-alignment {usearch,rapsearch,diamond}
                        software to use for translated alignment
                        [DEFAULT: diamond]
  --translated-identity-threshold <Automatically: 50.0 or 80.0, Custom: 0.0-100.0>
                        identity threshold for translated alignments
                        [DEFAULT: Tuned automatically (based on uniref mode) unless a custom value is specified]
  --translated-query-coverage-threshold <90.0>
                        query coverage threshold for translated alignments
                        [DEFAULT: 90.0]
  --translated-subject-coverage-threshold <50.0>
                        subject coverage threshold for translated alignments
                        [DEFAULT: 50.0]
  --usearch <usearch>   directory containing the usearch executable
                        [DEFAULT: $PATH]

[5] Gene and pathway quantification:
  --gap-fill {on,off}   turn on/off the gap fill computation
                        [DEFAULT: on]
  --minpath {on,off}    turn on/off the minpath computation
                        [DEFAULT: on]
  --pathways {metacyc,unipathway}
                        the database to use for pathway computations
                        [DEFAULT: metacyc]
  --pathways-database <pathways_database.tsv>
                        mapping file (or files, at most two in a comma-delimited list) to use for pathway computations
                        [DEFAULT: metacyc database ]
  --xipe {on,off}       turn on/off the xipe computation
                        [DEFAULT: off]
  --annotation-gene-index <3>
                        the index of the gene in the sequence annotation
                        [DEFAULT: 3]
  --id-mapping <id_mapping.tsv>
                        id mapping file for alignments
                        [DEFAULT: alignment reference used]

[6] More output configuration:
  --remove-temp-output  remove temp output files
                        [DEFAULT: temp files are not removed]
  --log-level {DEBUG,INFO,WARNING,ERROR,CRITICAL}
                        level of messages to display in log
                        [DEFAULT: DEBUG]
  --o-log <sample.log>  log file
                        [DEFAULT: temp/sample.log]
  --output-basename <sample_name>
                        the basename for the output files
                        [DEFAULT: input file basename]
  --output-format {tsv,biom}
                        the format of the output files
                        [DEFAULT: tsv]
  --output-max-decimals <10>
                        the number of decimals to output
                        [DEFAULT: 10]
  --remove-column-description-output
                        remove the description in the output column
                        [DEFAULT: output column includes description]
  --remove-stratified-output
                        remove stratification from output
                        [DEFAULT: output is stratified]

Main humann utility modules:

humann_barplot

humann_strain_profiler

humann_benchmark

humann_genefamilies_genus_level

humann_reduce_table

humann_rna_dna_norm

humann_build_custom_database

humann_humann1_kegg

humann_regroup_table

humann_split_stratified_table

humann_unpack_pathways

humann_associate

humann_infer_taxonomy

humann_split_table

Run HUMAnN 3.0 with the following commands:

Run each sample individually:

# In HUMAnN 3 the command no longer carries the version number (unlike humann2)

humann --input input.fastq.gz --output output_dir --threads NUM_THREADS
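With many samples, the single-sample command can be wrapped in a loop. A sketch under these assumptions: each sample gets its own output directory, the sample name is the file name up to its first dot, and `run_humann_per_sample` and the `HUMANN` override (e.g. `HUMANN=echo` for a dry run) are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run humann once per input file, each into its own directory.
# Arguments: <output_root> <threads> <file>...
run_humann_per_sample() {
  local outdir="$1" threads="$2"; shift 2
  local humann="${HUMANN:-humann}" fq sample
  for fq in "$@"; do
    sample="$(basename "$fq")"
    sample="${sample%%.*}"   # strip extensions such as .fastq.gz (assumes no dots in sample names)
    "$humann" --input "$fq" --output "$outdir/$sample" --threads "$threads" || return 1
  done
}

# Usage: run_humann_per_sample humann_out 30 data/*.fastq.gz
```

Separate directories keep each sample's temp folder apart; a shared output directory also works, because humann names its output files after the input file basename by default.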

# For forward and reverse reads, add a second --input (or -i) argument in order, or give the two files after -i separated by a comma

# Note: write out each file name and path in full; do not abbreviate the parts the two files share

# It is also best to specify the input file type with --input-format

humann -i <input_forward.fastq> -i <input_reverse.fastq> --output <output_directory> --input-format fastq

# In these commands, input.fastq.gz is the metagenomic data file, output_dir is the output directory, and NUM_THREADS is the number of threads to use.

Viewing the results

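After the analysis finishes, the result files are in output_dir. The main tables are <sample>_genefamilies.tsv (gene family abundance), <sample>_pathabundance.tsv (pathway abundance) and <sample>_pathcoverage.tsv (pathway coverage); the MetaPhlAn taxonomic profile is kept in the <sample>_humann_temp folder.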
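To confirm a run actually finished, check that the three main tables exist and are non-empty. A sketch, assuming the default `<sample>_genefamilies.tsv`, `<sample>_pathabundance.tsv` and `<sample>_pathcoverage.tsv` names (that is, no custom `--output-basename`); `check_humann_outputs` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that the main HUMAnN 3 tables for one sample
# exist and are non-empty. Arguments: <output_dir> <sample_basename>
check_humann_outputs() {
  local outdir="$1" sample="$2" table status=0
  for table in genefamilies pathabundance pathcoverage; do
    if [ -s "$outdir/${sample}_${table}.tsv" ]; then
      echo "ok:      $outdir/${sample}_${table}.tsv"
    else
      echo "missing: $outdir/${sample}_${table}.tsv" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage: check_humann_outputs output_dir input
```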