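This post uses the libraries compiled in the previous article (http://www.cnblogs.com/wenjingu/p/3977015.html) to pull a raw H.264 stream from a network camera over RTSP and save it to an MP4 file.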

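1. In VS2010, create a Visual C++ Win32 Console Application project.

2. Under the project directory, create a `lib` directory and an `include` directory. Copy the compiled .lib files into `lib`, the headers into `include`, and the .dll files into the `Debug` directory.

3. Under Project Properties -- Configuration Properties -- VC++ Directories -- Include Directories, add the FFmpeg header directory and any other third-party header directories.

Under Linker -- General -- Additional Library Directories, add the `lib` directory.

Under Linker -- Input -- Additional Dependencies, add the name of each .lib file.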

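4. Design and implementation:

4.1 Design:

Initialize the components and networking -> open the network stream -> read the stream information -> set up the output stream from the input stream information -> create and open the MP4 file -> write the MP4 header

-> read packets from the input stream in a loop and write them to the MP4 file -> write the trailer -> close the streams and the file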
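A live RTSP stream never ends on its own. The loop step therefore needs an explicit exit, or the "write the trailer" and "close" steps are never reached.

Below is a minimal sketch that assumes the recorder should stop when the user presses Ctrl+C. The names `install_stop_handler` and `stop_requested` are hypothetical. The FFmpeg calls appear only in a comment, so the snippet compiles on its own.

```c
#include <assert.h>
#include <signal.h>

/* Set by the signal handler; volatile sig_atomic_t is the type the C
 * standard allows a handler to write safely. */
static volatile sig_atomic_t g_stop = 0;

static void on_sigint(int sig)
{
    (void)sig;
    g_stop = 1;
}

/* Hypothetical helper: make Ctrl+C (SIGINT) request a clean stop. */
static void install_stop_handler(void)
{
    void (*prev)(int) = signal(SIGINT, on_sigint);
    assert(prev != SIG_ERR);
    (void)prev;
}

/* Hypothetical helper: polled once per loop iteration. */
static int stop_requested(void)
{
    return g_stop != 0;
}

/* Intended place in the recording loop (FFmpeg calls shown as comments):
 *
 *   install_stop_handler();
 *   while (!stop_requested()) {
 *       if (av_read_frame(i_fmt_ctx, &i_pkt) < 0)
 *           break;
 *       ... rescale timestamps, av_interleaved_write_frame(), free packet ...
 *   }
 *   av_write_trailer(o_fmt_ctx);   (now also reached after Ctrl+C)
 */
```

The flag is only checked once per packet. A blocking `av_read_frame()` returns only when the camera sends data. For a stream that may stall, FFmpeg's `AVFormatContext.interrupt_callback` can be used to make a blocking read give up.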

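4.2 Key data structures:

The main ones are AVFormatContext, AVStream, AVCodecContext, AVPacket and AVFrame. They relate as follows:

An AVFormatContext (one per opened input or output) contains several AVStreams. In the FFmpeg version used here, each AVStream carries an AVCodecContext (`stream->codec`), which points to its AVCodec. AVPicture is a subset of AVFrame (its `data` and `linesize` fields).

AVPacket and AVFrame are the buffers that hold data as it passes through demuxing and decoding. Data read from the stream is first stored in an AVPacket, which holds compressed data; an AVFrame holds decoded (raw) data. For video, one AVPacket normally holds one compressed frame, while for audio one AVPacket may hold several frames.

AVPacket is therefore the unit in which a stream is split into packets. Since this program only remuxes, it never decodes and works with AVPackets alone.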
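Here is a concrete view of what an H.264 AVPacket from the camera contains. A packet returned by `av_read_frame()` usually carries one access unit (one coded picture). That access unit is itself a sequence of NAL units, for example SPS, PPS and an IDR slice in front of a key frame.

The sketch below assumes Annex B framing (start codes `00 00 01` or `00 00 00 01`). To my knowledge, FFmpeg's RTP H.264 depacketizer produces this framing; MP4-style length-prefixed data would need a different parser. `next_nal_start` and `count_nal_units` are hypothetical helpers, not FFmpeg APIs.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Returns the offset just past the next Annex B start code (00 00 01) found
 * at or after `pos`, or `len` if there is none. A 4-byte start code
 * (00 00 00 01) is matched as a leading zero followed by 00 00 01. */
static size_t next_nal_start(const uint8_t *buf, size_t len, size_t pos)
{
    while (pos + 3 <= len) {
        if (buf[pos] == 0 && buf[pos + 1] == 0 && buf[pos + 2] == 1)
            return pos + 3;
        pos++;
    }
    return len;
}

/* Hypothetical helper: counts the NAL units in an Annex B packet and sets
 * *has_idr to 1 if one of them is an IDR slice (nal_unit_type 5). */
static size_t count_nal_units(const uint8_t *buf, size_t len, int *has_idr)
{
    size_t count = 0;
    size_t pos;

    assert(has_idr != NULL);
    *has_idr = 0;
    pos = next_nal_start(buf, len, 0);
    while (pos < len) {
        int nal_type = buf[pos] & 0x1F;  /* low 5 bits of the NAL header byte */
        if (nal_type == 5)
            *has_idr = 1;
        count++;
        pos = next_nal_start(buf, len, pos);
    }
    return count;
}
```

Consider a key-frame packet laid out as `00 00 00 01 67 ..`, `00 00 00 01 68 ..`, `00 00 01 65 ..` (SPS, PPS, IDR slice). The helper reports three NAL units and an IDR slice. This is the information the demuxer uses to mark key frames, so the packet's key-frame flag should come from the stream rather than be forced by the application.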

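4.3 Key functions:

```c
int avformat_open_input(AVFormatContext **ps, const char *filename, AVInputFormat *fmt, AVDictionary **options); // open a network or file stream
int avformat_write_header(AVFormatContext *s, AVDictionary **options); // write the container header; the format was chosen from the file name's extension when the output context was created
int av_read_frame(AVFormatContext *s, AVPacket *pkt);                  // read one packet from the input stream
int av_interleaved_write_frame(AVFormatContext *s, AVPacket *pkt);     // write one packet to the output stream, interleaving packets by dts
int av_write_trailer(AVFormatContext *s);                              // write the trailer of the output stream (file); for MP4 this writes the moov index
```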
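`av_interleaved_write_frame()` expects packet timestamps in the output stream's `time_base`. That time base need not match the input's; H.264 over RTP uses a 90 kHz clock. FFmpeg converts between time bases with `av_rescale_q()`, which computes a × bq / cq with round-to-nearest.

The sketch below reimplements that arithmetic in plain C for illustration only. It assumes non-negative timestamps and no overflow. `struct rational` and `rescale_q` are hypothetical names; the real function also guards against overflow.

```c
#include <assert.h>
#include <stdint.h>

/* Same shape as FFmpeg's AVRational: the time base is num/den seconds. */
struct rational {
    int num;
    int den;
};

/* Converts timestamp a from time base `from` to time base `to`:
 * a * from.num * to.den / (from.den * to.num), rounded to nearest with
 * halves rounded up. Assumes a >= 0, positive num/den, and no int64_t
 * overflow (av_rescale_q() itself guards against overflow). */
static int64_t rescale_q(int64_t a, struct rational from, struct rational to)
{
    int64_t b = (int64_t)from.num * to.den;
    int64_t c = (int64_t)from.den * to.num;

    assert(a >= 0 && b > 0 && c > 0);
    return (a * b + c / 2) / c;
}
```

For example, 3600 ticks of the 90 kHz clock (40 ms, one frame at 25 fps) become 512 ticks in a hypothetical 1/12800 output time base. One frame at 1/25 becomes 3600 ticks at 1/90000.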

4.4 Code:

```cpp
#include "stdafx.h"

#ifdef __cplusplus
extern "C" {
#endif
#include <libavcodec/avcodec.h>
#include <libavdevice/avdevice.h>
#include <libavformat/avformat.h>
#include <libavfilter/avfilter.h>
#include <libavutil/avutil.h>
#include <libswscale/swscale.h>
#include <stdlib.h>
#include <stdio.h>
#include <string.h>
#include <math.h>
#ifdef __cplusplus
}
#endif

AVFormatContext *i_fmt_ctx;
AVStream *i_video_stream;

AVFormatContext *o_fmt_ctx;
AVStream *o_video_stream;

/* Release whatever has been opened so far (all handles are globals). */
static void cleanup(void)
{
    if (i_fmt_ctx)
        avformat_close_input(&i_fmt_ctx);
    if (o_fmt_ctx)
    {
        if (o_fmt_ctx->pb)
            avio_close(o_fmt_ctx->pb);
        avformat_free_context(o_fmt_ctx);  /* also frees the output streams */
        o_fmt_ctx = NULL;
    }
}

int _tmain(int argc, char **argv)
{
    /* Registration is required by the FFmpeg 2.x-era API used here. */
    avcodec_register_all();
    av_register_all();
    avformat_network_init();

    /* should set to NULL so that avformat_open_input() allocate a new one */
    i_fmt_ctx = NULL;
    char rtspUrl[] = "rtsp://admin:12345@192.168.10.76:554";
    const char *filename = "1.mp4";

    if (avformat_open_input(&i_fmt_ctx, rtspUrl, NULL, NULL) != 0)
    {
        fprintf(stderr, "could not open input file\n");
        return -1;
    }

    if (avformat_find_stream_info(i_fmt_ctx, NULL) < 0)
    {
        fprintf(stderr, "could not find stream info\n");
        cleanup();
        return -1;
    }

    //av_dump_format(i_fmt_ctx, 0, rtspUrl, 0);

    /* find first video stream */
    for (unsigned i = 0; i < i_fmt_ctx->nb_streams; i++)
    {
        if (i_fmt_ctx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO)
        {
            i_video_stream = i_fmt_ctx->streams[i];
            break;
        }
    }
    if (i_video_stream == NULL)
    {
        fprintf(stderr, "didn't find any video stream\n");
        cleanup();
        return -1;
    }

    if (avformat_alloc_output_context2(&o_fmt_ctx, NULL, NULL, filename) < 0 || !o_fmt_ctx)
    {
        fprintf(stderr, "could not create output context\n");
        cleanup();
        return -1;
    }

    /*
     * Stream copy (no re-encoding): copy the input codec parameters,
     * including extradata (the H.264 SPS/PPS the MP4 muxer needs).
     */
    o_video_stream = avformat_new_stream(o_fmt_ctx, NULL);
    if (!o_video_stream || avcodec_copy_context(o_video_stream->codec, i_video_stream->codec) < 0)
    {
        fprintf(stderr, "could not create output stream\n");
        cleanup();
        return -1;
    }
    AVCodecContext *c = o_video_stream->codec;
    c->codec_tag = 0;                          /* let the MP4 muxer choose the codec tag */
    c->time_base = i_video_stream->time_base;
    if (o_fmt_ctx->oformat->flags & AVFMT_GLOBALHEADER)
        c->flags |= CODEC_FLAG_GLOBAL_HEADER;
    fprintf(stderr, "time_base.num = %d time_base.den = %d\n", c->time_base.num, c->time_base.den);
    printf("%d %d %d\n", c->width, c->height, c->pix_fmt);

    if (avio_open(&o_fmt_ctx->pb, filename, AVIO_FLAG_WRITE) < 0)
    {
        fprintf(stderr, "could not open %s\n", filename);
        cleanup();
        return -1;
    }
    if (avformat_write_header(o_fmt_ctx, NULL) < 0)
    {
        fprintf(stderr, "could not write header\n");
        cleanup();
        return -1;
    }

    int frame_num = 0;
    while (1)
    {
        AVPacket i_pkt;
        av_init_packet(&i_pkt);
        i_pkt.size = 0;
        i_pkt.data = NULL;
        /* For a live camera this only fails when the stream stops; add your own
         * stop condition if av_write_trailer() below must always be reached. */
        if (av_read_frame(i_fmt_ctx, &i_pkt) < 0)
            break;

        /* only the video stream is recorded; drop audio and other packets */
        if (i_pkt.stream_index != i_video_stream->index)
        {
            av_free_packet(&i_pkt);
            continue;
        }

        /*
         * pts and dts should increase monotonically
         * pts should be >= dts
         * Convert them from the input to the output time base (the muxer may
         * have changed the latter in avformat_write_header()) and keep the
         * key-frame flag exactly as the demuxer set it.
         */
        if (i_pkt.pts != AV_NOPTS_VALUE)
            i_pkt.pts = av_rescale_q(i_pkt.pts, i_video_stream->time_base, o_video_stream->time_base);
        if (i_pkt.dts != AV_NOPTS_VALUE)
            i_pkt.dts = av_rescale_q(i_pkt.dts, i_video_stream->time_base, o_video_stream->time_base);
        i_pkt.duration = av_rescale_q(i_pkt.duration, i_video_stream->time_base, o_video_stream->time_base);
        i_pkt.pos = -1;
        i_pkt.stream_index = o_video_stream->index;

        printf("frame %d\n", ++frame_num);
        if (av_interleaved_write_frame(o_fmt_ctx, &i_pkt) < 0)
            fprintf(stderr, "could not write frame %d\n", frame_num);
        av_free_packet(&i_pkt);  /* release the packet before reading the next one */
    }

    av_write_trailer(o_fmt_ctx);
    cleanup();
    return 0;
}
```

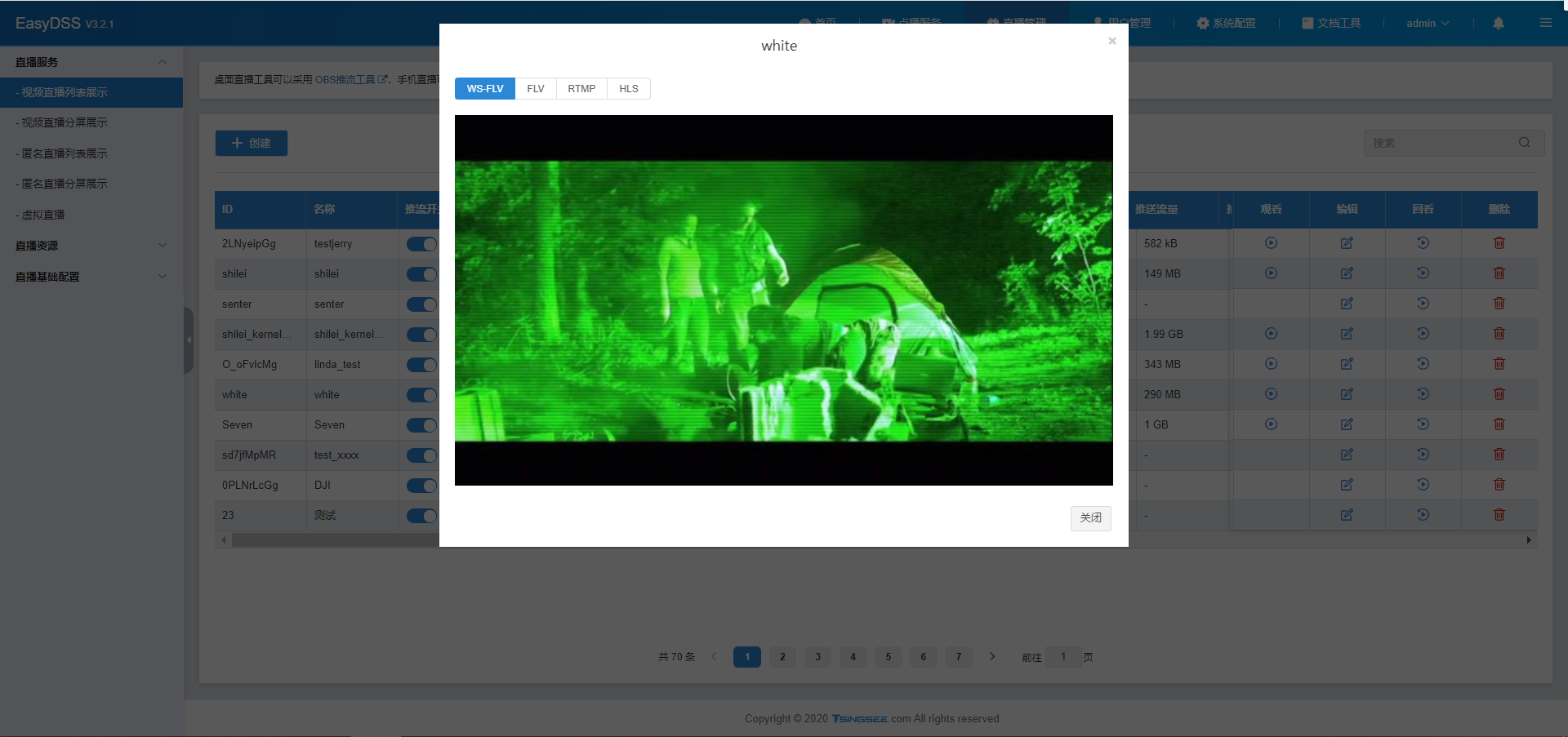

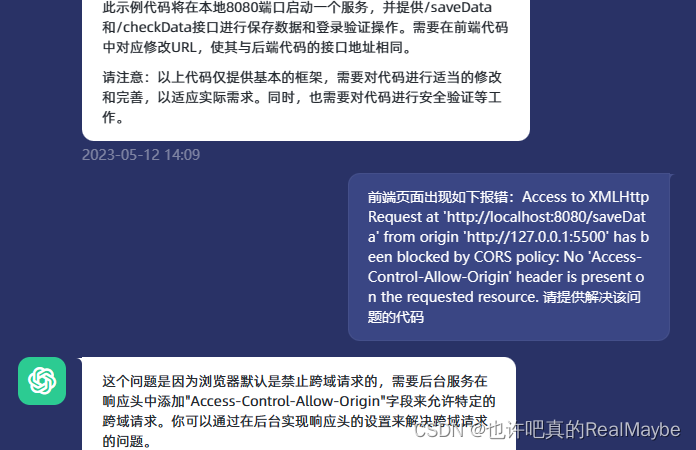

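5. Testing

The screenshots above show the program's console output while recording. The resulting MP4 file can be played in any player that supports the format.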
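A recording is only playable if `av_write_trailer()` ran. By default, FFmpeg's MP4 muxer writes the `moov` box (the sample index) there, and most players refuse a file without it.

A quick check is to walk the file's top-level boxes and look for `moov`. Each box starts with a 4-byte big-endian size and a 4-byte type. The sketch below does this on an in-memory buffer. `read_be32` and `mp4_has_box` are hypothetical helpers, and for brevity they do not handle 64-bit (`size == 1`) or open-ended (`size == 0`) boxes.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Reads a 32-bit big-endian integer (MP4 box sizes are big-endian). */
static uint32_t read_be32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Hypothetical helper: returns 1 if a top-level box with the given
 * 4-character type is reached in buf, 0 otherwise. Walking stops at a box
 * whose size is < 8 (so the size-0 and 64-bit size-1 forms are not
 * supported) or whose size runs past the end of the buffer. */
static int mp4_has_box(const uint8_t *buf, size_t len, const char *type)
{
    size_t pos = 0;

    assert(type != NULL && strlen(type) == 4);
    while (len - pos >= 8) {
        uint32_t size = read_be32(buf + pos);
        if (memcmp(buf + pos + 4, type, 4) == 0)
            return 1;
        if (size < 8 || size > len - pos)
            return 0;
        pos += size;
    }
    return 0;
}
```

To check a real recording, read the file (for example `1.mp4`) into memory with `fread()` and call `mp4_has_box(data, size, "moov")`.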


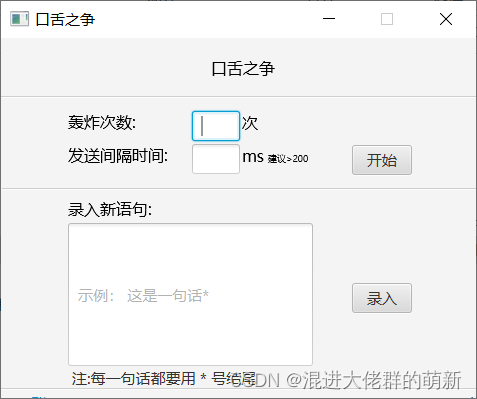

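Using FFmpeg to pull an RTSP surveillance camera stream directly over the network for real-time monitoring

Most FFmpeg tutorials online show how to open a local video file, and few cover reading directly from a camera, even though many surveillance cameras only expose an RTSP stream. Here the technical staff at 吾爱 offer a solution.

FFmpeg reads the camera's RTSP stream directly, which provides remote monitoring and live viewing.

The code is as follows: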

```cpp
#include "FFPlayVeido.h"

FFPlayVedio::FFPlayVedio(HWND hwd)
{
    // Main window handle. SDL_WINDOWID is an SDL 1.2 mechanism; the SDL 2.0 calls
    // used below ignore it (SDL 2.0 embeds into an existing window via SDL_CreateWindowFrom).
    static char sdl_var[64];
    sprintf(sdl_var, "SDL_WINDOWID=%lu", (unsigned long)(size_t)hwd);
    putenv(sdl_var);
    // Initialization
    av_register_all();
    avformat_network_init();
    if (SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER))
    {
        printf("Could not initialize SDL - %s\n", SDL_GetError());
        return;
    }
}

FFPlayVedio::~FFPlayVedio()
{
}

void FFPlayVedio::OnInit(HWND hwd)
{
}

void FFPlayVedio::GetVedioPlay(char *name)
{
    AVFormatContext *pFormatCtx;
    int i, videoindex;
    AVCodecContext *pCodecCtx;
    AVCodec *pCodec;
    char filepath[] = "rtsp://admin:123456@192.168.1.252:554/mpeg4cif";

    pFormatCtx = avformat_alloc_context();
    if (avformat_open_input(&pFormatCtx, filepath, NULL, NULL) != 0)
    {
        printf("Couldn't open input stream.\n");
        return;
    }
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0)
    {
        printf("Couldn't find stream information.\n");
        avformat_close_input(&pFormatCtx);
        return;
    }
    videoindex = -1;
    for (i = 0; i < (int)pFormatCtx->nb_streams; i++)
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO)
        {
            videoindex = i;
            break;
        }
    if (videoindex == -1)
    {
        printf("Didn't find a video stream.\n");
        avformat_close_input(&pFormatCtx);
        return;
    }
    pCodecCtx = pFormatCtx->streams[videoindex]->codec;
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == NULL)
    {
        printf("Codec not found.\n");
        avformat_close_input(&pFormatCtx);
        return;
    }
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0)
    {
        printf("Could not open codec.\n");
        avformat_close_input(&pFormatCtx);
        return;
    }
    AVFrame *pFrame, *pFrameYUV;
    pFrame = avcodec_alloc_frame();
    pFrameYUV = avcodec_alloc_frame();
    uint8_t *out_buffer = (uint8_t *)av_malloc(avpicture_get_size(PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height));
    avpicture_fill((AVPicture *)pFrameYUV, out_buffer, PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height);

    //SDL---------------------------
    int screen_w = 0, screen_h = 0;
    //SDL 2.0 Support for multiple windows
    SDL_Window *screen;
    screen_w = pCodecCtx->width;
    screen_h = pCodecCtx->height;
    screen = SDL_CreateWindow("Simplest ffmpeg player's Window", SDL_WINDOWPOS_UNDEFINED, SDL_WINDOWPOS_UNDEFINED,
                              screen_w, screen_h, SDL_WINDOW_OPENGL);
    if (!screen)
    {
        printf("SDL: could not create window - exiting:%s\n", SDL_GetError());
        return;
    }
    SDL_Renderer *sdlRenderer = SDL_CreateRenderer(screen, -1, 0);
    //IYUV: Y + U + V  (3 planes)
    //YV12: Y + V + U  (3 planes)
    SDL_Texture *sdlTexture = SDL_CreateTexture(sdlRenderer, SDL_PIXELFORMAT_IYUV, SDL_TEXTUREACCESS_STREAMING,
                                                pCodecCtx->width, pCodecCtx->height);
    SDL_Rect sdlRect;
    sdlRect.x = 0;
    sdlRect.y = 0;
    sdlRect.w = screen_w;
    sdlRect.h = screen_h;
    //SDL End----------------------

    int ret, got_picture;
    AVPacket *packet = (AVPacket *)av_malloc(sizeof(AVPacket));
    //Output Info-----------------------------
    printf("File Information---------------------\n");
    av_dump_format(pFormatCtx, 0, filepath, 0);
    printf("-------------------------------------------------\n");
#if OUTPUT_YUV420P
    FILE *fp_yuv = fopen("output.yuv", "wb+");
#endif
    struct SwsContext *img_convert_ctx;
    img_convert_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,
                                     pCodecCtx->width, pCodecCtx->height, PIX_FMT_YUV420P,
                                     SWS_BICUBIC, NULL, NULL, NULL);
    //------------------------------
    while (av_read_frame(pFormatCtx, packet) >= 0)
    {
        if (packet->stream_index == videoindex)
        {
            ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
            if (ret < 0)
            {
                printf("Decode Error.\n");
                av_free_packet(packet);
                break;  // leave the loop so the cleanup below still runs
            }
            if (got_picture)
            {
                sws_scale(img_convert_ctx, (const uint8_t * const *)pFrame->data, pFrame->linesize, 0,
                          pCodecCtx->height, pFrameYUV->data, pFrameYUV->linesize);
#if OUTPUT_YUV420P
                int y_size = pCodecCtx->width * pCodecCtx->height;
                fwrite(pFrameYUV->data[0], 1, y_size, fp_yuv);     //Y
                fwrite(pFrameYUV->data[1], 1, y_size / 4, fp_yuv); //U
                fwrite(pFrameYUV->data[2], 1, y_size / 4, fp_yuv); //V
#endif
                //SDL---------------------------
                SDL_UpdateTexture(sdlTexture, &sdlRect, pFrameYUV->data[0], pFrameYUV->linesize[0]);
                SDL_RenderClear(sdlRenderer);
                SDL_RenderCopy(sdlRenderer, sdlTexture, &sdlRect, &sdlRect);
                SDL_RenderPresent(sdlRenderer);
                //SDL End-----------------------
                //Delay 40 ms (about 25 fps)
                SDL_Delay(40);
            }
        }
        av_free_packet(packet);
    }
    sws_freeContext(img_convert_ctx);
#if OUTPUT_YUV420P
    fclose(fp_yuv);
#endif
    SDL_DestroyTexture(sdlTexture);
    SDL_DestroyRenderer(sdlRenderer);
    SDL_DestroyWindow(screen);
    SDL_Quit();
    av_free(packet);
    av_free(out_buffer);
    av_free(pFrameYUV);
    av_free(pFrame);
    avcodec_close(pCodecCtx);
    avformat_close_input(&pFormatCtx);
    return;
}

void FFPlayVedio::StopVedio()
{
}
```
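The player relies on the YUV420P buffer in `pFrameYUV` being one contiguous block. `avpicture_fill()` lays out the Y plane first, followed by the U and V planes, each a quarter of the Y size. That is why the `OUTPUT_YUV420P` branch writes `y_size`, `y_size / 4` and `y_size / 4` bytes. It is also why `pFrameYUV->data[0]` alone can be passed to `SDL_UpdateTexture()` for an IYUV texture, which expects the planes back to back (SDL 2.0 also offers `SDL_UpdateYUVTexture()` for separate planes).

The sketch below computes those sizes and offsets, assuming even width and height and no row padding. `struct yuv420p_layout` and `yuv420p_layout_for` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical description of a tightly packed YUV420P (IYUV/I420) frame. */
struct yuv420p_layout {
    size_t y_size;    /* width * height luma bytes */
    size_t uv_size;   /* each chroma plane: (width / 2) * (height / 2) bytes */
    size_t u_offset;  /* U plane starts right after Y */
    size_t v_offset;  /* V plane starts right after U */
    size_t total;     /* y_size + 2 * uv_size, i.e. 1.5 bytes per pixel */
};

static struct yuv420p_layout yuv420p_layout_for(size_t width, size_t height)
{
    struct yuv420p_layout l;

    assert(width % 2 == 0 && height % 2 == 0);  /* odd sizes need rounding up */
    l.y_size = width * height;
    l.uv_size = (width / 2) * (height / 2);
    l.u_offset = l.y_size;
    l.v_offset = l.y_size + l.uv_size;
    l.total = l.y_size + 2 * l.uv_size;
    return l;
}
```

For a 1920x1080 camera this gives a 2,073,600-byte Y plane, two 518,400-byte chroma planes and 3,110,400 bytes in total.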

Here is also the code that reads the camera stream directly and saves it to an MP4 file: