1 Title

Text-to-Image Diffusion Models are Zero-Shot Classifiers (Kevin Clark, Priyank Jaini) [NeurIPS Proceedings 2023]

2 Conclusion

This study investigates diffusion models by proposing a method for evaluating them as zero-shot classifiers. The key idea is to use a diffusion model's ability to denoise a noised image, given a text description of a label, as a proxy for that label's likelihood.
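The key idea can be written as a scoring rule. The formulation below is a minimal sketch in our own notation, not necessarily the paper's: the image $x_0$ is noised to level $t$, the model $\hat{x}_\theta$ reconstructs it under the prompt for class $c$, and the class with the lowest weighted reconstruction error is predicted. The timestep weights $w_t$ and the choice to measure error on the reconstructed image (rather than on predicted noise) are assumptions here.

$$x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon, \qquad \epsilon \sim \mathcal{N}(0, I)$$

$$\hat{y} = \arg\min_{c} \sum_{t} w_t \, \mathbb{E}_{\epsilon}\!\left[\big\lVert x_0 - \hat{x}_\theta(x_t, t, \text{prompt}_c)\big\rVert_2^2\right]$$

A lower weighted error plays the role of a higher likelihood for label $c$.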

3 Good Sentences

1. We show text-to-image diffusion models can be used as effective zero-shot classifiers. While using too much compute to be very practical on downstream tasks, the method provides a way of quantitatively studying what the models learn. (The main contribution and the remaining shortcoming.)

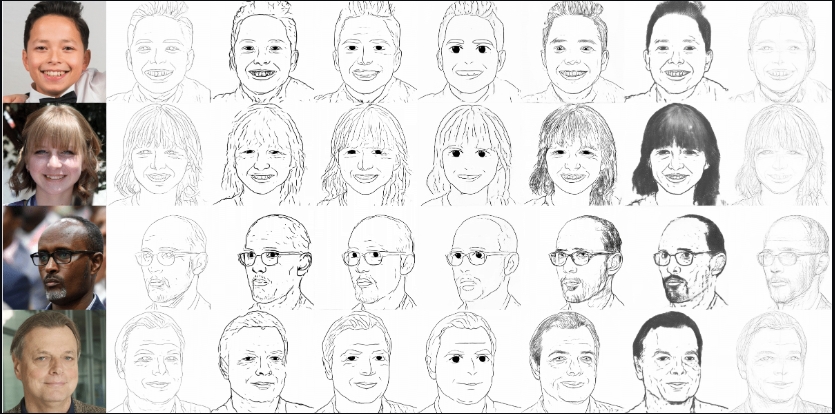

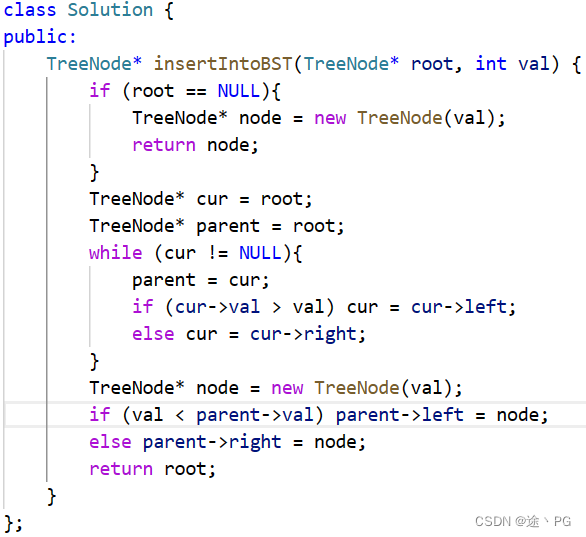

2. More specifically, the method repeatedly noises and denoises the input image while conditioning the model on a different text prompt for each possible class. The class whose text prompt results in the best denoising ability is predicted. This procedure is expensive because it requires denoising many times per class (with different noise levels). (The essence of this method and its shortcoming: it is very expensive.)

3. Our paper is complementary to concurrent work from Li et al. (2023), who use Stable Diffusion as a zero-shot classifier and explore some different tasks like relational reasoning. While their approach is similar to ours, they perform different analysis, and their results are slightly worse than ours due to them using a simple hand-tuned class pruning method and no timestep weighting. (The advance of this study compared with concurrent work.)
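The procedure described in sentence 2 can be sketched as a toy program. This is an illustration under stated assumptions, not the authors' implementation: `PROTOTYPES`, `toy_denoiser`, and the cosine `alpha_bar` schedule are hypothetical stand-ins for a real text-conditioned model (Imagen or Stable Diffusion) and its noise schedule. The sketch shows only the core loop: noise the image at several noise levels, denoise it once per class prompt, and record the reconstruction error.

```python
import numpy as np

# Hypothetical stand-in for a text-conditioned diffusion model: each class
# "prompt" c is represented by a prototype image, and the toy denoiser pulls
# the noisy input toward that prototype. A real model would be a large
# network conditioned on a prompt such as "a photo of a <class>".
PROTOTYPES = np.random.default_rng(0).normal(size=(3, 8, 8))  # 3 classes, 8x8 images


def alpha_bar(t):
    """Fraction of signal kept at noise level t in [0, 1] (cosine schedule, assumed)."""
    return np.cos(0.5 * np.pi * t) ** 2


def toy_denoiser(x_t, t, c):
    """Predict the clean image from x_t, conditioned on class c (toy model)."""
    a = alpha_bar(t)
    return np.sqrt(a) * x_t + (1.0 - a) * PROTOTYPES[c]


def score_matrix(x0, timesteps, rng):
    """Denoising error for every (class, timestep) pair."""
    scores = np.zeros((len(PROTOTYPES), len(timesteps)))
    for j, t in enumerate(timesteps):
        a = alpha_bar(t)
        eps = rng.normal(size=x0.shape)  # one noise draw shared by all classes
        x_t = np.sqrt(a) * x0 + np.sqrt(1.0 - a) * eps  # noise the input image
        for c in range(len(PROTOTYPES)):
            x0_hat = toy_denoiser(x_t, t, c)  # denoise under class c's "prompt"
            scores[c, j] = np.mean((x0 - x0_hat) ** 2)
    return scores


def classify(x0, timesteps, rng):
    """Predict the class whose conditioning gives the lowest total error."""
    return int(np.argmin(score_matrix(x0, timesteps, rng).sum(axis=1)))


timesteps = np.linspace(0.1, 0.9, 9)
print(classify(PROTOTYPES[2], timesteps, np.random.default_rng(1)))  # prints 2
```

Sharing one noise draw across all classes at each timestep is a design choice of this sketch: it makes the per-class errors directly comparable. The nested loop makes the cost visible, since it performs one model call per class per timestep.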

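Large models pre-trained on internet-scale data, such as CLIP for images and GPT-3 for text, can be adapted effectively to a wide range of downstream tasks, and more and more of them are being used for zero-shot classification. This paper applies diffusion models to zero-shot classification. Its results are close to CLIP's, but the method requires a great deal of compute.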

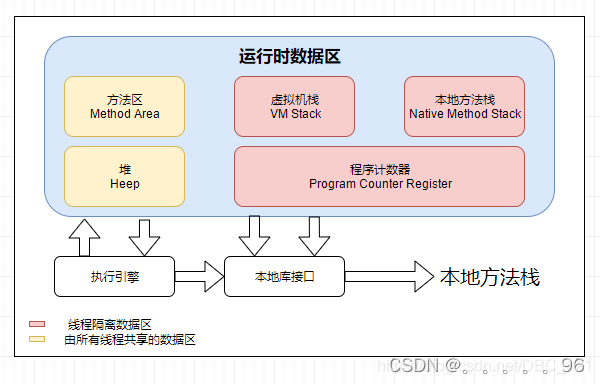

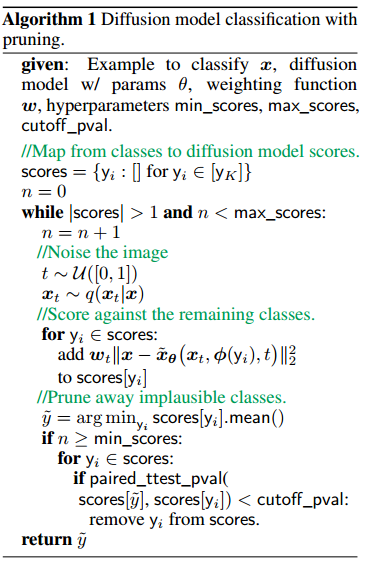

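First, a denoising score is computed for each label prompt at multiple timesteps, producing a score matrix. Then the image is classified by aggregating each class's scores across timesteps with a weighting function. The image is assigned to the class with the lowest total score.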
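The aggregation step can be sketched on its own. In this minimal sketch the score matrix is given directly (rows are classes, columns are timesteps), and the weight vectors are made-up examples; the paper's actual timestep weighting function is not reproduced here.

```python
import numpy as np


def aggregate_scores(score_matrix, weights):
    """Weighted sum over timesteps; score_matrix has shape (num_classes, num_timesteps)."""
    return np.asarray(score_matrix, dtype=float) @ np.asarray(weights, dtype=float)


def predict(score_matrix, weights):
    """Assign the image to the class with the lowest weighted total score."""
    return int(np.argmin(aggregate_scores(score_matrix, weights)))


# Hypothetical denoising errors: 3 classes x 3 timesteps.
scores = [[0.9, 0.5, 0.2],
          [1.0, 0.4, 0.1],
          [0.8, 0.6, 0.3]]

print(predict(scores, [1.0, 1.0, 1.0]))  # uniform weights -> class 1
print(predict(scores, [1.0, 0.0, 0.0]))  # only the first timestep -> class 2
```

The two calls show why the weighting matters: the same score matrix yields a different prediction depending on which timesteps are emphasized.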


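Concretely, the method repeatedly noises and denoises the input image while conditioning the model on a different text prompt for each possible class. It predicts the class whose text prompt yields the best denoising.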

Because the compute this method requires is so large, I feel the paper has little practical reference value.

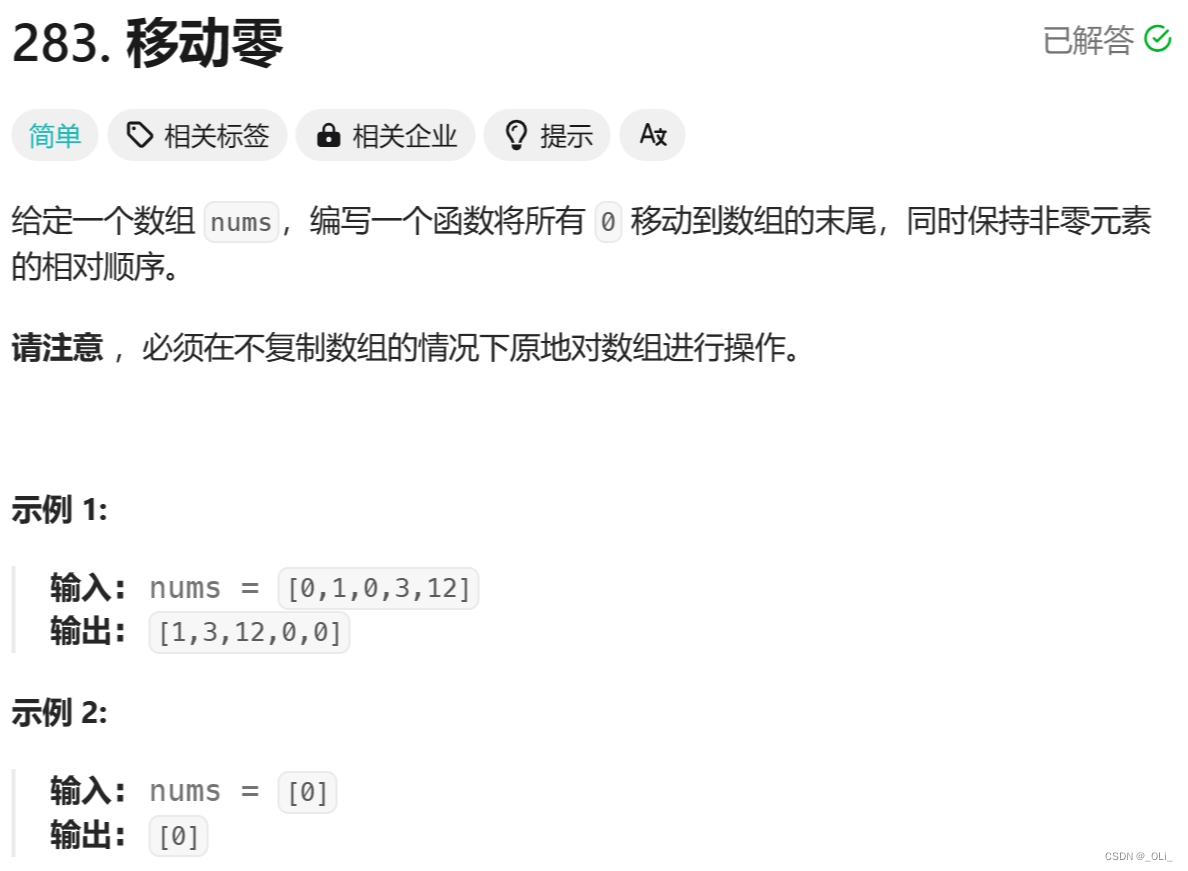

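To reduce the cost, the authors make some improvements: they discard clearly unlikely classes early, and for conditioning they use a single prompt per class rather than an ensemble of prompts. Although this improves efficiency by 1000x, the algorithm is still expensive.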
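The idea of discarding clearly unlikely classes early can be illustrated with a simple successive-elimination loop. The pruning rule here (drop a class once its running mean error exceeds the best class's mean by more than `margin`) and the `score_fn` callback are hypothetical; the paper's own pruning criterion may differ.

```python
import numpy as np


def prune_and_classify(score_fn, num_classes, num_rounds, margin):
    """Successive elimination over classes.

    score_fn(c, r) returns a denoising error for class c in round r
    (hypothetical callback standing in for one noise/denoise evaluation).
    Returns (predicted_class, number_of_score_fn_calls).
    """
    alive = list(range(num_classes))
    totals = np.zeros(num_classes)
    counts = np.zeros(num_classes)
    evaluations = 0
    for r in range(num_rounds):
        for c in alive:  # only surviving classes are evaluated
            totals[c] += score_fn(c, r)
            counts[c] += 1
            evaluations += 1
        means = totals[alive] / counts[alive]
        best = means.min()
        # Keep classes whose running mean is within `margin` of the best one.
        alive = [c for c, m in zip(alive, means) if m <= best + margin]
        if len(alive) == 1:
            break
    means = totals[alive] / counts[alive]
    return alive[int(np.argmin(means))], evaluations


def toy_scores(c, r):
    """Deterministic toy errors in which class 3 is the best match."""
    return abs(c - 3) + 0.1 * ((r + c) % 2)


print(prune_and_classify(toy_scores, 10, 20, 0.5))     # (3, 10)
print(prune_and_classify(toy_scores, 10, 20, np.inf))  # (3, 200): no pruning
```

With pruning, the toy example settles after one round of 10 evaluations instead of 200; with real, noisy errors a larger margin (or a statistical test) would be needed to avoid dropping the correct class too early.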

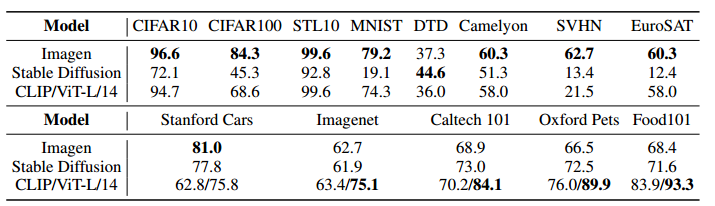

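The results are shown in the figure. The gap in compute is large, and SD simply cannot match Imagen. In addition, SD was trained only on high-resolution images, so on low-resolution images its classification performance falls apart: on MNIST, a low-resolution handwritten-digit dataset, SD reaches only 19.1, which is very weak.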

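SD does have strengths, though: in zero-shot classification its performance is broadly comparable to Imagen and CLIP, and it is highly robust to misleading text. The authors argue that diffusion models have additional abilities that are hard to obtain through contrastive pre-training.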

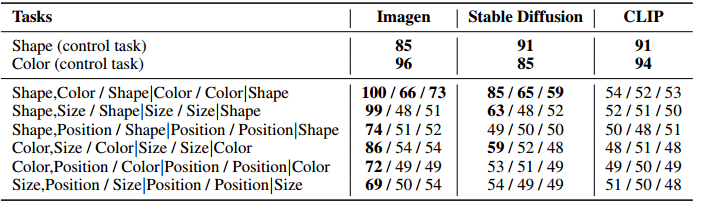

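In the attribute-binding experiments, the authors find that part of Imagen's advantage may come from its text encoder. One advantage of diffusion models is that they use cross-attention, which lets text and visual features interact. Of course, improving this further would again require more resources.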
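The cross-attention interaction can be sketched as a single attention head in which image positions (queries) attend to prompt tokens (keys and values). This is a generic textbook form with random weights and arbitrary dimensions, not the exact layer used in Imagen or Stable Diffusion.

```python
import numpy as np


def cross_attention(image_feats, text_feats, w_q, w_k, w_v):
    """Single-head cross-attention: image positions attend to text tokens.

    image_feats: (num_positions, d_img); text_feats: (num_tokens, d_txt).
    Returns the attended values and the attention weights.
    """
    q = image_feats @ w_q                         # (num_positions, d)
    k = text_feats @ w_k                          # (num_tokens, d)
    v = text_feats @ w_v                          # (num_tokens, d)
    logits = q @ k.T / np.sqrt(q.shape[-1])       # (num_positions, num_tokens)
    logits -= logits.max(axis=-1, keepdims=True)  # numerical stability
    attn = np.exp(logits)
    attn /= attn.sum(axis=-1, keepdims=True)      # softmax over text tokens
    return attn @ v, attn


rng = np.random.default_rng(0)
image_feats = rng.normal(size=(16, 32))  # 16 spatial positions, 32 channels
text_feats = rng.normal(size=(5, 24))    # 5 prompt tokens, 24 channels
w_q, w_k, w_v = (rng.normal(size=(d_in, 8)) for d_in in (32, 24, 24))
out, attn = cross_attention(image_feats, text_feats, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (16, 8) (16, 5)
```

Each image position gets its own distribution over prompt tokens, so different regions can draw on different words. A dual-encoder model like CLIP only compares a single image embedding with a single text embedding at the end.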

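Finally, note that this paper does not aim to deliver a usable generative classifier model. Its contribution is to point out that generative pre-training may be a useful alternative to contrastive pre-training for text-image self-supervised learning, and it adds to the study of diffusion models.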