1. Load-balancing test idea and workflow.

Theory first, so that the code logic further down makes sense.

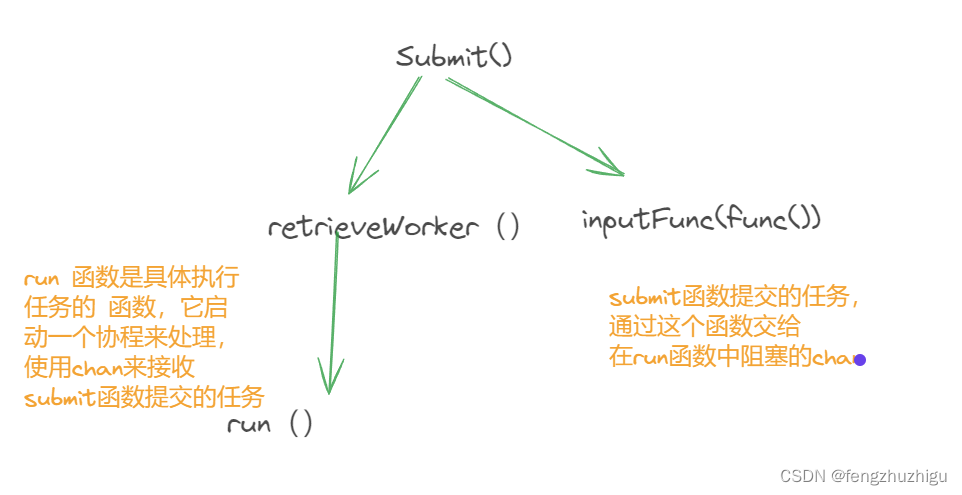

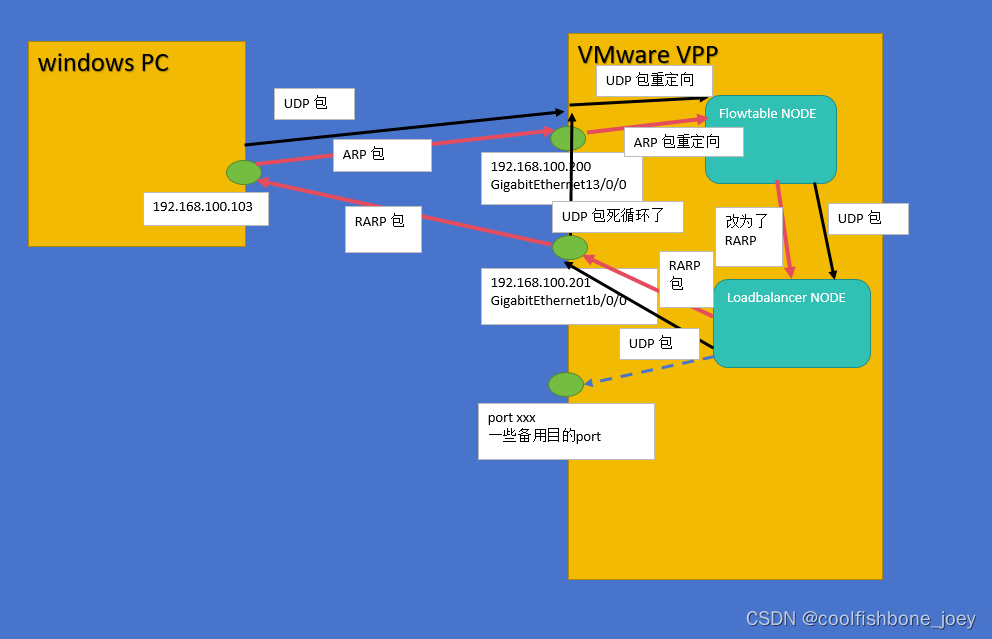

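After two days of debugging, the code finally works end to end. Time was short, so the diagram is only a rough sketch. The code merely gets the flow working and is not production quality, but I think it is enough to help understand load balancing.

Below is the flow for a Windows PC sending UDP packets to VPP for load balancing:

In short, VPP runs two nodes here: flowtable and load balancer.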

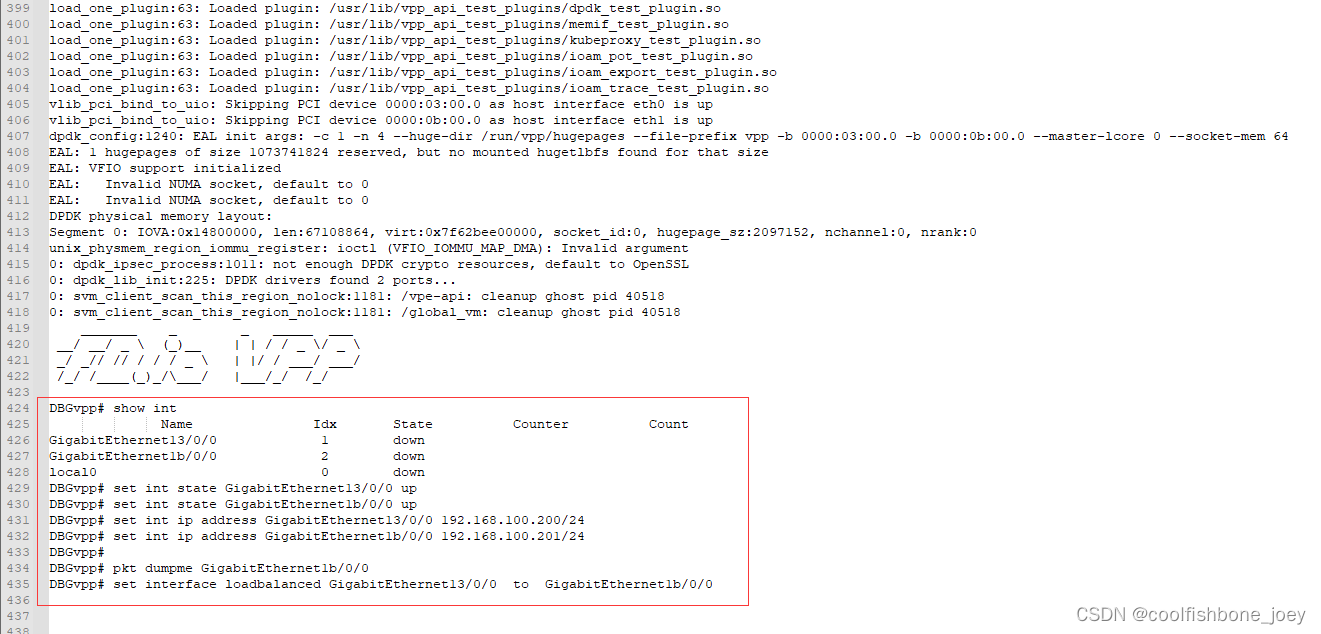

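Once the plugin is running (how to run it is covered later), enter the following command in VPP:

    set interface loadbalanced GigabitEthernet13/0/0 to GigabitEthernet1b/0/0

All packets arriving on GigabitEthernet13/0/0 are then redirected to the flowtable node. The flowtable node processes them (it can select a subset of packets; this example selects all of them) and forwards them to the load balancer node, which sends them out of GigabitEthernet1b/0/0 according to the balancing algorithm. Several target ports can be configured, but my VPP instance only has two ports, so I set a single target port.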
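The balancing step in this plugin is a plain round robin over the configured target interfaces (the `last_target_index` counter in the source further down). As a minimal standalone sketch of that selection logic, outside VPP and with hypothetical names (`rr_state_t`, `rr_pick_next`), assuming the target list is never empty:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for the plugin's per-node runtime state. */
typedef struct {
    const uint32_t *targets;   /* egress interface indices */
    size_t n_targets;          /* number of entries in targets */
    size_t last_target_index;  /* index used for the previous packet */
} rr_state_t;

/* Advance to the next target, wrap at the end of the list,
 * and return the interface index chosen for this packet. */
static uint32_t rr_pick_next(rr_state_t *st)
{
    assert(st->n_targets > 0);
    st->last_target_index++;
    if (st->last_target_index >= st->n_targets)
        st->last_target_index = 0;
    return st->targets[st->last_target_index];
}
```

With a single target, as in this test setup, every call returns the same interface. With targets {2, 5, 7} and the counter starting at 0, successive calls yield 5, 7, 2, 5: like the plugin code, the sketch increments before it selects, so the first packet goes to the second entry.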

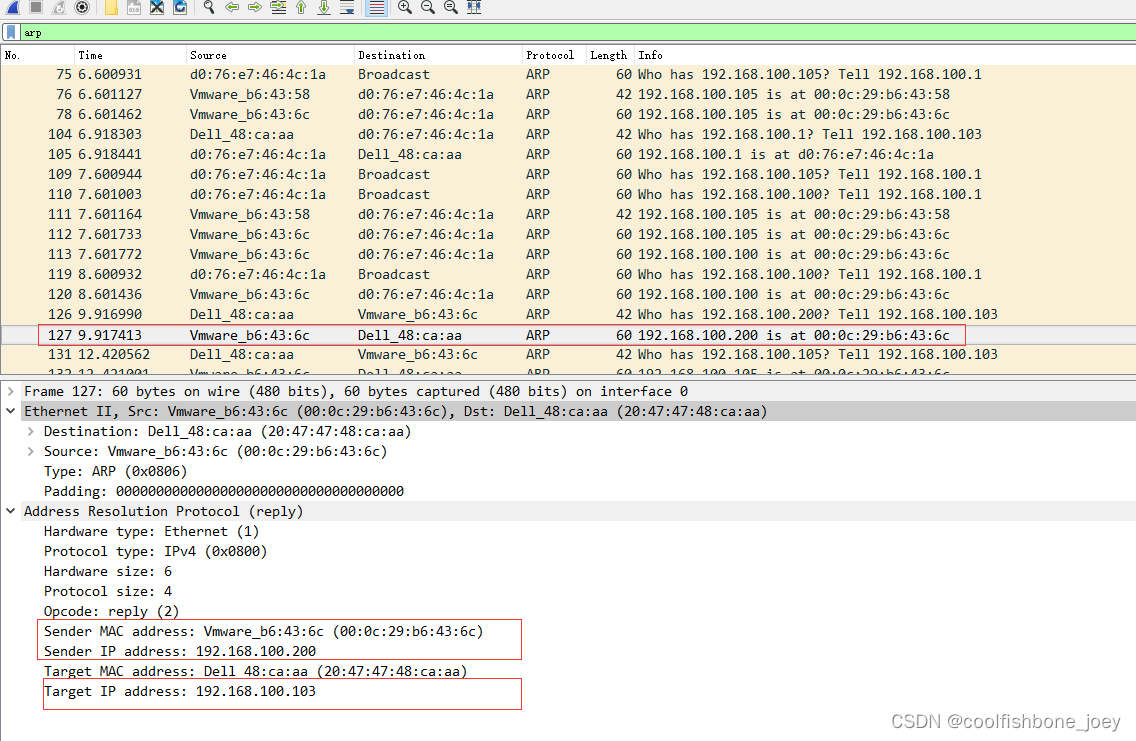

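The example in the diagram sends a UDP packet from the Windows PC at 192.168.100.103 to 192.168.100.200. Windows first broadcasts an ARP request to learn the MAC address of the destination IP. When this broadcast reaches the 192.168.100.200 interface, it is redirected straight to the flowtable node. There I rewrite the ARP request into an ARP reply (opcode 2) and pass it to the load balancer node, which sends it out of the 192.168.100.201 interface and back to the Windows PC. Windows now knows where 192.168.100.200 is.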
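The request-to-reply rewrite can be illustrated on a raw byte buffer. The sketch below is standalone C rather than VPP code; the function name `arp_request_to_reply` and the `SK_` constants are made up for illustration, and it assumes an untagged Ethernet frame carrying an IPv4-over-Ethernet ARP packet (offsets per the standard 14-byte Ethernet header and 28-byte ARP body).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SK_ETH_ARP_FRAME_LEN 42  /* 14-byte Ethernet header + 28-byte ARP body */

/* Rewrite an ARP request in place into an ARP reply that claims the
 * requested IP address for my_mac. Returns 1 if the frame was rewritten,
 * 0 if it is too short or is not an ARP request. */
static int arp_request_to_reply(uint8_t *frame, size_t len,
                                const uint8_t my_mac[6])
{
    uint8_t ip_tmp[4];

    assert(frame != NULL && my_mac != NULL);
    if (len < SK_ETH_ARP_FRAME_LEN)
        return 0;
    if (frame[12] != 0x08 || frame[13] != 0x06)  /* EtherType 0x0806 = ARP */
        return 0;
    if (frame[20] != 0x00 || frame[21] != 0x01)  /* opcode 1 = request */
        return 0;

    frame[21] = 0x02;                    /* opcode 2 = reply */
    memcpy(frame + 0, frame + 6, 6);     /* Ethernet dst = original sender */
    memcpy(frame + 6, my_mac, 6);        /* Ethernet src = answering MAC */
    memcpy(frame + 32, frame + 22, 6);   /* ARP target MAC = original sender MAC */
    memcpy(frame + 22, my_mac, 6);       /* ARP sender MAC = answering MAC */
    memcpy(ip_tmp, frame + 28, 4);       /* swap sender IP and target IP */
    memcpy(frame + 28, frame + 38, 4);
    memcpy(frame + 38, ip_tmp, 4);
    return 1;
}
```

Comparing individual bytes sidesteps the host byte-order question entirely, which is the detail the plugin source handles by comparing against pre-swapped constants.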

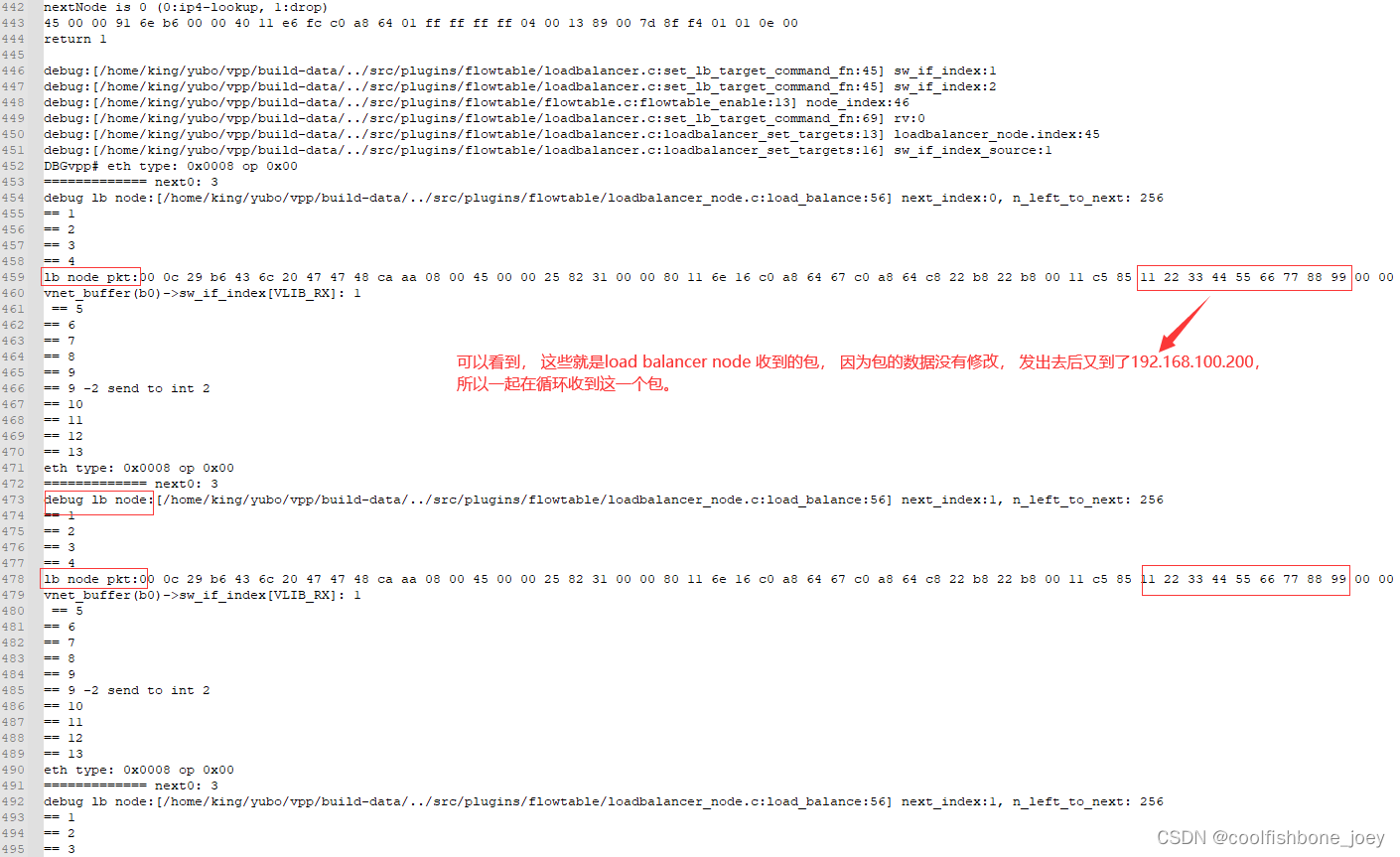

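In the second step, the Windows PC sends a UDP packet to 192.168.100.200. On arrival it is redirected straight to the flowtable node, which leaves it untouched and passes it to the load balancer node. The load balancer node sends it out of the 192.168.100.201 interface. Because the packet is still addressed to 192.168.100.200, the network delivers it to 192.168.100.200 again, and the result is an endless loop.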
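The loop comes from the frame leaving VPP with its destination MAC unchanged. The code in this article does nothing about that. Purely as an illustration, a layer-2 load balancer commonly rewrites the destination MAC to that of the chosen backend before transmitting. The sketch below is standalone C under that assumption; `frame_would_loop`, `retarget_frame` and the MAC values a caller passes in are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SK_ETH_HDR_LEN 14

/* Returns 1 if the frame is addressed to ingress_mac, i.e. the network
 * would hand it straight back to the interface it was redirected from. */
static int frame_would_loop(const uint8_t *frame, size_t len,
                            const uint8_t ingress_mac[6])
{
    return len >= SK_ETH_HDR_LEN && memcmp(frame, ingress_mac, 6) == 0;
}

/* Point the frame at a backend: destination becomes the backend's MAC,
 * source becomes the MAC of the interface the frame will leave from. */
static void retarget_frame(uint8_t *frame, size_t len,
                           const uint8_t backend_mac[6],
                           const uint8_t egress_mac[6])
{
    assert(len >= SK_ETH_HDR_LEN);
    memcpy(frame, backend_mac, 6);
    memcpy(frame + 6, egress_mac, 6);
}
```

In the plugin this kind of rewrite would sit just before the buffer is handed to interface-output; whether that is the right fix depends on what the backends behind the target ports expect.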

That does not matter here: the forwarding and balancing paths have both been exercised, which is all this test set out to do.

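2. How to implement this. Straight to the source code: the comments are detailed, and together with the explanation above it should be easy to follow.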

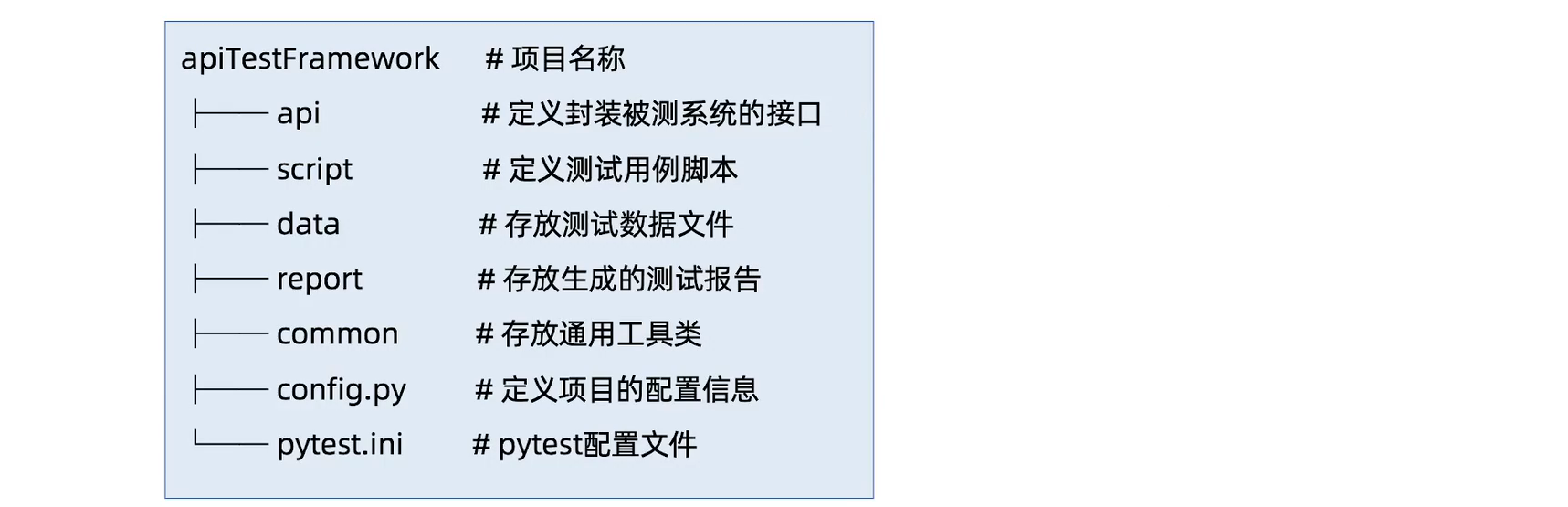
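The sources below declare their error counters with the X-macro idiom (`foreach_loadbalancer_error`, `foreach_flowtable_error`): one list macro is expanded twice, once into enum values and once into the matching strings, so the two can never drift apart. A minimal standalone sketch of the idiom, with hypothetical `demo_` names that do not appear in the plugin:

```c
#include <assert.h>
#include <string.h>

/* Single source of truth: each entry is _(SYMBOL, "description"). */
#define foreach_demo_error            \
    _(PROCESSED, "packets processed") \
    _(DROPPED, "packets dropped")

/* First expansion: one enum value per entry, plus a trailing count. */
typedef enum {
#define _(sym, str) DEMO_ERROR_##sym,
    foreach_demo_error
#undef _
    DEMO_N_ERROR
} demo_error_t;

/* Second expansion: the description strings, in the same order. */
static const char *demo_error_strings[] = {
#define _(sym, str) str,
    foreach_demo_error
#undef _
};

/* Look up the description for an error code. */
static const char *demo_error_string(demo_error_t e)
{
    assert((int) e >= 0 && (int) e < DEMO_N_ERROR);
    return demo_error_strings[e];
}
```

Adding a counter then means adding one line to the list macro; the enum, the count and the string table all follow.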

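Create the directory vpp/src/plugins/flowtable in the VPP tree and put the following files in it:

loadbalancer.h

    #define debug printf

    #define foreach_loadbalancer_error \
      _(PROCESSED, "Loadbalancer packets gone thru")

    typedef enum {
    #define _(sym,str) LOADBALANCER_ERROR_##sym,
      foreach_loadbalancer_error
    #undef _
      LOADBALANCER_N_ERROR
    } loadbalancer_error_t;

    typedef struct {
      vlib_main_t *vlib_main;
      vnet_main_t *vnet_main;
    } loadbalancer_main_t;

    // Per-node state, kept in the node's runtime data area.
    typedef struct {
      loadbalancer_main_t *lbm;
      u32 sw_if_index_source;   // source (ingress) interface
      u32 *sw_if_target;        // vector of target interfaces
      u32 last_target_index;    // round-robin position
    } loadbalancer_runtime_t;

    // Defined in the header, which relies on the linker merging common symbols.
    // Toolchains that default to -fno-common need the definition moved into
    // one .c file and declared extern here.
    loadbalancer_main_t loadbalancer_main;
    extern vlib_node_registration_t loadbalancer_node;

loadbalancer.c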

    #include "flowtable.h"
    #include "loadbalancer.h"
    #include <vnet/plugin/plugin.h>

    int loadbalancer_set_targets(loadbalancer_main_t *lb, u32 sw_if_index_source,
                                 u32 *sw_if_index_targets) {
      u32 *sw_if_index;
      debug("debug:[%s:%s:%d] loadbalancer_node.index:%d\n", __FILE__, __func__, __LINE__, loadbalancer_node.index);
      // The size of the runtime data was declared at node registration;
      // fetch it here and store whatever the node needs in it.
      loadbalancer_runtime_t *rt = vlib_node_get_runtime_data(lb->vlib_main, loadbalancer_node.index);
      debug("debug:[%s:%s:%d] sw_if_index_source:%d\n", __FILE__, __func__, __LINE__, sw_if_index_source);
      rt->sw_if_index_source = sw_if_index_source;      // store the source port
      vec_foreach(sw_if_index, sw_if_index_targets) {   // store the target port list
        vec_add1(rt->sw_if_target, *sw_if_index);
      }
      return 0;
    }

    // Parse the command line.
    static clib_error_t *set_lb_target_command_fn (vlib_main_t * vm,
                                                   unformat_input_t * input,
                                                   vlib_cli_command_t * cmd) {
      flowtable_main_t *fm = &flowtable_main;
      loadbalancer_main_t *lb = &loadbalancer_main;
      u32 sw_if_index_source = ~0;
      u32 sw_if_index = ~0;
      u32 *sw_if_index_targets = NULL;
      int source = 1;

      vec_alloc(sw_if_index_targets, 10);
      //vec_validate(sw_if_index_targets, 10);
      while (unformat_check_input(input) != UNFORMAT_END_OF_INPUT) {
        if (unformat(input, "to")) {
          source = 0;
        } else if (unformat(input, "%U", unformat_vnet_sw_interface, fm->vnet_main, &sw_if_index)) {
          debug("debug:[%s:%s:%d] sw_if_index:%d\n", __FILE__, __func__, __LINE__, sw_if_index);
          if (source) {
            sw_if_index_source = sw_if_index;             // interface whose traffic is redirected
          } else {
            vec_add1(sw_if_index_targets, sw_if_index);   // interfaces the packets are balanced across
          }
        } else
          break;
      }

      // Check the source interface itself, not the last interface parsed.
      if (sw_if_index_source == ~0) {
        return clib_error_return(0, "No Source Interface specified");
      }
      if (vec_len(sw_if_index_targets) <= 0) {
        return clib_error_return(0, "No Target Interface specified");
      }

      int rv = flowtable_enable(fm, sw_if_index_source, 1);   // redirect the source interface to the flowtable node
      // u32 *v;
      // vec_foreach(v, sw_if_index_targets) {
      //   rv = flowtable_enable(fm, *v, 1);
      // }
      debug("debug:[%s:%s:%d] rv:%d\n", __FILE__, __func__, __LINE__, rv);
      switch (rv) {
        case 0:
          break;
        case VNET_API_ERROR_INVALID_SW_IF_INDEX:
          return clib_error_return(0, "Invalid interface");
        case VNET_API_ERROR_UNIMPLEMENTED:
          return clib_error_return(0, "Device driver doesn't support redirection");
        default:
          return clib_error_return(0, "flowtable_enable return %d", rv);
      }

      // Store the source port and the target ports in the runtime data.
      rv = loadbalancer_set_targets(lb, sw_if_index_source, sw_if_index_targets);
      if (rv) {
        return clib_error_return(0, "set interface loadbalancer return %d", rv);
      }
      return 0;
    }

    // CLI command for the load balancer node and its parsing function.
    VLIB_CLI_COMMAND(set_interface_loadbalanced_command) = {
      .path = "set interface loadbalanced",
      .short_help = "set interface loadbalanced <interface> to <interfaces-list>",
      .function = set_lb_target_command_fn,
    };

loadbalancer_node.c

    #include <vppinfra/types.h>
    #include "flowtable.h"
    #include "loadbalancer.h"

    vlib_node_registration_t loadbalancer_node;

    typedef enum {
      LB_NEXT_DROP,
      LB_NEXT_INTERFACE_OUTPUT,
      LB_NEXT_N_NEXT
    } loadbalancer_next_t;

    typedef struct {
      u32 sw_if_in;
      u32 sw_if_out;
      u32 next_index;
    } lb_trace_t;

    static u8 * format_loadbalance(u8 *s, va_list *args) {
      CLIB_UNUSED (vlib_main_t * vm) = va_arg (*args, vlib_main_t *);
      CLIB_UNUSED (vlib_node_t * node) = va_arg (*args, vlib_node_t *);
      lb_trace_t *t = va_arg(*args, lb_trace_t*);
      s = format(s, "LoadBalance - sw_if_in %d, sw_if_out %d, next_index = %d",
                 t->sw_if_in, t->sw_if_out, t->next_index);
      return s;
    }

    static uword load_balance (vlib_main_t *vm, vlib_node_runtime_t *node, vlib_frame_t *frame) {
      u32 *to_next, *from = vlib_frame_vector_args(frame);
      u32 n_left_from = frame->n_vectors;
      u32 next_index = node->cached_next_index;
      u32 pkts_processed = 0;
      // Fetch the runtime data, mainly for the source port and the target port list.
      loadbalancer_runtime_t *rt = vlib_node_get_runtime_data(vm, loadbalancer_node.index);

      while (n_left_from > 0) {
        u32 pi0;
        u32 next0;
        u32 n_left_to_next;

        vlib_get_next_frame(vm, node, next_index, to_next, n_left_to_next);
        debug("debug lb node:[%s:%s:%d] next_index:%d, n_left_to_next: %d\n", __FILE__, __func__, __LINE__, next_index, n_left_to_next);

        while (n_left_from > 0 && n_left_to_next > 0) {
          // Used together with vnet_buffer(b0)->sw_if_index[VLIB_TX] = xx
          // to send the packet out of a specific port.
          next0 = LB_NEXT_INTERFACE_OUTPUT;
          pi0 = to_next[0] = from[0];
          debug("== 1\n");
          vlib_buffer_t *b0 = vlib_get_buffer(vm, pi0);
          debug("== 2\n");
          pkts_processed ++;
          debug("== 3\n");
          // Earlier attempts read the flow info out of the buffer:
          //   flow_info_t *fi = *(flow_info_t**)b0->opaque;
          //   flow_info_t *fi = (flow_info_t*)b0->opaque;
          // Nothing stores such a pointer in this example, and opaque[] is only
          // 40 bytes and already holds the vnet_buffer() metadata, so writing a
          // flow_info_t through it overruns the buffer header. Use a local
          // scratch struct instead.
          flow_info_t flow0 = { 0 };
          flow_info_t *fi = &flow0;
          debug("== 4\n");
          {
            // Dump at most the first 100 bytes of the packet.
            u8 *ip0 = vlib_buffer_get_current(b0);
            int i;
            printf("lb node pkt:");
            for (i = 0; i < 100 && i < b0->current_length; i++) {
              printf("%02x ", ip0[i]);
            }
            printf("\n");
          }
          // u32 sw_if_index0 = fi->lb.sw_if_index_current;   // this usage is wrong and crashed here
          u32 sw_if_index0;
          debug("vnet_buffer(b0)->sw_if_index[VLIB_RX]: %d \n ", vnet_buffer(b0)->sw_if_index[VLIB_RX]);   // port the packet came from
          sw_if_index0 = vnet_buffer(b0)->sw_if_index[VLIB_RX];
          debug("== 5\n");
          fi->offloaded = 1;
          fi->cached_next_node = FT_NEXT_INTERFACE_OUTPUT;
          fi->lb.sw_if_index_rev = rt->sw_if_index_source;
          rt->last_target_index ++;
          debug("== 6\n");
          if (rt->last_target_index >= vec_len(rt->sw_if_target)) {
            rt->last_target_index = 0;
          }
          debug("== 7\n");
          fi->lb.sw_if_index_dst = rt->sw_if_target[rt->last_target_index];
          debug("== 8\n");
          // The buffer already holds this value, so the reassignment is redundant.
          vnet_buffer(b0)->sw_if_index[VLIB_RX] = sw_if_index0;
          debug("== 9\n");
          // if (sw_if_index0 == rt->sw_if_index_source) {
          //   debug("== 9 -1 send back to int %d\n", fi->lb.sw_if_index_rev);
          //   vnet_buffer(b0)->sw_if_index[VLIB_TX] = fi->lb.sw_if_index_rev;
          // } else {
          // The ARP packet that flowtable forwarded from port 1 (192.168.100.200)
          // has already been rewritten into an ARP reply. Once it is sent out here,
          // the Windows PC knows where port 1 (192.168.100.200) is.
          // One problem remains: other packets, such as the UDP packet the Windows PC
          // sends to port 1, leave unmodified, so the network delivers them to port 1
          // again and they circle here forever, which breaks the virtual network.
          // A real deployment would handle such packets differently.
          vnet_buffer(b0)->sw_if_index[VLIB_TX] = fi->lb.sw_if_index_dst;
          debug("== 9 -2 send to int %d\n", fi->lb.sw_if_index_dst);
          // }
          debug("== 10\n");
          from ++;
          to_next ++;
          n_left_from --;
          n_left_to_next --;
          debug("== 11\n");
          if (b0->flags & VLIB_BUFFER_IS_TRACED) {
            lb_trace_t *t = vlib_add_trace(vm, node, b0, sizeof(*t));   // add trace information
            t->sw_if_in = sw_if_index0;
            t->next_index = next0;
            t->sw_if_out = vnet_buffer(b0)->sw_if_index[VLIB_TX];
          }
          debug("== 12\n");
          // Verify that the buffer was enqueued to the right next node.
          vlib_validate_buffer_enqueue_x1(vm, node, next_index, to_next, n_left_to_next, pi0, next0);
        }
        debug("== 13\n");
        vlib_put_next_frame(vm, node, next_index, n_left_to_next);
      }
      // Count the processed packets; not used for anything yet.
      vlib_node_increment_counter(vm, loadbalancer_node.index, LOADBALANCER_ERROR_PROCESSED, pkts_processed);
      return frame->n_vectors;
    }

    static char *loadbalancer_error_strings[] = {
    #define _(sym,string) string,
      foreach_loadbalancer_error
    #undef _
    };

    VLIB_REGISTER_NODE(loadbalancer_node) = {
      .function = load_balance,   // node processing function
      .name = "load-balance",
      .vector_size = sizeof(u32),
      .format_trace = format_loadbalance,
      .type = VLIB_NODE_TYPE_INTERNAL,
      .runtime_data_bytes = sizeof(loadbalancer_runtime_t),
      .n_errors = LOADBALANCER_N_ERROR,
      .error_strings = loadbalancer_error_strings,
      .n_next_nodes = LB_NEXT_N_NEXT,
      .next_nodes = {
        [LB_NEXT_DROP] = "error-drop",
        // Set vnet_buffer(b)->sw_if_index[VLIB_TX], hand the buffer to this node,
        // and the packet leaves through the corresponding port.
        [LB_NEXT_INTERFACE_OUTPUT] = "interface-output",
      }
    };

    static clib_error_t *loadbalancer_init(vlib_main_t *vm) {
      loadbalancer_main_t *lbm = &loadbalancer_main;
      // Initialize the runtime data.
      loadbalancer_runtime_t *rt = vlib_node_get_runtime_data(vm, loadbalancer_node.index);
      rt->lbm = lbm;
      lbm->vlib_main = vm;
      lbm->vnet_main = vnet_get_main();
      vec_alloc(rt->sw_if_target, 16);
      return 0;
    }

    VLIB_INIT_FUNCTION(loadbalancer_init);

flowtable.h

    #include <vppinfra/error.h>
    #include <vnet/vnet.h>
    #include <vnet/ip/ip.h>
    #include <vppinfra/pool.h>
    #include <vppinfra/bihash_8_8.h>

    #define foreach_flowtable_error \
      _(THRU, "Flowtable packets gone thru") \
      _(FLOW_CREATE, "Flowtable packets which created a new flow") \
      _(FLOW_HIT, "Flowtable packets with an existing flow")

    typedef enum {
    #define _(sym,str) FLOWTABLE_ERROR_##sym,
      foreach_flowtable_error
    #undef _
      FLOWTABLE_N_ERROR
    } flowtable_error_t;

    // Used when parsing ARP packets.
    typedef struct {
      u16 ar_hrd;      /* format of hardware address */
      u16 ar_pro;      /* format of protocol address */
      u8 ar_hln;       /* length of hardware address */
      u8 ar_pln;       /* length of protocol address */
      u16 ar_op;       /* ARP opcode (command) */
      u8 ar_sha[6];    /* sender hardware address */
      u8 ar_spa[4];    /* sender IP address */
      u8 ar_tha[6];    /* target hardware address */
      u8 ar_tpa[4];    /* target IP address */
    } arp_ether_ip4_t;

    // Indices into the node's set of next nodes.
    typedef enum {
      FT_NEXT_IP4,
      FT_NEXT_DROP,
      FT_NEXT_ETHERNET_INPUT,
      FT_NEXT_LOAD_BALANCER,
      FT_NEXT_INTERFACE_OUTPUT,
      FT_NEXT_N_NEXT
    } flowtable_next_t;

    // Per-flow state. It is larger than the 40-byte opaque[] area of
    // vlib_buffer_t, so it cannot safely be overlaid on that area.
    typedef struct {
      u64 hash;
      u32 cached_next_node;
      u16 offloaded;
      u16 sig_len;
      union {
        struct {
          ip6_address_t src, dst;
          u8 proto;
          u16 src_port, dst_port;
        } ip6;
        struct {
          ip4_address_t src, dst;
          u8 proto;
          u16 src_port, dst_port;
        } ip4;
        u8 data[32];
      } signature;
      u64 last_ts;
      struct {
        u32 straight;
        u32 reverse;
      } packet_stats;
      union {
        struct {
          u32 SYN:1;
          u32 SYN_ACK:1;
          u32 SYN_ACK_ACK:1;
          u32 FIN:1;
          u32 FIN_ACK:1;
          u32 FIN_ACK_ACK:1;
          u32 last_seq_number, last_ack_number;
        } tcp;
        struct {
          uword flow_info_ptr;
          u32 sw_if_index_dst;
          u32 sw_if_index_rev;
          u32 sw_if_index_current;
        } lb;
        u8 flow_data[CLIB_CACHE_LINE_BYTES];
      };
    } flow_info_t;

    typedef struct {
      flow_info_t *flows;
      BVT(clib_bihash) flows_ht;
      vlib_main_t *vlib_main;
      vnet_main_t *vnet_main;
      u32 ethernet_input_next_index;
    } flowtable_main_t;

    #define FM_NUM_BUCKETS 4
    #define FM_MEMORY_SIZE (256<<16)

    flowtable_main_t flowtable_main;
    extern vlib_node_registration_t flowtable_node;
    int flowtable_enable(flowtable_main_t *fm, u32 sw_if_index, int enable);

flowtable.c

    #include "flowtable.h"
    #include <vnet/plugin/plugin.h>

    int flowtable_enable(flowtable_main_t *fm, u32 sw_if_index, int enable) {
      u32 node_index = enable ? flowtable_node.index : ~0;
      printf("debug:[%s:%s:%d] node_index:%d\n", __FILE__, __func__, __LINE__, flowtable_node.index);
      // This is the first thing the example has to set up: triggered by the CLI
      // command, it redirects packets received on the interface to the flowtable node.
      // Note that the redirect API takes a hardware interface index; passing
      // sw_if_index only works while the two indices coincide.
      return vnet_hw_interface_rx_redirect_to_node(fm->vnet_main, sw_if_index, node_index);
    }

    static clib_error_t *flowtable_command_enable_fn (struct vlib_main_t * vm,
                                                      unformat_input_t * input,
                                                      struct vlib_cli_command_t * cmd) {
      flowtable_main_t *fm = &flowtable_main;
      u32 sw_if_index = ~0;
      int enable_disable = 1;
      // int featureFlag = 1;

      // Parse the command line; this is standard boilerplate.
      while (unformat_check_input(input) != UNFORMAT_END_OF_INPUT) {
        if (unformat(input, "disable")) {
          enable_disable = 0;
        } else if (unformat(input, "%U", unformat_vnet_sw_interface, fm->vnet_main, &sw_if_index))
          ;
        else
          break;
      }
      if (sw_if_index == ~0) {
        return clib_error_return(0, "No Interface specified");
      }

      // Alternative: enable the node through the feature arc.
      //vnet_feature_enable_disable("ip4-unicast", "flow_table", sw_if_index, enable_disable, 0, 0);

      // Set up the redirect according to the arguments. In practice this flowtable
      // command is not used in this example; the load balancer node's command is
      // used directly.
      int rv = flowtable_enable(fm, sw_if_index, enable_disable);
      if (rv) {
        if (rv == VNET_API_ERROR_INVALID_SW_IF_INDEX) {
          return clib_error_return(0, "Invalid interface");
        } else if (rv == VNET_API_ERROR_UNIMPLEMENTED) {
          return clib_error_return (0, "Device driver doesn't support redirection");
        } else {
          return clib_error_return (0, "flowtable_enable_disable returned %d", rv);
        }
      }
      return 0;
    }

    // Register the CLI command and its parsing function.
    VLIB_CLI_COMMAND(flowtable_interface_enable_disable_command) = {
      .path = "flowtable",
      .short_help = "flowtable <interface> [disable]",
      .function = flowtable_command_enable_fn,
    };

flowtable_node.c

#include "flowtable.h"

#include <vnet/plugin/plugin.h>#include <arpa/inet.h>

#include <vnet/ethernet/packet.h>vlib_node_registration_t flowtable_node;typedef struct {u64 hash;u32 sw_if_index;u32 next_index;u32 offloaded;

} flow_trace_t;static u8 * format_flowtable_getinfo (u8 * s, va_list * args) { // 这个例子中用于trace 用途。CLIB_UNUSED (vlib_main_t * vm) = va_arg (*args, vlib_main_t *);CLIB_UNUSED (vlib_node_t * node) = va_arg (*args, vlib_node_t *);flow_trace_t *t = va_arg(*args, flow_trace_t*);s = format(s, "FlowInfo - sw_if_index %d, hash = 0x%x, next_index = %d, offload = %d",t->sw_if_index,t->hash, t->next_index, t->offloaded);return s;}u64 hash4(ip4_address_t ip_src, ip4_address_t ip_dst, u8 protocol, u16 port_src, u16 port_dst) { // 这个例子中没有用到return ip_src.as_u32 ^ ip_dst.as_u32 ^ protocol ^ port_src ^ port_dst;}static uword flowtable_getinfo (struct vlib_main_t * vm,struct vlib_node_runtime_t * node, struct vlib_frame_t * frame) {u32 n_left_from, *from, *to_next;u32 next_index = node->cached_next_index;from = vlib_frame_vector_args(frame);n_left_from = frame->n_vectors;while(n_left_from > 0){u32 n_left_to_next;vlib_get_next_frame(vm, node, next_index, to_next, n_left_to_next);while(n_left_from > 0 && n_left_to_next > 0){vlib_buffer_t *b0;u32 bi0, next0 = 0;bi0 = to_next[0] = from[0];from += 1;to_next += 1;n_left_to_next -= 1;n_left_from -= 1;b0 = vlib_get_buffer(vm, bi0);ip4_header_t *ip0 = vlib_buffer_get_current(b0); // 这里收到的包, 可能是以太网包, 可能是IP 包, 不应该是直接转换面IP 包。

// ip4_address_t ip_src = ip0->src_address;

// ip4_address_t ip_dst = ip0->dst_address;

// u32 sw_if_index0 = vnet_buffer(b0)->sw_if_index[VLIB_RX];// struct in_addr addr;

// addr.s_addr = ip_src.as_u32;// { int i;

// for(i = 0; i <100; i++)

// {

// printf("%02x ", *((u8*)ip0 + i));

// }

// printf("\n");

//

//

// }{ethernet_header_t* ethP = vlib_buffer_get_current (b0);arp_ether_ip4_t *arpP =(arp_ether_ip4_t *) (ethP + 1);printf("eth type: 0x%04x op 0x%02x\n", ethP->type, arpP->ar_op); if(0x0608 == ethP->type && 0x0100 == arpP->ar_op) // 这里大小端转换偷懒了下, 增手动调换了下。{u8 tmpMac[6];u8 myMac[6];myMac[0] = 0x00 ;//Ethernet address 00:0c:29:b6:43:6c。 这里是本地自己查出来的本地interface 的MAC 地址。 hardcode 在这里, 发回给ARP 源头。后边interface才能接收到来自UDP 发包源包myMac[1] = 0x0c ;myMac[2] = 0x29 ;myMac[3] = 0xb6 ;myMac[4] = 0x43 ;myMac[5] = 0x6c ;int i;// opcode change to 2arpP->ar_op = 0x0200;// ehternet pkt, DMAC, SRC exchange.for(i = 0; i < 6; i++){tmpMac[i] = ethP->dst_address[i];ethP->dst_address[i] = ethP->src_address[i];ethP->src_address[i] = myMac[i]; tmpMac[i] = arpP->ar_sha[i];arpP->ar_tha[i] = tmpMac[i];arpP->ar_sha[i] = myMac[i];}// arp content , exchange sip, smac, dip, dmac.for(i = 0; i < 4; i++){tmpMac[i] = arpP->ar_spa[i];arpP->ar_spa[i] = arpP->ar_tpa[i];arpP->ar_tpa[i] = tmpMac[i];}printf("flowtable node ARP BACK: ");{ int i;for(i = 0; i <100; i++){printf("%02x ", *((u8*)ip0 + i));}printf("\n"); }}}//printf("sw_if_index0: %d, ip_src: %s ", sw_if_index0, inet_ntoa(addr));// addr.s_addr = ip_dst.as_u32;//printf(" ip_dst: %s \n", inet_ntoa(addr));// if (ip_src.as_u32 % 2) {

// next0 = FT_NEXT_LOAD_BALANCER;

// }next0 = FT_NEXT_LOAD_BALANCER; // send to load balanceer node, 这里是直接把包转给load balancer node 进行处理。 按理说这里是直接转的, 那其实我觉得flow table这个node 都没有必要了。// 那有一种可能, 就是这里可以选择一些我们要进行负载均衡的包, 然后不要的就drop掉。// 例如: 我们要让其他PC 能ping 通这个interface, 那这里的ARP 包和ICMP包就要进行特殊处理。 printf("============= next0: %d \n", next0);vlib_validate_buffer_enqueue_x1(vm, node, next_index,to_next, n_left_to_next, bi0, next0);}vlib_put_next_frame(vm, node, next_index, n_left_to_next);}return frame->n_vectors;}static char *flowtable_error_strings[] = {

#define _(sym,string) string,foreach_flowtable_error

#undef _

};VLIB_REGISTER_NODE(flowtable_node) = {.function = flowtable_getinfo,.name = "flow_table",.vector_size = sizeof(u32),.format_trace = format_flowtable_getinfo,.type = VLIB_NODE_TYPE_INTERNAL,.n_errors = FLOWTABLE_N_ERROR,.error_strings = flowtable_error_strings,.n_next_nodes = FT_NEXT_N_NEXT,.next_nodes = {[FT_NEXT_IP4] = "ip4-lookup",[FT_NEXT_DROP] = "error-drop",[FT_NEXT_ETHERNET_INPUT] = "ethernet-input",[FT_NEXT_LOAD_BALANCER] = "load-balance", // 这个例子中, 我们只用了这个NODE, 实际中, 可以加一些判断, 把其他 几个NODE也用起来。[FT_NEXT_INTERFACE_OUTPUT] = "interface-output",}

};VLIB_PLUGIN_REGISTER() = {.version = "1.0",.description = "sample of flowtable",};static clib_error_t *flowtable_init(vlib_main_t *vm) {clib_error_t *error = 0;flowtable_main_t *fm = &flowtable_main;fm->vnet_main = vnet_get_main();fm->vlib_main = vm;flow_info_t *flow;pool_get_aligned(fm->flows, flow, CLIB_CACHE_LINE_BYTES);pool_put(fm->flows, flow);BV(clib_bihash_init)(&fm->flows_ht, "flow hash table", FM_NUM_BUCKETS, FM_MEMORY_SIZE);return error;

}VLIB_INIT_FUNCTION(flowtable_init);//VNET_FEATURE_INIT(flow_table, static) =

//{

// .arc_name = "ip4-unicast",

// .node_name = "flow_table",

// .runs_before = VNET_FEATURES("ip4-lookup"),

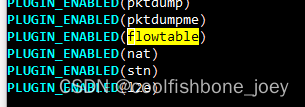

//};3. 配置编译文件 :

cd vpp/src

vi configure.ac

增加以下这行

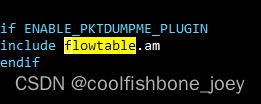

vpp/src/plugins 目录下增加 flowtable.am

    # Copyright (c) 2015 Cisco and/or its affiliates.
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at:
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    libflowtable_plugin_la_SOURCES = \
      flowtable/flowtable.c \
      flowtable/flowtable_node.c \
      flowtable/loadbalancer.c \
      flowtable/loadbalancer_node.c

    noinst_HEADERS += \
      flowtable/flowtable.h \
      flowtable/loadbalancer.h

    vppplugins_LTLIBRARIES += libflowtable_plugin.la

    # vi:syntax=automake

In Makefile.am under vpp/src/plugins, add the following lines:

4. Build and run.

    cd vpp
    make wipe
    make build
    make run

VPP is now running. Once it is up, type quit to exit first.

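5. Run the test

1). Install igb_uio for DPDK and bind the relevant virtual NICs (see the test demo in my other post on pkt dumpme).

2). cd vpp

Run make run, then enter the following commands:

At this point you can send UDP packets from the Windows PC.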


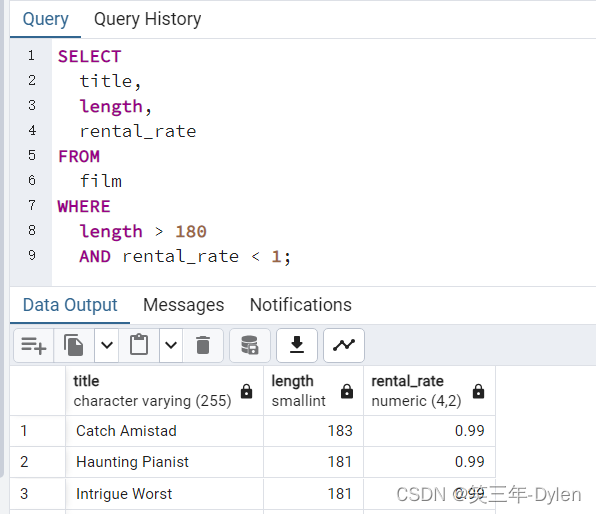

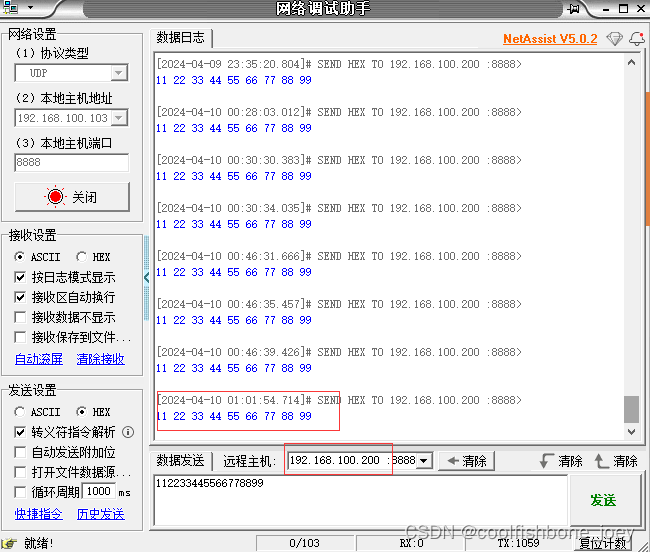

6. Send a UDP test packet from Windows with a serial-port debugging tool

The payload sent is 112233445566778899
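For readers without that Windows tool, the same test packet could be produced from any POSIX host on the subnet. The sketch below makes several assumptions: the payload is read as nine hex bytes, the destination port is whatever the caller picks (the article does not state one), and the helper names `hex_to_bytes` and `send_udp` are made up. On Windows the Winsock equivalents would be needed instead.

```c
#include <arpa/inet.h>
#include <assert.h>
#include <netinet/in.h>
#include <stdint.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Value of one hex digit, or -1 if c is not a hex digit. */
static int hex_nibble(char c)
{
    if (c >= '0' && c <= '9') return c - '0';
    if (c >= 'a' && c <= 'f') return c - 'a' + 10;
    if (c >= 'A' && c <= 'F') return c - 'A' + 10;
    return -1;
}

/* Convert an even-length hex string into bytes.
 * Returns the number of bytes written, or -1 on malformed input. */
static int hex_to_bytes(const char *hex, uint8_t *out, size_t out_cap)
{
    size_t n, i;

    assert(hex != NULL && out != NULL);
    n = strlen(hex);
    if (n % 2 != 0 || n / 2 > out_cap)
        return -1;
    for (i = 0; i < n / 2; i++) {
        int hi = hex_nibble(hex[2 * i]);
        int lo = hex_nibble(hex[2 * i + 1]);
        if (hi < 0 || lo < 0)
            return -1;
        out[i] = (uint8_t) ((hi << 4) | lo);
    }
    return (int) (n / 2);
}

/* Send one UDP datagram. Returns the number of bytes sent, or -1 on error. */
static long send_udp(const char *dst_ip, uint16_t dst_port,
                     const uint8_t *payload, size_t len)
{
    struct sockaddr_in dst;
    ssize_t sent;
    int fd;

    memset(&dst, 0, sizeof dst);
    dst.sin_family = AF_INET;
    dst.sin_port = htons(dst_port);
    if (inet_pton(AF_INET, dst_ip, &dst.sin_addr) != 1)
        return -1;   /* not a valid dotted-quad address */
    fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0)
        return -1;
    sent = sendto(fd, payload, len, 0, (struct sockaddr *) &dst, sizeof dst);
    close(fd);
    return (long) sent;
}
```

A caller would convert "112233445566778899" with `hex_to_bytes` and pass the resulting nine bytes to `send_udp` with 192.168.100.200 as the destination address and a port of its choosing.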

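First, check whether Windows received the ARP reply from VPP:

It did, so VPP should now be able to receive the UDP packet. Check the debug output on VPP:

There are many examples online, but none of them show the debugging process. I spent several days debugging this, and the code shared here can be used as is. I hope it helps like-minded readers.

If this helped you, please leave a like. Haha.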