Langchain-Chatchat 0.3.1: Docker deployment process

1. Project links

# Project repository

https://github.com/chatchat-space/Langchain-Chatchat

# Docker Hub image tags

https://hub.docker.com/r/chatimage/chatchat/tags

2. Docker deployment

- Refer to the official documentation

# Official documentation

https://github.com/chatchat-space/Langchain-Chatchat/blob/master/docs/install/README_docker.md

- Set up docker-compose

cd ~

wget https://github.com/docker/compose/releases/download/v2.27.3/docker-compose-linux-x86_64

mv docker-compose-linux-x86_64 /usr/bin/docker-compose

chmod +x /usr/bin/docker-compose

which docker-compose

# Verify that the installation succeeded

docker-compose -v

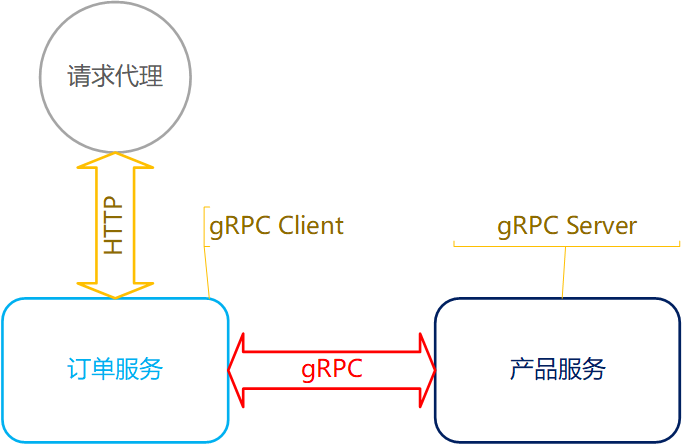
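The manual steps above can also be wrapped in a small script. This is a minimal sketch, not part of the official instructions: the function names `compose_url` and `install_compose` are hypothetical, and it assumes the release asset naming seen in the download URL above (`docker-compose-linux-<arch>`) and that `wget` and `install` are available on the host.

```shell
#!/bin/sh
# Sketch: download a pinned docker-compose release and install it as an executable.

# Print the release asset URL for a version tag and CPU architecture.
# Assumes the asset naming used above: docker-compose-linux-<arch>.
compose_url() {
  version="$1"
  arch="${2:-$(uname -m)}"
  printf 'https://github.com/docker/compose/releases/download/%s/docker-compose-linux-%s\n' \
    "$version" "$arch"
}

# Download the binary to a temp file, install it with mode 0755, then print its version.
install_compose() {
  version="${1:-v2.27.3}"
  target="${2:-/usr/bin/docker-compose}"
  tmp="$(mktemp)" || return 1
  wget -O "$tmp" "$(compose_url "$version")" || { rm -f "$tmp"; return 1; }
  install -m 0755 "$tmp" "$target" || { rm -f "$tmp"; return 1; }
  rm -f "$tmp"
  "$target" -v
}

# Example (needs root and network access):
#   install_compose v2.27.3
```

Installing with mode 0755 matters because a file fetched with wget is not executable by default.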

- Set up the NVIDIA Container Toolkit, following the official documentation
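Once the toolkit is installed, it may help to confirm that Docker can see the GPU before writing the compose file. The sketch below assumes the toolkit's `nvidia-ctk` helper is used to register the runtime and that `docker info` lists registered runtimes on a `Runtimes:` line; `has_nvidia_runtime` is a hypothetical helper name.

```shell
#!/bin/sh
# Succeeds if the `docker info` text on stdin lists an nvidia runtime.
has_nvidia_runtime() {
  grep -Eq '^[[:space:]]*Runtimes:.*nvidia'
}

# Typical sequence on the host (run manually; needs root and a GPU):
#   nvidia-ctk runtime configure --runtime=docker   # register the runtime with Docker
#   systemctl restart docker
#   docker info | has_nvidia_runtime && echo "nvidia runtime registered"
#   docker run --rm --gpus all ubuntu nvidia-smi     # should print the GPU table
```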

- Write the docker-compose configuration

version: '3.9'

services:
  xinference:
    # xprobe/xinference:latest
    image: xinference:1.0
    restart: always
    command: xinference-local -H 0.0.0.0
    ports: # Enable when not using host networking.
      - "9997:9997"
    # network_mode: "host"
    # Mount the local path (~/xinference) to the container path (/root/.xinference).
    # Details: https://inference.readthedocs.io/zh-cn/latest/getting_started/using_docker_image.html
    volumes:
      - /home/isi/LLM/models/xinference:/root/.xinference
      # The host path /home/isi/LLM/models/xinference must be set up locally; adjust it to your environment.
      # - ~/xinference/cache/huggingface:/root/.cache/huggingface
      # - ~/xinference/cache/modelscope:/root/.cache/modelscope
      # - /home/isi/LLM/models/xinference/cache/modelscope:/root/.cache/modelscope
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: all
              capabilities: [gpu]
    runtime: nvidia
    # Switch the model source to ModelScope; the default is HuggingFace.
    environment:
      - XINFERENCE_MODEL_SRC=modelscope
  chatchat:
    # For available versions see https://hub.docker.com/r/chatimage/chatchat/tags
    # docker pull chatimage/chatchat:0.3.1.1-2024-0714
    image: chatchat:1.0
    restart: always
    ports: # Enable when not using host networking.
      - "7861:7861"
      - "8501:8501"
    # network_mode: "host"
    # Mount the local path (~/chatchat/data) to the container's default data path (/usr/local/lib/python3.11/site-packages/chatchat/data).
    volumes:
      - /home/isi/chatchat/data:/root/chatchat_data/data
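Before starting the stack, the bind-mounted host directories can be created and the file validated. This is a minimal sketch, assuming the configuration above is saved as docker-compose.yaml in the current directory (the post does not name the file); `prepare_host_dirs` is a hypothetical helper.

```shell
#!/bin/sh
# Create each host directory passed as an argument; stop at the first failure.
prepare_host_dirs() {
  for dir in "$@"; do
    mkdir -p "$dir" || return 1
  done
}

# Example, using the host paths from the compose file above:
#   prepare_host_dirs /home/isi/LLM/models/xinference /home/isi/chatchat/data
#   docker-compose config -q && echo "compose file is valid"
```

Creating the directories up front keeps them owned by your own user rather than leaving their creation to the Docker daemon.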

- Start the containers

docker-compose up -d
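The containers can take a while to become reachable after `up -d`. The following is a small sketch of a polling helper: `retry` is a hypothetical name, `IP` stands for the server address as in the rest of this post, and the assumption that both web UIs answer a plain HTTP GET on `/` is mine.

```shell
#!/bin/sh
# Run a command up to $1 times, sleeping $2 seconds between failed attempts.
# Returns 0 as soon as the command succeeds, 1 if every attempt fails.
retry() {
  attempts="$1"
  delay="$2"
  shift 2
  n=1
  while [ "$n" -le "$attempts" ]; do
    "$@" && return 0
    [ "$n" -lt "$attempts" ] && sleep "$delay"
    n=$((n + 1))
  done
  return 1
}

# Example: check container state, then wait for both web UIs (replace IP).
#   docker-compose ps
#   retry 30 2 curl -fsS -o /dev/null http://IP:9997/
#   retry 30 2 curl -fsS -o /dev/null http://IP:8501/
#   docker-compose logs --tail 50 chatchat   # inspect startup errors if a check fails
```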

xinference

# 1. Open http://IP:9997/

# 2. Launch a language model: glm4

# 3. Launch an embedding model: bge-large-zh-v1.5

- Models loaded successfully
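Whether both models are up can also be checked from the command line. This is a sketch under assumptions: it presumes Xinference exposes an OpenAI-compatible REST API under `/v1` on the same port, and that the model UID matches the model name chosen in the web UI (it may differ on your setup). `embedding_payload` is a hypothetical helper.

```shell
#!/bin/sh
# Build the JSON body for an OpenAI-style embeddings request.
# Note: no JSON escaping is done, so keep the text free of quotes and backslashes.
embedding_payload() {
  printf '{"model": "%s", "input": "%s"}\n' "$1" "$2"
}

# Example (replace IP with the server address):
#   curl -s http://IP:9997/v1/models        # lists the running models
#   curl -s http://IP:9997/v1/embeddings \
#     -H 'Content-Type: application/json' \
#     -d "$(embedding_payload bge-large-zh-v1.5 hello)"
```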

chatchat

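docker exec -it <chatchat container ID> bash

vi /root/chatchat_data/model_settings.yaml

# 1. Change the embedding model to bge-large-zh-v1.5

# 2. Change the model service address to the server's own IP address. Do not use 127.0.0.1: with port mapping instead of host networking, 127.0.0.1 inside the chatchat container points at the container itself, so xinference cannot be reached through it.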
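The two edits can be scripted instead of being made by hand in vi. This is a sketch under assumptions: it presumes the settings file keeps the embedding model under a top-level `DEFAULT_EMBEDDING_MODEL` key and the platform address under `api_base_url`, which you should confirm against your own model_settings.yaml. `patch_model_settings` is a hypothetical helper and 192.168.1.10 is a placeholder IP.

```shell
#!/bin/sh
# Rewrite the embedding model and replace 127.0.0.1 in api_base_url lines.
# Usage: patch_model_settings FILE SERVER_IP [EMBEDDING_MODEL]
patch_model_settings() {
  file="$1"
  ip="$2"
  model="${3:-bge-large-zh-v1.5}"
  tmp="$(mktemp)" || return 1
  sed \
    -e "s|^\(DEFAULT_EMBEDDING_MODEL:\).*|\1 ${model}|" \
    -e "/api_base_url:/s|//127\.0\.0\.1|//${ip}|" \
    "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Overwrite in place so the file keeps its owner and permissions.
  cat "$tmp" > "$file" && rm -f "$tmp"
}

# Example, run inside the chatchat container:
#   patch_model_settings /root/chatchat_data/model_settings.yaml 192.168.1.10
```

From the host, `docker-compose exec chatchat bash` opens a shell in the service without looking up the container ID. A `docker-compose restart chatchat` afterwards may be needed for the change to take effect.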

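Knowledge base deployed successfully

Open http://IP:8501/ and walk through the knowledge base creation flow, followed by the knowledge base Q&A flow.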