CarLLaVA: Vision language models for camera-only closed-loop driving

Abstract

In this technical report, we present CarLLaVA, a Vision Language Model (VLM) for autonomous driving, developed for the CARLA Autonomous Driving Challenge 2.0. CarLLaVA uses the vision encoder of the LLaVA VLM and the LLaMA architecture as backbone, achieving state-of-the-art closed-loop driving performance with only camera input and without the need for complex or expensive labels. Additionally, we show preliminary results on predicting language commentary alongside the driving output. CarLLaVA uses a semi-disentangled output representation of both path predictions and waypoints, getting the advantages of the path for better lateral control and of the waypoints for better longitudinal control. We propose an efficient training recipe to train on large driving datasets without wasting compute on easy, trivial data. CarLLaVA ranks 1st in the sensor track of the CARLA Autonomous Driving Challenge 2.0, outperforming the previous state of the art by 458% and the best concurrent submission by 32.6%.

1. Introduction

The trend in autonomous driving is shifting towards end-to-end solutions, as shown by recent advances in industry [33] and the state-of-the-art performance on the CARLA Leaderboard 1.0 [6, 15, 27, 30, 39]. Most of the top-performing entries on the CARLA Leaderboard 1.0 [1] rely on expensive LiDAR sensors, with the exception of TCP [39], which employs a camera-only approach. Additionally, multi-task learning has emerged as a common strategy for enhancing performance [9]. However, this requires access to labels such as BEV semantics, depth, or semantic segmentation, which are expensive to obtain in the real world. This makes it hard to transfer insights from simulator-based research to real-world driving in a scalable and cost-efficient way. CarLLaVA, in contrast, is a camera-only method that relies only on commonly available, easy-to-obtain driving data such as camera images and the driving trajectory.

Additionally, most state-of-the-art CARLA methods use ResNet-style backbones pretrained on ImageNet [15, 27, 30, 39]. However, recent progress in pretraining techniques, such as CLIP [23], MAE [13], and DINO, has demonstrated the advantages of Vision Transformers (ViTs) [31] over traditional CNN encoders for feature learning. Moreover, state-of-the-art VLMs [8, 17, 20] that fine-tune the CLIP encoder exhibit nuanced image understanding, indicating that the encoder provides strong vision features. CarLLaVA makes use of this by using the vision encoder of LLaVA-NeXT [19–21], which is pretrained on internet-scale vision-language data. While the size of modern VLMs could be a concern for inference time when deployed on real vehicles, several recent works have shown that this is a solvable engineering problem [2, 3, 35].

In this technical report, we describe the details of our driving model CarLLaVA, which has the following properties and advantages:

- Camera only, without expensive labels: Our method only uses camera input, eliminating the need for additional expensive labels such as Bird’s Eye View (BEV) semantics, depth, or semantic segmentation. This label-free approach reduces the dependency on extensively labeled datasets, making deployment on real cars more feasible.

- Vision-language pretraining: Our approach leverages a vision encoder pretrained on internet-scale vision-language data. We demonstrate that this pretraining can be effectively transferred to the task of driving, improving driving performance compared to training from scratch on driving data.

- High-resolution input: We noticed that the default resolution of the CLIP vision encoder is not sufficient for high-quality driving. Similar to LLaVA [21], we split input images into patches so that the VLM can access small details in the driving images, such as distant traffic lights and pedestrians. In contrast to LLaVA, we do not use the low-resolution global patch, which reduces the number of tokens.

- Efficient training recipe: We propose an efficient training recipe that makes more use of interesting training samples, significantly reducing training time.

- Semi-disentangled output representation: We propose a semi-disentangled representation with both time-conditioned waypoints and space-conditioned path waypoints, leading to better control.

2. Related Work

Foundation models for driving. Recently, large language models (LLMs) have been integrated into driving systems to leverage their reasoning capabilities for addressing long-tail scenarios. Multi-modal LLM-based driving frameworks such as LLM-Driver [7], DriveGPT4 [40], and DriveLM [32] use foundation models with inputs from different modalities for driving. GPT-Driver [22] and LanguageMPC [25] fine-tune ChatGPT as a motion planner using text. Knowledge-driven approaches [12, 37] have also been adopted to make decisions based on common-sense knowledge and to evolve continuously. However, most of these works have been evaluated primarily through qualitative analysis or in open-loop settings. The works most similar to ours that leverage foundation models for closed-loop driving in CARLA are DriveMLM [36] and LMDrive [28], which use multi-modal LLMs. However, these approaches rely on image and LiDAR inputs with customized encoders, do not leverage vision-language pretraining, and focus on tasks like instruction following. In comparison, we focus on pure closed-loop driving performance, providing a baseline that solves basic driving behaviors to enable future research on VLMs for driving.

End-to-end closed-loop driving in CARLA. End-to-end training based on Imitation Learning (IL) is the dominant approach for state-of-the-art methods on the CARLA Leaderboard 1.0 [5, 15, 26, 38]. Most of these methods incorporate numerous auxiliary outputs and rely on expensive sensors like LiDAR. In contrast, we build a model that relies only on camera images and the driving trajectory. The dominant output representation is predicting waypoints with a GRU and using PID controllers for lateral and longitudinal control [5, 10, 15, 16, 24, 26, 29, 38, 41]. TCP [38] showed that waypoints perform poorly in turns, while directly predicting control performs worse at avoiding collisions, and proposed a situation-based fusion of the two representations. Interfuser [26] proposed predicting path waypoints together with a combination of forecasting and heuristics to obtain control. TF++ [15] uses path waypoints for lateral control and target speed classes for longitudinal control. In our work, we leverage the path representation for improved steering together with standard waypoints for longitudinal control, avoiding heuristics and the need for predefined classes. Additionally, we predict the waypoints directly from the output features of the transformer without using a GRU.

3. Method

In the following sections, we provide a comprehensive overview of our architecture and training methodology.

Task. The objective is to reach a specified target location on a 10×10 km² map while passing through predetermined intermediate target points. The map includes diverse environments such as highways, urban streets, residential areas, and rural settings, all of which must be navigated under various weather conditions, including clear daylight, sunset, rain, fog, and nighttime. Along the way, the agent must handle complex scenarios such as encountering pedestrians, exiting parking spots, executing unprotected turns, merging into ongoing traffic, passing construction sites, and avoiding vehicles with opening doors.

Architecture. An overview of our base architecture can be seen in Fig. 1.

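Figure 1. CarLLaVA base model architecture (C1T1). The image is split into two parts, each encoded independently; the features are then concatenated, downsampled, and projected into a pretrained large language model. The output uses a semi-disentangled representation with both time-conditioned waypoints and space-conditioned path waypoints for improved lateral control.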

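In this design, the image is first split and each part is encoded separately, which preserves fine detail at the encoder's native resolution. The encoded features are concatenated and downsampled to reduce the number of tokens, then fed to the pretrained large language model, so that the model can draw on existing visual and language knowledge for the driving task.

On the output side, the semi-disentangled representation means that the model predicts two sets of waypoints at the same time. Time-conditioned waypoints are the positions the vehicle should reach at fixed future time steps, so their spacing encodes the target speed. Space-conditioned path waypoints describe the geometry of the route ahead independently of speed. This separation gives finer control, especially where precise lateral control is needed.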

Input/Output Representation. The model inputs are the camera images, the next two target points, and the ego vehicle's speed. We tested several configurations: (1) the base model (C1T1) with a single front-view image, (2) the temporal model (C1T2), which adds image features from the previous timestep, and (3) the multi-view model (C2T1), which adds a low-resolution rear-view camera to the high-resolution front view. For the output, we use a semi-disentangled representation: time-conditioned waypoints tracked by a PID controller for longitudinal control, and space-conditioned path waypoints tracked by a PID controller for lateral control. Early experiments with entangled waypoints led to steering errors, especially during turns or when swerving around obstacles. Path waypoints provide denser supervision, because the path is also predicted when the vehicle is stationary, and this improves steering behaviour. For longitudinal control, we use standard time-conditioned waypoints, which avoid collisions better than directly predicting control [38]. We also experimented with target speed classification and GRUs, but these methods did not perform as well in our experiments. We do not have official Leaderboard metrics for them.
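
The split between the two outputs can be sketched as two independent PID loops. This is a minimal illustration, not the authors' controller. The following are all assumptions: the PID gains, the 0.25 s waypoint spacing, the 0.05 s control step, the look-ahead index, the braking rule, and the frame convention (x forward, y to the right, positive steer turns right, as in CARLA).

```python
import math


class PID:
    """Discrete PID controller: u = kp*e + ki*sum(e*dt) + kd*de/dt."""

    def __init__(self, kp, ki=0.0, kd=0.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, error, dt):
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def target_speed(waypoints, dt_wp):
    """Speed implied by the spacing of the first two time-conditioned waypoints."""
    (x0, y0), (x1, y1) = waypoints[0], waypoints[1]
    return math.hypot(x1 - x0, y1 - y0) / dt_wp


def heading_error(path_point):
    """Angle (rad) from the ego's forward axis to a path point (x forward, y right)."""
    x, y = path_point
    return math.atan2(y, x)


def clip(value, low, high):
    return max(low, min(high, value))


def control(waypoints, path, speed, speed_pid, steer_pid,
            dt_wp=0.25, dt_ctrl=0.05, lookahead=2):
    """Longitudinal control from the waypoints, lateral control from the path.
    Returns (throttle, brake, steer); every constant here is hypothetical."""
    accel = speed_pid.step(target_speed(waypoints, dt_wp) - speed, dt_ctrl)
    throttle = clip(accel, 0.0, 1.0)
    brake = 1.0 if accel < -0.5 else 0.0
    steer = clip(steer_pid.step(heading_error(path[lookahead]), dt_ctrl), -1.0, 1.0)
    return throttle, brake, steer


speed_pid, steer_pid = PID(5.0, 0.5, 1.0), PID(1.25, 0.75, 0.3)
waypoints = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.1), (4.0, 0.2)]   # time-conditioned
path = [(1.0, 0.0), (2.0, 0.05), (3.0, 0.2), (4.0, 0.4)]       # space-conditioned
throttle, brake, steer = control(waypoints, path, speed=3.0,
                                 speed_pid=speed_pid, steer_pid=steer_pid)
```

Keeping the two loops separate means a path that bends sharply does not distort the speed target, and vice versa. This is the property the semi-disentangled representation is meant to provide.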

HD-Vision Encoder. To encode the camera images, we use the LLaVA-NeXT vision encoder, specifically the CLIP-ViT-L-336px model, which is the highest-resolution trained CLIP model. High-resolution images are crucial for driving because important information, such as traffic lights at large intersections, may only be visible in a few pixels. To leverage CLIP pretraining at resolutions higher than 336x336, we use LLaVA's anyres technique [21]. We divide high-resolution images into multiple large patches of up to 336x336 pixels and encode each one independently. We then concatenate the resulting features in the spatial dimension to form a single large feature map for the original image. Using a VLM not only provides strong features, but also makes it easy to query the VLM to find out what information is captured in the image features. For example, we queried the VLM about the state of traffic lights at different input resolutions to determine the optimal resolution and therefore the number of patches.

Adapter. The LLaMA transformer's cost grows quadratically with the number of tokens. To reduce this computational overhead, we downsample the feature map to half the number of tokens. After flattening, we employ a linear projection layer to map the vision features to the embedding space of the language model. To encode the target points and ego speed, we use a multi-layer perceptron (MLP) following a normalization layer. Additionally, we add camera encodings for the different views (model C2T1) and temporal encodings when using images from multiple time steps (model C1T2 only).
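
A minimal sketch of the tiling and token reduction, using plain Python lists in place of tensors. Several assumptions are made. The front image is a hypothetical 672x336 (two 336x336 tiles in one row). The patch size is the 14 pixels of CLIP ViT-L/14, giving 24x24 = 576 tokens per tile. Only 8 placeholder channels are used instead of the encoder's real feature width. The report does not say how the token count is halved; averaging horizontally adjacent tokens is one illustrative choice.

```python
import math

TILE = 336   # input resolution of CLIP-ViT-L-336px
PATCH = 14   # ViT-L/14 patch size


def tile_grid(width, height, tile=TILE):
    """Columns and rows of tile x tile crops needed to cover the image."""
    return math.ceil(width / tile), math.ceil(height / tile)


def tokens_per_tile(tile=TILE, patch=PATCH):
    side = tile // patch   # 24 patches per side
    return side * side     # 576 tokens


def stitch_row(tile_maps):
    """Concatenate per-tile feature maps (H x W x C nested lists) along the width."""
    return [sum((m[r] for m in tile_maps), []) for r in range(len(tile_maps[0]))]


def halve_tokens(feature_map):
    """H x W x C -> H x (W // 2) x C by averaging horizontally adjacent tokens.
    Illustrative choice: the report only says the token count is halved."""
    return [
        [[(a + b) / 2 for a, b in zip(row[2 * j], row[2 * j + 1])]
         for j in range(len(row) // 2)]
        for row in feature_map
    ]


cols, rows = tile_grid(672, 336)   # hypothetical front image -> (2, 1)
side = TILE // PATCH
tiles = [[[[0.0] * 8 for _ in range(side)] for _ in range(side)] for _ in range(cols)]
stitched = stitch_row(tiles)       # 24 x 48 tokens for the whole image
reduced = halve_tokens(stitched)   # 24 x 24 tokens
flat = [token for row in reduced for token in row]   # 576 tokens for the linear projection
```

The sketch handles a single row of tiles. A taller image would also need its tile rows stacked along the height axis.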

LM-Decoder. We use the LLaMA architecture as a decoder. In addition to the sensor input tokens, we use learnable queries to generate the path and waypoints. An MLP on top of the output features generates waypoint differences. The cumulative sum of these differences yields the final waypoints, which are supervised during training with a mean squared error (MSE) loss. For our preliminary results on generating language explanations, we auto-regressively sample the language explanation after generating the path and waypoints. During training, we feed in the tokenized explanations and use a standard language modelling (LM) loss. We use the tokenizer and LM head of the pretrained TinyLLaMA model.
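
The cumulative-sum step of the waypoint head and its MSE supervision can be illustrated as follows. The delta values are made up, and averaging the loss over all coordinates is an assumption.

```python
from itertools import accumulate


def deltas_to_waypoints(deltas):
    """Cumulative sum of predicted (dx, dy) steps -> absolute waypoints in the ego frame."""
    return list(accumulate(deltas, lambda p, d: (p[0] + d[0], p[1] + d[1])))


def mse_loss(pred, target):
    """Mean squared error over all waypoint coordinates."""
    errors = [(p - t) ** 2 for pw, tw in zip(pred, target) for p, t in zip(pw, tw)]
    return sum(errors) / len(errors)


deltas = [(1.0, 0.0), (1.0, 0.0), (1.0, 0.5)]   # hypothetical MLP outputs
waypoints = deltas_to_waypoints(deltas)          # [(1.0, 0.0), (2.0, 0.0), (3.0, 0.5)]
loss = mse_loss(waypoints, [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)])
```

Predicting differences lets each query output a small local step. The summation then guarantees that the waypoints form a connected sequence starting from the ego position.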

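Overall, the LM-decoder turns the sensor tokens into path and waypoint predictions and can additionally generate language commentary on them. As noted under Language explanations, this commentary is not yet grounded in the model's actions, so it does not yet provide real interpretability.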

Efficient training of large models. Our models have between 350M and 1.3B parameters. To finetune these large models on our task, we rely on models pretrained on internet-scale data, a large dataset, and an efficient training recipe, as described below.

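- Pretrained models: starting from models pretrained on internet-scale data speeds up learning, because they have already learned many general-purpose features and patterns.

- Large dataset: a large and diverse dataset exposes the model to many driving scenarios and situations, which improves generalization.

- Efficient training recipe: the core of the recipe is the bucket-based sampling described under Buckets below. General techniques that can further reduce training cost include:

  - choosing a batch size that maximizes GPU utilization;

  - learning-rate scheduling (this report uses cosine annealing);

  - mixed-precision training to reduce memory use and speed up training;

  - distributing training across multiple GPUs (this report uses DeepSpeed);

  - parallel data loading and preprocessing to avoid I/O bottlenecks;

  - regularization such as weight decay (this report uses 0.1 with AdamW).

Combining these strategies keeps training cost under control while preserving model performance and generalization.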

Dataset. We use the privileged rule-based expert PDM-Lite [4] to collect a dataset. We divide the official CARLA routes of Town 12 and Town 13 into shorter segments centered around scenarios, to reduce trivial data (e.g., driving straight without any hazardous events) and to simplify data management. We use short routes with a single scenario, as proposed by [9, 18]. However, with the introduction of Leaderboard 2.0, the maximum distance between target points increased from 50 m to 200 m. Short routes often fall within this distance, which causes a distribution shift: the next target point is the end of the route (i.e., closer than 200 m) rather than the position that would be used on long routes. Consequently, we also employ a second set of routes featuring three scenarios per route. To ensure balance, we adjust the number of routes per scenario, apply random weather augmentation, and vary the distance parameter of the scenarios by ±10%. Overall, we collect 2.9 million samples at 5 fps.
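
Two small details of the data collection can be made concrete: the scenario distance jitter, and the amount of driving represented by 2.9 million samples at 5 fps. The uniform distribution for the ±10% jitter and the 40 m base distance are assumptions; the report does not state how the factor is drawn.

```python
import random


def jitter_distance(distance_m, rng, rel=0.10):
    """Scale a scenario's distance parameter by a random factor in [1 - rel, 1 + rel].
    The uniform distribution is an assumption."""
    return distance_m * rng.uniform(1.0 - rel, 1.0 + rel)


def driving_hours(num_samples, fps):
    """Hours of driving represented by `num_samples` frames recorded at `fps`."""
    return num_samples / fps / 3600.0


rng = random.Random(0)
distances = [jitter_distance(40.0, rng) for _ in range(5)]   # hypothetical 40 m base value
hours = driving_hours(2_900_000, 5)                           # roughly 161 hours
```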

For the language generation experiment, we use the logic of the rule-based expert to generate explanations. More precisely, we use the leading object obtained from the expert's Intelligent Driver Model (IDM) [34], as well as information about changes of the path to swerve around objects. In addition, we use heuristics based on the ego waypoints to distinguish between driving intentions such as starting from a stop or keeping the same speed. As this experiment is only intended to showcase the potential of using LLMs for driving, we do not report detailed statistics of the obtained labels and leave this for future work.
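
Below is a hypothetical sketch of how such rule-based commentary could be produced from the three signals named above: the IDM leading object, a path change, and the waypoint-implied speed. The function name, message wording, and thresholds are illustrative assumptions, not the expert's actual logic.

```python
def commentary(lead_object, swerving, speed, next_speed, stop_speed=0.1, tol=0.5):
    """Hypothetical rule-based commentary label.

    lead_object: category of the IDM leading object, or None.
    swerving:    True if the expert is changing its path to go around an obstacle.
    speed, next_speed: current speed and the speed implied by the ego waypoints (m/s).
    """
    if swerving:
        return "Changing the path to go around an obstacle."
    if lead_object is not None and next_speed < speed - tol:
        return f"Slowing down because of the {lead_object} ahead."
    if speed < stop_speed and next_speed > stop_speed:
        return "Starting to drive from a stop."
    if abs(next_speed - speed) <= tol:
        return "Keeping the current speed."
    return "Accelerating." if next_speed > speed else "Slowing down."
```

The rules are checked in priority order, so a swerve overrides a slowdown, and a slowdown caused by a leading object overrides the generic speed-based messages.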

Buckets. The majority of driving involves straight, uneventful segments. To maximize the collection of interesting scenarios, we focus data collection on a diverse range of challenging situations. However, some proportion of easy and uneventful data is inevitable. Training models on the entire dataset revealed that straight driving without hazards is learned effectively in the early epochs. Later epochs then waste compute, because the models continue to train on these uninteresting samples. To address this issue, we create data buckets containing only the interesting samples and sample from these buckets during training instead of from the entire dataset. We use:

- five buckets for different amounts of acceleration and deceleration, one of them specifically for starting from a stop, excluding samples with acceleration between -1 and 1;

- two buckets for steering, excluding samples for going straight;

- three buckets for vehicle hazards, with vehicles coming from different directions;

- one bucket each for stop sign, red light, and walker hazards;

- one bucket for swerving around obstacles;

- one bucket that samples from the whole dataset to keep a small portion of uneventful data such as driving straight.

This approach reduces the number of samples per epoch to 650,000.
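
The bucket sampler can be sketched as below. This is a simplified illustration with several assumptions. It collapses the five acceleration buckets into three and the three vehicle-hazard buckets into one. It treats the two steering buckets as left and right. It uses hypothetical steering and speed thresholds; only the ±1 acceleration band comes from the text. Finally, it assumes each draw picks a bucket uniformly at random before picking a sample within that bucket.

```python
import random

EVENT_TAGS = ("vehicle_hazard", "stop_sign", "red_light", "walker", "swerve")


def assign_buckets(samples, accel_band=1.0, steer_tol=0.1, stop_speed=0.1):
    """Map bucket name -> sample indices. Each sample is a dict with 'accel',
    'speed', 'steer' and optional boolean event tags. Only the +-1 acceleration
    band comes from the text; the other thresholds are hypothetical."""
    buckets = {name: [] for name in ("accel", "decel", "start_from_stop",
                                     "steer_left", "steer_right") + EVENT_TAGS}
    for i, s in enumerate(samples):
        if s["accel"] >= accel_band:
            buckets["start_from_stop" if s["speed"] < stop_speed else "accel"].append(i)
        elif s["accel"] <= -accel_band:
            buckets["decel"].append(i)
        if s["steer"] < -steer_tol:
            buckets["steer_left"].append(i)
        elif s["steer"] > steer_tol:
            buckets["steer_right"].append(i)
        for tag in EVENT_TAGS:
            if s.get(tag):
                buckets[tag].append(i)
    buckets["all"] = list(range(len(samples)))  # keeps a little uneventful data
    return {name: idx for name, idx in buckets.items() if idx}


def epoch_indices(buckets, samples_per_epoch, rng):
    """Draw a bucket uniformly, then a sample uniformly within it (assumed weighting)."""
    names = sorted(buckets)
    return [rng.choice(buckets[rng.choice(names)]) for _ in range(samples_per_epoch)]


samples = [
    {"accel": 0.0, "speed": 5.0, "steer": 0.0},                       # uneventful
    {"accel": 2.0, "speed": 0.0, "steer": 0.0},                       # starting from a stop
    {"accel": -3.0, "speed": 5.0, "steer": 0.3, "red_light": True},   # braking for a light
]
buckets = assign_buckets(samples)
epoch = epoch_indices(buckets, 100, random.Random(0))
```

The uneventful sample can only be drawn through the "all" bucket. Because of this, straight driving makes up a small share of each epoch instead of dominating it.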

4. Experiments

Benchmarks. We evaluate on two benchmarks.

Leaderboard 2.0. We use the official test server with secret routes under different weather conditions.

10xShort. For models for which we could not obtain Leaderboard results, we use a local evaluation on short routes with one scenario per route to evaluate each model's ability to solve each scenario type. We use at most 10 routes per scenario, randomly sampled from the whole set.
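
Building the 10xShort set amounts to grouping routes by scenario type and sampling at most 10 per group. Below is a minimal sketch. It assumes routes are given as hypothetical (route_id, scenario_type) pairs and uses a fixed random seed for reproducibility.

```python
import random
from collections import defaultdict


def ten_x_short(routes, max_per_scenario=10, seed=0):
    """Group (route_id, scenario_type) pairs by scenario type and randomly pick at
    most `max_per_scenario` routes from each group."""
    by_scenario = defaultdict(list)
    for route_id, scenario in routes:
        by_scenario[scenario].append(route_id)
    rng = random.Random(seed)
    return {scenario: rng.sample(ids, min(max_per_scenario, len(ids)))
            for scenario, ids in sorted(by_scenario.items())}


# Hypothetical pool: 25 routes of one scenario type and 4 of another.
pool = [(i, "ParkingExit") for i in range(25)] + [(100 + i, "ConstructionObstacle") for i in range(4)]
selection = ten_x_short(pool)
```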

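The two benchmarks are complementary. The Leaderboard measures overall performance on secret routes, while 10xShort makes it possible to evaluate each scenario type and debug models locally without relying on the official test server.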

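Metrics. We report the official CARLA metrics: Driving Score (DS), Route Completion (RC), and Infraction Score (IS). RC is the percentage of the route completed. IS starts at 1.0 and is multiplied by a penalty coefficient below 1 for every infraction, so fewer infractions give a higher IS. The DS of a route is the product of RC and IS, and the final DS is averaged over routes. RC grows linearly with the driven distance, while IS decays multiplicatively with each infraction, so the two effects do not balance out. Consider a model that commits infractions at a roughly constant rate per km, i.e., one that solves only a certain fraction of the scenarios. For such a model, driving further eventually lowers DS. Forcing the agent to stop a route early can therefore maximize DS.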
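
To see why stopping early can help, consider a toy model. It is an assumption made for illustration, not the official scoring code. RC grows linearly with the driven distance d. Infractions occur at a constant rate r per metre, and the infraction count is treated as continuous, each infraction multiplying IS by a penalty p < 1. Then DS(d) ∝ d · p^(r·d), which peaks at d* = -1 / (r ln p). The route length, infraction rate, and penalty below are hypothetical.

```python
import math


def expected_ds(distance_m, route_length_m, infractions_per_m, penalty):
    """Toy driving score (0-100): RC grows linearly with distance, while IS
    decays geometrically because each infraction multiplies it by `penalty`."""
    rc = min(distance_m / route_length_m, 1.0)
    infraction_score = penalty ** (infractions_per_m * distance_m)
    return 100.0 * rc * infraction_score


def best_stop_distance(route_length_m, infractions_per_m, penalty, step_m=10):
    """Grid search for the driven distance that maximises the toy DS."""
    candidates = range(0, int(route_length_m) + 1, step_m)
    return max(candidates,
               key=lambda d: expected_ds(d, route_length_m, infractions_per_m, penalty))


# Hypothetical numbers: 10 km route, one infraction per km, penalty 0.6 per infraction.
route, rate, p = 10_000.0, 1e-3, 0.6
d_grid = best_stop_distance(route, rate, p)
d_analytic = -1.0 / (rate * math.log(p))   # from d/dd [d * p**(r*d)] = 0
```

With these made-up numbers, the optimum lies around 1.96 km, and driving the full route scores far lower. The real trade-off depends on the secret routes and scenario density, so in practice the threshold has to be tuned empirically.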

Implementation Details. We use a learning rate of 3e-5 with a cosine annealing schedule. The batch size is 20 for our base model, 10 for C1T2, and 12 for C2T1. We use the AdamW optimizer with a weight decay of 0.1. Our vision encoder has 305 million parameters. We experiment with the LLaMA architecture in three configurations: LLaMA-50M and LLaMA-350M (both trained from scratch), and a 1B TinyLLaMA with LoRA finetuning [14] applied to all linear layers, which QLoRA [11] showed to be effective. We apply the same data augmentation techniques as TF++ [15] but with more aggressive shift and rotation augmentation (shift: 1.5 m, rotation: 20°). Additionally, we add histogram enhancement to improve the contrast and quality of input images for nighttime driving. We use DeepSpeed v2 to optimize training efficiency and memory usage. We train for 30 epochs; our base model, C1T1, trains in approximately 27 hours on 8xA100 40GB GPUs.

During inference, we apply early stopping to counter the property of DS described in the Metrics paragraph. We track the travelled distance and, once a specified distance has been reached, stop driving as soon as the steering angle is close to zero. This prevents stopping in the middle of an intersection, where other vehicles could crash into us.
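
The learning-rate schedule and the inference-time stopping rule can be sketched as follows. The schedule decays to a minimum learning rate of 0 (an assumption) over a hypothetical step count. The stopping rule uses hypothetical names and a steering tolerance of 0.05, assumed on CARLA's [-1, 1] steering scale. Its default threshold is 2100 m, one of the thresholds reported under Leaderboard variance.

```python
import math


def cosine_lr(step, total_steps, lr_max=3e-5, lr_min=0.0):
    """Cosine annealing from lr_max (3e-5, as in the text) down to lr_min (assumed 0)."""
    progress = min(step, total_steps) / total_steps
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


class DistanceEarlyStop:
    """Hypothetical helper: after `threshold_m` metres, stop at the first step
    whose steering is close to zero, and stay stopped from then on."""

    def __init__(self, threshold_m=2100.0, steer_tol=0.05):
        self.threshold_m = threshold_m
        self.steer_tol = steer_tol
        self.travelled_m = 0.0
        self.stopped = False

    def update(self, speed_mps, dt_s, steer):
        """Integrate travelled distance; return True if the agent should brake."""
        self.travelled_m += speed_mps * dt_s
        if (not self.stopped and self.travelled_m >= self.threshold_m
                and abs(steer) < self.steer_tol):
            self.stopped = True
        return self.stopped


stopper = DistanceEarlyStop(threshold_m=10.0)
decisions = [stopper.update(5.0, 1.0, s) for s in (0.0, 0.3, 0.01)]
# decisions == [False, False, True]: 5 m, then 10 m while turning, then 15 m going straight.
```

Waiting for near-zero steering after the threshold is reached is what keeps the agent from halting mid-turn, for example inside an intersection.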

Results.

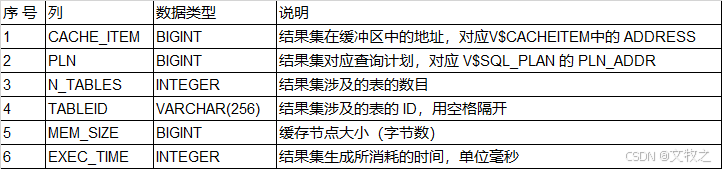

Leaderboard state of the art. We present the official Leaderboard results in Tab. 1. With our base model CarLLaVA C1T1, we outperform the state of the art (6.87 vs. 5.18 DS). However, we observed a high variance in the Leaderboard score. Detailed results on the mean and standard deviation can be found in the supplementary material. The official Leaderboard numbers are from our first submissions of the models; the repeated submissions used to calculate mean and standard deviation were made after the challenge deadline. It is also noteworthy that, to the best of our knowledge, ours is the only model on the Leaderboard that works with camera images alone and does not use additional auxiliary labels (note: we do not know the method behind the new entry greatone).

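Table 1. Leaderboard 2.0 results. CarLLaVA achieves state-of-the-art performance on the Leaderboard. Legend: L: LiDAR, C: camera, R: radar, M: map, priv: privileged, OD: object detection (3D position and pose), IS: instance segmentation, SS: semantic segmentation, D: depth, BS: BEV semantics.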

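- L (LiDAR): a sensor that uses laser pulses to measure distances.

- C (Camera): captures visual information.

- R (Radar): detects objects and measures their speed.

- M (Map): provides geographic and navigation information.

- priv (Privileged): additional information or simplified conditions that the model has access to.

- OD (Object Detection): identifying and localizing objects in 3D space.

- IS (Instance Segmentation): distinguishing individual object instances in the image (not to be confused with the Infraction Score).

- SS (Semantic Segmentation): assigning a class to every pixel in the image.

- D (Depth): the distance from the sensor to each point in the scene.

- BS (BEV Semantics): semantic information about the scene from a bird's-eye view.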

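CarLLaVA stands out in that it uses only camera images (C), without LiDAR, radar, map input, or other auxiliary labels. This shows that high performance is achievable with a limited sensor setup.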

Output representation. Tab. 2a compares the Leaderboard DS of the different output representations. The goal of the additional path prediction is improved lateral control. We therefore also report collisions with the static layout, as these are mainly caused by bad steering. With the semi-disentangled representation, we reduce layout collisions from 0.68 to 0.0, showcasing the strength of the additional path predictions.

Vision-Language and CLIP pretraining. We ablate the pretraining of the vision encoder by training the same model from scratch. Tab. 2b '-pretraining' shows that the pretraining stage is essential for good driving performance. More tuning of the training hyperparameters could further improve the from-scratch model, but it is unlikely to reach the performance of the pretrained model. Additionally, we compare against the widely used ResNet-34 pretrained on ImageNet. The decreased performance (2.71 vs. 6.87 DS) indicates the importance of the larger ViT and the internet-scale image-language pretraining.

Early stopping. We ablate the threshold for early stopping, since the optimal trade-off cannot be calculated exactly when the routes and the density of scenarios are secret. A rough function of the expected DS can still be calculated, and we used it as a guide. Tab. 2c shows the Leaderboard DS for different travelled-distance thresholds in meters. This hyperparameter has a large impact on the final score.

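In machine learning, "early stopping" usually means halting training once performance on a validation set stops improving, to prevent overfitting. In this report, the term means something different: ending the drive at inference time after a set distance, to counter the behaviour of DS described in the Metrics paragraph.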

Preliminary Results.

In addition to our ablations, we show preliminary results that showcase the potential of extending the model to multiple views and temporal input, scaling the base model, and adding language predictions.

Leaderboard variance. To estimate the evaluation variance, we submitted our base model CarLLaVA C1T1 to the Leaderboard three times with an early-stopping threshold of 2100 m and three times with a threshold of 2400 m. With the 2100 m threshold, we obtained scores of 5.5, 6.8, and 5.3, giving a mean DS of 5.87 with a standard deviation of 0.81. With the 2400 m threshold, we obtained 6.3, 6.3, and 4.8, giving a mean of 5.8 with a standard deviation of 0.87.
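
The reported means and standard deviations can be reproduced from the individual scores with Python's `statistics` module. They match the sample (n - 1) standard deviation returned by `statistics.stdev`.

```python
from statistics import mean, stdev

# Leaderboard DS of three submissions per early-stopping threshold (metres), from the text.
runs = {2100: [5.5, 6.8, 5.3], 2400: [6.3, 6.3, 4.8]}
summary = {t: (round(mean(s), 2), round(stdev(s), 2)) for t, s in runs.items()}
print(summary)  # {2100: (5.87, 0.81), 2400: (5.8, 0.87)}
```

Using the population standard deviation (`statistics.pstdev`) instead would give about 0.66 and 0.71. With only three runs per setting, both estimates are rough.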

Scale. In an additional experiment, we scale up the LLaMA architecture (Tab. 3a). Training a 350M-parameter model from scratch improves performance slightly. However, scaling to 1B parameters with LoRA finetuning resulted in worse performance, both when starting from a pretrained LLM (pt) and when training from scratch (s). We suspect that this may be due to the use of LoRA finetuning and insufficiently tuned hyperparameters, but further investigation is needed. This remains an interesting research question for future work.

Extending the input. To fully solve autonomous driving, temporal information and information from more than one camera are needed, especially for camera-only architectures. In Tab. 3b, we show results for a model with temporal information and for one with an added rear camera. Qualitative investigations showed improvements in the expected scenarios: fewer rear-end collisions for +temporal and improved lane-change behaviour for +back. Interestingly, the overall score does not increase.

Language explanations. With the additional language training, our model is able to produce commentary on the current driving behaviour (Fig. 2). This is not intended as an actual explanation, as the training lacks an important grounding step (i.e., the commentary is not always aligned with the actions the model takes). We leave this for future work.

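This illustrates the potential of driving models that describe their behaviour in natural language. It also shows the challenge of making such descriptions accurate and grounded in the model's actions.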

Failure cases. The most common failure cases of our model are rear-end collisions and maneuvers like merging, especially at high speeds. Rear-end collisions can be reduced with the temporal input of the C1T2 model.

5. Conclusion

In this report, we present CarLLaVA, the winning entry in the CARLA Autonomous Driving Challenge 2.0 2024, which leverages vision-language pretraining and uses only camera images as input. By utilizing a semi-disentangled output representation and an efficient training approach, CarLLaVA demonstrates superior performance in both lateral and longitudinal control. Its ability to operate without expensive labels or sensors makes it a scalable and cost-effective solution. The results indicate a substantial improvement over previous methods, showcasing the potential of vision-language models in real-world autonomous driving applications.
