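1. Prepare the Environment

1.1 Provision the servers

Build a Kubernetes v1.28 cluster (v1.28.2 is used throughout) on CentOS 7.9. Prepare four servers: two masters, with the hostnames k8s-master and k8s-master-2, and two worker nodes, with the hostnames k8s-node-1 and k8s-node-2.

1.2 Initialization

Initialize the environment on the master server. This covers setting a static IP, changing the hostname, disabling firewalld, disabling SELinux, and so on.

Run the following initialization script. It takes three arguments: the hostname ($1), the static IP address ($2) and the gateway ($3).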

```bash
#!/bin/bash
# Exit as soon as any command fails, so later steps are not run
set -e

# Step 1: download Aliyun's CentOS-7 repo file
cd /etc/yum.repos.d
curl -O http://mirrors.aliyun.com/repo/Centos-7.repo
# Move the official CentOS repo files into a backup directory, because the official mirrors are no longer served
mkdir -p backup
mv CentOS-* backup
yum makecache

# Step 2: set the hostname
hostnamectl set-hostname $1

# Step 3: configure a static IP address
cat >/etc/sysconfig/network-scripts/ifcfg-ens33 <<EOF
BOOTPROTO="none"
NAME="ens33"
DEVICE="ens33"
ONBOOT="yes"
IPADDR=$2
PREFIX=24
GATEWAY=$3
DNS1=114.114.114.114
DNS2=222.246.129.80
EOF
# Restart the network service
service network restart

# Step 4: disable SELinux and the firewalld firewall
systemctl stop firewalld
systemctl disable firewalld
# Change "enforcing" to "disabled" in /etc/selinux/config (takes effect after the reboot in step 10)
sed -i '/SELINUX=/ s/enforcing/disabled/' /etc/selinux/config

# Step 5: disable the swap partition
# Turn swap off immediately
swapoff -a
# Disable it permanently by commenting out the swap entry in /etc/fstab
sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# Step 6: adjust kernel parameters
# Enable bridge filtering and address forwarding: forward IPv4 and let iptables see bridged traffic
cat <<EOF | tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF
# Load the overlay and bridge-filter modules now
modprobe overlay
modprobe br_netfilter
# Write the sysctl settings to /etc/sysctl.d/k8s.conf
cat <<EOF | tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
# Apply the sysctl parameters without rebooting ("sysctl -p" would only read /etc/sysctl.conf)
sysctl --system

# Step 7: install the required packages and add the Docker CE repo
yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Step 8: configure IPVS
# Install ipset and ipvsadm
yum install ipset ipvsadm -y
# Write the modules to load into a script file
cat <<EOF > /etc/sysconfig/modules/ipvs.modules
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
# Make the script executable
chmod +x /etc/sysconfig/modules/ipvs.modules
# Run the script
/bin/bash /etc/sysconfig/modules/ipvs.modules

# Step 9: configure time synchronization
systemctl start chronyd && systemctl enable chronyd

# Step 10: reboot
reboot
```
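The script depends on three positional arguments, and set -e does not catch a missing one. An empty $3, for instance, is written silently into GATEWAY=. Below is a minimal pre-flight sketch. The names is_ipv4, is_hostname and check_init_args are hypothetical helpers, not part of the original script. The hostname check assumes a single lowercase DNS label such as k8s-master.

```bash
# Hypothetical pre-flight helpers for the init script ($1 hostname, $2 IP, $3 gateway).

# Succeed if $1 is a dotted-quad IPv4 address with every octet in 0-255.
is_ipv4() {
  local ip=$1 octet
  [[ $ip =~ ^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$ ]] || return 1
  for octet in "${BASH_REMATCH[@]:1}"; do
    # 10# forces base 10 so an octet such as "08" is not read as octal
    (( 10#$octet <= 255 )) || return 1
  done
}

# Succeed if $1 is a single lowercase DNS label (assumption), e.g. k8s-master-2.
is_hostname() {
  [[ $1 =~ ^[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?$ ]]
}

# Validate all three arguments and print a usage message on failure.
check_init_args() {
  if [ $# -ne 3 ]; then
    echo "usage: $0 <hostname> <ip> <gateway>" >&2
    return 1
  fi
  is_hostname "$1" || { echo "invalid hostname: $1" >&2; return 1; }
  is_ipv4 "$2"     || { echo "invalid IP address: $2" >&2; return 1; }
  is_ipv4 "$3"     || { echo "invalid gateway: $3" >&2; return 1; }
}
```

One way to use it is to paste the functions below set -e and add check_init_args "$@" || exit 1 before step 1. A call with a missing gateway then stops with a usage message instead of writing an incomplete ifcfg-ens33.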

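2. Configure the Docker Environment

2.1 Install Docker

```bash
yum install -y docker-ce docker-ce-cli
```

2.2 Configure a Docker registry mirror

```bash
mkdir -p /etc/docker
cd /etc/docker
# Write daemon.json: the registry mirror, plus the systemd cgroup driver (kubeadm also configures the kubelet with systemd)
cat > daemon.json <<EOF
{
  "registry-mirrors": ["https://hub.docker-alhk.dkdun.com/"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
# Reload the Docker configuration and restart the Docker service
systemctl daemon-reload
systemctl restart docker
```

2.3 Start Docker at boot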

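```bash
# Start Docker and enable it at boot
systemctl enable --now docker
# Verify
systemctl status docker
```

Configure cri-dockerd

Kubernetes 1.24 removed dockershim from the kubelet. As a result, Docker Engine can no longer serve directly as the container runtime, and the default is now a CRI runtime such as containerd. To keep using Docker, install cri-dockerd as the adapter between the kubelet and Docker.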

```bash
# Download
wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.8/cri-dockerd-0.3.8-3.el7.x86_64.rpm
# Install
rpm -ivh cri-dockerd-0.3.8-3.el7.x86_64.rpm
# Reload the systemd daemon
systemctl daemon-reload
# Edit the unit file
vim /usr/lib/systemd/system/cri-docker.service
# Change the ExecStart line (line 10) to the following; pause:3.9 is the sandbox image used by kubeadm v1.28:
# ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9 --container-runtime-endpoint fd://
```
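Editing the unit file in vim works, but the same change can be scripted when several machines need it. The helper below is a hypothetical sketch, and the name set_cri_execstart is illustrative. It assumes the packaged unit contains exactly one line starting with ExecStart= and replaces that whole line with the one shown above. A .bak copy of the original file is kept.

```bash
# Hypothetical helper: rewrite the ExecStart line of cri-docker.service in place.
# $1 = unit file (default: the packaged path), $2 = pause image to use.
set_cri_execstart() {
  local unit=${1:-/usr/lib/systemd/system/cri-docker.service}
  local pause_image=${2:-registry.aliyuncs.com/google_containers/pause:3.9}
  local new="ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=${pause_image} --container-runtime-endpoint fd://"
  # Keep a backup of the original unit file
  cp "$unit" "$unit.bak"
  # Replace the whole ExecStart line; "|" as the delimiter avoids clashing with "/" in paths
  sed -i "s|^ExecStart=.*|${new}|" "$unit"
}
```

Running set_cri_execstart as root with no arguments edits /usr/lib/systemd/system/cri-docker.service. Then continue with systemctl daemon-reload as in the next step.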

Enable the cri-docker service at boot

```bash
# Reload the systemd daemon
systemctl daemon-reload
# Start cri-dockerd
systemctl start cri-docker.socket cri-docker
# Enable cri-dockerd at boot
systemctl enable cri-docker.socket cri-docker
# Check the status of the Docker components
systemctl status docker cri-docker.socket cri-docker
```

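At this point, clone the other three servers from this one, then give each clone its own IP address and hostname.

On every server, add the following entries to /etc/hosts (for example with vim /etc/hosts):

```text
192.168.178.150 k8s-master
192.168.178.151 k8s-node-1
192.168.178.152 k8s-node-2
192.168.178.153 k8s-master-2
```

Then test that each machine can ping the others by hostname.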
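The same four lines must go onto all four servers. A small idempotent helper keeps them from being duplicated if it runs more than once. This is a sketch: the function name add_cluster_hosts is hypothetical, and the IP/hostname pairs are the ones listed above.

```bash
# Hypothetical helper: append the cluster's host entries to a hosts file
# only if they are not already present, so it is safe to run repeatedly.
add_cluster_hosts() {
  local hosts_file=${1:-/etc/hosts}
  local entry
  for entry in \
    "192.168.178.150 k8s-master" \
    "192.168.178.151 k8s-node-1" \
    "192.168.178.152 k8s-node-2" \
    "192.168.178.153 k8s-master-2"; do
    # -x matches the whole line, -F treats the entry as a fixed string
    grep -qxF "$entry" "$hosts_file" || echo "$entry" >> "$hosts_file"
  done
}
```

Run add_cluster_hosts as root on each server. Then check name resolution with, for example, ping -c 1 k8s-node-1.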

3. Configure the Kubernetes Cluster

3.1 Configure the Kubernetes package repo (run on every node)

```bash
cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
yum makecache
```

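Install kubectl, kubeadm and kubelet

- kubeadm bootstraps the cluster. It installs the control-plane components on the master (apiserver, etcd, scheduler, etc.) and joins nodes to the cluster.

- kubelet is the node agent that manages containers through the container runtime (here, Docker via cri-dockerd).

- kubectl is the command-line client used to run Kubernetes commands.

```bash
# Pin the packages to the version passed to kubeadm init below
yum install -y kubeadm-1.28.2 kubelet-1.28.2 kubectl-1.28.2 --disableexcludes=kubernetes
```

Enable kubelet at boot

```bash
systemctl enable --now kubelet
```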

Initialize the cluster (run on the master node only)

```bash
kubeadm init --kubernetes-version=v1.28.2 \
  --pod-network-cidr=10.224.0.0/16 \
  --apiserver-advertise-address="<IP address of your master node>" \
  --image-repository=registry.aliyuncs.com/google_containers \
  --cri-socket=unix:///var/run/cri-dockerd.sock

# Let the current user run kubectl against the new cluster
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```
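The --pod-network-cidr must not overlap two other ranges: the Service subnet (kubeadm's default is 10.96.0.0/12) and the hosts' own network (assumed here to be 192.168.178.0/24). The pre-flight sketch below compares two CIDR blocks using bash integer arithmetic. ip_to_int and cidrs_overlap are hypothetical helper names.

```bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if the two CIDR blocks (e.g. 10.224.0.0/16 and 10.96.0.0/12) share any address.
cidrs_overlap() {
  local net1=${1%/*} len1=${1#*/} net2=${2%/*} len2=${2#*/}
  # Two blocks overlap exactly when they agree on the shorter prefix
  local len=$(( len1 < len2 ? len1 : len2 ))
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$net1") & mask) == ($(ip_to_int "$net2") & mask) ))
}
```

With the values used here, cidrs_overlap 10.224.0.0/16 10.96.0.0/12 returns non-zero, meaning no overlap. By contrast, a pool such as 192.168.0.0/16 would overlap the 192.168.178.0/24 host network.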

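Print the command for joining nodes:

```bash
kubeadm token create --print-join-command
```

```text
kubeadm join 192.168.178.150:6443 --token 6c6k69.vhznczps1pr494sh \
  --discovery-token-ca-cert-hash sha256:d095843eb9102f86df65d8a14b20c51aa636eb3429f0252926a51914171a7a95
```

Join the worker nodes to the cluster

Run the following command on each of the two worker nodes. Compared with the printed command, it adds --cri-socket so that kubeadm uses cri-dockerd rather than another runtime socket on the host:

```bash
kubeadm join 192.168.178.150:6443 --token 6c6k69.vhznczps1pr494sh \
  --discovery-token-ca-cert-hash sha256:d095843eb9102f86df65d8a14b20c51aa636eb3429f0252926a51914171a7a95 \
  --cri-socket unix:///var/run/cri-dockerd.sock
```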
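Copying the printed command and appending --cri-socket by hand is easy to get wrong. The flag can be appended programmatically instead. Below is a hedged sketch; with_cri_socket is a hypothetical helper, not a kubeadm feature. It trims any trailing whitespace after the printed command and leaves the command alone if the flag is already present.

```bash
# Hypothetical helper: append the cri-dockerd socket flag (plus any extra
# arguments) to a join command printed by "kubeadm token create --print-join-command".
with_cri_socket() {
  local cmd=$1; shift
  # Strip trailing whitespace from the printed command
  cmd="${cmd%"${cmd##*[![:space:]]}"}"
  case $cmd in
    *--cri-socket*) ;;  # already specified, keep as-is
    *) cmd="$cmd --cri-socket unix:///var/run/cri-dockerd.sock" ;;
  esac
  # Remaining arguments (e.g. --control-plane) are appended verbatim
  [ $# -gt 0 ] && cmd="$cmd $*"
  echo "$cmd"
}
```

On k8s-master, with_cri_socket "$(kubeadm token create --print-join-command)" prints the worker command. Passing --control-plane --ignore-preflight-errors=SystemVerification as extra arguments produces the form used later for k8s-master-2.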

Assign the worker role

```bash
# Run on the master
kubectl label node k8s-node-1 node-role.kubernetes.io/worker=worker
kubectl label node k8s-node-2 node-role.kubernetes.io/worker=worker
```

Join the second master node

On k8s-master-2, create the directories for the certificates:

```bash
cd /root && mkdir -p /etc/kubernetes/pki/etcd && mkdir -p ~/.kube/
```

Copy the certificates from k8s-master to k8s-master-2. With passwordless SSH set up, they copy directly; otherwise, enter the password when prompted:

```bash
scp /etc/kubernetes/pki/ca.crt k8s-master-2:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/ca.key k8s-master-2:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/sa.key k8s-master-2:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/sa.pub k8s-master-2:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-master-2:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/front-proxy-ca.key k8s-master-2:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/etcd/ca.crt k8s-master-2:/etc/kubernetes/pki/etcd/
scp /etc/kubernetes/pki/etcd/ca.key k8s-master-2:/etc/kubernetes/pki/etcd/
```
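The eight scp commands follow one pattern, so a loop can drive them. The sketch below makes three assumptions. copy_control_plane_certs is a hypothetical name. The file list is exactly the one above. DRY_RUN=1 only prints the commands, so the list can be reviewed first.

```bash
# Hypothetical helper: copy the shared CA and service-account keys that a new
# control-plane node needs. With DRY_RUN=1 it only prints the scp commands.
copy_control_plane_certs() {
  local target=$1
  [ -n "$target" ] || { echo "usage: copy_control_plane_certs <host>" >&2; return 1; }
  local pki=/etc/kubernetes/pki f sub run=
  [ "${DRY_RUN:-0}" = 1 ] && run=echo
  for f in ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key \
           etcd/ca.crt etcd/ca.key; do
    # Files under etcd/ go to the etcd/ subdirectory on the target
    case $f in
      */*) sub=${f%/*}/ ;;
      *)   sub= ;;
    esac
    $run scp "$pki/$f" "$target:$pki/$sub" || return 1
  done
}
```

On k8s-master, run DRY_RUN=1 copy_control_plane_certs k8s-master-2 to review the commands. Then run copy_control_plane_certs k8s-master-2 to copy the files for real.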

On k8s-master, check whether controlPlaneEndpoint is set in the kubeadm-config ConfigMap. The cluster was initialized without --control-plane-endpoint, and kubeadm refuses to add a control-plane node to a cluster that has no stable controlPlaneEndpoint. Open the ConfigMap in an editor with kubectl:

```bash
kubectl -n kube-system edit cm kubeadm-config -o yaml
```

Under ClusterConfiguration, add the field controlPlaneEndpoint: "192.168.178.150:6443" (the IP address of k8s-master). After the edit, the ConfigMap looks like this:

```yaml
apiVersion: v1
data:
  ClusterConfiguration: |
    apiServer:
      extraArgs:
        authorization-mode: Node,RBAC
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta3
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controlPlaneEndpoint: "192.168.178.150:6443"
    controllerManager: {}
    dns: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: v1.28.2
    networking:
      dnsDomain: cluster.local
      podSubnet: 10.224.0.0/16
      serviceSubnet: 10.96.0.0/12
    scheduler: {}
kind: ConfigMap
metadata:
  creationTimestamp: "2024-08-16T08:13:08Z"
  name: kubeadm-config
  namespace: kube-system
  resourceVersion: "3713"
  uid: 7d5825b4-3e25-4269-a61c-833cbdd19770
```
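To confirm the change without opening an editor, print the ConfigMap and extract the value. Below is a minimal sketch. It assumes the field sits on its own line, as in the YAML above, and get_cp_endpoint is a hypothetical helper name.

```bash
# Hypothetical check: read the kubeadm-config ConfigMap YAML on stdin and print the
# controlPlaneEndpoint value (quotes stripped); fail if it is missing or empty.
get_cp_endpoint() {
  local value
  value=$(sed -n 's/^[[:space:]]*controlPlaneEndpoint:[[:space:]]*"\{0,1\}\([^"]*\)"\{0,1\}[[:space:]]*$/\1/p' | head -n 1)
  [ -n "$value" ] || { echo "controlPlaneEndpoint not set" >&2; return 1; }
  echo "$value"
}
```

kubectl -n kube-system get cm kubeadm-config -o yaml | get_cp_endpoint should print 192.168.178.150:6443. A non-zero exit status means the field is still missing.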

Restart kubelet:

```bash
systemctl restart kubelet
```

On k8s-master, print the join command again:

```bash
kubeadm token create --print-join-command
```

```text
kubeadm join 192.168.178.150:6443 --token myfuwt.po8c3fasv56k1z3d \
  --discovery-token-ca-cert-hash sha256:d095843eb9102f86df65d8a14b20c51aa636eb3429f0252926a51914171a7a95
```

Join k8s-master-2 as a control-plane node (run on k8s-master-2):

```bash
kubeadm join 192.168.178.150:6443 --token myfuwt.po8c3fasv56k1z3d \
  --discovery-token-ca-cert-hash sha256:d095843eb9102f86df65d8a14b20c51aa636eb3429f0252926a51914171a7a95 \
  --cri-socket unix:///var/run/cri-dockerd.sock \
  --control-plane --ignore-preflight-errors=SystemVerification

# Let the current user run kubectl on k8s-master-2
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

Check the cluster status on k8s-master or k8s-master-2:

```bash
kubectl get node
```

```text
NAME           STATUS     ROLES           AGE     VERSION
k8s-master     NotReady   control-plane   48m     v1.28.2
k8s-master-2   NotReady   control-plane   3m36s   v1.28.2
k8s-node-1     NotReady   <none>          44m     v1.28.2
k8s-node-2     NotReady   <none>          44m     v1.28.2
```

All nodes are NotReady because no network (CNI) plugin has been installed yet.

Install the Calico network plugin

Run on the master:

```bash
kubectl apply -f https://docs.projectcalico.org/archive/v3.25/manifests/calico.yaml
```

Verify the status:

```bash
kubectl get node
```

```text
NAME           STATUS   ROLES           AGE   VERSION
k8s-master     Ready    control-plane   23h   v1.28.2
k8s-master-2   Ready    control-plane   22h   v1.28.2
k8s-node-1     Ready    worker          23h   v1.28.2
k8s-node-2     Ready    worker          23h   v1.28.2
```

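Configure IPVS for Kubernetes

IPVS is a load balancer built into the Linux kernel; its modules were loaded in step 8 of the initialization script. Changing one kube-proxy setting makes Kubernetes use IPVS for Service load balancing. If the setting is left unchanged, kube-proxy runs in iptables mode.

```bash
kubectl edit configmap kube-proxy -n kube-system
# Change the setting to:
#   mode: "ipvs"
# Delete all kube-proxy pods so that they restart with the new mode
kubectl delete pods -n kube-system -l k8s-app=kube-proxy
```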
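The edit can also be done non-interactively. The sketch below assumes kubeadm's default kube-proxy ConfigMap, in which the KubeProxyConfiguration contains the line mode: "" (empty, meaning iptables). set_ipvs_mode is a hypothetical filter that rewrites only that line. Because the pattern is anchored at the start of the line, fields such as detectLocalMode are left alone.

```bash
# Hypothetical filter: read the kube-proxy ConfigMap YAML on stdin and
# switch the proxy mode from the empty default to "ipvs".
set_ipvs_mode() {
  sed -E 's/^([[:space:]]*)mode: ""[[:space:]]*$/\1mode: "ipvs"/'
}
```

To apply it, run kubectl -n kube-system get cm kube-proxy -o yaml | set_ipvs_mode | kubectl replace -f - before deleting the kube-proxy pods. After the pods restart, ipvsadm -Ln should list virtual servers for the cluster Services.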

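Install Dashboard (on the master node only)

Download:

```bash
wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml
```

In recommended.yaml, edit the kubernetes-dashboard Service section and set its type to NodePort, so that the port is exposed outside the cluster.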
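The edit can be scripted instead of done by hand. Below is a hedged awk sketch; dashboard_nodeport is a hypothetical name. It assumes the v2.7.0 layout: the kubernetes-dashboard Service document starts with kind: Service, has a two-space-indented name: kubernetes-dashboard under metadata, and has a top-level spec: line. The sketch inserts type: NodePort directly under that spec: and leaves the dashboard-metrics-scraper Service untouched.

```bash
# Hypothetical helper: add "type: NodePort" to the kubernetes-dashboard Service
# in recommended.yaml (v2.7.0 layout assumed).
dashboard_nodeport() {
  local file=${1:-recommended.yaml}
  awk '
    /^---/                                     { in_svc = 0; target = 0 }  # new YAML document
    /^kind: Service$/                          { in_svc = 1 }
    in_svc && /^  name: kubernetes-dashboard$/ { target = 1 }
    { print }
    target && /^spec:$/                        { print "  type: NodePort"; target = 0 }
  ' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}
```

Run dashboard_nodeport recommended.yaml before the kubectl apply step. If the Service is already deployed, kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}' makes the same change on the live object.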

Install:

```bash
kubectl apply -f recommended.yaml
```

Check the result:

```bash
kubectl get pods,svc -n kubernetes-dashboard
```

```text
NAME                                             READY   STATUS    RESTARTS   AGE
pod/dashboard-metrics-scraper-5657497c4c-5vcpn   1/1     Running   0          3m58s
pod/kubernetes-dashboard-78f87ddfc-ccwdm         1/1     Running   0          3m58s

NAME                                TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
service/dashboard-metrics-scraper   ClusterIP   10.96.38.237    <none>        8000/TCP        3m58s
service/kubernetes-dashboard        NodePort    10.107.43.228   <none>        443:30503/TCP   3m58s
```

Create an account

Create the file dashboard-access-token.yaml (for example with vim dashboard-access-token.yaml):

```yaml
# Creating a Service Account
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
# Creating a ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
---
# Getting a long-lived Bearer Token for ServiceAccount
apiVersion: v1
kind: Secret
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: "admin-user"
type: kubernetes.io/service-account-token
# Clean up and next steps
# kubectl -n kubernetes-dashboard delete serviceaccount admin-user
# kubectl -n kubernetes-dashboard delete clusterrolebinding admin-user
```

Apply it:

```bash
kubectl apply -f dashboard-access-token.yaml
```

Get the token:

```bash
kubectl get secret admin-user -n kubernetes-dashboard -o jsonpath='{.data.token}' | base64 -d
```

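Access the dashboard

```bash
kubectl get svc -n kubernetes-dashboard
```

```text
NAME                        TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
dashboard-metrics-scraper   ClusterIP   10.96.38.237    <none>        8000/TCP        9m39s
kubernetes-dashboard        NodePort    10.107.43.228   <none>        443:30503/TCP   9m39s
```

In a browser, open https://<cluster IP>:<NodePort>, here https://192.168.178.150:30503/. Note that the URL must use https. Then enter the token obtained in the previous step.

However, I found that the token expires automatically after 15 minutes without any activity on the dashboard.

Fix the default 15-minute token expiry

```bash
vim recommended.yaml
```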

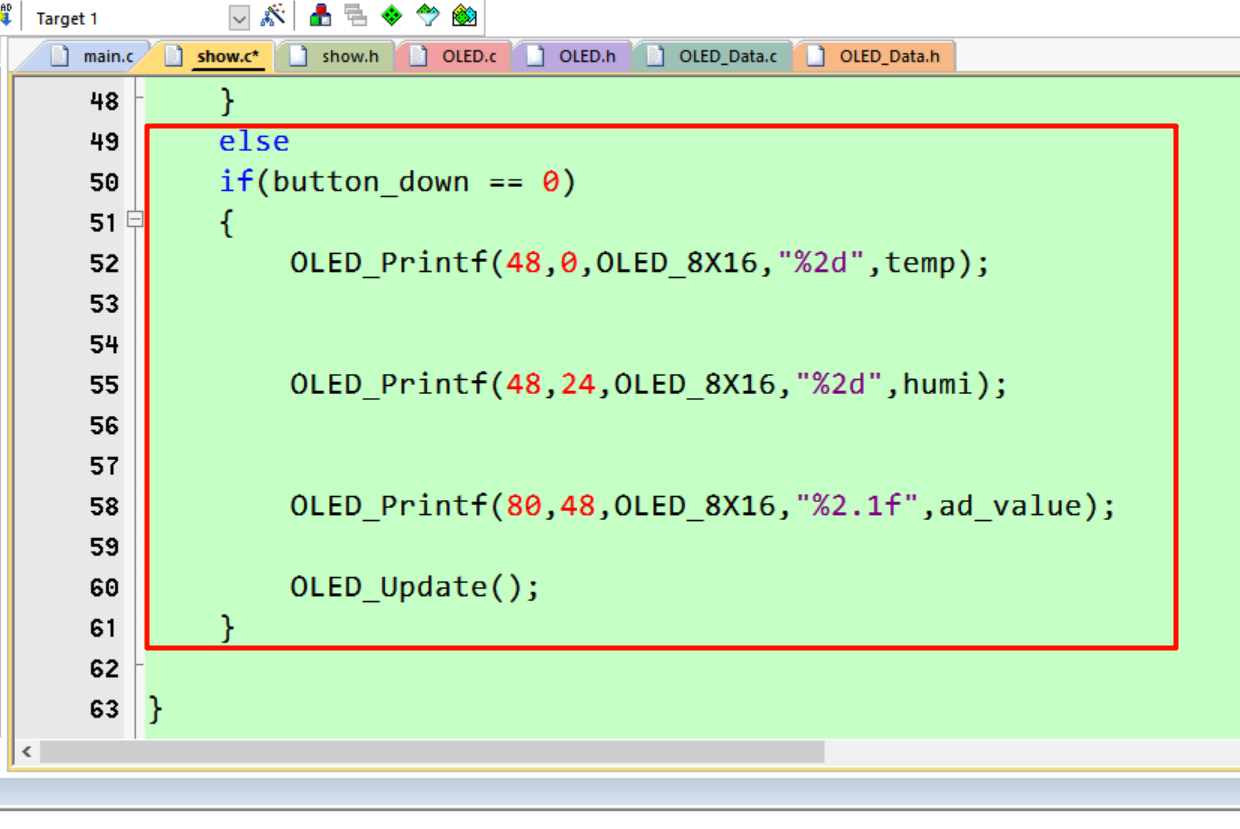

Add the setting shown in the figure, raising the expiry time to 12 hours.
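The figure's content is not reproduced in text here. The sketch below assumes the setting is the Dashboard container's --token-ttl argument, in seconds. The Dashboard default of 900 s matches the 15-minute expiry observed above. set_token_ttl is a hypothetical helper. It places the argument after - --auto-generate-certificates with the same indentation, or updates the value if the argument is already there.

```bash
# Hypothetical helper: set --token-ttl (in seconds) on the Dashboard container args.
# $1 = manifest file, $2 = expiry in hours (default 12).
set_token_ttl() {
  local file=${1:-recommended.yaml} hours=${2:-12}
  local ttl=$(( hours * 3600 ))
  if grep -q -- '--token-ttl=' "$file"; then
    # Already present: only update the value
    sed -i -E "s/--token-ttl=[0-9]+/--token-ttl=${ttl}/" "$file"
  else
    # Insert after --auto-generate-certificates, reusing that line's indentation
    awk -v ttl="$ttl" '
      { print }
      /- --auto-generate-certificates/ {
        match($0, /^ */)
        printf "%s- --token-ttl=%d\n", substr($0, 1, RLENGTH), ttl
      }
    ' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
  fi
}
```

set_token_ttl recommended.yaml 12 writes --token-ttl=43200 (12 * 3600 s). After that, re-apply the manifest.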

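Re-apply:

```bash
kubectl apply -f recommended.yaml
```

Install Kuboard (on any node; the master is recommended)

Kuboard is a management tool for multiple Kubernetes clusters. If a company runs several clusters internally, it can manage all of them from Kuboard.

```bash
# -p 80:80/tcp           the first (host) port can be changed as needed
# -p 10081:10081/tcp     better left unchanged unless there is a specific need
# KUBOARD_ENDPOINT       use the IP:PORT of the machine Kuboard is deployed on
# -v /kuboard-data:/data the first (host) data path can be changed as needed
sudo docker run -d \
  --restart=unless-stopped \
  --name=kuboard \
  -p 80:80/tcp \
  -p 10081:10081/tcp \
  -e KUBOARD_ENDPOINT="http://192.168.178.150:10081" \
  -e KUBOARD_AGENT_SERVER_TCP_PORT="10081" \
  -v /kuboard-data:/data \
  swr.cn-east-2.myhuaweicloud.com/kuboard/kuboard:v3
```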

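Open http://192.168.178.150 (the master node) in a browser and log in with:

- Username: admin
- Password: Kuboard123

After logging in to Kuboard, import your Kubernetes cluster.

Click OK, choose the identity Kuboard should use to access the cluster, and then view the cluster summary.

A successful import shows the cluster summary. Compute resource usage only appears after metrics-server has been installed.

Install kubectl command auto-completion

```bash
yum install bash-completion -y
```

Enable auto-completion permanently:

```bash
echo "source <(kubectl completion bash)" >> ~/.bashrc && bash
```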

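Deploy metrics-server (on the master)

Download:

```bash
wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.2/components.yaml
```

Edit components.yaml. --kubelet-insecure-tls skips verification of the kubelets' self-signed serving certificates:

```yaml
      containers:
      - args:
        ...
        - --kubelet-insecure-tls   # add this line
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.2   # change the image registry
```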
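Both edits can be applied in one awk pass. The hedged sketch below assumes the v0.6.2 layout, in which the args list contains a - --metric-resolution=... entry and the image line ends in metrics-server:v0.6.2. patch_metrics_server is a hypothetical name, and the mirror image defaults to the one above.

```bash
# Hypothetical helper: add --kubelet-insecure-tls and switch the image to a mirror
# in components.yaml; safe to run twice.
patch_metrics_server() {
  local file=${1:-components.yaml}
  local image=${2:-registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.2}
  local add=1
  # Do not add the flag a second time
  grep -q -- '--kubelet-insecure-tls' "$file" && add=0
  awk -v image="$image" -v add="$add" '
    # Replace the metrics-server image, keeping the indentation
    /^ *image: .*metrics-server:/ {
      match($0, /^ */); print substr($0, 1, RLENGTH) "image: " image; next
    }
    { print }
    # Insert the flag after --metric-resolution, once
    add && !done && /- --metric-resolution=/ {
      match($0, /^ */); print substr($0, 1, RLENGTH) "- --kubelet-insecure-tls"; done = 1
    }
  ' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}
```

Run patch_metrics_server components.yaml before the kubectl apply step below.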

Apply:

```bash
kubectl apply -f components.yaml
```

Check the result:

```bash
kubectl top nodes
```

```text
NAME           CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8s-master     258m         12%    1881Mi          51%
k8s-master-2   171m         8%     1067Mi          29%
k8s-node-1     60m          3%     702Mi           40%
k8s-node-2     75m          3%     785Mi           45%
```

Install Prometheus, Grafana and node-exporter

Pull the images:

```bash
docker pull prom/node-exporter
docker pull prom/prometheus
docker pull grafana/grafana
```

Deploy node-exporter

node-exporter.yaml:

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    k8s-app: node-exporter
spec:
  selector:
    matchLabels:
      k8s-app: node-exporter
  template:
    metadata:
      labels:
        k8s-app: node-exporter
    spec:
      containers:
      - image: prom/node-exporter
        name: node-exporter
        ports:
        - containerPort: 9100
          protocol: TCP
          name: http
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: node-exporter
  name: node-exporter
  namespace: kube-system
spec:
  ports:
  - name: http
    port: 9100
    nodePort: 31672
    protocol: TCP
  type: NodePort
  selector:
    k8s-app: node-exporter
```

```bash
kubectl apply -f node-exporter.yaml
```

Check the result:

```bash
kubectl get daemonset -n kube-system
```

```text
NAME            DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
calico-node     4         4         4       4            4           kubernetes.io/os=linux   21h
kube-proxy      4         4         4       4            4           kubernetes.io/os=linux   23h
node-exporter   2         2         2       2            2           <none>                   96m
```

```bash
kubectl get svc -n kube-system
```

```text
NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                  AGE
kube-dns         ClusterIP   10.96.0.10      <none>        53/UDP,53/TCP,9153/TCP   23h
metrics-server   ClusterIP   10.110.40.26    <none>        443/TCP                  5h18m
node-exporter    NodePort    10.108.60.168   <none>        9100:31672/TCP           97m
```

```bash
kubectl get pod -A -o wide
```

```text
NAMESPACE     NAME                  READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
kube-system   node-exporter-dqffx   1/1     Running   0          99m   10.224.109.69   k8s-node-1   <none>           <none>
kube-system   node-exporter-vslwc   1/1     Running   0          99m   10.224.140.72   k8s-node-2   <none>           <none>
```

node-exporter runs only on the two worker nodes (DESIRED 2). The DaemonSet has no toleration for the control-plane taint on the masters.

Deploy Prometheus

Create a ClusterRole and a ServiceAccount (SA) for Prometheus, then bind the SA to the ClusterRole with a ClusterRoleBinding.

rbac-setup.yaml:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
# Ingress is served from networking.k8s.io since Kubernetes 1.22; extensions only exists on older clusters
- apiGroups:
  - extensions
  - networking.k8s.io
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: kube-system
```

```bash
kubectl apply -f rbac-setup.yaml
```

configmap.yaml:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    scrape_configs:
    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: 'kubernetes-nodes'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics
    - job_name: 'kubernetes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
    - job_name: 'kubernetes-services'
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__address__]
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        target_label: kubernetes_name
    - job_name: 'kubernetes-ingresses'
      kubernetes_sd_configs:
      - role: ingress
      relabel_configs:
      - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
        regex: (.+);(.+);(.+)
        replacement: ${1}://${2}${3}
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_ingress_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_ingress_name]
        target_label: kubernetes_name
    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
```

```bash
kubectl apply -f configmap.yaml
```

Use a Deployment to start a pod running Prometheus.

prometheus.deploy.yml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: prometheus-deployment
  name: prometheus
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
      - image: prom/prometheus
        name: prometheus
        command:
        - "/bin/prometheus"
        args:
        - "--config.file=/etc/prometheus/prometheus.yml"
        - "--storage.tsdb.path=/prometheus"
        # --storage.tsdb.retention.time replaces the deprecated --storage.tsdb.retention flag
        - "--storage.tsdb.retention.time=24h"
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: "/prometheus"
          name: data
        - mountPath: "/etc/prometheus"
          name: config-volume
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
          limits:
            cpu: 500m
            memory: 2500Mi
      serviceAccountName: prometheus
      volumes:
      - name: data
        emptyDir: {}
      - name: config-volume
        configMap:
          name: prometheus-config
```

```bash
kubectl apply -f prometheus.deploy.yml
```

Create a Service to expose it.

prometheus.svc.yml:

```yaml
kind: Service
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus
  namespace: kube-system
spec:
  type: NodePort
  ports:
  - port: 9090
    targetPort: 9090
    nodePort: 30003
  selector:
    app: prometheus
```

```bash
kubectl apply -f prometheus.svc.yml
```

Deploy Grafana

grafana-deploy.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana-core
  namespace: kube-system
  labels:
    app: grafana
    component: core
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
        component: core
    spec:
      containers:
      - image: grafana/grafana
        name: grafana-core
        imagePullPolicy: IfNotPresent
        resources:
          # keep request = limit to keep this container in guaranteed class
          limits:
            cpu: 100m
            memory: 100Mi
          requests:
            cpu: 100m
            memory: 100Mi
        env:
          # The following env variables set up basic auth with the default admin user and admin password.
          - name: GF_AUTH_BASIC_ENABLED
            value: "true"
          - name: GF_AUTH_ANONYMOUS_ENABLED
            value: "false"
          # - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          #   value: Admin
          # does not really work, because of template variables in exported dashboards:
          # - name: GF_DASHBOARDS_JSON_ENABLED
          #   value: "true"
        readinessProbe:
          httpGet:
            path: /login
            port: 3000
          # initialDelaySeconds: 30
          # timeoutSeconds: 1
        # volumeMounts:   # no volume is mounted for now
        # - name: grafana-persistent-storage
        #   mountPath: /var
      # volumes:
      # - name: grafana-persistent-storage
      #   emptyDir: {}
```

```bash
kubectl apply -f grafana-deploy.yaml
```

grafana-svc.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: kube-system
  labels:
    app: grafana
    component: core
spec:
  type: NodePort
  ports:
  - port: 3000
    targetPort: 3000
    nodePort: 30124
  selector:
    app: grafana
    component: core
```

```bash
kubectl apply -f grafana-svc.yaml
```

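grafana-ing.yaml (the Ingress only takes effect if an Ingress controller is installed in the cluster):

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: grafana
  namespace: kube-system
spec:
  rules:
  - host: k8s.grafana
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: grafana
            port:
              number: 3000
```

```bash
kubectl apply -f grafana-ing.yaml
```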

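Check the result

Check the pods and Services, for example with kubectl get pods,svc -n kube-system.

Visit http://192.168.178.150:31672/metrics to see the data collected by node-exporter.

Visit Prometheus at http://192.168.178.150:30003/graph.

Visit Grafana at http://192.168.178.150:30124/login. The default username and password are both admin, and Grafana asks you to change the password after the first login.

After logging in, add a data source under Data sources.

Under Dashboards, import dashboard template 315.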