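Follow me on CSDN: https://spike.blog.csdn.net/

Article URL: https://spike.blog.csdn.net/article/details/144304351

Disclaimer: This article draws on personal knowledge and public materials and is intended for academic exchange only. Discussion is welcome; reposting is not permitted.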

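LLaVA-CoT is a vision-language model built around Chain-of-Thought (CoT) reasoning. It performs autonomous multistage reasoning to strengthen systematic, structured reasoning, in 4 stages: summary (SUMMARY), visual interpretation (CAPTION), logical reasoning (REASONING), and conclusion generation (CONCLUSION). It also proposes Inference-Time Stage-Level Beam Search to enable effective inference-time scaling. The reported results show that, on multimodal reasoning benchmarks, it outperforms its base model as well as other larger and even closed-source models.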

Paper: LLaVA-CoT: Let Vision Language Models Reason Step-by-Step

GitHub: https://github.com/PKU-YuanGroup/LLaVA-CoT

git clone https://github.com/PKU-YuanGroup/LLaVA-CoT.git

Prepare the model (Llama-3.2V-11B-cot), the training data (LLaVA-CoT-100k), and the reference model (Llama-3.2-11B-Vision-Instruct):

- Llama-3.2V-11B-cot: 40 GB

- LLaVA-CoT-100k: 159 GB

- Llama-3.2-11B-Vision-Instruct: 40 GB

cd [your path]/huggingface/

# LLaVA-CoT model and data

huggingface-cli download --token [your token] Xkev/Llama-3.2V-11B-cot --local-dir Xkev/Llama-3.2V-11B-cot

huggingface-cli download --repo-type dataset --token [your token] Xkev/LLaVA-CoT-100k --local-dir Xkev/LLaVA-CoT-100k

# The HuggingFace repository requires access permission

# huggingface-cli download --token [your token] meta-llama/Llama-3.2-11B-Vision-Instruct --local-dir meta-llama/Llama-3.2-11B-Vision-Instruct

# ModelScope model

modelscope download --model fireicewolf/Llama-3.2-11B-Vision-Instruct --local_dir meta-llama/Llama-3.2-11B-Vision-Instruct

Meta's Llama-3.2-11B-Vision-Instruct requires access permission on HuggingFace, so downloading the ModelScope version, fireicewolf/Llama-3.2-11B-Vision-Instruct, is recommended.

Prepare the training data, following HuggingFace - Xkev/LLaVA-CoT-100k:

cat image.zip.part-* > image.zip # slow

unzip image.zip
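For environments without a POSIX shell, the `cat` step above can be approximated in Python. This is a minimal sketch, not part of the LLaVA-CoT repository: the helper name `join_parts` and its parameters are hypothetical, and it assumes the parts sort correctly by file name, which is also what the shell glob relies on.

```python
import shutil
from pathlib import Path


def join_parts(parts_dir: str,
               prefix: str = "image.zip.part-",
               out_name: str = "image.zip") -> Path:
    """Concatenate split archive parts, sorted by file name, into one file.

    Hypothetical helper: mirrors `cat image.zip.part-* > image.zip`.
    """
    root = Path(parts_dir)
    parts = sorted(root.glob(prefix + "*"))
    if not parts:
        raise FileNotFoundError(f"no files matching {prefix}* in {root}")
    out_path = root / out_name
    with open(out_path, "wb") as out:
        for part in parts:
            # Stream each part so large files are never fully loaded into memory
            with open(part, "rb") as src:
                shutil.copyfileobj(src, out)
    return out_path
```

Calling `join_parts("[your path]/huggingface/Xkev/LLaVA-CoT-100k")` would then produce `image.zip` next to the parts, ready for `unzip`.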

Training sample format:

{
  "id": "f2813de1-767c-4e27-8ba8-e04fd383209d",
  "image": "sqa/train/20839/image.png",
  "conversations": [
    {"from": "human", "value": "Which country is highlighted?\nContext: N/A\nOptions: (A) Solomon Islands (B) Nauru (C) Vanuatu (D) Fiji"},
    {"from": "gpt", "value": "<SUMMARY> I will determine the highlighted country by examining its location on the map and comparing it with the given options. I will then conclude if this country has any territorial disputes based on the supplied context. </SUMMARY>\n\n<CAPTION> The image depicts a map highlighting a country in the South Pacific region. The highlighted area is in a boxed section between Australia and New Zealand, directly addressing the region known to include several Pacific island countries. </CAPTION>\n\n<REASONING> The highlighted country is within the Pacific Islands region. Based on its position relative to neighboring larger landmasses like Australia and nearby countries such as Papua New Guinea and New Zealand, the highlighted country aligns with the location of Vanuatu. According to the context, Vanuatu has a territorial dispute over Matthew and Hunter Islands, claimed by both Vanuatu and France. Therefore, the presence of a dashed box labeled \"Disputed island\" suggests the inclusion of this dispute in the overview of the country's territories. </REASONING>\n\n<CONCLUSION> The answer is C. </CONCLUSION>"}
  ]
}

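That is, the answer breaks down into the 4 stages:

- SUMMARY: I will determine the highlighted country by examining its location on the map and comparing it with the given options. I will then conclude if this country has any territorial disputes based on the supplied context.

- CAPTION: The image depicts a map highlighting a country in the South Pacific region. The highlighted area is in a boxed section between Australia and New Zealand, directly addressing the region known to include several Pacific island countries.

- REASONING: The highlighted country is within the Pacific Islands region. Based on its position relative to neighboring larger landmasses like Australia and nearby countries such as Papua New Guinea and New Zealand, the highlighted country aligns with the location of Vanuatu. According to the context, Vanuatu has a territorial dispute over Matthew and Hunter Islands, claimed by both Vanuatu and France. Therefore, the presence of a dashed box labeled "Disputed island" suggests the inclusion of this dispute in the overview of the country's territories.

- CONCLUSION: The answer is C.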
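The tagged structure of a training answer can be split into its stages with a few lines of Python. The following is a minimal sketch under assumptions: the helper name `split_stages` is hypothetical (it is not part of the dataset tooling), and the `sample` string is an abbreviated version of the training sample above, keeping only the first sentence of each stage.

```python
import re

# Stage tags used in LLaVA-CoT-100k answers
STAGES = ("SUMMARY", "CAPTION", "REASONING", "CONCLUSION")


def split_stages(response: str) -> dict:
    """Return a {stage_name: text} dict for every stage tag found in the response."""
    stages = {}
    for name in STAGES:
        # DOTALL lets a stage span several lines; non-greedy stops at the first closing tag
        match = re.search(rf"<{name}>(.*?)</{name}>", response, flags=re.DOTALL)
        if match:
            stages[name] = match.group(1).strip()
    return stages


# Abbreviated sample (first sentence of each stage only)
sample = (
    "<SUMMARY> I will determine the highlighted country by examining its location "
    "on the map and comparing it with the given options. </SUMMARY>\n\n"
    "<CAPTION> The image depicts a map highlighting a country in the South Pacific "
    "region. </CAPTION>\n\n"
    "<REASONING> The highlighted country is within the Pacific Islands region. "
    "</REASONING>\n\n"
    "<CONCLUSION> The answer is C. </CONCLUSION>"
)

stages = split_stages(sample)
for name in STAGES:
    print(f"{name}: {stages.get(name, '(missing)')}")
```

A stage that is absent from the response is simply left out of the dictionary, so callers can check for incomplete answers with `len(stages) == len(STAGES)`.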

Test the model, following "Fine-tuning large models with LLaMA-Factory: environment setup, training, and inference":

cd [your path]/llm/LLaMA-Factory

conda activate llama_factory

unset https_proxy http_proxy

# export GRADIO_ANALYTICS_ENABLED=False # required, otherwise an error is raised

CUDA_VISIBLE_DEVICES=0 GRADIO_ANALYTICS_ENABLED=False API_PORT=7861 llamafactory-cli webchat \
--model_name_or_path [your path]/huggingface/Xkev/Llama-3.2V-11B-cot \
--template mllama

# export GRADIO_ANALYTICS_ENABLED=False # required, otherwise an error is raised

CUDA_VISIBLE_DEVICES=1 GRADIO_ANALYTICS_ENABLED=False API_PORT=7862 llamafactory-cli webchat \
--model_name_or_path [your path]/huggingface/meta-llama/Llama-3.2-11B-Vision-Instruct \
--template mllama

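Note: the two models cannot be started at the same time; start them one after the other.

LLaVA-CoT generates 4 distinct stages: summary (SUMMARY), caption (CAPTION), reasoning (REASONING), and conclusion (CONCLUSION):

- Summary (SUMMARY): the model gives a brief overview of the task it is about to perform.

- Caption (CAPTION): a description of the relevant parts of the image (if an image is present), focusing on the elements related to the question.

- Reasoning (REASONING): a detailed analysis in which the model works through the question systematically.

- Conclusion (CONCLUSION): a concise summary of the answer, giving the final response based on the preceding reasoning.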

The LLaMA-Factory ChatBot:

Compare the outputs of LLaVA-CoT and Llama-Vision-Instruct. Note that by default neither model uses stage-level beam search, i.e. each runs a single inference pass:

- LLaVA-CoT: proceeds through the 4 steps SUMMARY - CAPTION - REASONING - CONCLUSION.

- Llama-Vision-Instruct: answers through a direct inference flow.

LLaVA-CoT output:

I will analyze the image by focusing on the elements and composition to provide an accurate description that matches the standard answer.

The image features a person wearing a white shirt and a white face mask. They are sitting on a stool with a pink cushion. Their feet are adorned with white shoes featuring a decorative pattern. Next to them is a green stool, and a circular object is visible in the foreground. The scene is set against a white wall, creating a minimalist and modern aesthetic.

To describe the image, I will note the clothing and accessories, such as the white shirt, face mask, and shoes. I will also observe the seating arrangement, mentioning the stools and their colors. Additionally, I will describe the surrounding objects and the overall setting to capture the essence of the scene.

The image captures a serene moment in a minimalist, modern setting. A person, dressed in a crisp white shirt and a white face mask, is seated on a stool with a pink cushion. Their feet, clad in white shoes with a decorative pattern, rest on the ground. The person’s head is tilted back, and their arms are crossed over their chest, adding a sense of relaxation to the scene. The stool they sit on is green, providing a pop of color against the otherwise monochrome backdrop. A circular object, possibly a table or a decoration, sits in the foreground, adding depth to the image. The background is a stark white wall, devoid of any distractions, allowing the viewer’s focus to remain on the person and their surroundings. The overall composition of the image suggests a moment of quiet contemplation in a simple yet elegant environment.

Llama-Vision-Instruct output:

This image presents a close-up view of a woman’s legs and feet, dressed in a crisp white outfit and striking white high-heeled shoes with intricate white embellishments. She is seated on a stool, with her legs bent at the knees and her feet crossed at the ankles, as she leans back against the stool with her arms tucked behind her.

The stool features a soft pink cushion and a light-colored wooden frame, situated in front of a mint green stool. The room’s white walls and shiny white floor provide a clean and minimalist backdrop, while the sunlight streaming through the window casts a warm glow on the scene.

Online demo on HuggingFace: Llama-3.2V-11B-cot

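LLaVA-CoT uses stage-level beam search: in each stage (summary, caption, reasoning, conclusion) it generates multiple candidates and selects the best one (the selection is made by an LLM) to continue generation. This is the Inference-Time Stage-Level Beam Search method, and it has to be integrated into VLMEvalKit to be used. Key points of the source code:

- def judge(self, image, prompt, outputs, type="summary") is the judge function, which is itself handled by the large model.

- By default, each stage generates 10 candidates, and the best one is selected as the stage result.

- The 4 stages run in order, each contributing 1 result, and together these form the final result.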
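The selection scheme described above can be sketched independently of any model. This is a toy illustration under stated assumptions, not the repository's implementation: `stage_level_beam_search`, `make_toy_generator`, and `toy_judge` are hypothetical names, the generator and judge are deterministic stand-ins for the vision-language model, and the winner of each stage is carried forward as the prefix for the next stage.

```python
import itertools
import random
import re

STAGES = ["summary", "caption", "reasoning", "conclusion"]


def stage_level_beam_search(generate, judge, num_candidates=10, seed=0):
    """Per stage: sample candidates, reduce them by pairwise judging, keep one winner."""
    rng = random.Random(seed)
    prefix = ""
    for stage in STAGES:
        candidates = [generate(prefix, stage) for _ in range(num_candidates)]
        # Knockout: draw two random candidates, keep the one the judge prefers
        while len(candidates) > 1:
            first = candidates.pop(rng.randrange(len(candidates)))
            second = candidates.pop(rng.randrange(len(candidates)))
            winner = first if judge(stage, [first, second]) == 0 else second
            candidates.append(winner)
        # The surviving candidate becomes part of the context for the next stage
        prefix += candidates[0]
    return prefix


def make_toy_generator():
    """Toy stand-in for the model: each call returns a tagged, numbered candidate."""
    counter = itertools.count()

    def generate(prefix, stage):
        return f"<{stage}>{next(counter)}</{stage}>"

    return generate


def toy_judge(stage, pair):
    """Toy stand-in for the LLM judge: prefers the candidate with the larger number."""
    scores = [int(re.search(r">(\d+)<", text).group(1)) for text in pair]
    return 0 if scores[0] >= scores[1] else 1


result = stage_level_beam_search(make_toy_generator(), toy_judge)
print(result)
```

With 10 candidates per stage, the knockout needs 9 judge calls per stage, so the full run costs 40 generations and 36 judgments for one answer.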

Set up the VLMEvalKit tool, following "Evaluation tool VLMEvalKit: deployment and testing".

In VLMEvalKit, replace the llama_vision.py script, then run the test command:

# python3 run.py --data MME --model LLaVA-CoT --verbose

nohup torchrun --nproc-per-node=8 run.py --data MME --model LLaVA-CoT --verbose > nohup.o1.out &

GPU memory usage is 23446MiB / 81920MiB, i.e. about 23 GB.

The source code is as follows:

# Requires: copy, numpy as np, PIL.Image, and transformers.StoppingCriteriaList.
# StopOnStrings and DATASET_TYPE are assumed to come from the surrounding
# llama_vision.py script and VLMEvalKit.

# Generate the output stage by stage with stage-level beam search
def generate_inner_stage_beam(self, message, dataset=None):
    # Convert the incoming message into a prompt and an image path
    prompt, image_path = self.message_to_promptimg(message, dataset=dataset)
    # Open the image file
    image = Image.open(image_path)
    # Build a user message whose content holds the image and the text
    messages = [
        {'role': 'user', 'content': [
            {'type': 'image'},
            {'type': 'text', 'text': prompt}
        ]}
    ]
    # Apply the chat template and append the generation prompt
    input_text = self.processor.apply_chat_template(messages, add_generation_prompt=True)
    # Convert image and text into model inputs and move them to the device (e.g. GPU)
    inputs = self.processor(image, input_text, return_tensors='pt').to(self.device)
    # Without a custom prompt, set max_new_tokens according to the dataset type
    if not self.use_custom_prompt(dataset):
        if DATASET_TYPE(dataset) == 'MCQ' or DATASET_TYPE(dataset) == 'Y/N':
            self.kwargs['max_new_tokens'] = 2048
        else:
            self.kwargs['max_new_tokens'] = 2048
    # Stages and their matching end markers
    stages = ['<SUMMARY>', '<CAPTION>', '<REASONING>', '<CONCLUSION>']
    end_markers = ['</SUMMARY>', '</CAPTION>', '</REASONING>', '</CONCLUSION>']
    # Length of the initial input IDs
    initial_length = len(inputs['input_ids'][0])
    # Deep-copy the input IDs
    input_ids = copy.deepcopy(inputs['input_ids'])
    # Iterate over each stage and its end marker
    for stage, end_marker in zip(stages, end_markers):
        # Stop generating once the text contains the end marker
        stop_criteria = StoppingCriteriaList([StopOnStrings([end_marker], self.processor.tokenizer)])
        # Candidate generations for this stage
        candidates = []
        # Generate 10 candidates
        for _ in range(10):
            generation_kwargs = self.kwargs.copy()
            generation_kwargs.update({'stopping_criteria': stop_criteria})
            # Convert image and text into model inputs and move them to the device.
            # NOTE: inputs are rebuilt from input_text, which is never updated, so as
            # written the candidate selected in an earlier stage is not fed into this call.
            inputs = self.processor(image, input_text, return_tensors='pt').to(self.device)
            # Generate with the model
            output = self.model.generate(**inputs, **generation_kwargs)
            # Full sequence of generated IDs
            new_generated_ids = output[0]
            # Decode the newly generated text
            generated_text = self.processor.tokenizer.decode(new_generated_ids[initial_length:], skip_special_tokens=True)
            # Store the IDs and the text as a candidate
            candidates.append({
                'input_ids': new_generated_ids.unsqueeze(0),
                'generated_text': generated_text,
            })
        # Reduce the candidates to the best one by pairwise comparison
        while(len(candidates) > 1):
            # Pick two candidates at random
            candidate1 = candidates.pop(np.random.randint(len(candidates)))
            candidate2 = candidates.pop(np.random.randint(len(candidates)))
            outputs = [candidate1['generated_text'], candidate2['generated_text']]
            # Let the judge pick the better text for this stage, given image and prompt
            best_index = self.judge(image, prompt, outputs, type=stage[1:-1].lower())
            if best_index == 0:
                candidates.append(candidate1)
            else:
                candidates.append(candidate2)
        # Update the input IDs to those of the best candidate
        input_ids = candidates[0]['input_ids']
    # Decode the final output text
    final_output = self.processor.tokenizer.decode(input_ids[0][initial_length:], skip_special_tokens=True)
    # Return the final output text
    return final_output

注意:

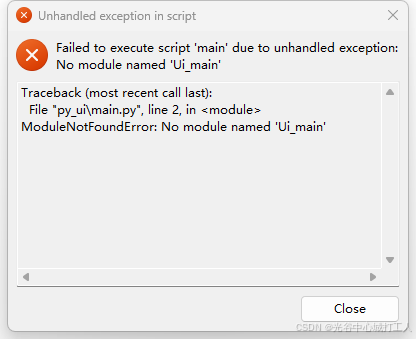

self.processor(image, input_text, return_tensors='pt')需要输入input_text而不是input_ids需要更正,否则报错。

The LLM judge code asks the large model to compare 2 outputs, decide which is better, and choose 1 or 2 (Since [reason], I choose response [1/2].), as follows:

def judge(self, image, prompt, outputs, type="summary"):
    input_outputs = []
    hint = None
    # ...
    judge_prompt += f'\n\nQuestion: {prompt}'
    if hint:
        judge_prompt += f'\n\nHint about the Question: {hint}'
    for i, output in enumerate(input_outputs):
        judge_prompt += f'\nRepsonse {i+1}: {output}'
    judge_prompt += f'\n\n{recall_prompt}'
    judge_prompt += f' Please strictly follow the following format requirements when outputting, and don’t have any other unnecessary words.'
    judge_prompt += f'\n\nOutput format: "Since [reason], I choose response [1/2]."'
    # Build the judge message with the image and the comparison prompt
    judge_message = [
        {'role': 'user', 'content': [
            {'type': 'image'},
            {'type': 'text', 'text': judge_prompt}
        ]}
    ]
    judge_input_text = self.processor.apply_chat_template(judge_message, add_generation_prompt=True)
    judge_inputs = self.processor(image, judge_input_text, return_tensors='pt').to(self.device)
    judge_output = self.model.generate(**judge_inputs, **self.kwargs)
    # Decode only the newly generated tokens and strip the end-of-turn markers
    judge_output_text = self.processor.decode(judge_output[0][judge_inputs['input_ids'].shape[1]:]).replace('<|eot_id|>', '').replace('<|endoftext|>', '')
    # log to log.jsonl (json format) {"prompt": prompt, "outputs": outputs, "judge_output": judge_output_text}
    with open('log.jsonl', 'a') as f:
        json_obj = {
            "prompt": prompt,
            "outputs": outputs,
            "judge_output": judge_output_text
        }
        f.write(json.dumps(json_obj) + '\n')
    # Return index 0 if the judge chose response 1, otherwise index 1
    if "I choose response 1" in judge_output_text:
        return 0
    else:
        return 1
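The final step of the judge, mapping the free-text verdict to a candidate index, can be isolated and tested on its own. The sketch below is a hypothetical variant (`parse_choice` is not a function in the repository): it uses a regular expression that tolerates differences in case and whitespace, and it keeps the original fallback of returning index 1 when no verdict is found.

```python
import re


def parse_choice(judge_output_text: str) -> int:
    """Map 'Since [reason], I choose response [1/2].' to a 0-based candidate index."""
    match = re.search(r"I choose response\s*([12])", judge_output_text,
                      flags=re.IGNORECASE)
    if match:
        return int(match.group(1)) - 1
    # No recognizable verdict: fall back to index 1, as the original code does
    return 1


verdicts = [
    "Since the first caption is more accurate, I choose response 1.",
    "Since it covers all options, I choose response 2.",
    "Both responses look similar.",
]
for text in verdicts:
    print(parse_choice(text), "<-", text)
```

Because an unparseable verdict silently favors the second candidate, logging such cases separately (for example next to the entries in log.jsonl) may help estimate how often the judge ignores the output format.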

References:

- Video tools: video download, video frames, video watermark removal

- GitHub - how to use the inference_demo.py

- GitHub - Meta-Llama/llama-recipes

- GitHub - open-compass/VLMEvalKit