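I. How to use NLTK

Definition: NLTK (Natural Language Toolkit) is a Python library that applies techniques from academic linguistics to text datasets. Its core function is text processing, and it is designed for analyzing the syntax and semantics of natural language.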

Installation:

First install the nltk package from a terminal with pip install nltk, then run:

import nltk

nltk.download()

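A window pops up. Select every row you want to download, then click Download. The connection sometimes fails; retry a few times, and switch to a proxy or VPN if the network is unreliable.

You can also download on demand at runtime: when an error message names a missing resource, add nltk.download() to the code, run it, and fetch just that resource.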
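The on-demand approach can also be scripted. The following is a minimal sketch, assuming you already know the resource name from the error message. `ensure_resource` and its `downloader` parameter are hypothetical helpers introduced here for illustration; `nltk.data.find` and `nltk.download` are the library calls they rely on.

```python
import nltk


def ensure_resource(path, name, downloader=nltk.download):
    """Download `name` only if `path` is missing from the local NLTK data.

    `path` is the location inside the NLTK data directory
    (for example "tokenizers/punkt_tab"); `name` is the package identifier.
    """
    try:
        nltk.data.find(path)  # raises LookupError when the resource is missing
        return True
    except LookupError:
        return bool(downloader(name))


# Example calls (they need network access the first time they run):
# ensure_resource("tokenizers/punkt_tab", "punkt_tab")
# ensure_resource("corpora/stopwords", "stopwords")
```

Passing a name to nltk.download() skips the pop-up window, which helps on machines without a graphical display.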

1. Tokenization with the punkt_tab package

from nltk.tokenize import word_tokenize

import nltk

from nltk.tokenize import word_tokenize

# nltk.download()  # Downloads other NLTK data packages; fetch only what you need, and leave this commented out otherwise

def print_hi():
    input_str = 'Causal understanding is a defining characteristic of human cognition. The weather is bright today, suitable for going out to play.'
    tokens = word_tokenize(input_str)  # split the text into word and punctuation tokens
    print(tokens)

if __name__ == '__main__':
    print_hi()

Output:
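If punkt_tab cannot be downloaded, a regex-based tokenizer is one possible fallback. The sketch below uses `wordpunct_tokenize`, which is not part of the original walkthrough and needs no data package. Its results can differ from `word_tokenize`, for example on contractions, so treat it as a stand-in and not an equivalent.

```python
from nltk.tokenize import wordpunct_tokenize

text = "The weather is bright today, suitable for going out to play."

# wordpunct_tokenize splits on the pattern \w+|[^\w\s]+ and needs no model download.
tokens = wordpunct_tokenize(text)
print(tokens)
```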

2. Using Text

from nltk.text import Text

import nltk

from nltk.tokenize import word_tokenize

from nltk.text import Text

# nltk.download()  # Downloads other NLTK data packages; fetch only what you need

def print_hi():
    input_str = 'Causal understanding is a defining characteristic of human cognition. The weather is bright today, suitable for going out to play.'
    tokens = word_tokenize(input_str)
    tokens = [word.lower() for word in tokens]
    t = Text(tokens)
    print(t.count('is'))  # number of times 'is' occurs
    print(t.index('is'))  # index of the first occurrence of 'is'

if __name__ == '__main__':
    print_hi()

Output:

3. Filtering stop words with stopwords

from nltk.corpus import stopwords

Note: stop words are words that carry little topical meaning, such as those shown in the figure below.

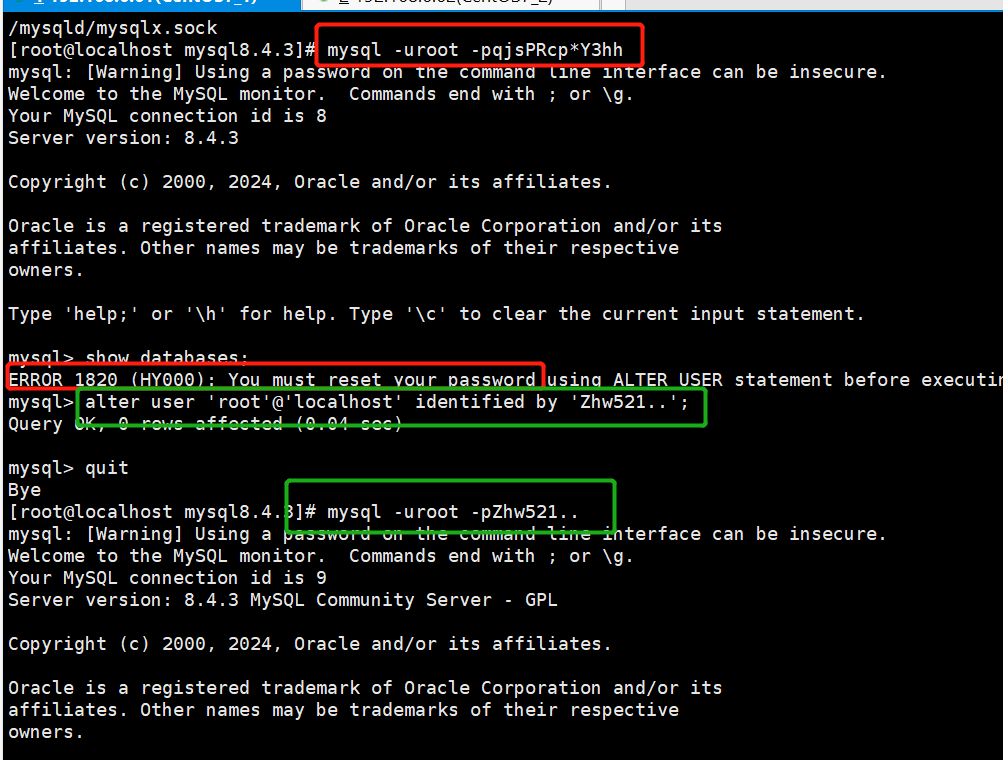

Download the required data package before running the program.

Print the stop words in a sentence

import nltk

from nltk.tokenize import word_tokenize

from nltk.corpus import stopwords

# nltk.download()  # Downloads other NLTK data packages; fetch only what you need

def print_hi():
    input_str = 'Causal understanding is a defining characteristic of human cognition. The weather is bright today, suitable for going out to play.'
    tokens = word_tokenize(input_str)
    tokens = [word.lower() for word in tokens]  # lower() converts each token to lowercase
    tokens_set = set(tokens)  # set() builds an unordered collection of unique elements; it removes duplicates and supports intersection, difference, and union
    print(tokens_set.intersection(set(stopwords.words('english'))))  # intersection() keeps the tokens that are also stop words

if __name__ == '__main__':
    print_hi()

Output:

Filter out the stop words

def print_hi():
    input_str = 'Causal understanding is a defining characteristic of human cognition. The weather is bright today, suitable for going out to play.'
    tokens = word_tokenize(input_str)
    tokens = [word.lower() for word in tokens]
    tokens_set = set(tokens)
    stop_words = set(stopwords.words('english'))  # build the stop-word set once instead of reloading the list for every token
    filtered = [word for word in tokens_set if word not in stop_words]
    print(filtered)

Output:
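Because the filter runs over a set, the result loses the original word order and any repeated words. If order matters, a list-based filter is one option. The sketch below is an illustration only: `STOP_WORDS` is a small hand-written stand-in for `set(stopwords.words('english'))` so the example runs without a download, and `remove_stop_words` is a hypothetical helper name.

```python
# Hypothetical stand-in for set(stopwords.words('english')); the real list is much longer.
STOP_WORDS = {"is", "a", "of", "the", "for", "to", "out"}


def remove_stop_words(tokens, stop_words=STOP_WORDS):
    """Return the tokens in their original order with stop words removed."""
    return [tok for tok in tokens if tok.lower() not in stop_words]


tokens = "the weather is bright today suitable for going out to play".split()
filtered = remove_stop_words(tokens)
print(filtered)
```

Swapping in the real NLTK list only requires passing `set(stopwords.words('english'))` as the second argument.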

4. Part-of-speech (POS) tagging

from nltk import pos_tag

import nltk

from nltk.tokenize import word_tokenize

from nltk import pos_tag

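# nltk.download()  # Downloads other NLTK data packages; fetch only what you need

def print_hi():
    input_str = 'The weather is bright today, suitable for going out to play.'
    tokens = word_tokenize(input_str)
    tokens = [word.lower() for word in tokens]
    tags = pos_tag(tokens)  # list of (word, tag) pairs
    print(tags)

if __name__ == '__main__':
    print_hi()

Common tags: NN (noun), VB (verb, base form), VBZ (verb, third-person singular present), VBD (verb, past tense), VBG (verb, gerund or present participle), JJ (adjective), DT (determiner, e.g. the, a, an), IN (preposition).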
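Since related tags share a prefix (VB, VBZ, VBD, VBG all start with VB), one simple way to pick out a word class is to filter on that prefix. The sketch below works on hand-written (word, tag) pairs in the format `pos_tag` returns; they are not actual tagger output, and `words_with_tag_prefix` is a hypothetical helper.

```python
# Hand-written (word, tag) pairs in the format pos_tag returns; not actual tagger output.
tagged = [('the', 'DT'), ('little', 'JJ'), ('yellow', 'JJ'), ('dog', 'NN'), ('died', 'VBD')]


def words_with_tag_prefix(tagged_tokens, prefix):
    """Return the words whose tag starts with `prefix`, e.g. 'VB' for all verb forms."""
    return [word for word, tag in tagged_tokens if tag.startswith(prefix)]


nouns = words_with_tag_prefix(tagged, 'NN')
verbs = words_with_tag_prefix(tagged, 'VB')
adjectives = words_with_tag_prefix(tagged, 'JJ')
print(nouns, verbs, adjectives)
```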

5. Chunking

import nltk

from nltk.chunk import RegexpParser

from nltk import pos_tag

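# nltk.download()  # Downloads other NLTK data packages; fetch only what you need

def print_hi():
    sentence = [('the', 'DT'), ('little', 'JJ'), ('yellow', 'JJ'), ('dog', 'NN'), ('died', 'VBD')]
    # Rule for the MY_CT chunk: an optional determiner, any number of adjectives, then a noun.
    # ? and * are regex-style quantifiers (? = zero or one, * = zero or more), not arbitrary separators;
    # a literal character such as & between the tags would prevent the rule from matching.
    grammar = "MY_CT: {<DT>?<JJ>*<NN>}"
    cp = RegexpParser(grammar)  # build the chunk parser from the rule
    out = cp.parse(sentence)  # chunk the tagged sentence
    print(out)
    out.draw()  # opens a Tkinter window showing the tree (needs a graphical display)

if __name__ == '__main__':
    print_hi()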
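To work with the chunks programmatically instead of drawing them, you can walk the resulting tree. The following is a minimal sketch, assuming a rule in which `?` marks an optional tag and `*` marks zero or more repetitions. The `phrases` variable is introduced here for illustration.

```python
from nltk.chunk import RegexpParser

sentence = [('the', 'DT'), ('little', 'JJ'), ('yellow', 'JJ'), ('dog', 'NN'), ('died', 'VBD')]

# Optional determiner, any number of adjectives, then one noun.
parser = RegexpParser("MY_CT: {<DT>?<JJ>*<NN>}")
tree = parser.parse(sentence)

# Collect the words of every subtree labelled MY_CT.
phrases = [
    ' '.join(word for word, _tag in subtree.leaves())
    for subtree in tree.subtrees(filter=lambda t: t.label() == 'MY_CT')
]
print(phrases)
```

This needs no data download, because the parser works directly on already tagged tokens.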

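6. Named entity recognition

If the required data packages have not been downloaded, NLTK raises a LookupError that names the missing resource. In that case, add nltk.download() to the code, run it again, and download the package named in the message.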

import nltk

from nltk.tokenize import word_tokenize

from nltk import pos_tag

from nltk import ne_chunk

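# nltk.download()  # Downloads other NLTK data packages; fetch only what you need

def print_hi():
    sentence = "Edison went to Tsinghua university today."
    # Tokenize, tag each token, then group the tagged tokens into named-entity chunks.
    print(ne_chunk(pos_tag(word_tokenize(sentence))))

if __name__ == '__main__':
    print_hi()

Output: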
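`ne_chunk` returns a tree in which each recognized entity is a labelled subtree. The sketch below shows one way to pull entities out of such a tree. The tree is built by hand so the example runs without any downloads; its labels are illustrative and are not real model output. `extract_entities` is a hypothetical helper.

```python
from nltk.tree import Tree

# Hand-built tree in the shape ne_chunk returns; the labels are illustrative only.
tree = Tree('S', [
    Tree('PERSON', [('Edison', 'NNP')]),
    ('went', 'VBD'),
    ('to', 'TO'),
    Tree('ORGANIZATION', [('Tsinghua', 'NNP'), ('university', 'NN')]),
    ('today', 'NN'),
    ('.', '.'),
])


def extract_entities(chunked):
    """Return (label, text) pairs for every entity subtree directly under the root."""
    entities = []
    for node in chunked:
        if isinstance(node, Tree):  # plain (word, tag) tuples are not entities
            text = ' '.join(word for word, _tag in node.leaves())
            entities.append((node.label(), text))
    return entities


entities = extract_entities(tree)
print(entities)
```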

II. Data cleaning example

import re

import nltk

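# nltk.download()  # Downloads other NLTK data packages; fetch only what you need

def print_hi():
    # '\\St.' keeps the literal backslash from the raw data without an invalid-escape warning.
    sentence = "Jane Smith,jane_smith #Test @example.net,https://tem 456 Elm \\St., , ,,bob@example.com, & 1234567890,Sam O'Connor, 35,, (123) 456-7890, 789 ,"
    print('Original data:', sentence, '\n')
    # Replace each ',', each '#' plus the word characters after it, and each '&' plus the word characters after it with one space.
    without_special_entities = re.sub(r',|#\w*|&\w*', ' ', sentence)
    print('Data without special symbols:', without_special_entities, '\n')
    # Collapse every run of one or more whitespace characters into a single space.
    without_whitespace = re.sub(r'\s+', ' ', without_special_entities)
    print('Data without extra whitespace:', without_whitespace, '\n')
    # Pattern breakdown (a variant that also requires a dot-separated part: https*:\/\/\w*\.\w*):
    #   https*  matches 'http' followed by zero or more 's', so both 'http' and 'https' match
    #   :\/\/   matches the literal '://'
    #   \w*     matches the word characters after '://', however many there are (* means zero or more)
    without_http = re.sub(r'https*:\/\/\w*', '', without_whitespace)
    print('Data without the incomplete https URL:', without_http, '\n')

if __name__ == '__main__':
    print_hi()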
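The difference between `*` and `?` after the `s` is easy to check directly. The sketch below compares the two quantifiers on a few hand-made samples; the names `loose`, `strict`, and `samples` are introduced here for illustration.

```python
import re

loose = re.compile(r'https*://\w*')   # 's*': zero or more 's'
strict = re.compile(r'https?://\w*')  # 's?': zero or one 's'

samples = ['http://tem', 'https://tem', 'httpsss://tem']
# Each row: (sample, matched by loose pattern, matched by strict pattern)
results = [(s, bool(loose.fullmatch(s)), bool(strict.fullmatch(s))) for s in samples]
for row in results:
    print(row)

# Remove the URL with the strict pattern, then collapse the leftover double space.
text = 'Jane https://tem 456 Elm'
cleaned = re.sub(r'\s+', ' ', strict.sub('', text))
print(cleaned)
```

Both patterns handle ordinary http and https links; only the `*` version also accepts a malformed scheme such as httpsss, so `https?` is the tighter choice when that matters.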
