Error messages

/07/22 10:20:28 WARN DFSClient: Error Recovery for block BP-888461729-172.16.34.148-1397820377004:blk_15089246483_16183344527 in pipeline 172.16.34.64:50010, 172.16.34.223:50010: bad datanode 172.16.34.64:50010 [DataStreamer for file /bdp/data/u9083189ae0349dbaf8f2f2ee3351579_temp/.hive-staging_hive_2022-07-22_10-16-47_909_6320972307505128897-167/-ext-10000/_temporary/0/_temporary/attempt_20220722101347_2794_m_000034_0/part-00034-c0b2f98a-7049-4a5d-bcf3-b3042cd16645-c000 block BP-888461729-172.16.34.148-1397820377004:blk_15089246483_16183344527]

22/07/22 10:21:07 WARN DFSClient: Slow ReadProcessor read fields took 51412ms (threshold=30000ms); ack: seqno: 10 status: SUCCESS status: SUCCESS downstreamAckTimeNanos: 1027966, targets: [172.16.34.64:50010, 172.16.34.95:50010] [ResponseProcessor for block BP-888461729-172.16.34.148-1397820377004:blk_15089250682_16183349712]

22/07/22 10:22:18 WARN DFSClient: DFSOutputStream ResponseProcessor exception for block BP-888461729-172.16.34.148-1397820377004:blk_15089250682_16183349712 [ResponseProcessor for block BP-888461729-172.16.34.148-1397820377004:blk_15089250682_16183349712]

java.io.EOFException: Premature EOF: no length prefix available
    at org.apache.hadoop.hdfs.protocolPB.PBHelper.vintPrefixed(PBHelper.java:2207)
    at org.apache.hadoop.hdfs.protocol.datatransfer.PipelineAck.readFields(PipelineAck.java:176)
    at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer$ResponseProcessor.run(DFSOutputStream.java:867)

22/07/22 10:23:09 WARN DFSClient: DataStreamer Exception [DataStreamer for file /bdp/data/9ca8f3a33ce543909594541286116aec_temp/.hive-staging_hive_2022-07-22_10-19-47_845_1102463989488675558-143/-ext-10000/_temporary/0/_temporary/attempt_20220722101658_2821_m_000049_0/part-00049-b0b65b5f-f8f0-4b60-9e72-04dde1343ede-c000 block BP-888461729-172.16.34.148-1397820377004:blk_15089250682_16183349712]

java.io.IOException: Broken pipe
    at sun.nio.ch.FileDispatcherImpl.write0(Native Method)
    at sun.nio.ch.SocketDispatcher.write(SocketDispatcher.java:47)
    at sun.nio.ch.IOUtil.writeFromNativeBuffer(IOUtil.java:93)
    at sun.nio.ch.IOUtil.write(IOUtil.java:65)
    at sun.nio.ch.SocketChannelImpl.write(SocketChannelImpl.java:471)
    at org.apache.hadoop.net.SocketOutputStream$Writer.performIO(SocketOutputStream.java:63)
    at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:142)
    at org.apache.hadoop.net.SocketOutputStream.write(SocketOutputStream.java:159)
    at org.apache.hadoop.net.SocketOutputStream.write(SocketOutputStream.java:117)
    at java.io.BufferedOutputStream.write(BufferedOutputStream.java:122)
    at java.io.DataOutputStream.write(DataOutputStream.java:107)
    at org.apache.hadoop.hdfs.DFSOutputStream$Packet.writeTo(DFSOutputStream.java:321)
    at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:643)

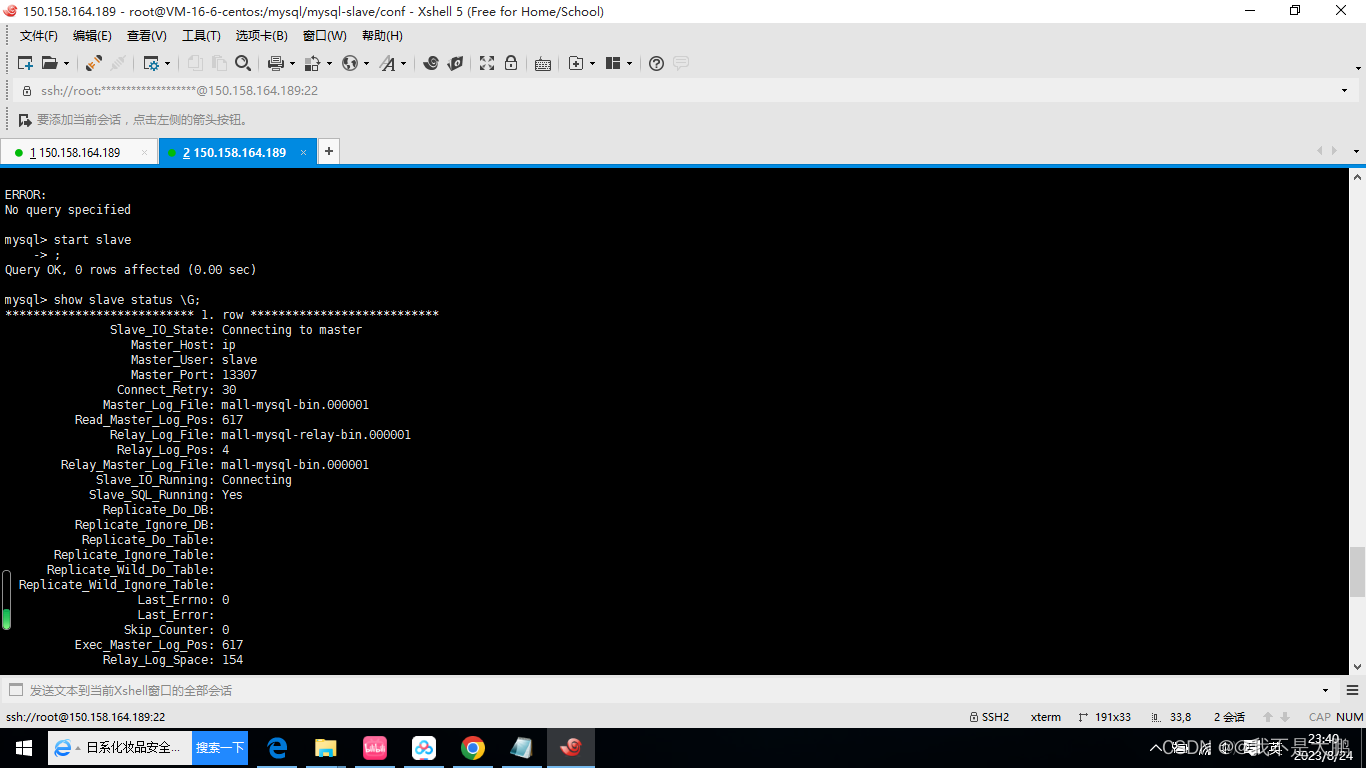

22/07/22 10:23:09 WARN DFSClient: Error Recovery for block BP-888461729-172.16.34.148-1397820377004:blk_15089250682_16183349712 in pipeline 172.16.34.64:50010, 172.16.34.95:50010: bad datanode 172.16.34.64:50010 [DataStreamer for file /bdp/data/9ca8f3a33ce543909594541286116aec_temp/.hive-staging_hive_2022-07-22_10-19-47_845_1102463989488675558-143/-ext-10000/_temporary/0/_temporary/attempt_20220722101658_2821_m_000049_0/part-00049-b0b65b5f-f8f0-4b60-9e72-04dde1343ede-c000 block BP-888461729-172.16.34.148-1397820377004:blk_15089250682_16183349712]

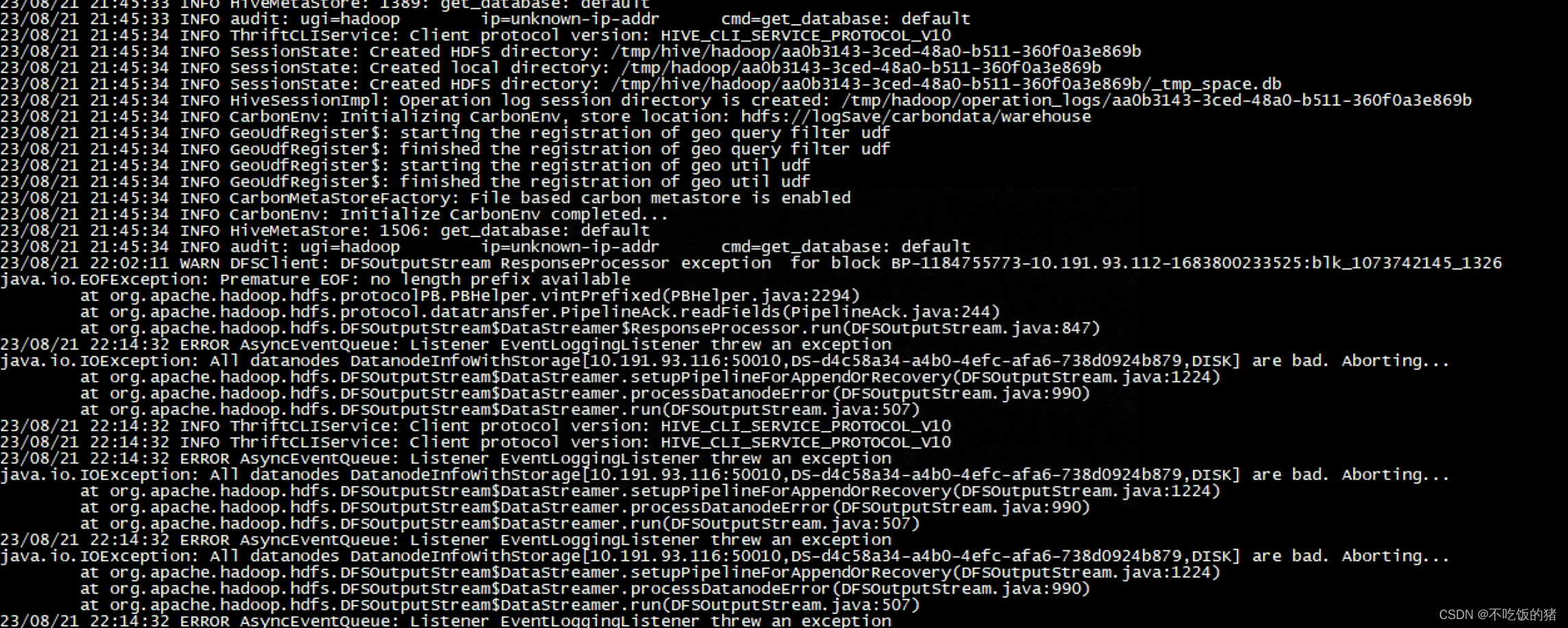

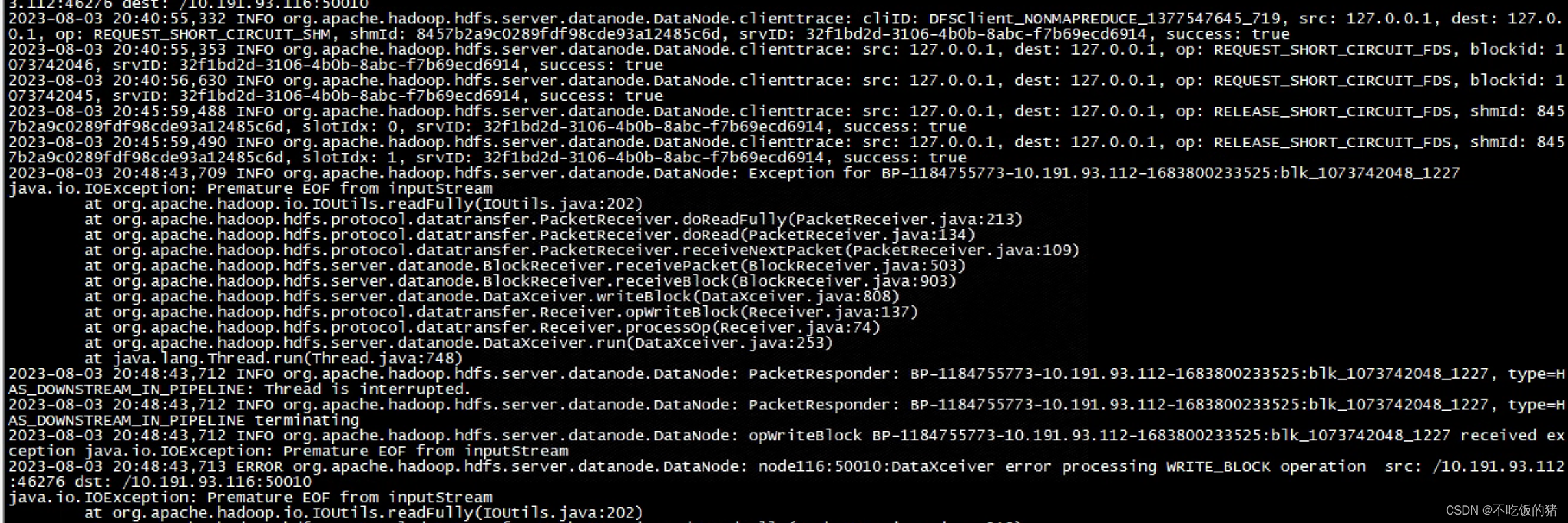
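Both "Error Recovery" warnings above name the same node, 172.16.34.64:50010, as the bad datanode. To see whether one node dominates across a whole client log, the warnings can be tallied. The following is a minimal sketch and not part of the original investigation: the function name `count_bad_datanodes` is hypothetical, and the sample lines are abbreviated copies of the two warnings above.

```python
import re
from collections import Counter

# Matches the "bad datanode <ip>:<port>" fragment of DFSClient warnings.
BAD_DN = re.compile(r"bad datanode (\d+\.\d+\.\d+\.\d+:\d+)")


def count_bad_datanodes(lines):
    """Count how often each datanode is reported as bad (hypothetical helper)."""
    counts = Counter()
    for line in lines:
        for addr in BAD_DN.findall(line):
            counts[addr] += 1
    return counts


# Abbreviated sample lines; the full lines appear in the log excerpt above.
SAMPLE = [
    "WARN DFSClient: Error Recovery for block blk_15089246483_16183344527 "
    "in pipeline 172.16.34.64:50010, 172.16.34.223:50010: "
    "bad datanode 172.16.34.64:50010",
    "WARN DFSClient: Error Recovery for block blk_15089250682_16183349712 "
    "in pipeline 172.16.34.64:50010, 172.16.34.95:50010: "
    "bad datanode 172.16.34.64:50010",
]

for addr, n in count_bad_datanodes(SAMPLE).most_common():
    print(addr, n)
```

If a single address accounts for most of the counts, that node is a reasonable place to start looking at DataNode logs, network, and disks.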

Check the DataNode log on node 116

2022-07-21 12:04:27,437 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Exception for BP-888461729-172.16.34.148-1397820377004:blk_15087313175_16181097975

java.io.IOException: Premature EOF from inputStream
    at org.apache.hadoop.io.IOUtils.readFully(IOUtils.java:194)
    at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doReadFully(PacketReceiver.java:213)
    at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doRead(PacketReceiver.java:134)
    at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.receiveNextPacket(PacketReceiver.java:109)
    at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receivePacket(BlockReceiver.java:496)
    at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receiveBlock(BlockReceiver.java:889)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.writeBlock(DataXceiver.java:757)
    at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.opWriteBlock(Receiver.java:137)
    at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.processOp(Receiver.java:74)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.run(DataXceiver.java:239)
    at java.lang.Thread.run(Thread.java:745)

2022-07-21 12:04:27,438 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: opWriteBlock BP-888461729-172.16.34.148-1397820377004:blk_15087313175_16181097975 received exception java.io.IOException: Premature EOF from inputStream

2022-07-21 12:04:27,438 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: bdp-171:50010:DataXceiver error processing WRITE_BLOCK operation src: /172.16.34.214:9107 dst: /172.16.34.214:50010

java.io.IOException: Premature EOF from inputStream
    at org.apache.hadoop.io.IOUtils.readFully(IOUtils.java:194)
    at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doReadFully(PacketReceiver.java:213)
    at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doRead(PacketReceiver.java:134)
    at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.receiveNextPacket(PacketReceiver.java:109)
    at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receivePacket(BlockReceiver.java:496)
    at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receiveBlock(BlockReceiver.java:889)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.writeBlock(DataXceiver.java:757)
    at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.opWriteBlock(Receiver.java:137)
    at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.processOp(Receiver.java:74)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.run(DataXceiver.java:239)
    at java.lang.Thread.run(Thread.java:745)

While looking at how others had investigated this error, I found the following article:

https://stackoverflow.com/questions/32060049/hadoop-mapreduce-job-i-o-exception-due-to-premature-eof-from-inputstream

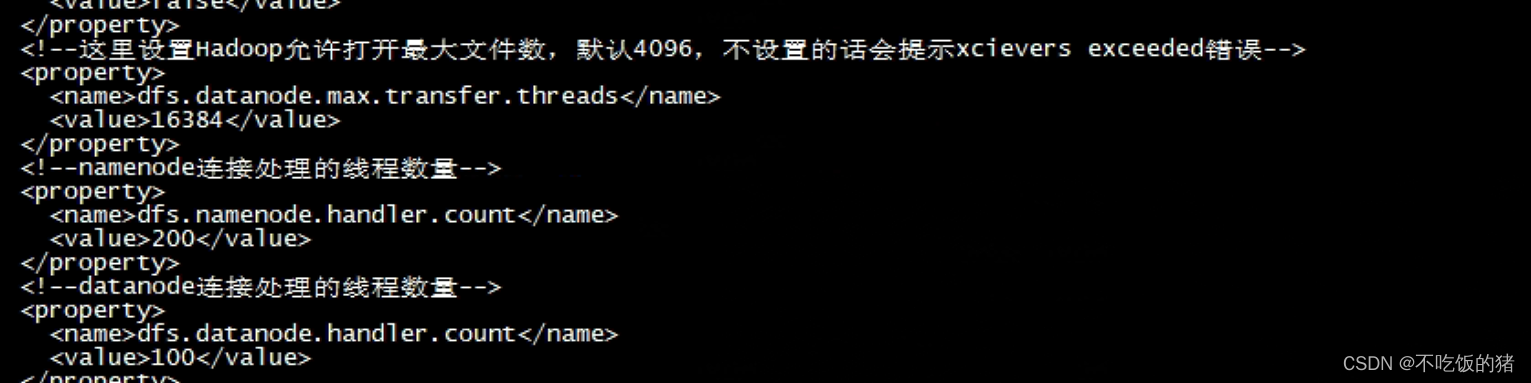

Checking the related parameters

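I checked for packet loss and found none, and disk speed also looked normal. I have not found the root cause yet and will update this post once I do.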
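On a Linux host, one place to look for packet loss is the per-interface error and drop counters in `/proc/net/dev`. The sketch below shows how such a check could be scripted; it is an assumption about one possible approach, not the method actually used above. The function names and the sample text are hypothetical.

```python
def parse_net_dev(text):
    """Parse /proc/net/dev content into per-interface error/drop counters.

    After the interface name, each line carries 8 receive fields
    (bytes, packets, errs, drop, ...) followed by 8 transmit fields
    in the same order.
    """
    stats = {}
    for line in text.splitlines()[2:]:  # skip the two header lines
        if ":" not in line:
            continue
        name, data = line.split(":", 1)
        fields = data.split()
        if len(fields) < 16:
            continue
        nums = [int(x) for x in fields]
        stats[name.strip()] = {
            "rx_errs": nums[2],
            "rx_drop": nums[3],
            "tx_errs": nums[10],
            "tx_drop": nums[11],
        }
    return stats


def read_net_dev(path="/proc/net/dev"):
    """Read and parse the live counters (Linux only)."""
    with open(path) as f:
        return parse_net_dev(f.read())


# Hypothetical sample in the /proc/net/dev layout, for illustration only.
SAMPLE = """\
Inter-|   Receive                                                |  Transmit
 face |bytes    packets errs drop fifo frame compressed multicast|bytes    packets errs drop fifo colls carrier compressed
    lo: 1000 10 0 0 0 0 0 0 1000 10 0 0 0 0 0 0
  eth0: 5000 50 1 2 0 0 0 0 4000 40 3 4 0 0 0 0
"""

for iface, counters in parse_net_dev(SAMPLE).items():
    print(iface, counters)
```

The counters are cumulative since boot, so a single reading says little. Taking two readings a few minutes apart while the job is writing, and comparing them, shows whether drops are increasing during the failure window.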