Js去除视频背景

注: 这里的去除视频背景并不是对视频文件进行操作去除背景

如果需要对视频扣除背景并导出可以使用ffmpeg等库,这里仅作播放用所以采用这种方法

由于uniapp中的canvas经过封装,且 uniapp 的

drawImage无法绘制视频帧画面,因此uniapp中不适用

实现过程是将视频使用canvas逐帧截下来对截取的图片进行处理,然后在canvas中显示处理好的图片

最后通过定时器高速处理替换,形成视频播放的效果,效果如下图⬇

边缘仍然会有些绿幕的像素,可以通过其他的处理进行优化

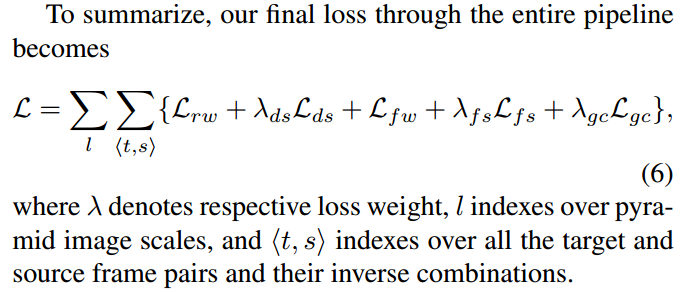

原理

首先使用canvas的 drawImage 方法将video的当前帧画面绘制到canvas中

然后再通过 getImageData 方法获取当前canvas的所有像素的rgba值组成的数组

获取到的值为[r,g,b,a,r,g,b,a,...],每一组rgba的值就是一个像素,所以获取到的数组长度是canvas的像素的数量 * 4

通过判断每一组rgb的值是否为绿幕像素,然后设置其透明通道的alpha的值为0实现效果

代码

因为canvas会受到跨域的影响导致画布被污染,因此首先需要将测试视频下载到本地

如果直接本地打开html的话同样会因为本地路径报跨域错误,需要将html,js,测试视频放在文件夹中部署一个本地服务器

可以使用http-server

npm i http-server -g# 切换到存放html,js,测试视频的文件夹 运行命令即可部署本地服务器http-server

或者

vsCode的Live server插件均可

测试视频 地址

<!DOCTYPE html>

<html lang="en"><head><style>video{width: 480px;height: 270px;}</style></head><body><video id="video" src="./63e1dd7ddd2b0.mp4" loop autoplay muted></video><canvas id="output-canvas" width="480" height="270" willReadFrequently="true"></canvas><script type="text/javascript" src="processor2.js"></script></body>

</html>

// processor2.jslet video, canvas, ctx, canvas_tmp, ctx_tmp;function init () {video = document.getElementById('video');canvas = document.getElementById('output-canvas');ctx = canvas.getContext('2d');// 创建的canvas宽高最好与显示图片的canvas、video宽高一致canvas_tmp = document.createElement('canvas');canvas_tmp.setAttribute('width', 480);canvas_tmp.setAttribute('height', 270);ctx_tmp = canvas_tmp.getContext('2d');video.addEventListener('play', computeFrame);

}function computeFrame () {if (video) {if (video.paused || video.ended) return;}// 如果视频比例和canvas比例不正确可能会出现显示形变, 调整除的值进行比例调整ctx_tmp.drawImage(video, 0, 0, video.clientWidth / 1, video.clientHeight / 1);// 获取到绘制的canvas的所有像素rgba值组成的数组let frame = ctx_tmp.getImageData(0, 0, video.clientWidth, video.clientHeight);// 共有多少像素点const pointLens = frame.data.length / 4;for (let i = 0; i < pointLens; i++) {let r = frame.data[i * 4];let g = frame.data[i * 4 + 1];let b = frame.data[i * 4 + 2];// 判断如果rgb值在这个范围内则是绿幕背景,设置alpha值为0 // 同理不同颜色的背景调整rgb的判断范围即可if (r < 100 && g > 120 && b < 200) {frame.data[i * 4 + 3] = 0;}}// 重新绘制到canvas中显示ctx.putImageData(frame, 0, 0);// 递归调用setTimeout(computeFrame, 0);

}document.addEventListener("DOMContentLoaded", () => {init();

});

使用本地服务器访问html即可看到效果,可以看到边缘仍有绿色像素闪烁

一般情况这种就可以了,使用算法进行处理的话效果会更好,但相应的资源的消耗也会提升,造成帧率下降

下面展示通过一些算法进行羽化和颜色过渡

羽化

// 返回canvas中第num个像素点所在的坐标 12 -> [1, 12]

function numToPoint (num, width) {let col = num % width;let row = Math.floor(num / width);row = col === 0 ? row : row + 1;col = col === 0 ? width : col;return [row, col];

}// 返回canvas中所在坐标的num(index + 1)值 [1, 12] -> 12

function pointToNum (point, width) {let [row, col] = point;return (row - 1) * width + col

}// 获取输入的坐标周围1像素内的所有像素的坐标组成的数组 [1, 1] -> [[1, 2], [2, 1], [2, 2]]

function getAroundPoint (point, width, height, area) {let [row, col] = point;let allAround = [];if (row > height || col > width || row < 0 || col < 0) return allAround;for (let i = 0; i < area; i++) {let pRow = row - 1 + i;for (let j = 0; j < area; j++) {let pCol = col - 1 + j;if (i === area % 2 && j === area % 2) continue;allAround.push([pRow, pCol]);}}return allAround.filter(([iRow, iCol]) => {return (iRow > 0 && iCol > 0) && (iRow <= height && iCol <= width);})

}

通过上面的函数获取到一个选定的不透明的像素周围的像素后,判断周围的像素的alpha值

如果周围的像素有存在透明的像素,则重新计算选定像素的alpha值

颜色过渡

计算修改alpha值连带计算周围像素中rgb的各项平均值给选定像素

最终处理结果如下

代码

// 新增羽化和颜色过渡// processor2.js

let video, canvas, ctx, canvas_tmp, ctx_tmp;function init () {video = document.getElementById('video');canvas = document.getElementById('output-canvas');ctx = canvas.getContext('2d');// 创建的canvas宽高最好与显示图片的canvas、video宽高一致canvas_tmp = document.createElement('canvas');canvas_tmp.setAttribute('width', 480);canvas_tmp.setAttribute('height', 270);ctx_tmp = canvas_tmp.getContext('2d');video.addEventListener('play', computeFrame);

}function numToPoint (num, width) {let col = num % width;let row = Math.floor(num / width);row = col === 0 ? row : row + 1;col = col === 0 ? width : col;return [row, col];

}function pointToNum (point, width) {let [row, col] = point;return (row - 1) * width + col

}function getAroundPoint (point, width, height, area) {let [row, col] = point;let allAround = [];if (row > height || col > width || row < 0 || col < 0) return allAround;for (let i = 0; i < area; i++) {let pRow = row - 1 + i;for (let j = 0; j < area; j++) {let pCol = col - 1 + j;if (i === area % 2 && j === area % 2) continue;allAround.push([pRow, pCol]);}}return allAround.filter(([iRow, iCol]) => {return (iRow > 0 && iCol > 0) && (iRow <= height && iCol <= width);})

}function computeFrame () {if (video) {if (video.paused || video.ended) return;}ctx_tmp.drawImage(video, 0, 0, video.clientWidth, video.clientHeight);let frame = ctx_tmp.getImageData(0, 0, video.clientWidth, video.clientHeight);//----- emergence ----------const height = frame.height;const width = frame.width;const pointLens = frame.data.length / 4;for (let i = 0; i < pointLens; i++) {let r = frame.data[i * 4];let g = frame.data[i * 4 + 1];let b = frame.data[i * 4 + 2];if (r < 150 && g > 200 && b < 150) {frame.data[i * 4 + 3] = 0;}}const tempData = [...frame.data]for (let i = 0; i < pointLens; i++) {if (frame.data[i * 4 + 3] === 0) continueconst currentPoint = numToPoint(i + 1, width);const arroundPoint = getAroundPoint(currentPoint, width, height, 3);let opNum = 0;let rSum = 0;let gSum = 0;let bSum = 0;arroundPoint.forEach((position) => {const index = pointToNum(position, width);rSum = rSum + tempData[(index - 1) * 4];gSum = gSum + tempData[(index - 1) * 4 + 1];bSum = bSum + tempData[(index - 1) * 4 + 2];if (tempData[(index - 1) * 4 + 3] !== 255) opNum++;})let alpha = (255 / arroundPoint.length) * (arroundPoint.length - opNum);if (alpha !== 255) {// debuggerframe.data[i * 4] = parseInt(rSum / arroundPoint.length);frame.data[i * 4 + 1] = parseInt(gSum / arroundPoint.length);frame.data[i * 4 + 2] = parseInt(bSum / arroundPoint.length);frame.data[i * 4 + 3] = parseInt(alpha);}}//------------------------ctx.putImageData(frame, 0, 0);setTimeout(computeFrame, 0);

}document.addEventListener("DOMContentLoaded", () => {init();

});