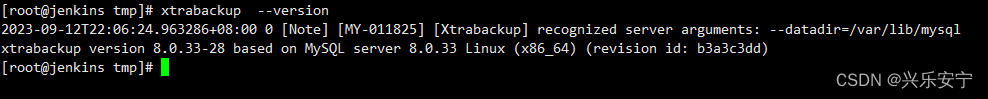

1. Install XtraBackup

cd /tmp

wget https://downloads.percona.com/downloads/Percona-XtraBackup-8.0/Percona-XtraBackup-8.0.33-28/binary/redhat/7/x86_64/percona-xtrabackup-80-8.0.33-28.1.el7.x86_64.rpm

yum -y localinstall percona-xtrabackup-80-8.0.33-28.1.el7.x86_64.rpm

xtrabackup --version
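Before moving on, it can help to confirm that the binary is actually on the PATH. The following is a minimal sketch; `require_tool` is a hypothetical helper name introduced here for illustration, not part of XtraBackup.

```shell
# Hypothetical helper: succeed only if the named command is on the PATH.
require_tool() {
    tool="$1"
    if command -v "$tool" >/dev/null 2>&1; then
        echo "$tool found at $(command -v "$tool")"
    else
        echo "$tool not found; check the installation step" >&2
        return 1
    fi
}

# Example: require_tool xtrabackup
```

The same helper can be reused later for `aws` once the AWS CLI is installed.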

2. Install the AWS CLI

2.1 Installation

yum -y install python3-pip

pip3 install awscli

2.2 Set the AWS CLI configuration values

aws_access_key_id="AKI............................" ## AWS access key ID

aws_secret_access_key="JF.................................." ## AWS secret access key

aws_default_region="sa-east-1" # Region of the S3 bucket; change it to the region you use

aws_output_format="json" # Default output format

2.3 Apply the configuration with the aws configure command

aws configure set aws_access_key_id $aws_access_key_id

aws configure set aws_secret_access_key $aws_secret_access_key

aws configure set default.region $aws_default_region

aws configure set default.output $aws_output_format
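If one of the four variables is empty, `aws configure set` receives no value to store, so a quick guard before the commands above may save some debugging. This is only a sketch; `require_vars` is a hypothetical helper, and the variable names are the ones defined in step 2.2.

```shell
# Hypothetical helper: fail if any of the named variables is empty or unset.
require_vars() {
    for name in "$@"; do
        # Look up the variable whose name is stored in $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "missing value for $name" >&2
            return 1
        fi
    done
}

# Example:
# require_vars aws_access_key_id aws_secret_access_key aws_default_region aws_output_format
```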

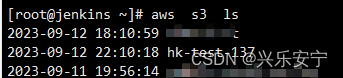

2.4 Verify the configuration

aws s3 ls
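`aws s3 ls` lists every bucket the credentials can see. To check the one bucket the backup will use, a wrapper along these lines could be used; `check_bucket` is a hypothetical helper and the bucket name is whatever you pass in.

```shell
# Hypothetical helper: report whether the configured credentials
# can list the given bucket.
check_bucket() {
    bucket="$1"
    if aws s3 ls "s3://$bucket/" >/dev/null 2>&1; then
        echo "OK: can list s3://$bucket/"
    else
        echo "FAILED: cannot list s3://$bucket/" >&2
        return 1
    fi
}

# Example: check_bucket your-aws-bucket-name
```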

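3. Grant backup privileges to the MySQL user

Run this from an ordinary shell; there is no need to log in to the database first.

# Define a shell function that performs the grant

check() {
    mysql=/opt/lucky/apps/mysql/bin/mysql
    sock=/opt/lucky/data/data_16303/mysql.sock
    passwd='123456' # Change to your local MySQL password
    sql_command="GRANT BACKUP_ADMIN ON *.* TO 'root'@'localhost'; FLUSH PRIVILEGES;"
    "$mysql" -uroot -p"$passwd" -S "$sock" -e "$sql_command"
}

## Run the grant function

check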
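To confirm that the grant took effect, the output of `SHOW GRANTS` can be searched for the privilege name. A minimal sketch, assuming the same binary path, socket, and password as in the function above; `has_backup_admin` is a hypothetical helper.

```shell
# Hypothetical helper: read SHOW GRANTS output on stdin and succeed
# if any line mentions the BACKUP_ADMIN privilege.
has_backup_admin() {
    grep -q 'BACKUP_ADMIN'
}

# Assumed usage, with the paths and password from the grant function:
# /opt/lucky/apps/mysql/bin/mysql -uroot -p'123456' \
#     -S /opt/lucky/data/data_16303/mysql.sock \
#     -N -e "SHOW GRANTS FOR 'root'@'localhost'" \
#     | has_backup_admin && echo "BACKUP_ADMIN granted"
```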

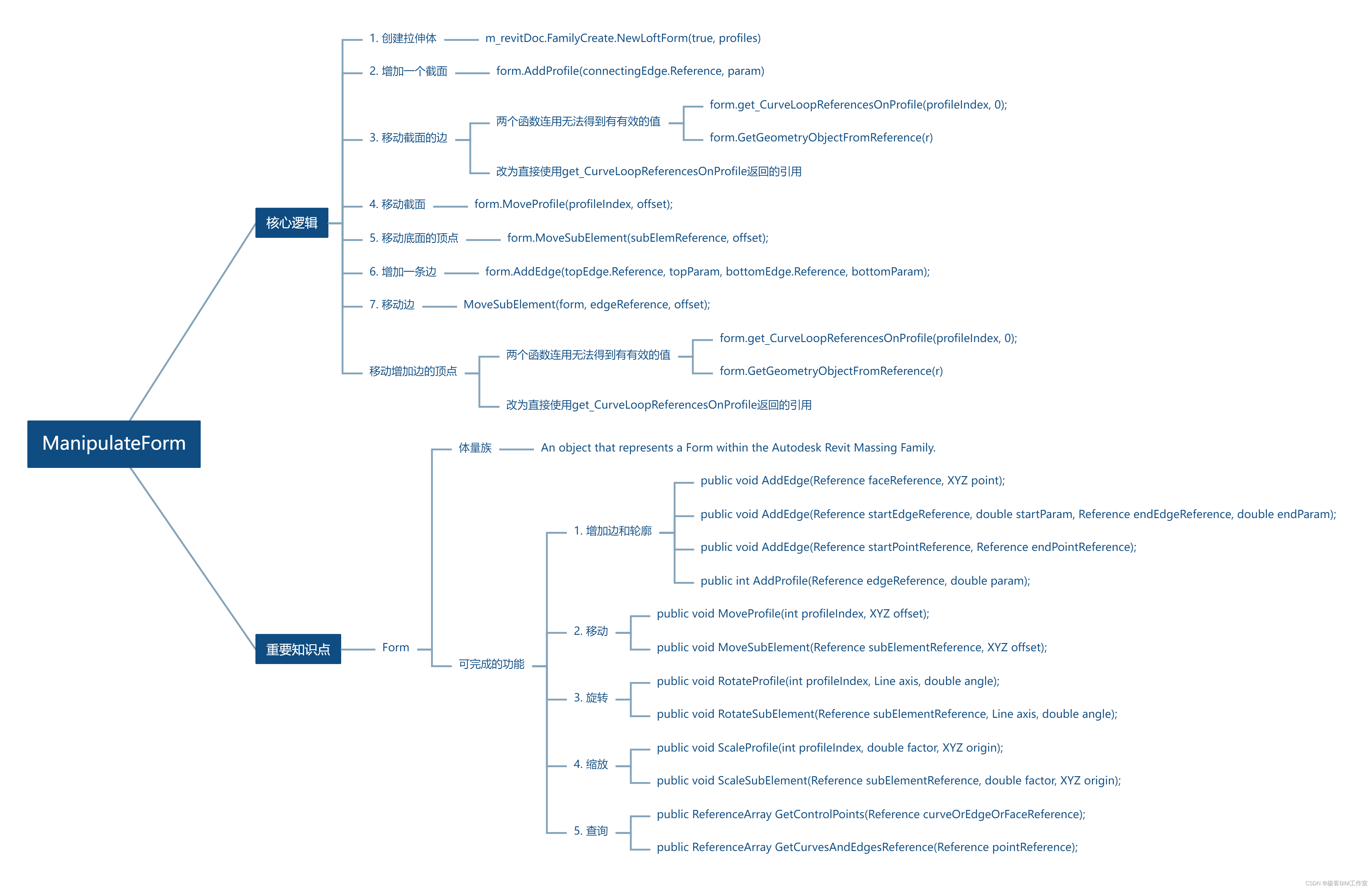

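4. Write the backup script

4.1 Create a directory for the backup script

Two values in the script need to be changed: the S3 bucket name and your MySQL password.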

mkdir -p /data/backup/

vim /data/backup/backup_script.sh

#!/bin/bash

## --------------------- start config --------------------- ##

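## Directory where xtrabackup is installed

XTRABACKUP_DIR="/usr/bin"

## Directory where backups are stored

BACKUP_DIR="/opt/backup"

## MySQL installation directory

MYSQL_DIR="/opt/lucky/apps/mysql"

## Path to the MySQL configuration file

MYSQL_CNF="/opt/lucky/conf/my_16303.cnf"

## Database user

MYSQL_USER="root"

## Database password

MYSQL_PASSWORD="your-mysql-password"

## Database port

MYSQL_PORT=16303

## Database socket file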

MYSQL_SOCK="/opt/lucky/data/data_16303/mysql.sock"

## S3 bucket name

S3_BUCKET="your-aws-bucket-name"

## --------------------- end config --------------------- ##

## Exit immediately if any command returns a non-zero status.

set -e

## Current date

CURRENT_DATE=$(date +%F)

## Current hour (24-hour clock)

CURRENT_HOUR=$(date +%H)

## Path to the AWS CLI executable: use the PATH first, then fall back to a
## filesystem search that keeps only the first executable file named aws

aws_cmd=$(command -v aws || find / -name aws \( -type f -o -type l \) -perm -u+x 2>/dev/null | head -n 1)

function full_backup(){
    ## Description: full backup of all databases with xtrabackup
    PARA1=$1
    ## Start time
    START_TIME=$(date +"%s")
    ## Current date and hour, used as the backup file name suffix
    BACKUP_SUFFIX=$(date +"%Y%m%d%H")
    if [ ! -d "$BACKUP_DIR/$PARA1/full" ]; then
        mkdir -p "$BACKUP_DIR/$PARA1/full"
        ## Full backup
        echo $(date "+%Y-%m-%d %H:%M:%S")" [Info] --> Starting full backup."
        $XTRABACKUP_DIR/xtrabackup --defaults-file=$MYSQL_CNF -u$MYSQL_USER -p$MYSQL_PASSWORD -S$MYSQL_SOCK --backup --target-dir=$BACKUP_DIR/$PARA1/full > $BACKUP_DIR/$PARA1/full/full_backup_$BACKUP_SUFFIX.log 2>&1
        echo $(date "+%Y-%m-%d %H:%M:%S")" [Info] --> Backup logs in $BACKUP_DIR/$PARA1/full/full_backup_$BACKUP_SUFFIX.log."
        echo $(date "+%Y-%m-%d %H:%M:%S")" [Info] --> Backup complete, size: $(du $BACKUP_DIR/$PARA1 --max-depth=1 -hl | grep 'full' | awk '{print $1}')."
        echo $(date "+%Y-%m-%d %H:%M:%S")" [Info] --> Backup complete, using time: "$(($(date +"%s") - $START_TIME))" seconds."
        ## Pack the full backup into an archive
        tar -zcf $BACKUP_DIR/$PARA1/full_$BACKUP_SUFFIX.tar.gz $BACKUP_DIR/$PARA1/full
        ## Upload the archive to AWS S3
        $aws_cmd s3 cp $BACKUP_DIR/$PARA1/full_$BACKUP_SUFFIX.tar.gz s3://$S3_BUCKET/
        echo $(date "+%Y-%m-%d %H:%M:%S")" [Info] --> Full backup has been compressed to $BACKUP_DIR/$PARA1/full_$BACKUP_SUFFIX.tar.gz."
        ## After a successful upload, delete the backup directory and the archive
        rm -rf $BACKUP_DIR/$PARA1/full
        rm -f $BACKUP_DIR/$PARA1/full_$BACKUP_SUFFIX.tar.gz
        echo ''
    else
        echo $(date "+%Y-%m-%d %H:%M:%S")" [Info] --> Full backup directory already exists for the current hour."
        echo ''
    fi
}

function delete_seven_days_ago(){
    ## Description: use the AWS CLI to delete backup files older than 7 days from the S3 bucket
    # Date 7 days ago
    seven_days_ago=$(date -d "7 days ago" +%Y%m%d)
    # List the files in the bucket
    files=$($aws_cmd s3 ls s3://$S3_BUCKET/)
    while read -r line; do
        # Get the file name
        file_name=$(echo "$line" | awk '{print $4}')
        # Extract the date part of the file name (full_2023091207.tar.gz -> 2023091207);
        # "|| true" keeps set -e from aborting when a name has no 10-digit stamp
        date_part=$(echo "$file_name" | grep -oE '[0-9]{10}' || true)
        # Check whether the file date is earlier than 7 days ago
        if [[ -n "$date_part" && "$date_part" < "$seven_days_ago" ]]; then
            echo "Deleting $file_name..."
            # Delete the file
            $aws_cmd s3 rm "s3://$S3_BUCKET/$file_name"
            echo "File $file_name deleted."
        fi
    done <<< "$files"
}

## Run a full backup every hour

full_backup $CURRENT_DATE

## Delete backup files older than 7 days

delete_seven_days_ago
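The retention check in the script compares a 10-digit stamp (date plus hour) with an 8-digit cutoff as strings. That works because the cutoff is a prefix of any stamp from the same day. The sketch below shows a more explicit variant of the same test, trimming the hour and comparing the days as integers; `is_expired` is a hypothetical helper, not part of the script.

```shell
# Hypothetical helper: succeed if the archive name carries a date stamp
# older than the cutoff day (YYYYMMDD).
is_expired() {
    file_name="$1"
    cutoff_day="$2"
    # full_2023091207.tar.gz -> 2023091207
    stamp=$(printf '%s\n' "$file_name" | grep -oE '[0-9]{10}' | head -n 1)
    # Names without a stamp are never treated as expired.
    [ -n "$stamp" ] || return 1
    # Drop the two hour digits, then compare the days as integers.
    [ "${stamp%??}" -lt "$cutoff_day" ]
}

# Example: is_expired full_2023091123.tar.gz "$(date -d '7 days ago' +%Y%m%d)"
```

Files stamped on the cutoff day itself are kept in both variants; only strictly older days are removed.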

4.2 Make the script executable

chmod +x /data/backup/backup_script.sh
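Because the script is pasted in through an editor, a syntax check before the first scheduled run can catch lines that were merged or truncated. A minimal sketch using `bash -n`, which parses a script without executing it; `check_syntax` is a hypothetical helper.

```shell
# Hypothetical helper: parse a script with bash without running it.
check_syntax() {
    if bash -n "$1"; then
        echo "syntax OK: $1"
    else
        echo "syntax error in $1" >&2
        return 1
    fi
}

# Example: check_syntax /data/backup/backup_script.sh
```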

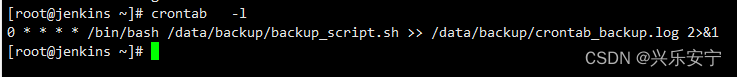

5. Schedule the cron job

0 * * * * /bin/bash /data/backup/backup_script.sh >> /data/backup/crontab_backup.log 2>&1
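The entry can be added by hand with `crontab -e`. For scripted setups, the sketch below shows one possible way to add it without creating duplicates on repeated runs; `ensure_cron_entry` is a hypothetical helper that only transforms text, and the pipeline in the comment is an assumed usage.

```shell
# Hypothetical helper: read an existing crontab on stdin and print it,
# appending the given entry only if that exact line is not already there.
ensure_cron_entry() {
    entry="$1"
    current=$(cat)
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    # -x matches whole lines, -F treats the entry as a fixed string.
    if ! printf '%s\n' "$current" | grep -qxF -- "$entry"; then
        printf '%s\n' "$entry"
    fi
}

# Assumed usage:
# ENTRY='0 * * * * /bin/bash /data/backup/backup_script.sh >> /data/backup/crontab_backup.log 2>&1'
# crontab -l 2>/dev/null | ensure_cron_entry "$ENTRY" | crontab -
```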