Versions

hadoop-3.3.6 (already installed)

mysql-8 (already installed)

hive-3.1.3

After extracting Hive into its target directory, configure it as follows:

Basic configuration and usage

1. Create $HIVE_HOME/conf/hive-site.xml

```xml
<configuration>
    <!-- JDBC connection URL -->
    <property>
        <name>javax.jdo.option.ConnectionURL</name>
        <value>jdbc:mysql://chdp01:3306/hive?useSSL=false</value>
    </property>
    <!-- JDBC driver -->
    <property>
        <name>javax.jdo.option.ConnectionDriverName</name>
        <value>com.mysql.cj.jdbc.Driver</value>
    </property>
    <!-- JDBC username -->
    <property>
        <name>javax.jdo.option.ConnectionUserName</name>
        <value>root</value>
    </property>
    <!-- JDBC password -->
    <property>
        <name>javax.jdo.option.ConnectionPassword</name>
        <value>MyPwd123@</value>
    </property>
    <!-- Hive's default working (warehouse) directory on HDFS -->
    <property>
        <name>hive.metastore.warehouse.dir</name>
        <value>/user/hive/warehouse</value>
    </property>
</configuration>
```
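A typo in one of these property names usually surfaces only later, when schematool or the metastore fails to connect to MySQL. Below is a minimal sketch in Python (standard library only). It reads a Hadoop-style `<configuration>` file and reports which of the four JDBC properties above are missing or empty. `read_properties` and `missing_properties` are hypothetical helpers, not part of Hive.

```python
import xml.etree.ElementTree as ET

# The four metastore JDBC properties used in the hive-site.xml above.
REQUIRED = [
    "javax.jdo.option.ConnectionURL",
    "javax.jdo.option.ConnectionDriverName",
    "javax.jdo.option.ConnectionUserName",
    "javax.jdo.option.ConnectionPassword",
]


def read_properties(xml_text: str) -> dict[str, str]:
    """Parse a Hadoop-style <configuration> document into a name -> value dict.

    XML comments are discarded by the default ElementTree parser.
    """
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        if name:
            props[name] = value
    return props


def missing_properties(xml_text: str, required=REQUIRED) -> list[str]:
    """Return the required property names that are absent or have an empty value."""
    props = read_properties(xml_text)
    return [name for name in required if not props.get(name)]
```

Usage, assuming the file sits in `$HIVE_HOME/conf`: `missing_properties(open("conf/hive-site.xml").read())` should return an empty list. A parse error from `ET.fromstring` is also informative. For example, extra JDBC URL parameters joined with a bare `&` must be written as `&amp;` inside XML.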

2. Download mysql-connector-j-8.1.0.jar and put it in $HIVE_HOME/lib/
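Before running schematool, it can help to confirm that exactly one MySQL driver jar is on Hive's classpath. Two different Connector/J versions in `lib/` can conflict. The sketch below makes two assumptions. It assumes the jar keeps its published file name. It also assumes older drivers follow the `mysql-connector-java-*.jar` naming used before Connector/J was renamed to `mysql-connector-j`. `find_mysql_connector` is a hypothetical helper.

```python
from pathlib import Path


def find_mysql_connector(lib_dir: str) -> list[Path]:
    """Return MySQL Connector/J jars found directly in lib_dir, sorted by path."""
    lib = Path(lib_dir)
    # Current releases are named mysql-connector-j-*.jar;
    # older releases used mysql-connector-java-*.jar.
    patterns = ("mysql-connector-j-*.jar", "mysql-connector-java-*.jar")
    found = {jar for pattern in patterns for jar in lib.glob(pattern)}
    return sorted(found)
```

For example, `find_mysql_connector(os.environ["HIVE_HOME"] + "/lib")` should return a single path ending in `mysql-connector-j-8.1.0.jar`. An empty list means the driver was not copied. More than one entry suggests removing the extra version.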

3. Initialize the metastore schema

bin/schematool -dbType mysql -initSchema -verbose

4. Start Hive

bin/hive

5. Test creating a table and inserting data

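```sql
create database sty;
use sty;

create table userInfo(
    name string,
    age int
)
partitioned by (dt string);

-- These two settings are only required for dynamic-partition inserts;
-- the insert below names its partition value statically.
set hive.exec.dynamic.partition=true;
set hive.exec.dynamic.partition.mode=nonstrict;

insert into table userInfo partition(dt='2023-10-23') values('zhangsan',23);
```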
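The `set` statements enable dynamic partitioning. Instead of a fixed `dt='...'`, Hive takes the partition value from the last column of the SELECT, for example `insert into table userInfo partition(dt) select name, age, dt from src;`, where `src` is a hypothetical source table. The Python sketch below models what that does to the table's storage layout. It groups rows by their `dt` value and maps each group to a `dt=<value>` directory under the default location `<warehouse>/<db>.db/<table>`. The table name is lowercased, and the partition column is dropped from the stored rows because its value lives only in the directory name. This is a conceptual model only. `partition_dirs` and the sample rows are hypothetical, and Hive's escaping of special characters in partition values is ignored.

```python
from collections import defaultdict


def partition_dirs(rows, warehouse, db, table, part_col):
    """Group rows by partition value and map each group to its partition directory.

    Conceptual model of a dynamic-partition insert: each distinct value of
    part_col becomes <warehouse>/<db>.db/<table>/<part_col>=<value>, and the
    partition column itself is not stored in the data rows.
    """
    groups = defaultdict(list)
    base = f"{warehouse.rstrip('/')}/{db.lower()}.db/{table.lower()}"
    for row in rows:
        path = f"{base}/{part_col}={row[part_col]}"
        groups[path].append({k: v for k, v in row.items() if k != part_col})
    return dict(groups)
```

With `nonstrict` mode every partition column may be dynamic. In the default `strict` mode, at least one partition column must be given a static value.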

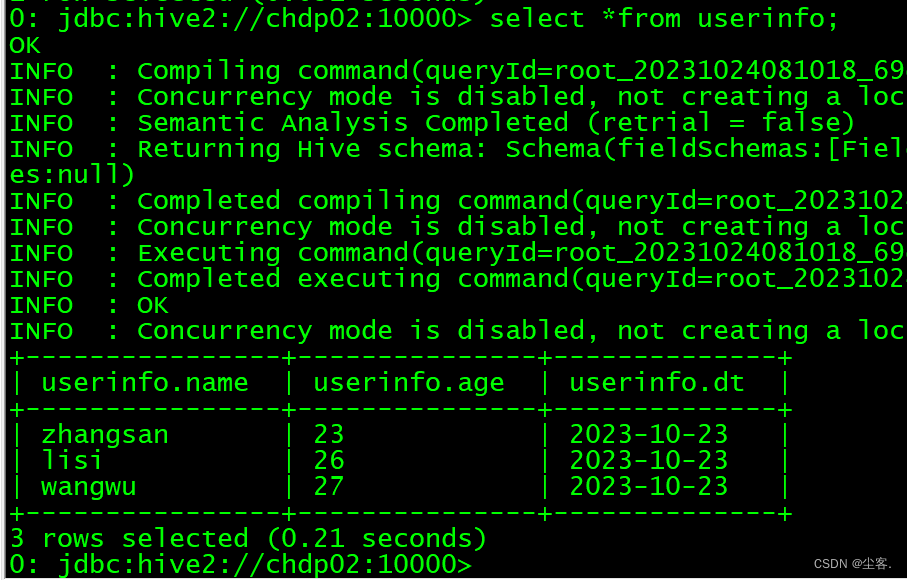

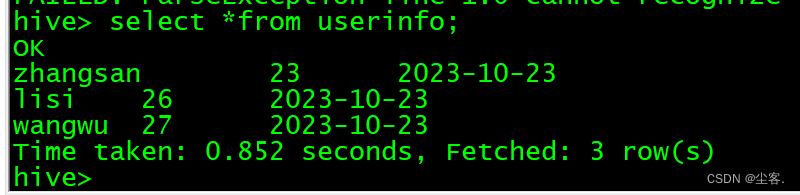

Result

HiveServer2 and Metastore configuration

(1) Hadoop-side configuration

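HiveServer2's user impersonation feature relies on Hadoop's proxy user mechanism. Only a user configured as a proxy user in Hadoop may access the Hadoop cluster on behalf of other users. The user that starts hiveserver2 must therefore be configured as a Hadoop proxy user, as follows: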

Edit core-site.xml (here `root` is the user that starts hiveserver2):

```xml
<property>
    <name>hadoop.proxyuser.root.hosts</name>
    <value>*</value>
</property>
<property>
    <name>hadoop.proxyuser.root.users</name>
    <value>*</value>
</property>
```
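The property names embed the name of the user that starts hiveserver2, so they change if that user is not `root`. The small sketch below renders the two properties for any user. `proxyuser_properties` is a hypothetical helper. The `*` defaults mirror the values above and allow every host and every user, which you may want to narrow. Hadoop also accepts a `hadoop.proxyuser.<user>.groups` variant, which is not generated here.

```python
def proxyuser_properties(user: str, hosts: str = "*", users: str = "*") -> str:
    """Render the core-site.xml proxy-user properties for the given superuser."""
    props = {
        f"hadoop.proxyuser.{user}.hosts": hosts,  # hosts the superuser may connect from
        f"hadoop.proxyuser.{user}.users": users,  # users the superuser may impersonate
    }
    return "\n".join(
        f"<property>\n    <name>{name}</name>\n    <value>{value}</value>\n</property>"
        for name, value in props.items()
    )
```

After editing core-site.xml, either restart HDFS and YARN or reload the proxy-user settings with `hdfs dfsadmin -refreshSuperUserGroupsConfiguration` and `yarn rmadmin -refreshSuperUserGroupsConfiguration`.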

(2) Hive-side configuration

Add the following to hive-site.xml:

```xml
<property>
    <name>hive.server2.thrift.bind.host</name>
    <value>chdp02</value>
</property>
<property>
    <name>hive.server2.thrift.port</name>
    <value>10000</value>
</property>
<property>
    <name>hive.metastore.uris</name>
    <value>thrift://chdp02:9083</value>
</property>
<property>
    <name>hive.server2.active.passive.ha.enable</name>
    <value>true</value>
    <description>Whether HiveServer2 Active/Passive High Availability be enabled when Hive Interactive sessions are enabled. This will also require hive.server2.support.dynamic.service.discovery to be enabled.</description>
</property>
```
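These values determine where clients connect. `hive.metastore.uris` is a comma-separated list of `thrift://host:port` URIs. `hive.server2.thrift.bind.host` and `hive.server2.thrift.port` give the HiveServer2 endpoint that beeline uses in a `jdbc:hive2://host:port/database` URL. The sketch below derives both from the configured strings. The function names are hypothetical. The JDBC helper assumes plain binary Thrift transport with no ZooKeeper service discovery, as in this setup.

```python
from urllib.parse import urlparse


def metastore_endpoints(uris: str) -> list[tuple[str, int]]:
    """Split hive.metastore.uris (comma-separated thrift:// URIs) into (host, port) pairs."""
    endpoints = []
    for uri in uris.split(","):
        parsed = urlparse(uri.strip())
        if parsed.scheme != "thrift" or parsed.hostname is None or parsed.port is None:
            raise ValueError(f"not a thrift://host:port URI: {uri!r}")
        endpoints.append((parsed.hostname, parsed.port))
    return endpoints


def hiveserver2_jdbc_url(host: str, port: int, database: str = "default") -> str:
    """Build the JDBC URL beeline uses for a HiveServer2 reached over binary Thrift."""
    return f"jdbc:hive2://{host}:{port}/{database}"
```

With the values above, `metastore_endpoints("thrift://chdp02:9083")` gives `[("chdp02", 9083)]`. `hiveserver2_jdbc_url("chdp02", 10000)` gives `jdbc:hive2://chdp02:10000/default`.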

(3) mapred-site.xml configuration

These properties are required for Hive to run MapReduce jobs. Without them, jobs fail with:

Error: `Could not find or load main class org.apache.hadoop.mapreduce.v2.app.MRAppMaster`

```xml
<property>
    <name>mapreduce.application.classpath</name>
    <value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>
</property>
<property>
    <name>yarn.app.mapreduce.am.env</name>
    <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>
</property>
<property>
    <name>mapreduce.map.env</name>
    <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>
</property>
<property>
    <name>mapreduce.reduce.env</name>
    <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>
</property>

<!-- yarn.application.classpath is a YARN property (see yarn-default.xml) and is usually set in yarn-site.xml -->
<property>
    <name>yarn.application.classpath</name>
    <value>/usr/sft/hadoop-3.3.6/etc/hadoop,/usr/sft/hadoop-3.3.6/share/hadoop/common/lib/*,/usr/sft/hadoop-3.3.6/share/hadoop/common/*,/usr/sft/hadoop-3.3.6/share/hadoop/hdfs,/usr/sft/hadoop-3.3.6/share/hadoop/hdfs/lib/*,/usr/sft/hadoop-3.3.6/share/hadoop/hdfs/*,/usr/sft/hadoop-3.3.6/share/hadoop/mapreduce/lib/*,/usr/sft/hadoop-3.3.6/share/hadoop/mapreduce/*,/usr/sft/hadoop-3.3.6/share/hadoop/yarn,/usr/sft/hadoop-3.3.6/share/hadoop/yarn/lib/*,/usr/sft/hadoop-3.3.6/share/hadoop/yarn/*</value>
</property>
```
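The long `yarn.application.classpath` value is a fixed list of subdirectories under the Hadoop installation directory, `/usr/sft/hadoop-3.3.6` in this setup. If Hadoop is installed elsewhere, regenerating the list is less error-prone than editing every entry by hand. The sketch below reproduces the value above from a single home directory. `yarn_application_classpath` is a hypothetical helper. Running `hadoop classpath` on a node prints the classpath Hadoop itself uses, which is a useful cross-check.

```python
# Subdirectories of the Hadoop home, in the same order as the value above.
CLASSPATH_SUFFIXES = [
    "etc/hadoop",
    "share/hadoop/common/lib/*",
    "share/hadoop/common/*",
    "share/hadoop/hdfs",
    "share/hadoop/hdfs/lib/*",
    "share/hadoop/hdfs/*",
    "share/hadoop/mapreduce/lib/*",
    "share/hadoop/mapreduce/*",
    "share/hadoop/yarn",
    "share/hadoop/yarn/lib/*",
    "share/hadoop/yarn/*",
]


def yarn_application_classpath(hadoop_home: str) -> str:
    """Build a comma-separated yarn.application.classpath for the given Hadoop home."""
    home = hadoop_home.rstrip("/")
    return ",".join(f"{home}/{suffix}" for suffix in CLASSPATH_SUFFIXES)
```

Using one ordered list keeps the generated value identical to the hand-written one, so a diff between old and new configs stays empty when the home directory is unchanged.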

(4) Testing

On the corresponding node, start the metastore first, then hiveserver2:

nohup hive --service metastore 2>&1 &

nohup hive --service hiveserver2 2>&1 &
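Both `nohup ... &` commands return immediately, but the services need some time before they accept connections. Starting hiveserver2 before the metastore listens on 9083, or running beeline before hiveserver2 listens on 10000, may therefore fail. The sketch below polls a TCP port until it accepts a connection or a timeout expires. `wait_for_port` is a hypothetical helper, and the timeouts are assumptions to tune for your cluster.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll host:port until a TCP connection succeeds (True) or timeout seconds pass (False)."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Success only means something is listening; it does not check the protocol.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For this setup, call `wait_for_port("chdp02", 9083)` before launching hiveserver2. Call `wait_for_port("chdp02", 10000, timeout=300)` before starting beeline, since HiveServer2 can take noticeably longer than the metastore to open its port.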

Connect with the beeline client:

beeline -u jdbc:hive2://chdp02:10000 -n root