Apache Flink 1.12.0 on Yarn(3.1.1) 所遇到的問題

新搭建的FLINK集群出现的问题汇总

1.新搭建的Flink集群和Hadoop集群无法正常启动Flink任务

查看这个提交任务的日志无法发现有用的错误信息。

进一步查看yarn日志:

发现只有JobManager的错误日志出现了如下的错误:/bin/bash: /bin/java: No such file or directory。

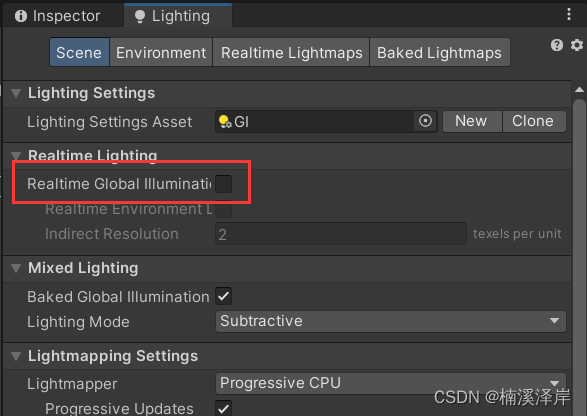

正常情况下执行配置完成java之后,执行/bin/java的会出现如下的结果:

根据查到的提示,出现这个情况(/bin/bash: /bin/java: No such file or directory)的原因是软连接的问题。因此需要在每个节点都创建软连接:ls -s /usr/java/jdk1.8.0_221 /bin/java

每个节点创建完软连接之后,再次执行:/bin/java结果如下:

每个节点的软连接已生效,再次提交任务成功。

概要

根據官方文檔配置在 $FLINK_HOME/lib 加入 flink-shaded-hadoop-3-uber-3.1.1.7.1.1.0-565-9.0.jar ,經過驗證,其實這個可以不加,只加上下面的 hadoop classpath 就行。

或者在環境變量配置文件中 加入 hadoop classpath.

## 注意:lib 後面一定要加 *export Hadoop_CLASSPATH=$Hadoop_CLASSPATH:$HADOOP_HOME/lib/*export HADOOP_CLASSPATH=`hadoop classpath`

问题1

启动 yarn-session.sh 出現 Exit code: 127 Stack trace: ExitCodeException exitCode=127,具體的錯誤日誌如下:

2023-11-01 14:26:44,408 ERROR org.apache.flink.yarn.cli.FlinkYarnSessionCli [] - Error while running the Flink session.org.apache.flink.client.deployment.ClusterDeploymentException: Couldn't deploy Yarn session clusterat org.apache.flink.yarn.YarnClusterDescriptor.deploySessionCluster(YarnClusterDescriptor.java:411) ~[flink-dist_2.11-1.12.0.jar:1.12.0]at org.apache.flink.yarn.cli.FlinkYarnSessionCli.run(FlinkYarnSessionCli.java:498) ~[flink-dist_2.11-1.12.0.jar:1.12.0]at org.apache.flink.yarn.cli.FlinkYarnSessionCli.lambda$main$4(FlinkYarnSessionCli.java:730) ~[flink-dist_2.11-1.12.0.jar:1.12.0]at java.security.AccessController.doPrivileged(Native Method) ~[?:1.8.0_221]at javax.security.auth.Subject.doAs(Subject.java:422) ~[?:1.8.0_221]at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729) ~[hadoop-common-3.1.1.jar:?]at org.apache.flink.runtime.security.contexts.HadoopSecurityContext.runSecured(HadoopSecurityContext.java:41) ~[flink-dist_2.11-1.12.0.jar:1.12.0]at org.apache.flink.yarn.cli.FlinkYarnSessionCli.main(FlinkYarnSessionCli.java:730) [flink-dist_2.11-1.12.0.jar:1.12.0]Caused by: org.apache.flink.yarn.YarnClusterDescriptor$YarnDeploymentException: The YARN application unexpectedly switched to state FAILED during deployment.Diagnostics from YARN: Application application_1617189748122_0017 failed 1 times (global limit =2; local limit is =1) due to AM Container for appattempt_1617189748122_0017_000001 exited with exitCode: 127Failing this attempt.Diagnostics: [2023-11-01 14:26:44.107]Exception from container-launch.Container id: container_1617189748122_0017_01_000001Exit code: 127[2023-11-01 14:26:44.108]Container exited with a non-zero exit code 127. Error file: prelaunch.err.Last 4096 bytes of prelaunch.err :[2023-11-01 14:26:44.109]Container exited with a non-zero exit code 127. Error file: prelaunch.err.Last 4096 bytes of prelaunch.err :For more detailed output, check the application tracking page: http://hadoop001:8088/cluster/app/application_1617189748122_0017 Then click on links to logs of each attempt.. Failing the application.If log aggregation is enabled on your cluster, use this command to further investigate the issue:yarn logs -applicationId application_1617189748122_0017at org.apache.flink.yarn.YarnClusterDescriptor.startAppMaster(YarnClusterDescriptor.java:1078) ~[flink-dist_2.11-1.12.0.jar:1.12.0]at org.apache.flink.yarn.YarnClusterDescriptor.deployInternal(YarnClusterDescriptor.java:558) ~[flink-dist_2.11-1.12.0.jar:1.12.0]at org.apache.flink.yarn.YarnClusterDescriptor.deploySessionCluster(YarnClusterDescriptor.java:404) ~[flink-dist_2.11-1.12.0.jar:1.12.0]... 7 more------------------------------------------------------------The program finished with the following exception:org.apache.flink.client.deployment.ClusterDeploymentException: Couldn't deploy Yarn session clusterat org.apache.flink.yarn.YarnClusterDescriptor.deploySessionCluster(YarnClusterDescriptor.java:411)at org.apache.flink.yarn.cli.FlinkYarnSessionCli.run(FlinkYarnSessionCli.java:498)at org.apache.flink.yarn.cli.FlinkYarnSessionCli.lambda$main$4(FlinkYarnSessionCli.java:730)at java.security.AccessController.doPrivileged(Native Method)at javax.security.auth.Subject.doAs(Subject.java:422)at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)at org.apache.flink.runtime.security.contexts.HadoopSecurityContext.runSecured(HadoopSecurityContext.java:41)at org.apache.flink.yarn.cli.FlinkYarnSessionCli.main(FlinkYarnSessionCli.java:730)Caused by: org.apache.flink.yarn.YarnClusterDescriptor$YarnDeploymentException: The YARN application unexpectedly switched to state FAILED during deployment.Diagnostics from YARN: Application application_1617189748122_0017 failed 1 times (global limit =2; local limit is =1) due to AM Container for appattempt_1617189748122_0017_000001 exited with exitCode: 127Failing this attempt.Diagnostics: [2023-11-01 14:26:44.107]Exception from container-launch.Container id: container_1617189748122_0017_01_000001Exit code: 127[2023-11-01 14:26:44.108]Container exited with a non-zero exit code 127. Error file: prelaunch.err.Last 4096 bytes of prelaunch.err :[2023-11-01 14:26:44.109]Container exited with a non-zero exit code 127. Error file: prelaunch.err.Last 4096 bytes of prelaunch.err :For more detailed output, check the application tracking page: http://hadoop001:8088/cluster/app/application_1617189748122_0017 Then click on links to logs of each attempt.. Failing the application.If log aggregation is enabled on your cluster, use this command to further investigate the issue:yarn logs -applicationId application_1617189748122_0017at org.apache.flink.yarn.YarnClusterDescriptor.startAppMaster(YarnClusterDescriptor.java:1078)at org.apache.flink.yarn.YarnClusterDescriptor.deployInternal(YarnClusterDescriptor.java:558)at org.apache.flink.yarn.YarnClusterDescriptor.deploySessionCluster(YarnClusterDescriptor.java:404)... 7 more2023-11-01 14:26:44,415 INFO org.apache.flink.yarn.YarnClusterDescriptor [] - Cancelling deployment from Deployment Failure Hook2023-11-01 14:26:44,416 INFO org.apache.hadoop.yarn.client.RMProxy [] - Connecting to ResourceManager at hadoop001/192.168.100.100:80322023-11-01 14:26:44,418 INFO org.apache.flink.yarn.YarnClusterDescriptor [] - Killing YARN application2023-11-01 14:26:44,429 INFO org.apache.hadoop.yarn.client.api.impl.YarnClientImpl [] - Killed application application_1617189748122_00172023-11-01 14:26:44,532 INFO org.apache.flink.yarn.YarnClusterDescriptor [] - Deleting files in hdfs://hadoop001:8020/user/hadoop/.flink/application_1617189748122_0017.

然後下載具體的 container 日誌:

yarn logs -applicationId application_1617189748122_0017 -containerId container_1617189748122_0017_01_000001 -out /tmp/

查看 container 日誌

LogAggregationType: AGGREGATED====================================================================LogType:jobmanager.errLogLastModifiedTime:Thu Apr 01 14:26:45 +0800 2021LogLength:48LogContents:/bin/bash: /bin/java: No such file or directoryEnd of LogType:jobmanager.err*******************************************************************************End of LogType:jobmanager.out*******************************************************************************Container: container_1617189748122_0017_01_000001 on hadoop001_53613LogAggregationType: AGGREGATED====================================================================

注意日誌中的,找不到 /bin/bash: /bin/java: No such file or directory

[hadoop@hadoop001 bin]$ echo $JAVA_HOME/usr/java/jdk1.8.0_221

然後做一個軟連接

ls -s /usr/java/jdk1.8.0_221 /bin/java

问题2

啟動 yarn-session.sh 出現 Container exited with a non-zero exit code 126,具體的錯誤日誌如下:

org.apache.flink.client.deployment.ClusterDeploymentException: Couldn't deploy Yarn session clusterat org.apache.flink.yarn.YarnClusterDescriptor.deploySessionCluster(YarnClusterDescriptor.java:411)at org.apache.flink.yarn.cli.FlinkYarnSessionCli.run(FlinkYarnSessionCli.java:498)at org.apache.flink.yarn.cli.FlinkYarnSessionCli.lambda$main$4(FlinkYarnSessionCli.java:730)at java.security.AccessController.doPrivileged(Native Method)at javax.security.auth.Subject.doAs(Subject.java:422)at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)at org.apache.flink.runtime.security.contexts.HadoopSecurityContext.runSecured(HadoopSecurityContext.java:41)at org.apache.flink.yarn.cli.FlinkYarnSessionCli.main(FlinkYarnSessionCli.java:730)Caused by: org.apache.flink.yarn.YarnClusterDescriptor$YarnDeploymentException: The YARN application unexpectedly switched to state FAILED during deployment.Diagnostics from YARN: Application application_1617189748122_0019 failed 1 times (global limit =2; local limit is =1) due to AM Container for appattempt_1617189748122_0019_000001 exited with exitCode: 126Failing this attempt.Diagnostics: [2023-11-01 14:43:23.068]Exception from container-launch.Container id: container_1617189748122_0019_01_000001Exit code: 126[2023-11-01 14:43:23.070]Container exited with a non-zero exit code 126. Error file: prelaunch.err.Last 4096 bytes of prelaunch.err :[2023-11-01 14:43:23.072]Container exited with a non-zero exit code 126. Error file: prelaunch.err.Last 4096 bytes of prelaunch.err :

查看 container 的日誌情況:

[hadoop@hadoop001 flink-1.12.0]$ yarn logs -applicationId application_1617189748122_0019 -show_application_log_info2023-11-01 15:09:07,880 INFO client.RMProxy: Connecting to ResourceManager at hadoop001/192.168.100.100:8032Application State: Completed.Container: container_1617189748122_0019_01_000001 on hadoop001_53613

下載 container 日誌,操作和上面問題 1 一樣。

查看報錯日誌

broken symlinks(find -L . -maxdepth 5 -type l -ls):End of LogType:directory.info*******************************************************************************Container: container_1617189748122_0019_01_000001 on hadoop001_53613LogAggregationType: AGGREGATED====================================================================LogType:jobmanager.errLogLastModifiedTime:Thu Apr 01 14:43:24 +0800 2021LogLength:37LogContents:/bin/bash: /bin/java: Is a directoryEnd of LogType:jobmanager.err*******************************************************************************

注意:/bin/bash: /bin/java: Is a directory ,這個是關鍵日誌,經過排查發現是軟連接出現了錯誤。

[root@hadoop001 bin]# ln -s /usr/java/jdk1.8.0_221/bin/java /bin/java[root@hadoop001 bin]#[root@hadoop001 bin]#[root@hadoop001 bin]# ll /bin/javalrwxrwxrwx 1 root root 31 Apr 1 16:09 /bin/java -> /usr/java/jdk1.8.0_221/bin/java[root@hadoop001 bin]#[root@hadoop001 bin]# /bin/java -versionjava version "1.8.0_221"Java(TM) SE Runtime Environment (build 1.8.0_221-b11)Java HotSpot(TM) 64-Bit Server VM (build 25.221-b11, mixed mode)

验证

啟動 …/bin/yarn-session.sh

如何查看正在运行的Yarn容器的日志??

众所周知,flink on yarn 分为jobmanager的容器和taskmanager的容器。1.yarn application -list2.yarn applicationattempt -list <ApplicationId>3.yarn container -list <Application AttemptId>

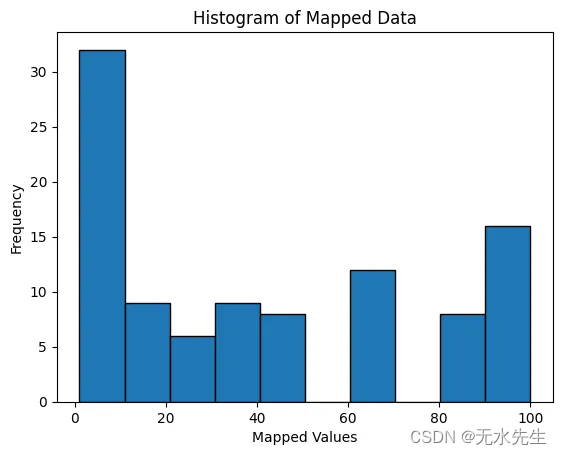

到了这个第3步,就能看到每个容器的访问的url ,分别对应着jobmanager的和taskmanager的,但是具体怎么区分是哪个taskmanager的就只能依靠ip去区分。 htpp就用 curl 进行访问,https就用curl -k进行访问。4.访问的结果包含了6种不同类型日志的访问路径:找到我们想看的日志的访问路径,然后访问,访问路径的最后的参数:-start=-4096代表了显示多少日志出来。如果想查看完整的日志,就应该把这个数调的很大5.为了查看方便,应该使用 > 的方式把访问url的日志的结果输出到日志文件中。

FLINK ON YARN提交方式详解

目前自己用到的:yarn-per-job和yarn-applicaiton他们的执行方式是不同的,执行yarn-per-job需要执行flink文件。同样的yarn-application也需要执行flik文件。./flink run -t yarn-per-job -d \

-p 1 \

-ynm test_env_job \

-yD rest.flamegraph.enabled=true \

-yD jobmanager.memory.process.size=1G \

-yD taskmanager.memory.process.size=2G \

-yD taskmanager.numberOfTaskSlots=1 \

-yD env.java.opts="-Denv=test" \

-c com.xingye.demo.TestTimer \

/cgroups_test/test/fk.jar./flink run-application -t yarn-application -d \

-p 5 \

-ynm test_impala_job \

-D rest.flamegraph.enabled=true \

-D jobmanager.memory.process.size=2G \

-D taskmanager.memory.process.size=8G \

-D taskmanager.numberOfTaskSlots=5 \

-c com.xingye.demo.ImpalaDemo1 \

/tmp/test_flink_impala/fk.jar通过两种命令的对比就发现区别:

flink run -t yarn-per-job

flink run-application -t yarn-application还有需要注意的是 -y* 这个参数是特有的使用yarn的时候就能使用的参数,也就是说yarn-per-job能用,yarn-application也能用。-yD和-D动态参数的意思,作用就是覆盖flink-conf.yaml文件中的默认配置。唯一不同的地方就在于-yD只能在使用yarn的时候指定动态参数,不能在其他模式使用比如kubernetes无法使用-yD参数。-D可以在不同的方式下指定动态参数,-D是一种更通用的指定动态参数的方式。总结:yarn-per-job和yarn-application运行的都是同一个文件,相同点在于都能使用yarn模式下特有的-y*的参数,并且都能使用-D动态参数。

![[SSD综述 1.4] SSD固态硬盘的架构和功能导论](https://img-blog.csdnimg.cn/3314a649fd594853894c6587026dd1cc.png)