时长比较短的音频:https://huggingface.co/datasets/PolyAI/minds14/viewer/en-US

时长比较长的音频:https://huggingface.co/datasets/librispeech_asr?row=8

此次测试过程暂时只使用比较短的音频

使用fast_whisper测试

下载安装,参考官方网站即可

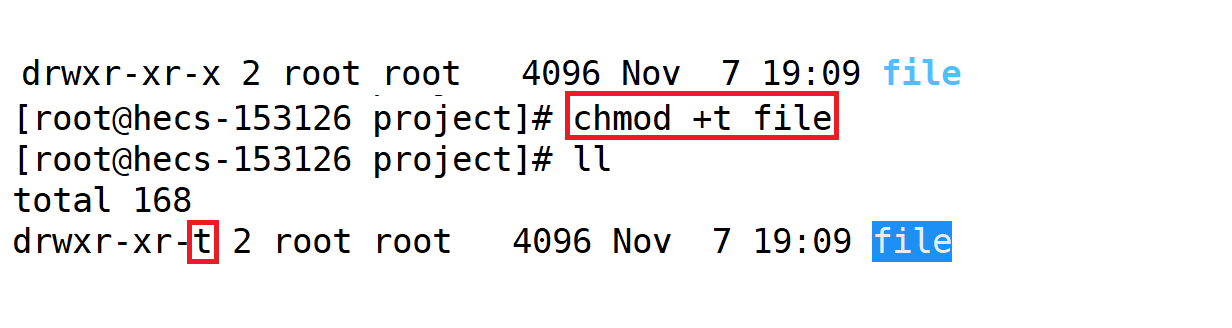

报错提示:

Could not load library libcudnn_ops_infer.so.8. Error: libcudnn_ops_infer.so.8: cannot open shared object file: No such file or directory

Please make sure libcudnn_ops_infer.so.8 is in your library path!

解决办法:

找到有libcudnn_ops_infer.so.8 的路径,在我的电脑中,改文件所在的路径为

在终端导入 export LD_LIBRARY_PATH=/opt/audio/venv/lib/python3.10/site-packages/nvidia/cudnn/lib:$LD_LIBRARY_PATH

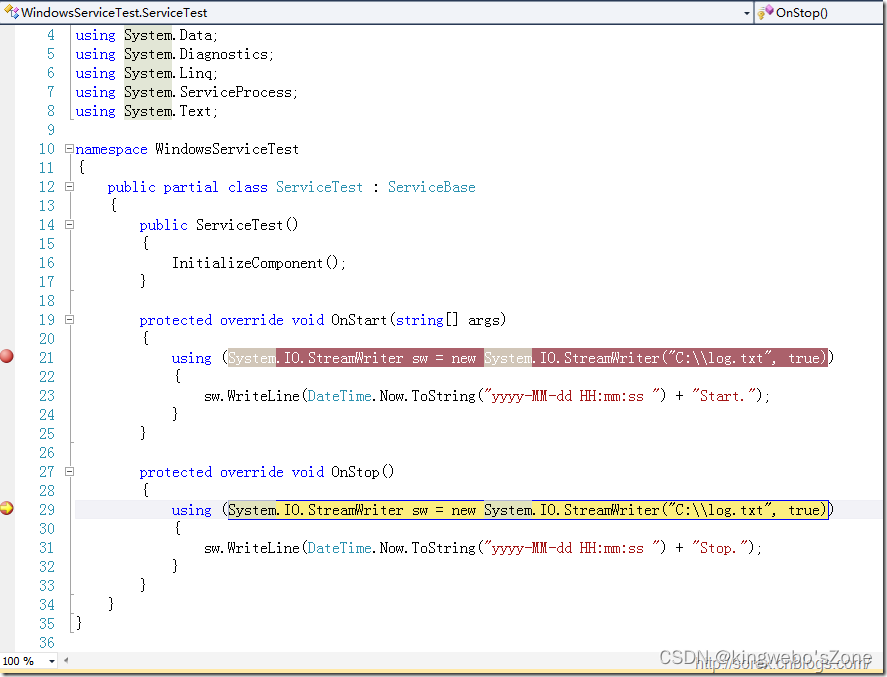

test_fast_whisper.py

import subprocess

import os

import time

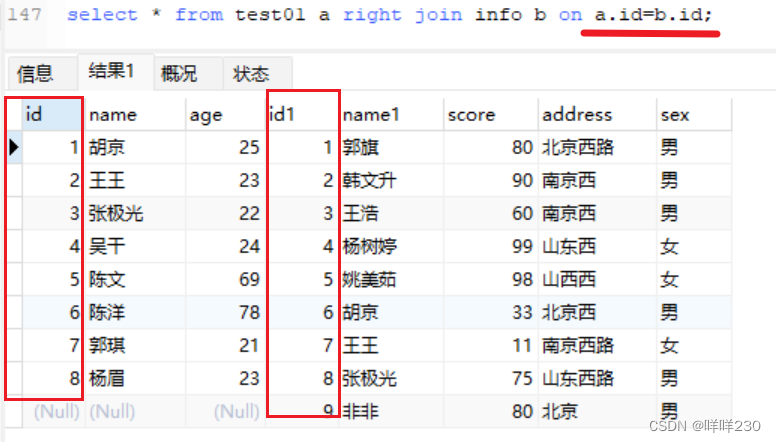

import unittest

import openpyxl

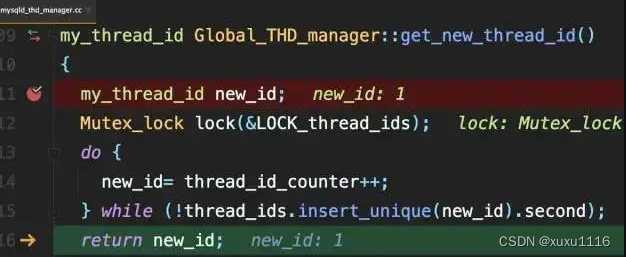

from pydub import AudioSegment

from datasets import load_datasetfrom faster_whisper import WhisperModelclass TestFastWhisper(unittest.TestCase):def setUp(self):passdef test_fastwhisper(self):# 替换为您的脚本路径# 设置HTTP代理os.environ["http_proxy"] = "http://10.10.10.178:7890"os.environ["HTTP_PROXY"] = "http://10.10.10.178:7890"# 不知道此处为什么不能生效,必须要在终端中手动导入os.environ["LD_LIBRARY_PATH"] = "/opt/audio/venv/lib/python3.10/site-packages/nvidia/cudnn/lib:$LD_LIBRARY_PATH"# 设置HTTPS代理os.environ["https_proxy"] = "http://10.10.10.178:7890"os.environ["HTTPS_PROXY"] = "http://10.10.10.178:7890"print("load whisper")# 使用fast_whisper model_size = "large-v2"# Run on GPU with FP16fast_whisper_model = WhisperModel(model_size, device="cuda", compute_type="float16")minds_14 = load_dataset("PolyAI/minds14", "en-US", split="train") # for en-USworkbook = openpyxl.Workbook()# 创建一个工作表worksheet = workbook.active# 设置表头worksheet["A1"] = "Audio Path"worksheet["B1"] = "Audio Duration (seconds)"worksheet["C1"] = "Audio Size (MB)"worksheet["D1"] = "Correct Text"worksheet["E1"] = "Transcribed Text"worksheet["F1"] = "Cost Time (seconds)"for index, each in enumerate(minds_14, start=2):audioPath = each["path"]print(audioPath)# audioArray = each["audio"]audioDuration = len(AudioSegment.from_file(audioPath))/1000audioSize = os.path.getsize(audioPath)/ (1024 * 1024)CorrectText = each["transcription"]tran_start_time = time.time()segments, info = fast_whisper_model.transcribe(audioPath, beam_size=5)segments = list(segments) # The transcription will actually run here.print("Detected language '%s' with probability %f" % (info.language, info.language_probability))text = ""for segment in segments:text += segment.textcost_time = time.time() - tran_start_timeprint("Audio Path:", audioPath)print("Audio Duration (seconds):", audioDuration)print("Audio Size (MB):", audioSize)print("Correct Text:", CorrectText)print("Transcription Time (seconds):", cost_time)print("Transcribed Text:", text)worksheet[f"A{index}"] = audioPathworksheet[f"B{index}"] = audioDurationworksheet[f"C{index}"] = audioSizeworksheet[f"D{index}"] = CorrectTextworksheet[f"E{index}"] = textworksheet[f"F{index}"] = cost_time# breakworkbook.save("fast_whisper_output_data.xlsx")print("数据已保存到 fast_whisper_output_data.xlsx 文件")if __name__ == '__main__':unittest.main()使用whisper测试

下载安装,参考官方网站即可,代码与上面代码类似

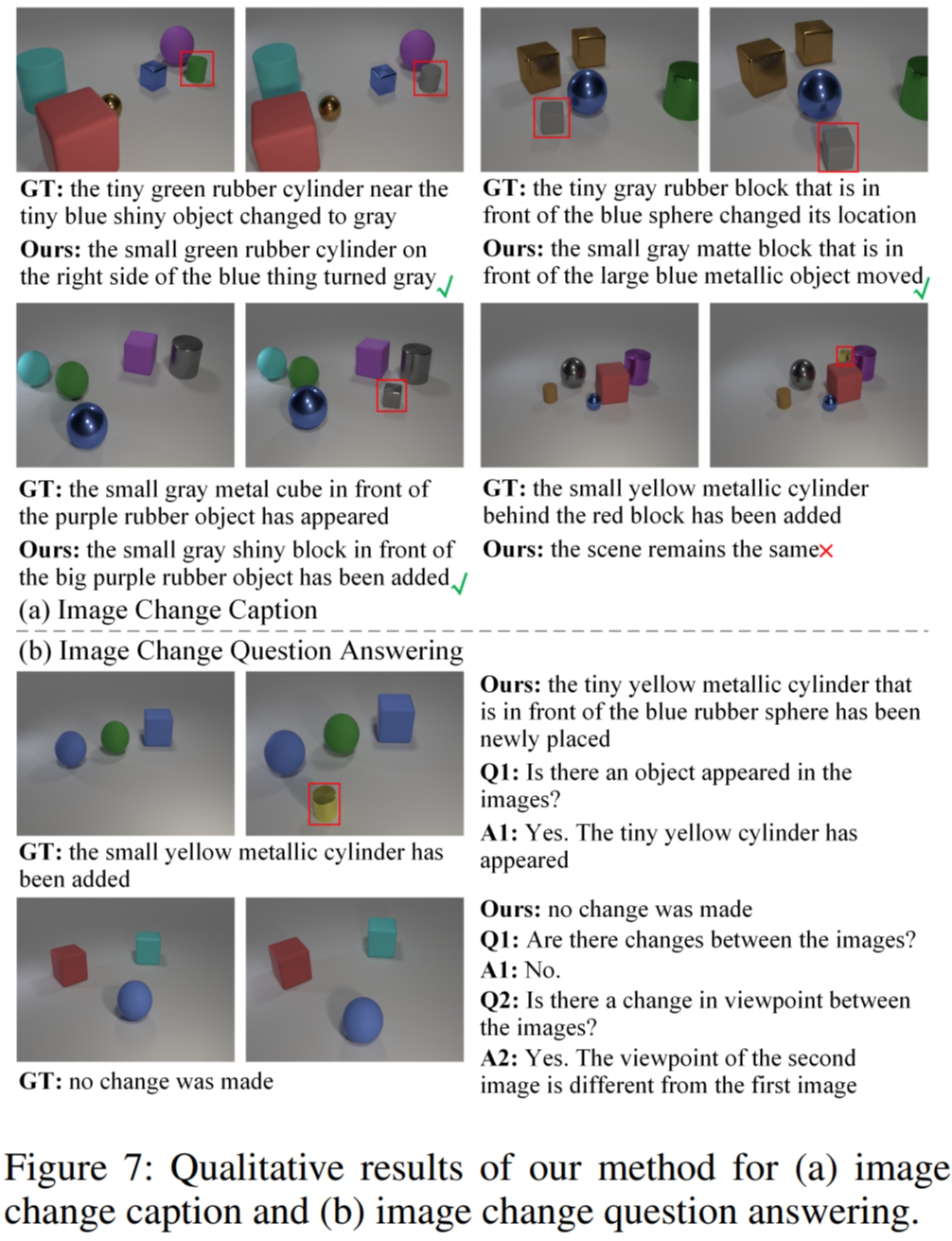

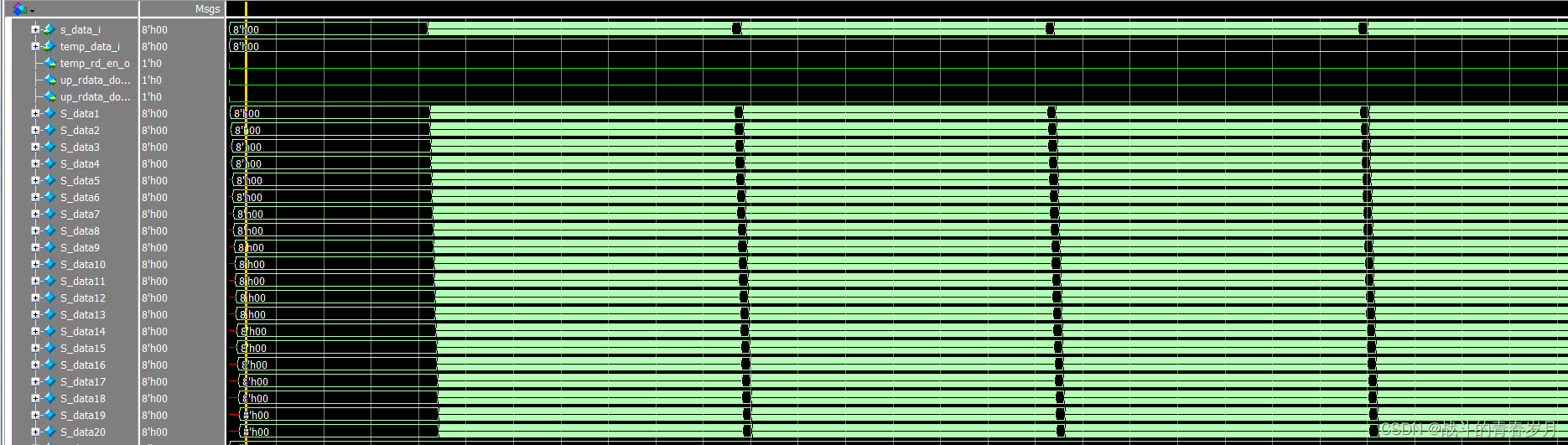

测试结果可视化

不太熟悉用numbers,凑合着看一下就行

很明显,fast_whisper速度要更快一些