Binary installation (Docker runtime)

1. Prepare the servers

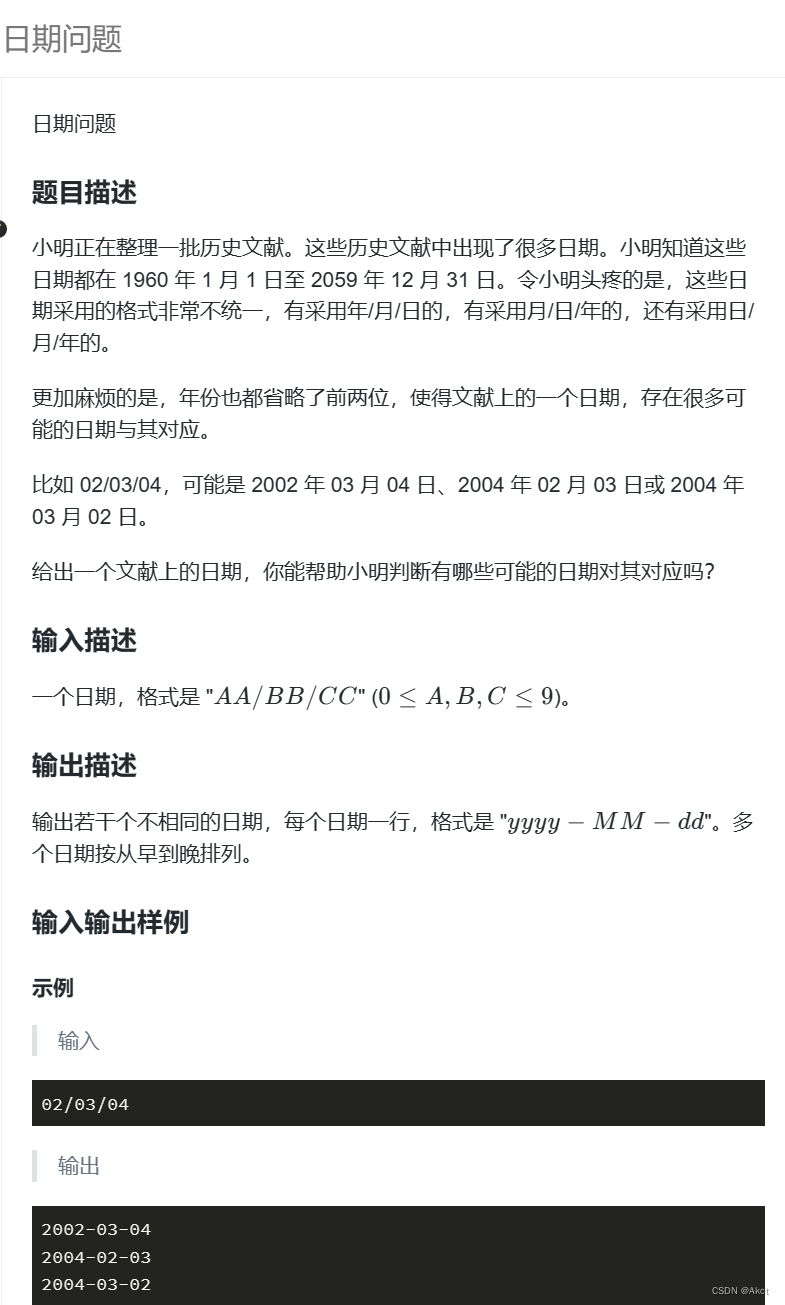

| Role | IP | Components |
|---|---|---|
| k8s-master1 | 192.168.11.111 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd |
| k8s-master2 | 192.168.11.112 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd |
| k8s-node1 | 192.168.11.113 | kubelet, kube-proxy, docker |
| k8s-node2 | 192.168.11.114 | kubelet, kube-proxy, docker |
| Load Balancer (Master), ha1 | 192.168.11.115 | keepalived, haproxy |
| Load Balancer (Backup), ha2 | 192.168.11.116 | keepalived, haproxy |
| lb | 192.168.11.100 (VIP) | |

2. System initialization

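1. Set the hostnames according to the plan above

# Install the dependencies needed by the cluster environment

yum install wget sed vim tree jq psmisc net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y

hostnamectl set-hostname k8s-master1 # run on k8s-master1

hostnamectl set-hostname k8s-master2 # and so on: run on every other host with its name from the table above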

2. Disable the firewall

# Stop and disable the running firewalld service

systemctl disable firewalld

systemctl stop firewalld

firewall-cmd --state

3. Disable SELinux

# Disable SELinux

setenforce 0 # temporary

sed -ri 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config # permanent (the OS must be rebooted)

sestatus

4. Disable swap

# Turn off swap

swapoff -a # temporary

sed -ri 's/.*swap.*/#&/' /etc/fstab # permanent

echo "vm.swappiness=0" >> /etc/sysctl.conf # persistent; also do this when the OS cannot be rebooted right away

sysctl -p
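The `sed` above comments out every line that merely contains the string "swap", and adds another `#` each time it is re-run. A stricter sketch, assuming a standard fstab layout with the filesystem type in the third field, only comments out active entries whose type is `swap` (the function name is a hypothetical helper):

```shell
# Comment out active swap entries in an fstab-style file (default: /etc/fstab).
# Assumption: whitespace-separated fields, filesystem type in field 3.
disable_swap_in_fstab() {
    local fstab="${1:-/etc/fstab}"
    local tmp
    tmp=$(mktemp)
    # Lines that are already comments are printed unchanged,
    # so running the function twice does not add a second '#'.
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp"
    cat "$tmp" > "$fstab"   # rewrite in place, keeping the file's owner and mode
    rm -f "$tmp"
}

# Usage: swapoff -a && disable_swap_in_fstab /etc/fstab
```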

5. Host name resolution (run on all hosts)

# Add the hosts entries [on every node]

cat > /etc/hosts << EOF

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.11.111 k8s-master1

192.168.11.112 k8s-master2

192.168.11.113 k8s-node1

192.168.11.114 k8s-node2

192.168.11.115 k8s-lb1

192.168.11.116 k8s-lb2

EOF

# Changes to /etc/hosts take effect immediately; no reboot or sysctl reload is needed
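`cat > /etc/hosts` overwrites the whole file. If you would rather keep existing entries, a minimal sketch appends each planned entry only when the hostname is not yet mapped; `ensure_host_entry` and `add_cluster_hosts` are hypothetical helpers and the host list is copied from the table at the top:

```shell
# Append "IP hostname" to a hosts file only if that hostname is not already mapped.
ensure_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Match the name as a whole field on non-comment lines (not as a substring)
    if ! awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Apply the host plan from this document
add_cluster_hosts() {
    local file="${1:-/etc/hosts}" ip name
    while read -r ip name; do
        ensure_host_entry "$ip" "$name" "$file"
    done <<'EOF'
192.168.11.111 k8s-master1
192.168.11.112 k8s-master2
192.168.11.113 k8s-node1
192.168.11.114 k8s-node2
192.168.11.115 k8s-lb1
192.168.11.116 k8s-lb2
EOF
}
```

Running `add_cluster_hosts` twice leaves the file unchanged the second time.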

6. Time synchronization

# Synchronize the clock once now

yum install ntpdate -y

ntpdate time.windows.com

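# Schedule a time sync once an hour

# 1. Open root's crontab (it opens in vi)

crontab -e

# 2. Press a (or i) to enter insert mode and add the following line.
#    Use the full path: cron's default PATH (/usr/bin:/bin) does not include /usr/sbin

0 */1 * * * /usr/sbin/ntpdate time1.aliyun.com

# 3. Press Esc, type :wq and press Enter to save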
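The same job can be installed without an interactive editor. A sketch, assuming `/usr/sbin/ntpdate` is where the CentOS 7 package installs the binary (check with `command -v ntpdate`); the helper reads the current crontab on stdin and appends the job only once:

```shell
NTP_JOB='0 */1 * * * /usr/sbin/ntpdate time1.aliyun.com'

# Print the crontab read from stdin, with NTP_JOB appended if it is not already there.
with_ntp_job() {
    local current
    current=$(cat)
    [ -n "$current" ] && printf '%s\n' "$current"
    # -x: whole-line match, -F: treat the job as a literal string
    if ! printf '%s\n' "$current" | grep -qxF "$NTP_JOB"; then
        printf '%s\n' "$NTP_JOB"
    fi
}

# Usage: crontab -l 2>/dev/null | with_ntp_job | crontab -
```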

7. Install the IPVS tools and load the kernel modules

Install on the cluster nodes only; the load balancer nodes (ha1, ha2) do not need it.

yum -y install ipvsadm ipset sysstat conntrack libseccomp

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

# Make the script executable, run it, and check that the modules are loaded

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack
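To see at a glance which of the modules listed in `ipvs.modules` are missing, a small sketch that reads `lsmod` output (the helper name is hypothetical):

```shell
# Modules from /etc/sysconfig/modules/ipvs.modules above
REQUIRED_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack"

# Print each required module that does NOT appear in lsmod output read from stdin.
missing_modules() {
    local loaded m
    # First column of lsmod output is the module name; skip the header line
    loaded=$(awk 'NR > 1 { print $1 }')
    for m in $REQUIRED_MODULES; do
        printf '%s\n' "$loaded" | grep -qx "$m" || echo "$m"
    done
}

# Usage: lsmod | missing_modules   (prints nothing when everything is loaded)
```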

8. System tuning

# ulimit -SHn 65535 # temporary

# permanent

cat <<EOF >> /etc/security/limits.conf

* soft nofile 655360

* hard nofile 655360

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

EOF
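`setrlimit()` rejects a soft limit above the hard limit, so every soft value in `limits.conf` should be at most the matching hard value. A sketch that flags violations, assuming simple `domain type item value` lines (non-numeric values such as `unlimited` are skipped):

```shell
# Report items whose numeric soft limit exceeds the hard limit for the same domain.
check_limits() {
    awk '
        /^[[:space:]]*#/ || NF < 4 { next }
        $4 ~ /^[0-9]+$/ {
            key = $1 " " $3
            if ($2 == "soft") soft[key] = $4
            if ($2 == "hard") hard[key] = $4
        }
        END {
            for (k in soft)
                if ((k in hard) && soft[k] + 0 > hard[k] + 0)
                    printf "%s: soft %s > hard %s\n", k, soft[k], hard[k]
        }
    ' "${1:-/etc/security/limits.conf}"
}
```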

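9. Upgrade the Linux kernel

# Install perl

yum -y install perl

# Import the ELRepo GPG key

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

# Add the ELRepo yum repository to the system

yum -y install https://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm

# Install kernel-lt (lt = long-term support; ml = latest mainline stable)

yum --enablerepo="elrepo-kernel" -y install kernel-lt.x86_64

# Make the newly installed kernel the default boot entry

grub2-set-default 0

# Regenerate the GRUB configuration; reboot afterwards to boot the new kernel

grub2-mkconfig -o /boot/grub2/grub.cfg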
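`grub2-set-default 0` assumes the new kernel is the first menu entry. A sketch to list the entries with their indexes before rebooting, assuming the usual CentOS 7 layout where each entry starts with `menuentry '<title>'` at the beginning of a line:

```shell
# Print "index : title" for every GRUB2 menu entry in the given grub.cfg.
list_grub_entries() {
    awk -F"'" '/^menuentry / { print (n++) " : " $2 }' "${1:-/boot/grub2/grub.cfg}"
}

# Usage: list_grub_entries   (index 0 should be the elrepo kernel-lt entry)
# After the reboot, uname -r shows the running kernel.
```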

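10. Enable IP forwarding and bridge filtering in the kernel

- Required on all hosts.

Load br_netfilter and let iptables see bridged IPv4 and IPv6 traffic, so that containers in the cluster can communicate normally.

# Kernel tuning: k8s.conf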

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 131072


net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

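# Load br_netfilter first: the net.bridge.* keys only exist once the module is loaded

modprobe br_netfilter

# Load it again on every boot (read by systemd-modules-load)

echo br_netfilter > /etc/modules-load.d/br_netfilter.conf

# Check that it is loaded

lsmod | grep br_netfilter

# Apply the settings

sysctl --system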
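In a long list like `k8s.conf`, a key set twice is easy to miss, and the last assignment silently wins. A sketch that reports duplicated keys, treating `a.b = 1` and `a.b=1` as the same key (the helper name is hypothetical):

```shell
# Print every sysctl key that is assigned more than once in the given file.
duplicate_sysctl_keys() {
    awk -F'=' '
        /^[[:space:]]*$/ { next }        # blank line
        /^[[:space:]]*[#;]/ { next }     # comment line
        {
            key = $1
            gsub(/[[:space:]]/, "", key)
            if (++seen[key] == 2) print key
        }
    ' "${1:-/etc/sysctl.d/k8s.conf}"
}
```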

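11. Passwordless SSH login

Purpose: lets the servers copy files to each other without password prompts. It is not part of the cluster setup itself and can be skipped.

# Generate a key pair (run on k8s-master1)

ssh-keygen

# Copy the public key to every server you will transfer files to

ssh-copy-id root@k8s-master2

ssh-copy-id root@k8s-node1

ssh-copy-id root@k8s-node2

# Then log in to one of them to verify that no password is requested

ssh root@k8s-master2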

3. Deploy the etcd cluster

See the document kubernetes2-binary-system(ca-etcd-apiserver).md.

4. Install the load balancers

Run on ha1 and ha2.

1. Install HAProxy and Keepalived

yum -y install haproxy keepalived

2. Configure HAProxy

Note: the k8s-master backend below must list the IPs of all master nodes.

cat >/etc/haproxy/haproxy.cfg<<"EOF"

global
  maxconn 2000
  ulimit-n 16384
  log 127.0.0.1 local0 err
  stats timeout 30s

defaults
  log global
  mode http
  option httplog
  timeout connect 5000
  timeout client 50000
  timeout server 50000
  timeout http-request 15s
  timeout http-keep-alive 15s

frontend monitor-in
  bind *:33305
  mode http
  option httplog
  monitor-uri /monitor

frontend k8s-master
  bind 0.0.0.0:6443
  bind 127.0.0.1:6443
  mode tcp
  option tcplog
  tcp-request inspect-delay 5s
  default_backend k8s-master

backend k8s-master
  mode tcp
  option tcplog
  option tcp-check
  balance roundrobin
  default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
  # list every master node here
  server k8s-master1 192.168.11.111:6443 check
  server k8s-master2 192.168.11.112:6443 check

EOF
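When masters are added or removed, only the `server` lines of the `k8s-master` backend change. A small sketch that prints them from `name:ip` pairs (a hypothetical helper, using the port and `check` option from the config above); afterwards `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the file's syntax:

```shell
# Print one "server" line per master for the k8s-master backend.
haproxy_server_lines() {
    local pair
    for pair in "$@"; do
        # ${pair%%:*} = name before the first ':', ${pair#*:} = IP after it
        printf '  server %s %s:6443 check\n' "${pair%%:*}" "${pair#*:}"
    done
}

# Usage: haproxy_server_lines k8s-master1:192.168.11.111 k8s-master2:192.168.11.112
```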

3. Keepalived

The master and backup configurations differ; adjust the values for each node.

1. On ha1, create the following file

cat >/etc/keepalived/keepalived.conf<<"EOF"

! Configuration File for keepalived
global_defs {
   router_id LVS_DEVEL
   script_user root
   enable_script_security
}
vrrp_script chk_apiserver {
   script "/etc/keepalived/check_apiserver.sh"
   interval 5
   weight -5
   fall 2
   rise 1
}
vrrp_instance VI_1 {
   # this node is the MASTER
   state MASTER
   interface ens33
   # source address for VRRP advertisements: this host's own IP
   mcast_src_ip 192.168.11.115
   virtual_router_id 51
   # priority 100, higher than the backup's 99
   priority 100
   advert_int 2
   authentication {
       auth_type PASS
       auth_pass K8SHA_KA_AUTH
   }
   virtual_ipaddress {
       # the planned virtual IP
       192.168.11.100
   }
   # health-check script that monitors ha1
   track_script {
      # name of the script defined in vrrp_script chk_apiserver above
      chk_apiserver
   }
}

EOF

2. On ha2, create the following file

cat >/etc/keepalived/keepalived.conf<<"EOF"

! Configuration File for keepalived
global_defs {
   router_id LVS_DEVEL
   script_user root
   enable_script_security
}
vrrp_script chk_apiserver {
   script "/etc/keepalived/check_apiserver.sh"
   interval 5
   weight -5
   fall 2
   rise 1
}
vrrp_instance VI_1 {
   state BACKUP
   interface ens33
   mcast_src_ip 192.168.11.116
   virtual_router_id 51
   priority 99
   advert_int 2
   authentication {
       auth_type PASS
       auth_pass K8SHA_KA_AUTH
   }
   virtual_ipaddress {
       192.168.11.100
   }
   # health-check script that monitors this node (ha2)
   track_script {
      # name of the script defined in vrrp_script chk_apiserver above
      chk_apiserver
   }
}

EOF

4. Health-check script

Configure it on both ha1 and ha2.

1. Create the check script

cat > /etc/keepalived/check_apiserver.sh <<"EOF"

#!/bin/bash
err=0
for k in $(seq 1 3)
do
    check_code=$(pgrep haproxy)
    if [[ $check_code == "" ]]; then
        err=$(expr $err + 1)
        sleep 1
        continue
    else
        err=0
        break
    fi
done

if [[ $err != "0" ]]; then
    echo "systemctl stop keepalived"
    /usr/bin/systemctl stop keepalived
    exit 1
else
    exit 0
fi

EOF

2. Make it executable

chmod +x /etc/keepalived/check_apiserver.sh

5. Start the services and verify

systemctl daemon-reload

systemctl enable --now haproxy

systemctl enable --now keepalived

# Check the service status

systemctl status keepalived haproxy

# Check that the virtual IP is configured (it should appear on ha1, the MASTER)

ip address show
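A scriptable version of the VIP check, as a sketch: it reads `ip -o -4 addr show` output, where the fourth field is `address/prefix`, and succeeds when the VIP is present (the helper name is hypothetical):

```shell
VIP=192.168.11.100

# Succeed if VIP appears in `ip -o -4 addr show` output read from stdin.
has_vip() {
    awk -v vip="$VIP" '{ split($4, a, "/"); if (a[1] == vip) found = 1 } END { exit !found }'
}

# Usage: ip -o -4 addr show | has_vip && echo "VIP is on this node"
# Failover test: stop haproxy on ha1; the check script stops keepalived and the VIP should move to ha2.
```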

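5. Deploy the masters

Run the following on k8s-master1, then distribute the files to the other nodes that need them.

1. Download the Kubernetes binaries

1. Create the working directories

# Staging directory: collect all required files here, then copy them into the working directories and distribute them to the master and worker nodes

mkdir -vp /usr/local/kubernetes/k8s

# The actual working directories

mkdir -vp /opt/kubernetes/{ssl,cfg,logs}

cd /usr/local/kubernetes/k8s

# Create the log directory

mkdir -p /var/log/kubernetes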

2. Download the packages

# Official release page

#https://github.com/kubernetes/kubernetes/releases

#wget https://dl.k8s.io/v1.21.10/kubernetes-server-linux-amd64.tar.gz

#wget https://dl.k8s.io/v1.27.3/kubernetes-server-linux-amd64.tar.gz

wget https://dl.k8s.io/v1.28.0/kubernetes-server-linux-amd64.tar.gz

# Extract the archive

tar -xvf kubernetes-server-linux-amd64.tar.gz

# Enter the binary directory

cd kubernetes/server/bin/

# Copy the binaries into a directory on the system PATH

\cp -R kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kube-proxy /usr/local/bin/

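3. Distribute the binaries

To the other master nodes:

# On the target master, make sure the directory exists

mkdir -vp /usr/local/bin

# Copy the files

scp kube-apiserver kube-controller-manager kube-scheduler kubectl k8s-master2:/usr/local/bin/

To the worker nodes:

# On each worker node, make sure the directory exists

mkdir -vp /usr/local/bin

# Copy kubelet and kube-proxy to the worker hosts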

for i in k8s-node1 k8s-node2;do scp kubelet kube-proxy $i:/usr/local/bin;done

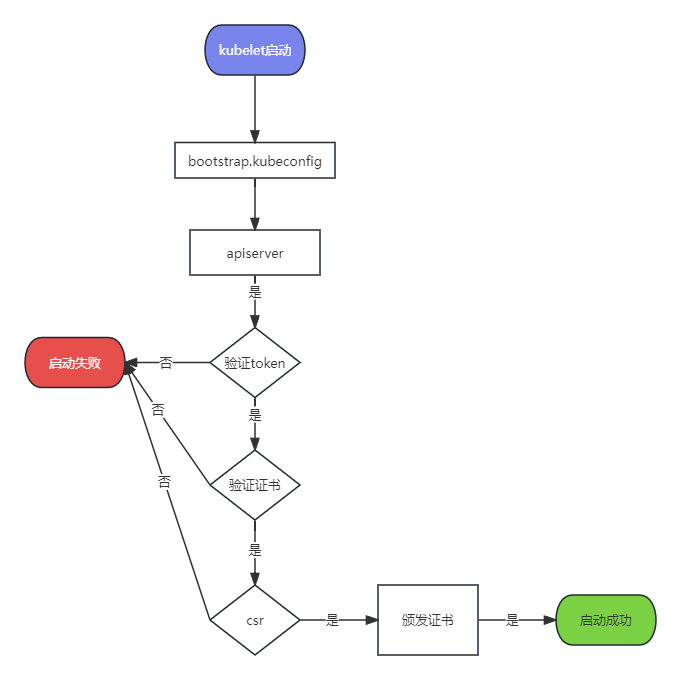

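4. Generate token.csv

Once kube-apiserver has TLS authentication enabled, kubelet and kube-proxy on the nodes must present a valid certificate signed by the CA to communicate with it. Issuing these client certificates by hand takes a lot of work when there are many nodes and makes scaling the cluster harder. To simplify this, Kubernetes provides the TLS bootstrapping mechanism: kubelet first connects as a low-privilege user (identified by the token below) to request a certificate, and the control plane signs kubelet's certificate dynamically.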

cat > /opt/kubernetes/cfg/token.csv << EOF

$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF
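The `$(...)` inside the unquoted heredoc runs when the file is written, so `token.csv` ends up with a literal token. A sketch that builds the same line and checks its shape (32 lowercase hex characters, then user, UID and group); both helper names are hypothetical:

```shell
# Build a token.csv line in the format used above.
make_token_line() {
    local token
    # 16 random bytes -> 32 hex characters; remove od's spaces and newline
    token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token"
}

# Succeed if a line matches the expected token.csv layout.
valid_token_line() {
    printf '%s\n' "$1" | grep -Eq '^[0-9a-f]{32},kubelet-bootstrap,10001,"system:kubelet-bootstrap"$'
}

# Usage: valid_token_line "$(cat /opt/kubernetes/cfg/token.csv)" && echo ok
```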

2. Deploy kube-apiserver

1. Prepare the kube-apiserver certificate request

cd /usr/local/kubernetes/etcd

# 1. Use the self-signed CA to issue the kube-apiserver HTTPS certificate

cat > kube-apiserver-csr.json << "EOF"

{

"CN": "kubernetes","hosts": ["127.0.0.1","192.168.11.111","192.168.11.112","192.168.11.113","192.168.11.114","192.168.11.115","192.168.11.116","192.168.11.100","10.96.0.1","kubernetes","kubernetes.default","kubernetes.default.svc","kubernetes.default.svc.cluster","kubernetes.default.svc.cluster.local"],"key": {"algo": "rsa","size": 2048},"names": [{"C": "CN","ST": "Beijing","L": "Beijing","O": "kubemsb","OU": "CN"}]

}

EOF

2. Generate the kube-apiserver certificate

# Generate the certificate files (kube-apiserver-key.pem, kube-apiserver.pem, kube-apiserver.csr)

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver

# Directory for the kube-apiserver TLS files

mkdir -vp /opt/kubernetes/ssl/api-server

# Copy the CA and the generated certificate and key into the working directory

\cp -R ca*.pem kube-apiserver*.pem /opt/kubernetes/ssl/api-server

3. kube-apiserver.conf configuration file

# kube-apiserver options

cat > /opt/kubernetes/cfg/kube-apiserver.conf << EOF
KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \\
--anonymous-auth=false \\
--bind-address=192.168.11.111 \\
--advertise-address=192.168.11.111 \\
--secure-port=6443 \\
--authorization-mode=Node,RBAC \\
--runtime-config=api/all=true \\
--enable-bootstrap-token-auth \\
--service-cluster-ip-range=10.96.0.0/16 \\
--token-auth-file=/opt/kubernetes/cfg/token.csv \\
--service-node-port-range=1-32767 \\
--tls-cert-file=/opt/kubernetes/ssl/api-server/kube-apiserver.pem \\
--tls-private-key-file=/opt/kubernetes/ssl/api-server/kube-apiserver-key.pem \\
--client-ca-file=/opt/kubernetes/ssl/api-server/ca.pem \\
--kubelet-client-certificate=/opt/kubernetes/ssl/api-server/kube-apiserver.pem \\
--kubelet-client-key=/opt/kubernetes/ssl/api-server/kube-apiserver-key.pem \\
--service-account-key-file=/opt/kubernetes/ssl/api-server/ca-key.pem \\
--service-account-signing-key-file=/opt/kubernetes/ssl/api-server/ca-key.pem \\
--service-account-issuer=api \\
--etcd-cafile=/opt/etcd/ssl/ca.pem \\
--etcd-certfile=/opt/etcd/ssl/etcd.pem \\
--etcd-keyfile=/opt/etcd/ssl/etcd-key.pem \\
--etcd-servers=https://192.168.11.111:2379,https://192.168.11.112:2379 \\
--enable-swagger-ui=true \\
--allow-privileged=true \\
--apiserver-count=3 \\
--audit-log-maxage=30 \\
--audit-log-maxbackup=3 \\
--audit-log-maxsize=100 \\
--audit-log-path=/var/log/kube-apiserver-audit.log \\
--event-ttl=1h \\
--v=4"
EOF

4. kube-apiserver.service unit file

cat > /usr/lib/systemd/system/kube-apiserver.service << "EOF"
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=etcd.service
Wants=etcd.service

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver.conf
ExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure
RestartSec=5
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

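Important: the unit file must contain the literal text $KUBE_APISERVER_OPTS. If the heredoc delimiter is unquoted (<< EOF instead of << "EOF"), the shell expands the unset variable to an empty string and kube-apiserver starts without its options, so check the generated file.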
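The check above as a one-line test, sketched as a hypothetical helper (single quotes keep `$KUBE_APISERVER_OPTS` from being expanded by the current shell):

```shell
# Succeed only if ExecStart still references the literal $KUBE_APISERVER_OPTS.
unit_keeps_opts() {
    grep -qF 'ExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTS' \
        "${1:-/usr/lib/systemd/system/kube-apiserver.service}"
}

# Usage: unit_keeps_opts || echo "ExecStart lost its options: regenerate the unit with << \"EOF\""
```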

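5. Distribute to the other master nodes

Note: on each other master, change the IP addresses in kube-apiserver.conf (--bind-address and --advertise-address) to that node's own IP.

# On the target master, create the directories

mkdir -vp /opt/kubernetes/cfg/

mkdir -vp /opt/kubernetes/ssl/api-server

# Copy all files to that server (copy the directory contents, not the directory itself, to avoid ending up with cfg/cfg)

scp -r /opt/kubernetes/cfg/* k8s-master2:/opt/kubernetes/cfg/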

scp -r /usr/lib/systemd/system/kube-apiserver.service k8s-master2:/usr/lib/systemd/system/

scp -r /opt/kubernetes/ssl/api-server/* k8s-master2:/opt/kubernetes/ssl/api-server
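On k8s-master2, a blanket `s/192.168.11.111/192.168.11.112/g` would also rewrite the `--etcd-servers` list. A sketch that changes only the two per-node addresses (the helper name is hypothetical):

```shell
# Point --bind-address and --advertise-address at the given IP; leave everything else alone.
set_apiserver_ip() {
    local ip="$1" conf="${2:-/opt/kubernetes/cfg/kube-apiserver.conf}"
    sed -ri \
        -e "s/--bind-address=[0-9.]+/--bind-address=${ip}/" \
        -e "s/--advertise-address=[0-9.]+/--advertise-address=${ip}/" \
        "$conf"
}

# Usage (on k8s-master2): set_apiserver_ip 192.168.11.112
```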

6. Start kube-apiserver

systemctl daemon-reload

systemctl enable --now kube-apiserver

systemctl status kube-apiserver

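3. Deploy kubectl

1. Generate the kubectl certificate

# Create the working directory for kubectl's certificate files

mkdir -vp /opt/kubernetes/ssl/kubectl

# Change to the certificate working directory

cd /usr/local/kubernetes/etcd/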

cat > admin-csr.json << "EOF"

{"CN": "admin","hosts": [],"key": {"algo": "rsa","size": 2048},"names": [{"C": "CN","ST": "Beijing","L": "Beijing","O": "system:masters", "OU": "system"}]

}

EOF

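Notes:

kube-apiserver uses RBAC to authorize requests from clients such as kubelet, kube-proxy and Pods.

kube-apiserver predefines some RoleBindings for RBAC. For example, cluster-admin binds the Group system:masters to the ClusterRole cluster-admin, which grants permission to call every kube-apiserver API.

O sets the certificate's Group to system:masters. When kubectl uses this certificate to access kube-apiserver, authentication succeeds because the certificate is signed by the CA, and because the certificate's group is the pre-authorized system:masters, it is granted access to all APIs.

Note:

This admin certificate is used later to generate the administrator's kubeconfig file. RBAC is the recommended way to control roles and permissions in Kubernetes; Kubernetes takes the certificate's CN field as the User and the O field as the Group.

"O": "system:masters" must be exactly system:masters, otherwise the later kubectl create clusterrolebinding command fails.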

2. Generate the admin certificate for kubectl

# Generate the certificate files

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

# List the generated files

ls admin*.pem ca*.pem

# Output: admin-key.pem admin.pem ca-key.pem ca.pem

# Copy the certificates to the working directory

\cp -R ca*.pem admin*.pem /opt/kubernetes/ssl/kubectl/

3. Generate the kubeconfig file

kube.config is kubectl's configuration file. It contains everything needed to reach kube-apiserver: the apiserver address, the CA certificate, and the client certificate kubectl uses.

Important: double-check the IP address and file names below.

# Run in /usr/local/kubernetes/etcd

cd /usr/local/kubernetes/etcd/

# The server address is the VIP

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.11.100:6443 --kubeconfig=kube.config

kubectl config set-credentials admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=kube.config

kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kube.config

kubectl config use-context kubernetes --kubeconfig=kube.config
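The same four `kubectl config` commands are repeated later for kube-controller-manager, kube-scheduler and kube-proxy with only the user, certificate prefix, output file and context changing. A sketch of a hypothetical wrapper; `KUBECTL` and `APISERVER` are overridable variables introduced here (setting `KUBECTL=echo` prints the commands instead of running them):

```shell
# make_kubeconfig USER CERT_PREFIX OUTPUT_FILE [CONTEXT]
# Run from the directory holding ca.pem, CERT_PREFIX.pem and CERT_PREFIX-key.pem.
make_kubeconfig() {
    local user="$1" cert="$2" out="$3" context="${4:-$1}"
    local k="${KUBECTL:-kubectl}"
    "$k" config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true \
        --server="${APISERVER:-https://192.168.11.100:6443}" --kubeconfig="$out" &&
    "$k" config set-credentials "$user" --client-certificate="${cert}.pem" \
        --client-key="${cert}-key.pem" --embed-certs=true --kubeconfig="$out" &&
    "$k" config set-context "$context" --cluster=kubernetes --user="$user" --kubeconfig="$out" &&
    "$k" config use-context "$context" --kubeconfig="$out"
}

# Equivalents of the commands in this document:
# make_kubeconfig admin admin kube.config kubernetes
# make_kubeconfig system:kube-controller-manager kube-controller-manager kube-controller-manager.kubeconfig
# make_kubeconfig system:kube-scheduler kube-scheduler kube-scheduler.kubeconfig
# make_kubeconfig kube-proxy kube-proxy kube-proxy.kubeconfig default
```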

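4. Install the kubectl config file and create the role binding

# Create the directory under root's home

mkdir -p ~/.kube

# Copy the file generated in the previous step

\cp -R kube.config ~/.kube/config

# Bind a cluster role, using /root/.kube/config for access
# (user "kubernetes" is the CN of the certificate kube-apiserver presents to kubelets; this lets it call the kubelet API)

kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes --kubeconfig=/root/.kube/config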

5. Export the config file path as an environment variable

export KUBECONFIG=$HOME/.kube/config

6. Copy the config file to the other master nodes

Note: create /root/.kube on k8s-master2 first, then copy the file over; kubectl can then be used there as well.

# On k8s-master2, create the directory

# mkdir -p /root/.kube

scp /root/.kube/config k8s-master2:/root/.kube/

# On k8s-master2, export the environment variable

export KUBECONFIG=$HOME/.kube/config

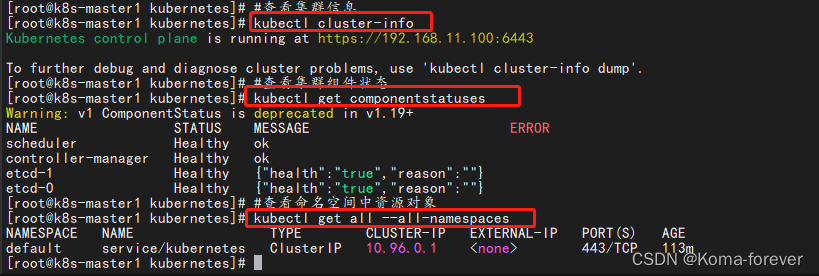

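7. Check the cluster status

# Cluster information

kubectl cluster-info

# Component status (the ComponentStatus API has been deprecated since v1.19 but still responds)

kubectl get componentstatuses

# Resource objects in all namespaces

kubectl get all --all-namespaces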

8. Configure kubectl command completion (optional)

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

kubectl completion bash > ~/.kube/completion.bash.inc

echo "source '/root/.kube/completion.bash.inc'" >> $HOME/.bash_profile

source $HOME/.bash_profile

4. Deploy kube-controller-manager

1. Prepare the kube-controller-manager certificate request

# Create the working directory

mkdir -vp /opt/kubernetes/ssl/kube-controller-manager

# Create the kube-controller-manager CSR file

cd /usr/local/kubernetes/etcd/

cat > kube-controller-manager-csr.json << "EOF"

{"CN": "system:kube-controller-manager","key": {"algo": "rsa","size": 2048},"hosts": ["127.0.0.1","192.168.11.111","192.168.11.112"],"names": [{"C": "CN","ST": "Beijing","L": "Beijing","O": "system:kube-controller-manager","OU": "system"}]

}

EOF

2. Generate the kube-controller-manager certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

# Copy the files to the working directory

\cp -r ca*.pem kube-controller-manager*.pem /opt/kubernetes/ssl/kube-controller-manager

3. Create the kube-controller-manager.kubeconfig file

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.11.100:6443 --kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager --client-certificate=kube-controller-manager.pem --client-key=kube-controller-manager-key.pem --embed-certs=true --kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

# Copy the generated file to the working directory

\cp -R kube-controller-manager.kubeconfig /opt/kubernetes/cfg/kube-controller-manager.kubeconfig

4. Create the kube-controller-manager.conf configuration file

cat > /opt/kubernetes/cfg/kube-controller-manager.conf << "EOF"

KUBE_CONTROLLER_MANAGER_OPTS="--secure-port=10257 \
--bind-address=127.0.0.1 \
--kubeconfig=/opt/kubernetes/cfg/kube-controller-manager.kubeconfig \
--service-cluster-ip-range=10.96.0.0/16 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/opt/kubernetes/ssl/kube-controller-manager/ca.pem \
--cluster-signing-key-file=/opt/kubernetes/ssl/kube-controller-manager/ca-key.pem \
--allocate-node-cidrs=true \
--cluster-cidr=10.244.0.0/16 \
--root-ca-file=/opt/kubernetes/ssl/kube-controller-manager/ca.pem \
--service-account-private-key-file=/opt/kubernetes/ssl/kube-controller-manager/ca-key.pem \
--leader-elect=true \
--feature-gates=RotateKubeletServerCertificate=true \
--controllers=*,bootstrapsigner,tokencleaner \
--tls-cert-file=/opt/kubernetes/ssl/kube-controller-manager/kube-controller-manager.pem \
--tls-private-key-file=/opt/kubernetes/ssl/kube-controller-manager/kube-controller-manager-key.pem \
--use-service-account-credentials=true \
--v=2"

EOF

5. Create the kube-controller-manager.service unit

cat > /usr/lib/systemd/system/kube-controller-manager.service << "EOF"
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager.conf
ExecStart=/usr/local/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

6. Sync to the other master nodes

# Run on the other masters first:

#mkdir -vp /opt/kubernetes/ssl/kube-controller-manager

# Copy all files to the target server

scp -r /opt/kubernetes/cfg/kube-controller-manager* k8s-master2:/opt/kubernetes/cfg/

scp -r /usr/lib/systemd/system/kube-controller-manager.service k8s-master2:/usr/lib/systemd/system/

scp -r /opt/kubernetes/ssl/kube-controller-manager/* k8s-master2:/opt/kubernetes/ssl/kube-controller-manager

7. Start kube-controller-manager

systemctl daemon-reload

systemctl enable --now kube-controller-manager

systemctl status kube-controller-manager

5. Deploy kube-scheduler

1. Prepare the kube-scheduler certificate request

# Create the certificate working directory

mkdir -vp /opt/kubernetes/ssl/kube-scheduler

cd /usr/local/kubernetes/etcd

cat > kube-scheduler-csr.json << "EOF"

{"CN": "system:kube-scheduler","hosts": ["127.0.0.1","192.168.11.111","192.168.11.112"],"key": {"algo": "rsa","size": 2048},"names": [{"C": "CN","ST": "Beijing","L": "Beijing","O": "system:kube-scheduler","OU": "system"}]

}

EOF

2. Generate the kube-scheduler certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

# Copy the generated TLS files to the working directory

\cp -R ca*.pem kube-scheduler*.pem /opt/kubernetes/ssl/kube-scheduler

3. Create the kube-scheduler.kubeconfig file

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.11.100:6443 --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler --client-certificate=kube-scheduler.pem --client-key=kube-scheduler-key.pem --embed-certs=true --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

# Copy the generated file to the working directory

\cp -R kube-scheduler.kubeconfig /opt/kubernetes/cfg/kube-scheduler.kubeconfig

4. Create the kube-scheduler.conf configuration file

cat > /opt/kubernetes/cfg/kube-scheduler.conf << "EOF"
KUBE_SCHEDULER_OPTS=" \
--leader-elect=true \
--kubeconfig=/opt/kubernetes/cfg/kube-scheduler.kubeconfig \
--v=2"
EOF

5. Create kube-scheduler.service

cat > /usr/lib/systemd/system/kube-scheduler.service << "EOF"
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf
ExecStart=/usr/local/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

6. Distribute the files

# Run on the other masters first:

#mkdir -vp /opt/kubernetes/ssl/kube-scheduler

# Copy all files to the target server

scp -r /opt/kubernetes/cfg/kube-scheduler* k8s-master2:/opt/kubernetes/cfg/

scp -r /usr/lib/systemd/system/kube-scheduler.service k8s-master2:/usr/lib/systemd/system/

scp -r /opt/kubernetes/ssl/kube-scheduler/* k8s-master2:/opt/kubernetes/ssl/kube-scheduler

7. Start kube-scheduler

systemctl daemon-reload

systemctl enable --now kube-scheduler

systemctl status kube-scheduler

6. Check the cluster status

# Cluster information

kubectl cluster-info

# Component status

kubectl get componentstatuses

# Resource objects in all namespaces

kubectl get all --all-namespaces

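6. Deploy the nodes

1. Install Docker

1. Install the Docker packages

# 1. Update the installed packages

yum update -y

# 2. Install the required packages

yum install -y yum-utils device-mapper-persistent-data lvm2

# 3. Add the Aliyun docker-ce repository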

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

## Check the related versions

# yum list installed | grep docker

#http://mirrors.163.com/docker-ce/linux/centos/7.6/x86_64/stable/Packages/

# To install a version matching Kubernetes (for k8s 1.27: docker v20.10.18 ... v20.10.21)

#yum install docker-ce-20.10.20-3.el7

yum install -y docker-ce

docker -v

# 4. Start Docker at boot

systemctl daemon-reload

# Enable Docker at boot and start it now

systemctl enable --now docker

systemctl start docker

systemctl status docker

# To disable starting Docker at boot:

#systemctl disable docker

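2. Configure Docker

- Since 1.24, Kubernetes no longer includes built-in Docker support (dockershim was removed); see:

https://kubernetes.io/zh-cn/docs/setup/production-environment/container-runtimes/#docker

Note: exec-opts sets the cgroup driver that kubelet depends on; it must match "cgroupDriver" in kubelet.json.

mkdir -p /etc/docker

# Prepare Docker's configuration and data directory

# Since k8s 1.24, set the cgroup driver explicitly with native.cgroupdriver=systemd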

cat > /etc/docker/daemon.json << EOF

{"data-root":"/usr/local/dockerWorkspace/","exec-opts": ["native.cgroupdriver=systemd"],"registry-mirrors" : ["https://registry.docker-cn.com","http://hub-mirror.c.163.com","https://docker.mirrors.ustc.edu.cn","https://cr.console.aliyun.com"]

}

EOF

# Restart Docker

systemctl restart docker
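Once kubelet.json exists (created in the kubelet step below), the two cgroup settings can be compared. A sketch using plain `grep`/`sed`, assuming both files are written as in this document; on a running host, `docker info --format '{{.CgroupDriver}}'` reports Docker's active driver directly:

```shell
# Extract the driver from "exec-opts": ["native.cgroupdriver=..."] in daemon.json
docker_cgroup_driver() {
    grep -o 'native.cgroupdriver=[a-z]*' "${1:-/etc/docker/daemon.json}" | cut -d= -f2
}

# Extract the driver from "cgroupDriver": "..." in kubelet.json
kubelet_cgroup_driver() {
    grep -o '"cgroupDriver": *"[a-z]*"' "${1:-/opt/kubernetes/cfg/kubelet.json}" | sed 's/.*"\([a-z]*\)"$/\1/'
}

# Succeed when both files name the same, non-empty driver
cgroup_drivers_match() {
    local d k
    d=$(docker_cgroup_driver "$1")
    k=$(kubelet_cgroup_driver "$2")
    [ -n "$d" ] && [ "$d" = "$k" ]
}

# Usage: cgroup_drivers_match /etc/docker/daemon.json /opt/kubernetes/cfg/kubelet.json && echo match
```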

1. Docker China official mirror: https://registry.docker-cn.com

2. NetEase: http://hub-mirror.c.163.com

3. USTC (University of Science and Technology of China): https://docker.mirrors.ustc.edu.cn

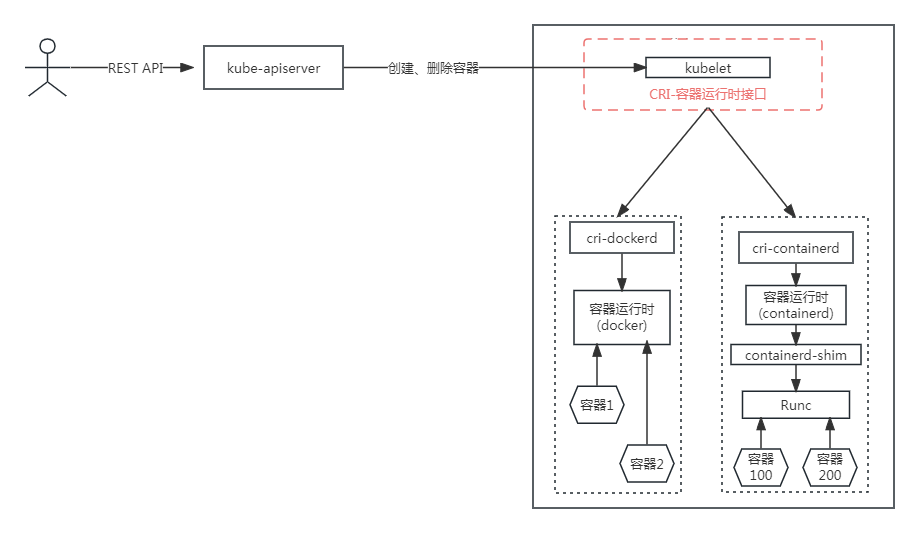

3. Install cri-dockerd

# Download the package

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.4/cri-dockerd-0.3.4-3.el7.x86_64.rpm

# Install cri-dockerd

yum install -y cri-dockerd-0.3.4-3.el7.x86_64.rpm

# Then edit cri-docker.service (the package installs it here)

vim /usr/lib/systemd/system/cri-docker.service

# Change the ExecStart line (the commented line is the pause:3.7 variant)

#ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7 --container-runtime-endpoint fd://

ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.8 --container-runtime-endpoint fd://

# Reload systemd so it picks up the edited unit, then start cri-docker

systemctl daemon-reload

systemctl enable --now cri-docker

# Check the status

systemctl status cri-docker
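The vim edit can also be done non-interactively. A sketch that replaces the `ExecStart=` line of the packaged unit with the pause:3.8 variant used above (the helper name is hypothetical); run `systemctl daemon-reload && systemctl restart cri-docker` afterwards:

```shell
CRI_EXECSTART='ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.8 --container-runtime-endpoint fd://'

# Replace every line starting with ExecStart= in the unit file with CRI_EXECSTART.
set_cri_execstart() {
    local unit="${1:-/usr/lib/systemd/system/cri-docker.service}"
    local tmp
    tmp=$(mktemp)
    awk -v line="$CRI_EXECSTART" '/^ExecStart=/ { print line; next } { print }' "$unit" > "$tmp"
    cat "$tmp" > "$unit"   # keep the original file's owner and mode
    rm -f "$tmp"
}
```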

2. Install kubelet

(The CA and cfssl are on k8s-master1, so the certificate material is generated on k8s-master1 and then copied to the nodes.)

1. Copy the CA certificate

The nodes run kubelet, kube-proxy and docker (or containerd).

# Create the working directories

mkdir -vp /opt/kubernetes/ssl/{kubelet,kube-proxy}

\cp -R /usr/local/kubernetes/etcd/ca*.pem /opt/kubernetes/ssl/kubelet/

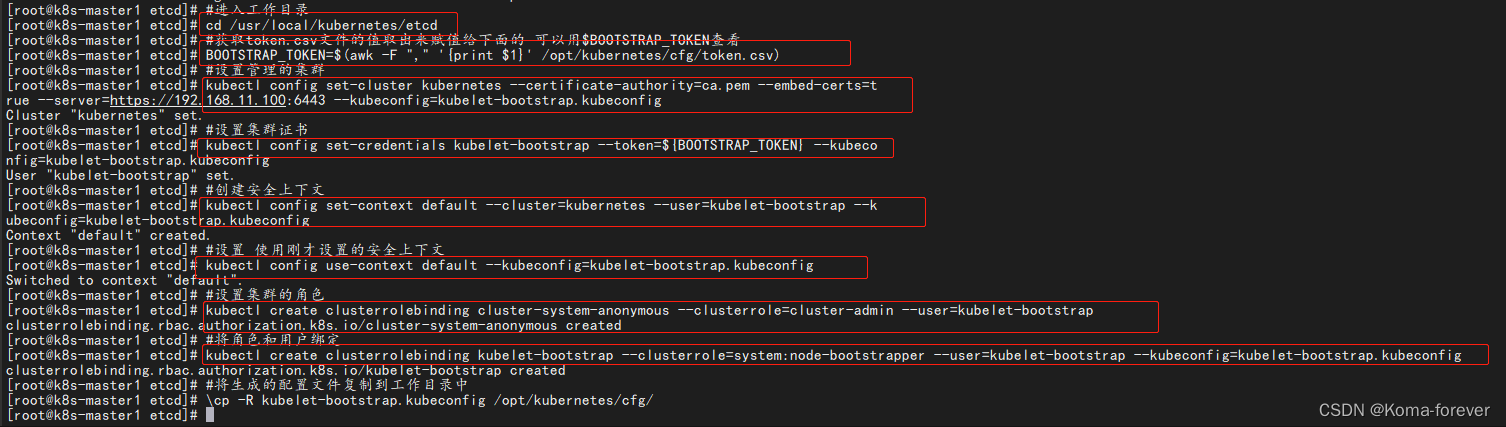

2. Generate the kubelet-bootstrap.kubeconfig file

# Enter the working directory

cd /usr/local/kubernetes/etcd

# Read the token from token.csv (check it with echo $BOOTSTRAP_TOKEN)

BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /opt/kubernetes/cfg/token.csv)

# Set the cluster to manage

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.11.100:6443 --kubeconfig=kubelet-bootstrap.kubeconfig

# Set the client credentials (the bootstrap token)

kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=kubelet-bootstrap.kubeconfig

# Create the context

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig

# Use the context just created

kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig

# Grant the kubelet-bootstrap user a cluster role
# (cluster-admin is very broad: convenient for a lab, too permissive for production)

kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=kubelet-bootstrap

# Bind the kubelet-bootstrap user to the node bootstrapper role

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig

# Copy the generated file to the working directory

\cp -R kubelet-bootstrap.kubeconfig /opt/kubernetes/cfg/

# Describe the cluster-system-anonymous binding

kubectl describe clusterrolebinding cluster-system-anonymous

# Describe the kubelet-bootstrap binding

kubectl describe clusterrolebinding kubelet-bootstrap

Note: if a command reports that a binding already exists, delete it and run the command again, for example:

kubectl delete clusterrolebinding kubelet-bootstrap

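3. Copy the files to the nodes

Note: all required certificate files have now been generated. After they are copied to k8s-node1, step 4 (preparing kubelet.json) and everything after it is no longer run on k8s-master1 but on k8s-node1. Keep this in mind.

On each node, create the working directories:

mkdir -vp /opt/kubernetes/{cfg,ssl,bin}

mkdir -vp /opt/kubernetes/ssl/kubelet/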

# Copy the kubelet kubeconfig files

for i in k8s-node1 k8s-node2;do scp /opt/kubernetes/cfg/kubelet* $i:/opt/kubernetes/cfg/;done

# Copy the TLS files

for i in k8s-node1 k8s-node2;do scp /opt/kubernetes/ssl/kubelet/* $i:/opt/kubernetes/ssl/kubelet/;done

4. Prepare kubelet.json (run on the node)

JSON does not allow comments, and kubelet fails to start if # comments are left in the file, so the notes are given here instead. "address" is the IP of the current node (192.168.11.113 on k8s-node1). "cgroupDriver" must match Docker's native.cgroupdriver (systemd) in /etc/docker/daemon.json, or, with containerd, the systemd cgroup setting in its config.toml.

cat > /opt/kubernetes/cfg/kubelet.json << "EOF"
{
  "kind": "KubeletConfiguration",
  "apiVersion": "kubelet.config.k8s.io/v1beta1",
  "authentication": {
    "x509": {"clientCAFile": "/opt/kubernetes/ssl/kubelet/ca.pem"},
    "webhook": {"enabled": true, "cacheTTL": "2m0s"},
    "anonymous": {"enabled": false}
  },
  "authorization": {
    "mode": "Webhook",
    "webhook": {"cacheAuthorizedTTL": "5m0s", "cacheUnauthorizedTTL": "30s"}
  },
  "address": "192.168.11.113",
  "port": 10250,
  "readOnlyPort": 10255,
  "cgroupDriver": "systemd",
  "hairpinMode": "promiscuous-bridge",
  "serializeImagePulls": false,
  "clusterDomain": "cluster.local.",
  "clusterDNS": ["10.96.0.2"]
}
EOF
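Before starting kubelet, a quick syntax check catches leftover comments or missing commas. A sketch that relies on Python's built-in `json.tool` module (assumption: `python3` is installed; `jq empty FILE` from the dependencies installed earlier works similarly):

```shell
# Succeed if the file parses as strict JSON (JSON has no comment syntax).
is_valid_json() {
    python3 -m json.tool "${1:-/opt/kubernetes/cfg/kubelet.json}" > /dev/null 2>&1
}

# Usage: is_valid_json /opt/kubernetes/cfg/kubelet.json || echo "kubelet.json is not valid JSON"
```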

5. Prepare kubelet.service

The file /opt/kubernetes/cfg/kubelet.kubeconfig referenced by --kubeconfig does not exist yet; kubelet generates it automatically on first start.

cat > /usr/lib/systemd/system/kubelet.service << "EOF"
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
#After=containerd.service
#Requires=containerd.service
# Depend on docker.service when Docker is the runtime, or on containerd.service for containerd
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/usr/local/bin/kubelet \
  --bootstrap-kubeconfig=/opt/kubernetes/cfg/kubelet-bootstrap.kubeconfig \
  --cert-dir=/opt/kubernetes/ssl/kubelet \
  --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
  --config=/opt/kubernetes/cfg/kubelet.json \
  --rotate-certificates \
  --container-runtime-endpoint=unix:///run/cri-dockerd.sock \
  --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.8 \
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

- Notes

For containerd, use: --container-runtime-endpoint=unix:///run/containerd/containerd.sock

For Docker, use: --container-runtime-endpoint=unix:///run/cri-dockerd.sock

In [Unit], make sure the dependency matches the runtime in use (docker or containerd).

If pulling the pause image fails, use: --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.8

6. Copy to the other nodes

# Important: change the IP address ("address") in kubelet.json on each target node

for i in k8s-node2;do scp /opt/kubernetes/cfg/kubelet.json $i:/opt/kubernetes/cfg ;done

for i in k8s-node2;do scp /usr/lib/systemd/system/kubelet.service $i:/usr/lib/systemd/system ;done
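Instead of editing the address by hand on each additional node, a sketch that rewrites only the `"address"` value in kubelet.json (the helper name is hypothetical; other IPs such as `clusterDNS` are left alone):

```shell
# Set "address" in kubelet.json to the given node IP.
set_kubelet_address() {
    local ip="$1" conf="${2:-/opt/kubernetes/cfg/kubelet.json}"
    sed -ri "s/(\"address\": *\")[0-9.]+\"/\1${ip}\"/" "$conf"
}

# Usage (on k8s-node2): set_kubelet_address 192.168.11.114
```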

7. Create the directories and start kubelet

(Run on each node, not on the masters.)

mkdir -p /var/lib/kubelet

mkdir -p /var/log/kubernetes

systemctl daemon-reload

systemctl enable --now kubelet

systemctl status kubelet

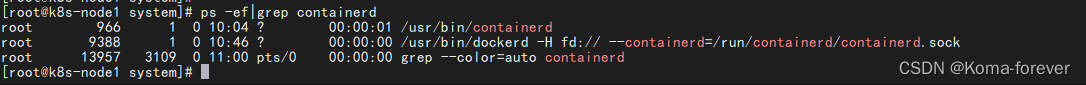
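If a node does not show up in `kubectl get nodes`, its bootstrap certificate signing request may still be waiting for approval. A sketch (run on k8s-master1) that picks the Pending CSRs out of the `kubectl get csr` table, where CONDITION is the last column; the helper name is hypothetical:

```shell
# Print the names of CSRs whose CONDITION is Pending, reading `kubectl get csr` output on stdin.
pending_csrs() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage: kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```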

8. Troubleshooting startup errors

Aug 4 19:12:40 k8s-master03 kubelet: E0804 19:12:40.726264 21343 run.go:74] "command failed" err="failed to run Kubelet: validate service connection: CRI v1 runtime API is not implemented for endpoint \"unix:///run/containerd/containerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.RuntimeService"

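The error means kubelet is talking to unix:///run/containerd/containerd.sock, which does not implement the CRI v1 runtime API (for example, the containerd shipped with docker-ce has its CRI plugin disabled by default). With Docker as the runtime, kubelet should use --container-runtime-endpoint=unix:///run/cri-dockerd.sock. Check which containerd processes are running with: ps -ef|grep containerd

Then remove the corresponding Docker installation and restart kubelet.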

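3. Install kube-proxy

(The CA and cfssl are on k8s-master1, so the certificate material is generated on k8s-master1 and then copied to the nodes.)

kube-proxy provides the network forwarding for Services on each node.

1. Create the kube-proxy certificate signing request

# Create the working directories

mkdir -vp /opt/kubernetes/ssl/kube-proxy

mkdir -vp /opt/kubernetes/yaml

cd /usr/local/kubernetes/etcd

# kube-proxy CSR file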

cat > kube-proxy-csr.json << "EOF"

{"CN": "system:kube-proxy","key": {"algo": "rsa","size": 2048},"names": [{"C": "CN","ST": "Beijing","L": "Beijing","O": "kubemsb","OU": "CN"}]

}

EOF

2. Generate the certificate

# Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

# Copy to the working directory

\cp -R ca*.pem kube-proxy*.pem /opt/kubernetes/ssl/kube-proxy

3. Create the kube-proxy.kubeconfig file

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.11.100:6443 --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy --client-certificate=kube-proxy.pem --client-key=kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

# Copy the generated kubeconfig to the working directory

\cp -R kube-proxy.kubeconfig /opt/kubernetes/cfg/

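4. Copy the files to the nodes

Note: all required certificate files have now been generated. After they are copied to k8s-node1, step 5 (creating kube-proxy.yaml) and everything after it is no longer run on k8s-master1 but on k8s-node1. Keep this in mind.

On each node, create the working directories: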

mkdir -vp /opt/kubernetes/ssl/kube-proxy

mkdir -vp /opt/kubernetes/yaml

# Copy the kube-proxy kubeconfig

for i in k8s-node1 k8s-node2;do scp /opt/kubernetes/cfg/kube-proxy* $i:/opt/kubernetes/cfg/;done

# Copy the TLS files

for i in k8s-node1 k8s-node2;do scp /opt/kubernetes/ssl/kube-proxy/* $i:/opt/kubernetes/ssl/kube-proxy/;done

5. Create the kube-proxy configuration file kube-proxy.yaml (run on the node)

Note: on each server, change the IP addresses to that server's own IP.

cat > /opt/kubernetes/yaml/kube-proxy.yaml << "EOF"

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 192.168.11.113

clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig

clusterCIDR: 10.244.0.0/16

healthzBindAddress: 192.168.11.113:10256

kind: KubeProxyConfiguration

metricsBindAddress: 192.168.11.113:10249

mode: "ipvs"

EOF
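Because only the three addresses differ between nodes, the file can be generated per node instead of edited by hand. A sketch with the same fields as above (the helper name is hypothetical):

```shell
# Print a kube-proxy.yaml for the given node IP.
render_kube_proxy_yaml() {
    local ip="$1"
    cat <<EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: ${ip}
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
clusterCIDR: 10.244.0.0/16
healthzBindAddress: ${ip}:10256
kind: KubeProxyConfiguration
metricsBindAddress: ${ip}:10249
mode: "ipvs"
EOF
}

# Usage (on k8s-node2): render_kube_proxy_yaml 192.168.11.114 > /opt/kubernetes/yaml/kube-proxy.yaml
```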

6. Create the kube-proxy.service unit file

cat > /usr/lib/systemd/system/kube-proxy.service << "EOF"
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \
  --config=/opt/kubernetes/yaml/kube-proxy.yaml \
  --v=2
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

7. Sync the files to the other nodes

On the target node, create the working directory:

mkdir -vp /opt/kubernetes/yaml

for i in k8s-node2;do scp /usr/lib/systemd/system/kube-proxy.service $i:/usr/lib/systemd/system/;done

for i in k8s-node2;do scp /opt/kubernetes/yaml/kube-proxy.yaml $i:/opt/kubernetes/yaml/;done

# Then change the IP addresses in kube-proxy.yaml on k8s-node2

8. Start the service

mkdir -p /var/lib/kube-proxy

systemctl daemon-reload

systemctl enable --now kube-proxy

systemctl status kube-proxy

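7. Deploy the CNI network (run on the master)

Choose one of flannel or Calico (recommended).

1. Create the working directory

mkdir -vp /opt/kubernetes/systemYaml

cd /opt/kubernetes/systemYaml

2. Install flannel

- The main job of a CNI network plugin is to let Pods communicate across hosts.

It has to run on every node.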

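Flannel, developed by CoreOS, is an overlay network for cross-host communication in Docker clusters. The idea is to reserve one address range in advance and give each host a part of it, so every container gets a different IP and all containers behave as if they were on one directly connected network. Underneath, packets are encapsulated and forwarded with UDP, VXLAN, host-gw and similar mechanisms, which is how containers in different Pods talk to each other. Flannel is essentially an overlay network: it wraps the original TCP packets inside another network packet for routing and forwarding. Supported forwarding backends include udp, vxlan, host-gw, aws-vpc, gce and alloc; older releases forwarded over UDP by default, while the net-conf.json in the manifest below selects VXLAN. The result is that Docker containers created on different nodes all get a virtual IP address that is unique across the whole cluster.

Flannel's purpose is to re-plan how IP addresses are used across all nodes in the cluster, so that containers on different nodes get non-overlapping addresses in the same internal network and can talk to each other directly through those internal IPs.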
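To make the "reserve one range, give each host a part of it" idea concrete, here is an illustration only, not flannel's actual allocator: assuming the 10.244.0.0/16 network used in this document and a /24 per node (flannel's default subnet length), node number i gets 10.244.i.0/24. The helper name is hypothetical:

```shell
# Print the /24 pod subnet for a 0-based node index inside 10.244.0.0/16.
node_subnet() {
    local index="$1"
    if [ "$index" -lt 0 ] || [ "$index" -gt 255 ]; then
        echo "index out of range for a /16 split into /24s" >&2
        return 1
    fi
    printf '10.244.%d.0/24\n' "$index"
}

# A /16 split into /24s gives 256 node subnets of 254 usable Pod IPs each.
```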

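- How Flannel works

Pod1 on node1 communicates with Pod1 on node2 as follows (UDP backend):

1. The packet leaves the source container in Pod1 on node1 and is forwarded through the host's docker0 virtual interface to the flannel0 virtual interface.
2. flanneld encapsulates the Pod packet in UDP (the payload carries the source and destination Pod IPs).
3. Using the routing information stored in etcd, the packet is sent through the physical NIC to flanneld on node2, which decapsulates it and recovers the Pod IPs inside the UDP payload.
4. Finally, based on the destination Pod IP, the packet is forwarded through the flannel0 and docker0 virtual interfaces to the destination Pod, completing the communication.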

1、Configure 10-flannel.conflist

mkdir -p /etc/cni/net.d

#Download the CNI plugins

#wget https://github.com/containernetworking/plugins/releases/download/v0.8.2/cni-plugins-linux-amd64-v0.8.2.tgz

cat > /etc/cni/net.d/10-flannel.conflist << EOF

{
  "name": "cbr0",
  "cniVersion": "0.3.1",
  "plugins": [
    {
      "type": "flannel",
      "delegate": {
        "hairpinMode": true,
        "isDefaultGateway": true
      }
    },
    {
      "type": "portmap",
      "capabilities": {
        "portMappings": true
      }
    }
  ]
}

EOF

2、Download from GitHub (can be slow)

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

3、Create the YAML file manually (recommended)

cat > /opt/kubernetes/systemYaml/kube-flannel.yml << "EOF"

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - clustercidrs
  verbs:
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    k8s-app: flannel
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
    k8s-app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: docker.io/flannel/flannel-cni-plugin:v1.2.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: docker.io/flannel/flannel:v0.22.1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: docker.io/flannel/flannel:v0.22.1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate

EOF

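4、Deploy

#Deploy the network plugin (this pulls the flannel images)

kubectl apply -f kube-flannel.yml

#Check image pulls and pod status

kubectl get pods -n kube-flannel

#Check cluster status (nodes should become Ready)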

kubectl get nodes

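5、Notes

Notes:

1、I ran into many problems while deploying flannel, for example: error getting ClusterInformation: connection is unauthorized: Unauthorized

This happened after I installed calico, uninstalled it, and then tried to install flannel: flannel would not come up and reported the error above. After some searching I found the fix.

The fix is to delete the calico config files under /etc/cni/net.d/. Check every master and node.

2、Be sure to run step 1 (creating 10-flannel.conflist); otherwise the config file cannot be read and an error is reported.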
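The calico cleanup from note 1 can be scripted so it is applied the same way on every node. A minimal sketch, assuming bash and that every leftover file whose name contains "calico" in /etc/cni/net.d belongs to calico (typically 10-calico.conflist and calico-kubeconfig); list the directory first if you are unsure. `remove_calico_cni_conf` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# remove_calico_cni_conf [DIR]
# Hypothetical helper: deletes files matching *calico* in DIR
# (default /etc/cni/net.d) and leaves the flannel config alone.
remove_calico_cni_conf() {
  local dir=${1:-/etc/cni/net.d} f removed=0
  for f in "$dir"/*calico*; do
    [ -e "$f" ] || continue   # the glob matched nothing
    rm -f -- "$f"
    echo "removed $f"
    removed=$((removed + 1))
  done
  echo "$removed file(s) removed from $dir"
}

# Example: run it on every node from the master (assumes passwordless ssh):
#   for i in k8s-master1 k8s-master2 k8s-node1 k8s-node2; do
#     ssh "$i" "$(declare -f remove_calico_cni_conf); remove_calico_cni_conf"
#   done
```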

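3、Install calico

Description: cluster network plugin

Installation reference: https://projectcalico.docs.tigera.io/about/about-calico

1、Download calico.yaml

#Release archive on the official site

https://docs.tigera.io/archive

#Download the calico.yaml manifest (master branch shown; pin a release that matches your Kubernetes version)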

wget https://raw.githubusercontent.com/projectcalico/calico/master/manifests/calico.yaml --no-check-certificate

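#In the downloaded file, uncomment these lines (around line 4894) and set the value to the Pod CIDR:

            - name: CALICO_IPV4POOL_CIDR
              value: "10.244.0.0/16"

File location: doc/kubernetes/calicoFile/calico.yaml

2、Edit and apply the manifest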

Check Kubernetes/calico version compatibility on the official site: https://projectcalico.docs.tigera.io/archive/v3.20/getting-started/kubernetes/requirements

#Apply

kubectl apply -f calico.yaml

#Check image pulls and pod status

kubectl get pods -n kube-system

#Check cluster status

kubectl get nodes

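4、Notes

In my tests, a mismatch between these two CIDRs caused no visible problem with flannel, while with calico they had to match. Keep them identical regardless of plugin: kube-proxy uses clusterCIDR to tell Pod traffic from traffic coming from outside the cluster, and the proxy mode (iptables or ipvs) is set by mode in kube-proxy.yaml, not by the CNI plugin.

In a binary installation, this CIDR is usually defined in the config files of two core services, kube-proxy and kube-controller-manager.

The --cluster-cidr= value in kube-controller-manager.conf and clusterCIDR in kube-proxy.yaml must match; otherwise Pods report errors.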
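A quick way to catch a mismatch before it shows up as Pod errors is to compare the two values directly. A minimal sketch, assuming bash, that kube-controller-manager.conf contains a `--cluster-cidr=<CIDR>` flag and kube-proxy.yaml a top-level `clusterCIDR:` key as above; the file locations are passed in because the controller-manager config path is not shown in this section. All function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: compare the Pod CIDR seen by kube-controller-manager
# and by kube-proxy.

# extract_cm_cidr FILE: value of the first --cluster-cidr= flag in FILE
extract_cm_cidr() {
  grep -oE -- '--cluster-cidr=[0-9./]+' "$1" | head -n1 | cut -d= -f2
}

# extract_proxy_cidr FILE: value of the top-level clusterCIDR key, quotes stripped
extract_proxy_cidr() {
  grep -E '^clusterCIDR:' "$1" | head -n1 | awk '{print $2}' | tr -d '"'
}

# check_cluster_cidr CM_CONF PROXY_YAML: prints "OK <cidr>" and returns 0 on a
# match, otherwise reports both values on stderr and returns 1
check_cluster_cidr() {
  local cm proxy
  cm=$(extract_cm_cidr "$1")
  proxy=$(extract_proxy_cidr "$2")
  if [ -n "$cm" ] && [ "$cm" = "$proxy" ]; then
    echo "OK $cm"
    return 0
  fi
  echo "MISMATCH controller-manager=${cm:-<missing>} kube-proxy=${proxy:-<missing>}" >&2
  return 1
}

# Example (the controller-manager path is an assumption, adjust to your layout):
#   check_cluster_cidr /opt/kubernetes/cfg/kube-controller-manager.conf /opt/kubernetes/yaml/kube-proxy.yaml
```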

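7、Deploy CoreDNS

Provides DNS resolution for Pods.

In Kubernetes, CoreDNS is mainly used for service discovery, that is, the process by which services (applications) locate one another.

Releases: https://github.com/coredns/coredns/tags

https://github.com/kubernetes/kubernetes/blob/master/cluster/addons/dns/coredns/coredns.yaml.base

1、Create coredns.yaml (the manifest below uses image coredns/coredns:1.10.1)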

cat > /opt/kubernetes/systemYaml/coredns.yaml << "EOF"

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: k8s-app
                  operator: In
                  values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: coredns/coredns:1.10.1
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.96.0.2 # must match clusterDNS in the kubelet configuration
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP

EOF

2、Apply and verify

kubectl apply -f coredns.yaml

kubectl get pods -A

kubectl get pods -n kube-system

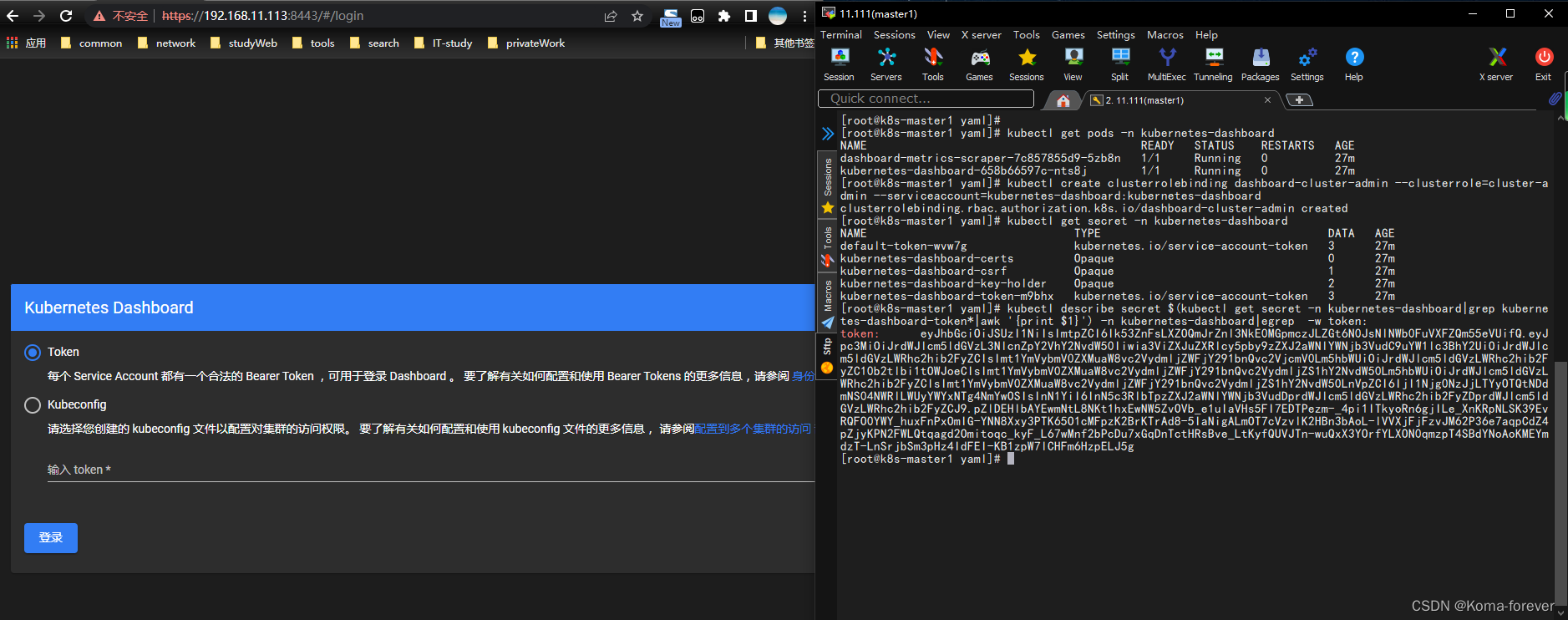

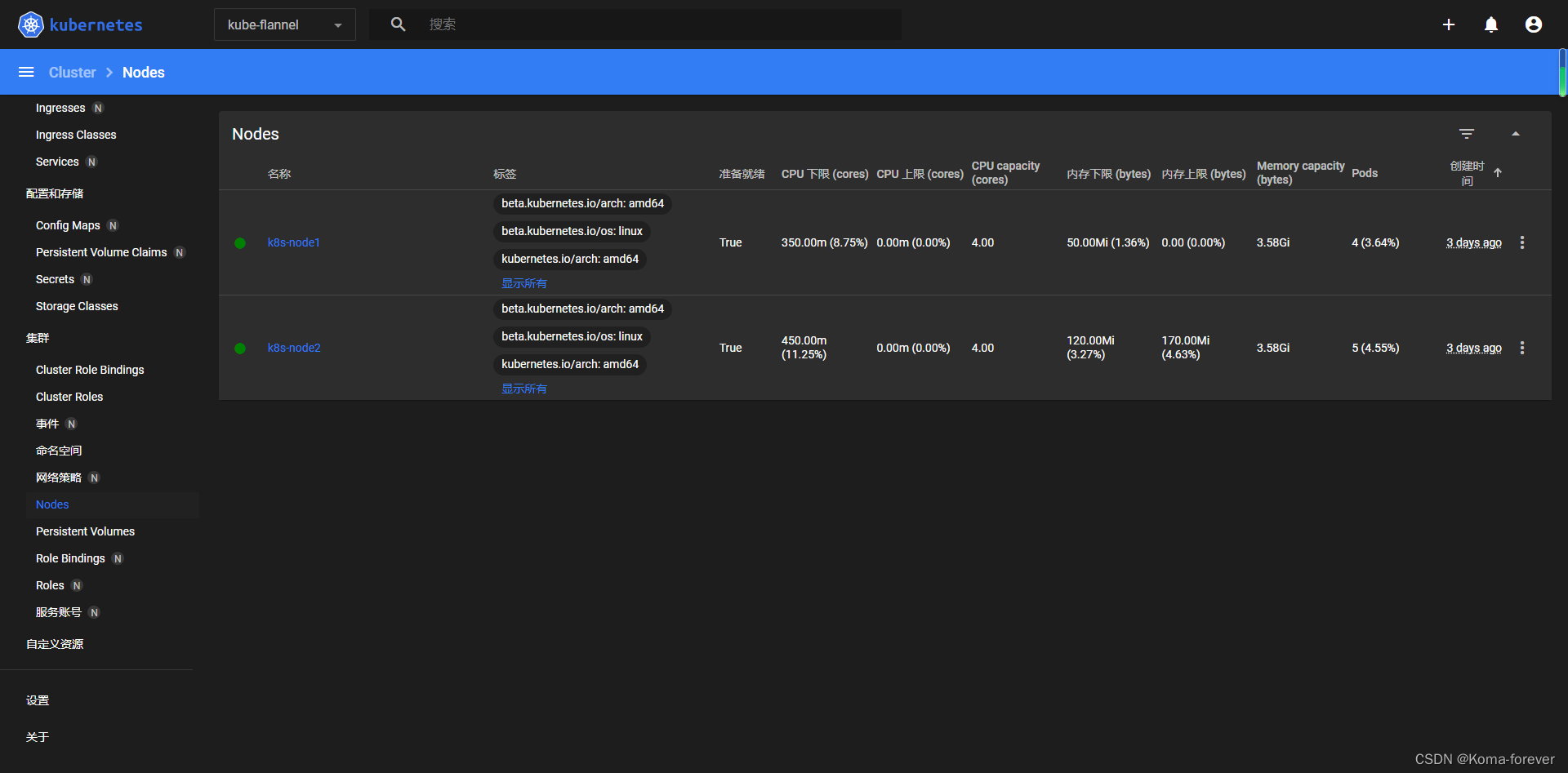
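Service discovery through CoreDNS works by name: the `kubernetes cluster.local ...` block in the Corefile above answers queries of the form `<service>.<namespace>.svc.cluster.local`. A minimal sketch, assuming bash and the default cluster domain cluster.local; `svc_fqdn` is a hypothetical helper that builds the name you would look up.

```shell
#!/usr/bin/env bash
# svc_fqdn SERVICE [NAMESPACE] [CLUSTER_DOMAIN]
# Hypothetical helper: builds the DNS name CoreDNS serves for a Service.
# NAMESPACE defaults to "default", CLUSTER_DOMAIN to "cluster.local"
# (the zone configured in the Corefile above).
svc_fqdn() {
  local svc=$1 ns=${2:-default} domain=${3:-cluster.local}
  printf '%s.%s.svc.%s\n' "$svc" "$ns" "$domain"
}

# Example check from inside the cluster (busybox:1.28 is commonly used because
# its nslookup behaves well; any image with nslookup will do):
#   kubectl run dns-test --rm -it --image=busybox:1.28 --restart=Never -- \
#     nslookup "$(svc_fqdn kubernetes)"
```

If the lookup returns the ClusterIP of the kubernetes Service, CoreDNS and the kube-dns Service (10.96.0.2) are working.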

8、Deploy the Web UI (Dashboard)

1、Download

#Change to the working directory for system YAML files

cd /opt/kubernetes/systemYaml

#Official sites

https://github.com/kubernetes/dashboard

https://kubernetes.io/docs/tasks/access-application-cluster/web-ui-dashboard

#Download (pick one version)

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml --no-check-certificate

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml --no-check-certificate

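#Pick the Dashboard version that supports your Kubernetes version

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.2/aio/deploy/recommended.yaml

2、Modify the configuration

#In recommended.yaml, change the kubernetes-dashboard Service as follows

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 8443 # must lie within kube-apiserver's --service-node-port-range (default 30000-32767)
  selector:
    k8s-app: kubernetes-dashboard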

3、Deploy

#Apply

kubectl apply -f recommended.yaml

#Check status

kubectl get svc -n kubernetes-dashboard

kubectl get pods -n kubernetes-dashboard

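#Access

https://<node IP>:<nodePort>

4、Log in with a token

1、Create the role binding and get the token

#Create a ClusterRoleBinding that grants the cluster-admin role, i.e. access to the whole cluster, including viewing and modifying all resources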

kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:kubernetes-dashboard

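#List the secrets

kubectl get secret -n kubernetes-dashboard

#Get the token. This needs an auto-created token Secret (Kubernetes before 1.24); on 1.24+ run instead: kubectl -n kubernetes-dashboard create token kubernetes-dashboard

kubectl describe secret $(kubectl get secret -n kubernetes-dashboard | grep 'kubernetes-dashboard-token' | awk '{print $1}') -n kubernetes-dashboard | grep -w 'token:'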

2、Copy the token (example output below) into the login page

eyJhbGciOiJSUzI1NiIsImtpZCI6Ik53ZnFsLXZOQmJrZnl3NkE0MGpmczJLZGt6N0JsNlNWb0FuVXFZQm55eVUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1tOWJoeCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjI1Njg0NzJjLTYyOTQtNDdmNS04NWRlLWUyYWYxNTg4NmYwOSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.pZlDEHlbAYEwmNtL8NKt1hxEwNW5ZvOVb_e1uIaVHs5FI7EDTPezm-_4pi1ITkyoRn6gjILe_XnKRpNLSK39EvRQFO0YWY_huxFnPxOmIG-YNN8Xxy3PTK65O1cMFpzK2BrKTrAd8-5IaNigALmOT7cVzvlK2HBn3bAoL-lVVXjFjFzvJM62P36e7aqpCdZ4pZjyKPN2FWLQtqagd2Omitoqc_kyF_L67wMnf2bPcDu7xGqDnTctHRsBve_LtKyfQUVJTn-wuQxX3YOrfYLXON0qmzpT4SBdYNoAoKMEYmdzT-LnSrjbSm3pHz4IdFEI-KB1zpW7lCHFm6HzpELJ5g

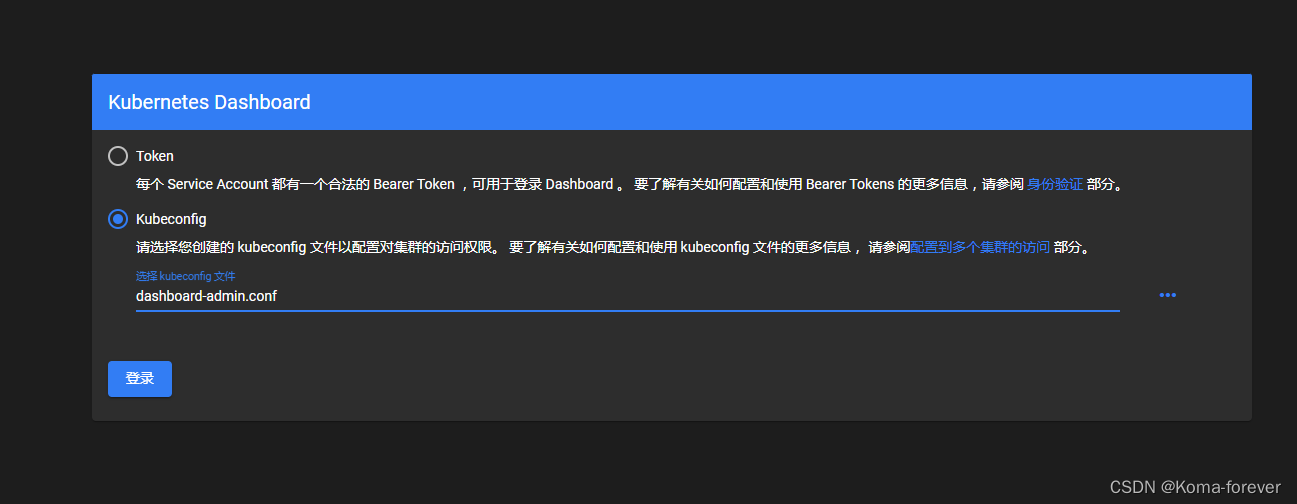

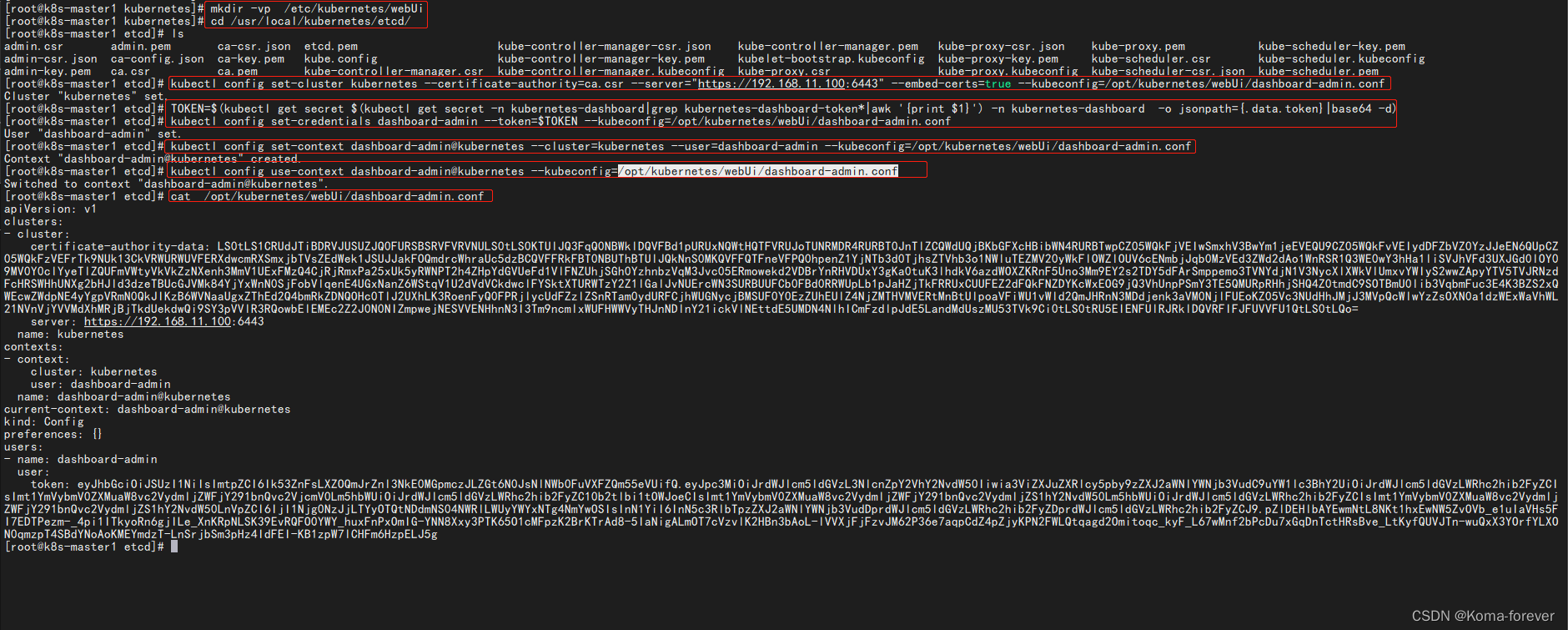
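The token above is a JSON Web Token: three base64url segments separated by dots. Decoding the middle (payload) segment shows which service account it was issued for, which helps when login succeeds but the Dashboard shows permission errors. A minimal sketch, assuming bash and coreutils `base64`; it only decodes and does not verify the signature. `jwt_payload` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# jwt_payload TOKEN
# Hypothetical helper: prints the decoded JSON payload (2nd segment) of a JWT.
# No signature verification is performed.
jwt_payload() {
  local payload
  payload=$(printf '%s' "$1" | cut -d. -f2)
  # base64url -> base64: '-' becomes '+', '_' becomes '/'
  payload=${payload//-/+}
  payload=${payload//_//}
  # Restore the '=' padding that base64url drops
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}

# Example: jwt_payload "$TOKEN" | jq .
# Look at the "sub" field, e.g. system:serviceaccount:<namespace>:<name>
```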

5、Log in with a kubeconfig

#1、Create the cluster entry

mkdir -vp /opt/kubernetes/webUi

#Change to the directory holding the CA certificate generated earlier

cd /usr/local/kubernetes/etcd/

#--server is the apiserver address; here it is the keepalived VIP. --certificate-authority must be the CA certificate (for example ca.pem), not the ca.csr request; adjust the filename to what your CA step produced

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --server="https://192.168.11.100:6443" --embed-certs=true --kubeconfig=/opt/kubernetes/webUi/dashboard-admin.conf

#2、Create the credentials

TOKEN=$(kubectl get secret $(kubectl get secret -n kubernetes-dashboard | grep 'kubernetes-dashboard-token' | awk '{print $1}') -n kubernetes-dashboard -o jsonpath={.data.token} | base64 -d)

kubectl config set-credentials dashboard-admin --token=$TOKEN --kubeconfig=/opt/kubernetes/webUi/dashboard-admin.conf

#3、Create the context

kubectl config set-context dashboard-admin@kubernetes --cluster=kubernetes --user=dashboard-admin --kubeconfig=/opt/kubernetes/webUi/dashboard-admin.conf

#Set current-context to dashboard-admin@kubernetes

kubectl config use-context dashboard-admin@kubernetes --kubeconfig=/opt/kubernetes/webUi/dashboard-admin.conf

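#Then download /opt/kubernetes/webUi/dashboard-admin.conf to your local machine and select it under the Kubeconfig option on the login page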

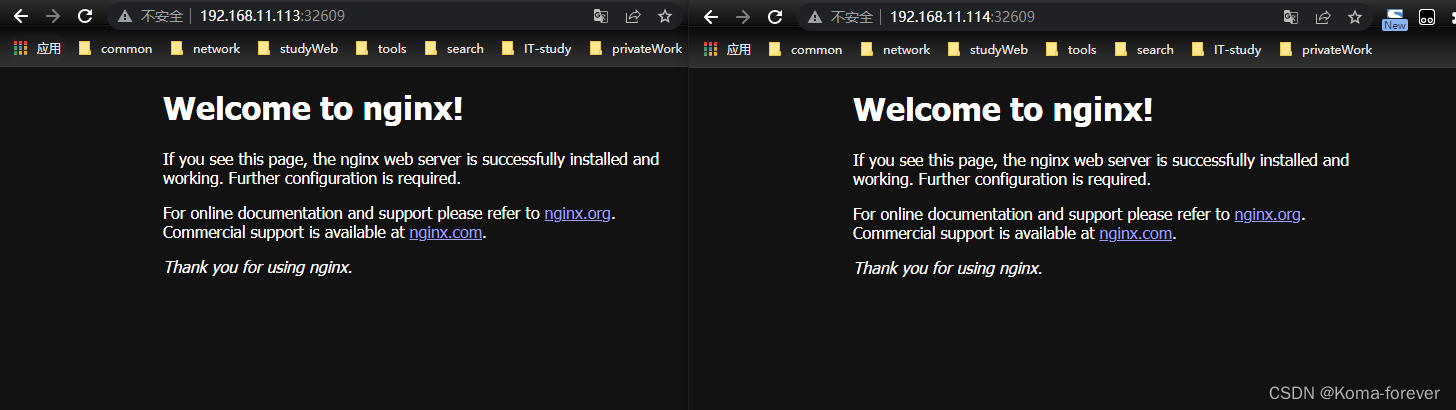

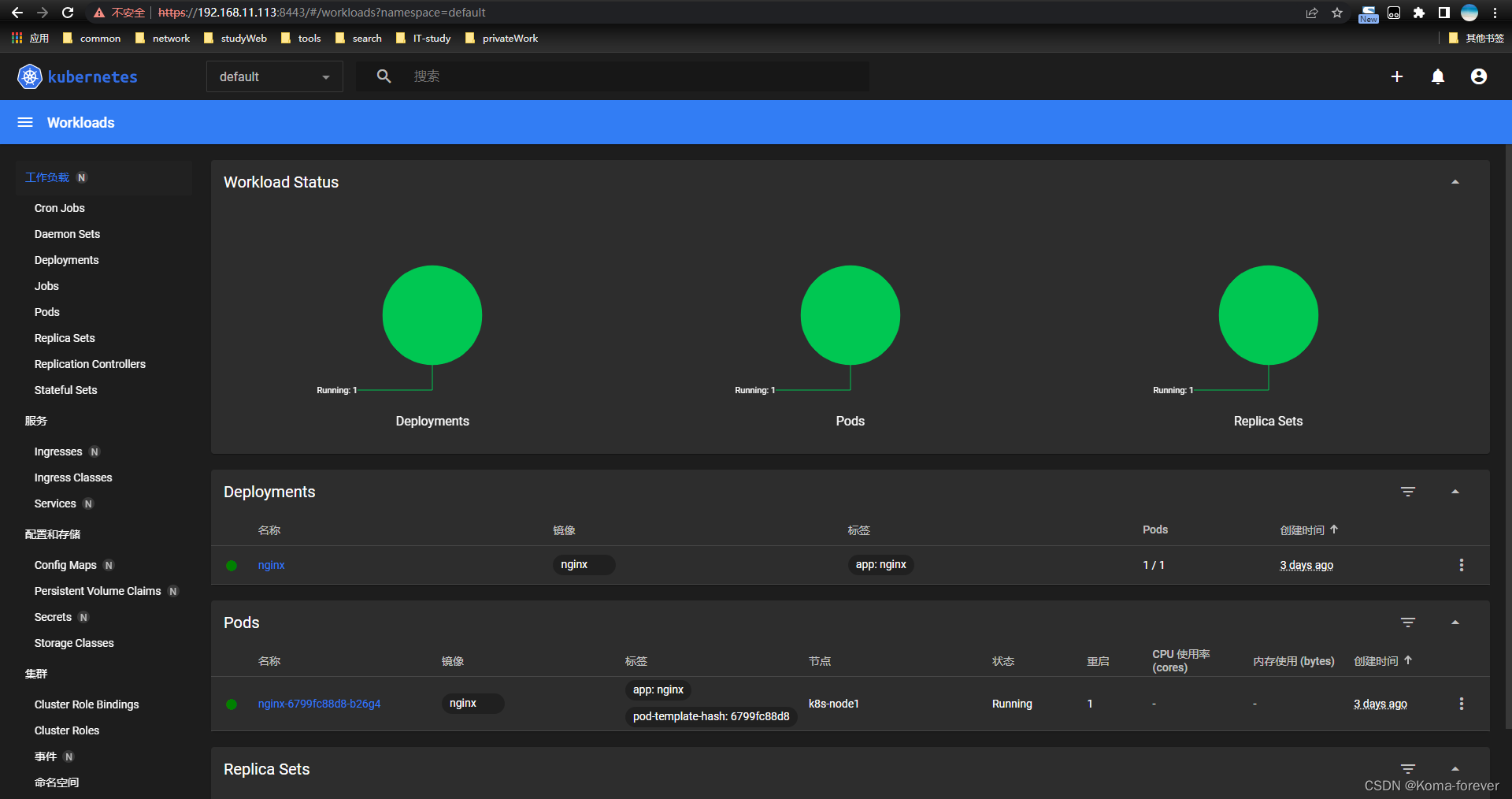

9、Deploy nginx

cat > /opt/kubernetes/yaml/nginx-test.yaml << "EOF"

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: default # namespace; delete this line if you do not want to set one
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          protocol: TCP
          containerPort: 80
        resources:
          limits:
            cpu: "1.0"
            memory: 512Mi
          requests:
            cpu: "0.5"
            memory: 128Mi
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-test-service
  namespace: default # namespace; delete this line if you do not want to set one
spec:
  ports:
  - port: 80
    targetPort: 80
    nodePort: 80 # must lie within kube-apiserver's --service-node-port-range (default 30000-32767)
    protocol: TCP
  selector:
    app: nginx
  sessionAffinity: None
  type: NodePort

EOF

#Deploy

kubectl apply -f /opt/kubernetes/yaml/nginx-test.yaml

#Check the externally exposed port

kubectl get pod,svc
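Both the Dashboard (nodePort 8443) and nginx (nodePort 80) Services use ports below 30000, which the API server rejects unless its --service-node-port-range was widened. A minimal sketch, assuming bash, that checks a port against a range string in the flag's `low-high` form; `nodeport_in_range` is a hypothetical helper and the default range is the Kubernetes default.

```shell
#!/usr/bin/env bash
# nodeport_in_range PORT [RANGE]
# Hypothetical helper: succeeds if PORT lies inside RANGE ("low-high"),
# defaulting to the Kubernetes default NodePort range 30000-32767.
nodeport_in_range() {
  local port=$1 range=${2:-30000-32767}
  local lo=${range%-*} hi=${range#*-}
  [ "$port" -ge "$lo" ] && [ "$port" -le "$hi" ]
}

# Example: read the range from the apiserver options (path is an assumption):
#   range=$(grep -oE -- '--service-node-port-range=[0-9]+-[0-9]+' \
#     /opt/kubernetes/cfg/kube-apiserver.conf | cut -d= -f2)
#   nodeport_in_range 80 "${range:-30000-32767}" || echo "nodePort 80 will be rejected"
```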