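Authorization statement: the author grants the event organizer, Amazon Web Services, the right to repost and adapt this article, including but not limited to the Developer Centre, Zhihu, self-media platforms, third-party developer media and other official Amazon Web Services channels.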

This article uses the following hardware and software:

+ aws ec2

+ frp-0.52.3

+ mediamtx-1.3.0

+ ffmpeg-5.1.4

+ opencv-4.7.0

0. Environment

One AWS EC2 instance

A local Ubuntu 18 machine

A local Windows 10 machine with Firefox

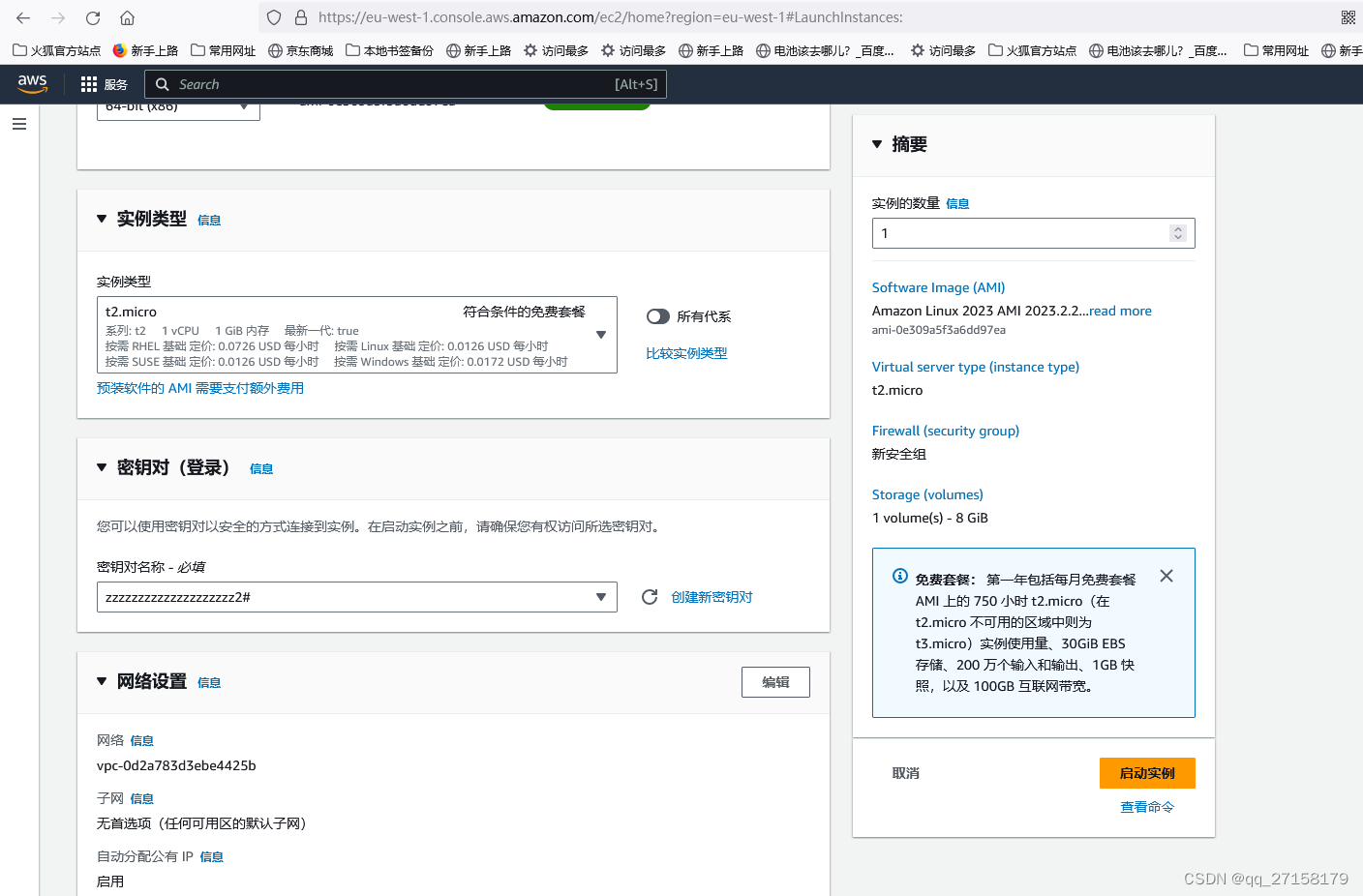

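1. Preparing the AWS EC2 instance

1.1 Console

In this step we create a server at low cost.

On the console home page, select the region Ireland (eu-west-1).

Create the server:

In the Search bar, enter -> EC2

-> Launch instance ->

-> Quick Start: Amazon Linux (AWS)

-> Instance type: choose the free-tier-eligible t2.micro

-> Key pair: create a key pair

-> Name: zzzzzzzzzzzzzzzzzzzz2#, type RSA, format .pem, then save it locally. It is needed for SSH login.

-> Launch instance

This gives the server IP: 54.229.195.3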

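1.2 Connecting to the instance

1.2.1 Console login

Click the instance ID: i-043213d6d1d2c8752

-> click Connect -> Connect

1.2.2 SSH

Using MobaXterm as an example:

Open MobaXterm -> Session -> SSH

-> Remote host: 54.229.195.3

-> check Specify username: ec2-user

-> Advanced SSH settings

-> check Use private key and select the .pem file saved earlier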

1.3 Deploying and testing frp

1.3.1 Download the executable

Download it from

https://github.com/fatedier/frp/releases

The latest version at the time of writing is 0.52.3:

wget https://github.com/fatedier/frp/releases/download/v0.52.3/frp_0.52.3_linux_amd64.tar.gz

1.3.2 解压

tar -zvxf frp_0.52.3_linux_amd64.tar.gz1.3.3 切换目录

cd frp_0.52.3_linux_amd641.3.4 修改配置

vim frps.toml修改为以下内容:

bindPort = 30000

auth.method = "token"

auth.token = "520101"

webServer.addr = "0.0.0.0"

webServer.port = 30001

webServer.user = "admin"

webServer.password = "jian@123"运行

./frps -c frps.toml &1.3.5 服务器开启端口

通过web配置,开启自定义TCP,30000、30001、30002、30003

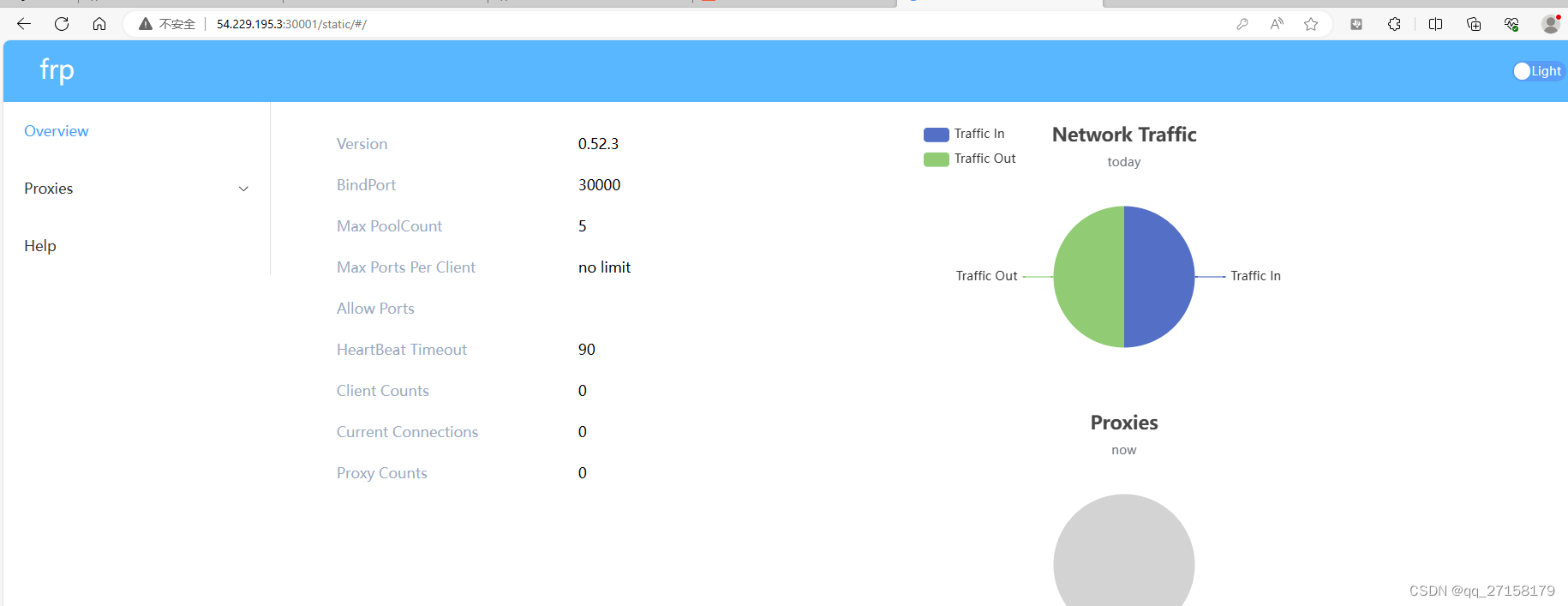

1.3.6 运行

./frps -c frps.ini &

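1.3.7 Test the web dashboard

Open 54.229.195.3:30001 in a browser.

Login credentials (the webServer.user and webServer.password values set in frps.toml):

admin

jian@123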

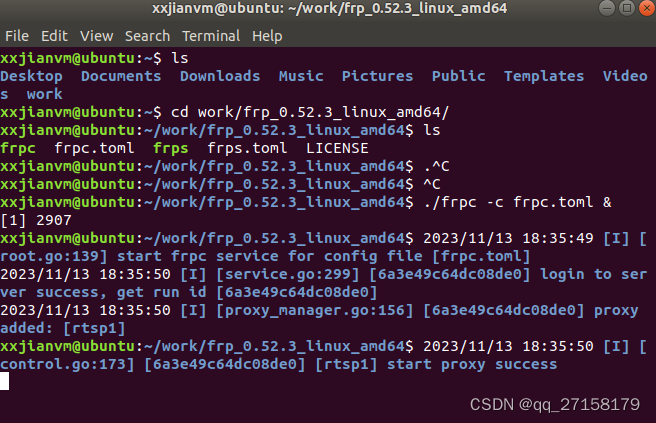
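If the dashboard does not load, it can help to check from the local machine whether the opened ports are reachable at all. The following is a minimal sketch using only the Python standard library; the helper names `port_is_open` and `check_ports` are illustrative and not part of frp. As far as I understand frp's behaviour, ports 30000 and 30001 should accept connections once frps is running, while 30002 is only bound after a client registers a proxy on it.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


def check_ports(host, ports, timeout=3.0):
    """Map each port number to whether it accepted a TCP connection."""
    return {port: port_is_open(host, port, timeout) for port in ports}
```

For the setup in this article, `check_ports("54.229.195.3", [30000, 30001, 30002])` would report which ports currently accept connections. A closed port can mean either a missing security group rule or that nothing is listening yet.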

2. Deploying frp on the local Ubuntu 18 machine

2.1 Download the executable

Download it from

https://github.com/fatedier/frp/releases

The latest version at the time of writing is 0.52.3:

wget https://github.com/fatedier/frp/releases/download/v0.52.3/frp_0.52.3_linux_amd64.tar.gz

2.2 Extract

tar -zvxf frp_0.52.3_linux_amd64.tar.gz

2.3 Change directory

cd frp_0.52.3_linux_amd64

2.4 Edit the configuration

vim frpc.toml

Change it to the following:

serverAddr = "54.229.195.3"
serverPort = 30000
auth.method = "token"
auth.token = "520101"

[[proxies]]
name = "rtsp1"
type = "tcp"
localIP = "127.0.0.1"
localPort = 8554
remotePort = 30002

2.5 Run

./frpc -c frpc.toml &
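The client configuration follows a regular pattern: a common header, then one `[[proxies]]` table per tunnel. As a rough sketch of that structure, the hypothetical helper below (`render_frpc_toml` is not part of frp) builds the same text from a list of tunnels. The second proxy, "rtsp2" on local port 8555 and remote port 30003, is purely an assumption to show how a further tunnel would look; only "rtsp1" comes from this article.

```python
def render_frpc_toml(server_addr, server_port, token, proxies):
    """Build frpc.toml text; proxies is a list of (name, local_port, remote_port)."""
    lines = [
        'serverAddr = "{}"'.format(server_addr),
        "serverPort = {}".format(server_port),
        'auth.method = "token"',
        'auth.token = "{}"'.format(token),
    ]
    for name, local_port, remote_port in proxies:
        lines += [
            "",
            "[[proxies]]",
            'name = "{}"'.format(name),
            'type = "tcp"',
            'localIP = "127.0.0.1"',
            "localPort = {}".format(local_port),
            "remotePort = {}".format(remote_port),
        ]
    return "\n".join(lines) + "\n"


config_text = render_frpc_toml(
    "54.229.195.3", 30000, "520101",
    [("rtsp1", 8554, 30002), ("rtsp2", 8555, 30003)],  # "rtsp2" is hypothetical
)
print(config_text)
```

Each remotePort used this way also has to be allowed in the server's inbound rules, which is why several ports were opened earlier.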

3. Deploying mediamtx on the local Ubuntu 18 machine

Get the executable from

https://github.com/bluenviron/mediamtx/releases

The download is mediamtx_v1.2.1_linux_amd64.tar.gz.

Run:

tar -zvxf mediamtx_v1.2.1_linux_amd64.tar.gz

cd mediamtx

./mediamtx

4. Deploying ffmpeg on the local Ubuntu 18 machine

4.1 Install dependencies

4.1.1 Build dependencies

sudo apt-get update -qq && sudo apt-get -y install \
  autoconf \
  automake \
  build-essential \
  cmake \
  git-core \
  libass-dev \
  libfreetype6-dev \
  libgnutls28-dev \
  libmp3lame-dev \
  libsdl2-dev \
  libtool \
  libva-dev \
  libvdpau-dev \
  libvorbis-dev \
  libxcb1-dev \
  libxcb-shm0-dev \
  libxcb-xfixes0-dev \
  meson \
  ninja-build \
  pkg-config \
  texinfo \
  wget \
  yasm \
  zlib1g-dev

4.1.2 Plugin dependencies

sudo apt-get install -y nasm && \
sudo apt-get install -y libx264-dev && \
sudo apt-get install -y libx265-dev libnuma-dev && \
sudo apt-get install -y libvpx-dev && \
sudo apt-get install -y libfdk-aac-dev && \
sudo apt-get install -y libopus-dev

4.2 Build

4.2.1 Get the source

https://ffmpeg.org/download.html#releases

According to reference [3]: "OpenCV 4.7.0 starts to support FFmpeg 5.x, so FFmpeg 5.1 was downloaded."

Download and extract:

wget https://ffmpeg.org/releases/ffmpeg-5.1.4.tar.gz
tar -zvxf ffmpeg-5.1.4.tar.gz

4.2.2 Configure

cd ffmpeg-5.1.4
./configure \
  --prefix="$HOME/work/ffmpeg/install" \
  --extra-libs="-lpthread -lm" \
  --ld="g++" \
  --enable-gpl \
  --enable-gnutls \
  --enable-libass \
  --enable-libfdk-aac \
  --enable-libfreetype \
  --enable-libmp3lame \
  --enable-libopus \
  --enable-libvorbis \
  --enable-libvpx \
  --enable-libx264 \
  --enable-libx265 \
  --enable-nonfree

Compared with the official compilation guide, the libsvtav1, libdav1d and libaom options have been removed.

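4.3 Compile

make -j8

4.4 Install

make install

4.5 Test

4.5.1 Push a stream with ffmpeg

# Set the environment variables. This can be done after building and installing, or now by following the path pattern below and substituting your own install root. The build was not done strictly step by step, so the order here is somewhat loose.

gedit ~/.bashrc

# Append at the end
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/home/xxjianvm/work/ffmpeg/install/lib/
export PATH=$PATH:/home/xxjianvm/work/ffmpeg/install/bin/

# Apply the environment variables
source ~/.bashrc

Download a test video:

wget http://clips.vorwaerts-gmbh.de/big_buck_bunny.mp4

Push it in a loop:

ffmpeg -re -stream_loop -1 -i big_buck_bunny.mp4 -c copy -f rtsp rtsp://127.0.0.1:8554/stream

4.5.2 Play with ffplay

ffplay rtsp://127.0.0.1:8554/stream

4.5.3 Use ffplay to test the stream relayed through AWS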

ffplay rtsp://54.229.195.3:30002/stream
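The public address differs from the local one only in host and port: frp forwards remotePort 30002 on the server to localPort 8554 on the Ubuntu machine, and the stream path is passed through unchanged. A small sketch of that mapping, assuming the proxy configured earlier (the function name `public_rtsp_url` is made up for illustration):

```python
from urllib.parse import urlsplit, urlunsplit


def public_rtsp_url(local_url, server_ip, remote_port):
    """Rewrite a local RTSP URL to the address exposed through the frp tunnel."""
    parts = urlsplit(local_url)
    # Only the host:port part changes; scheme and path stay the same.
    netloc = "{}:{}".format(server_ip, remote_port)
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


print(public_rtsp_url("rtsp://127.0.0.1:8554/stream", "54.229.195.3", 30002))
```

Any other path published on the local mediamtx instance should be reachable the same way, since the tunnel works at the TCP level and does not inspect paths.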

5. OpenCV

5.1 Prepare the source

https://opencv.org/releases/

Download opencv-4.7.0.tar.gz and place it in ~/work/opencv.

Extract:

cd ~/work/opencv
tar -zvxf opencv-4.7.0.tar.gz

5.2 Install the required packages

# compiler ✓
$ sudo apt-get install build-essential

# required ✓
$ sudo apt-get install cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev

# optional ✓
$ sudo apt-get install python3-dev python3-numpy libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff-dev libjasper-dev libdc1394-22-dev

5.2.1 Error: E: Unable to locate package libjasper-dev

Problem: the Aliyun Ubuntu mirror does not provide libjasper-dev.

Solution:

sudo add-apt-repository "deb http://security.ubuntu.com/ubuntu xenial-security main"
sudo apt update

Then run the optional package install command above again.

# 5.2.2 Install numpy (skipped)
# The python3-numpy package above targets Python 3.6, so numpy would be installed again with pip for Python 3.7
# python3 -m pip install numpy
#
# $ sudo apt-get install -y libgstreamer-plugins-base1.0-dev \
# libpng16-16 \
# build-essential \
# cmake \
# git \
# pkg-config \
# libjpeg-dev \
# libgtk2.0-dev \
# libv4l-dev \
# libatlas-base-dev \
# gfortran \
# libhdf5-dev \
# libtiff5-dev \
# libtbb-dev \
# libeigen3-dev

5.3 Build and install

5.3.1 Build command

$ cd opencv-4.7.0/
$ mkdir build && cd build/
$ cmake -DCMAKE_BUILD_TYPE=RELEASE \
  -DCMAKE_INSTALL_PREFIX=/home/xxjianvm/work/opencv/install/ \
  -DPYTHON_DEFAULT_EXECUTABLE=$(python3 -c "import sys; print(sys.executable)") \
  -DPYTHON3_EXECUTABLE=$(python3 -c "import sys; print(sys.executable)") \
  -DPYTHON3_NUMPY_INCLUDE_DIRS=$(python3 -c "import numpy; print (numpy.get_include())") \
  -DPYTHON3_PACKAGES_PATH=$(python3 -c "from distutils.sysconfig import get_python_lib; print(get_python_lib())") \
  -DBUILD_DOCS=OFF \
  -DBUILD_EXAMPLES=OFF \
  -DBUILD_TESTS=OFF \
  -DBUILD_PERF_TESTS=OFF \
  -DFFMPEG_DIR=/home/xxjianvm/work/ffmpeg/install \
  ..

5.3.2 Compile

$ make -j8

5.3.3 Install

$ sudo make install

5.4 Verify

$ python3
import cv2
cv2.__version__

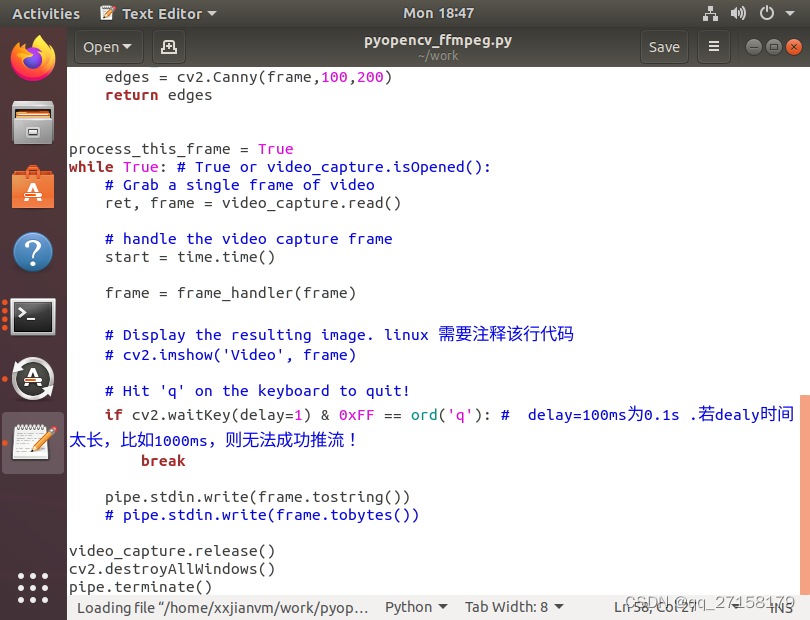
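Printing the version confirms that the module imports, but not that the FFmpeg backend was picked up, which is what RTSP capture depends on here. One way to check is to look for the FFMPEG line in the text returned by `cv2.getBuildInformation()`. The sketch below assumes that line has the usual `FFMPEG: YES` or `FFMPEG: NO` form; the helper name `ffmpeg_enabled` is illustrative.

```python
import re


def ffmpeg_enabled(build_info):
    """Return True if the OpenCV build summary reports FFMPEG as enabled."""
    match = re.search(r"^\s*FFMPEG:\s*(\S+)", build_info, flags=re.MULTILINE)
    return bool(match) and match.group(1).upper().startswith("YES")


if __name__ == "__main__":
    try:
        import cv2
    except ImportError:
        print("cv2 is not installed in this interpreter")
    else:
        print("FFmpeg backend enabled:", ffmpeg_enabled(cv2.getBuildInformation()))
```

If this reports False, reading an RTSP URL with `cv2.VideoCapture` is likely to fail, and the cmake output from step 5.3.1 is the place to look for why FFmpeg was not found.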

6. Running a Python OpenCV script that pulls a stream, processes it and pushes it again

6.1 pyopencv_ffmpeg.py

Create pyopencv_ffmpeg.py with the following content:

import cv2
import subprocess
import time
import numpy as np

# Pull URL: where the stream is read from
# video_capture = cv2.VideoCapture(0, cv2.CAP_DSHOW)  # local webcam
pull_url = 'rtsp://127.0.0.1:8554/stream'  # "rtsp_address"
video_capture = cv2.VideoCapture(pull_url)  # open the RTSP stream
# pull_url = "rtmp_address"

# Push URL: where the frames processed by OpenCV are pushed to
push_url = "rtsp://127.0.0.1:8554/stream/ai"

width = int(video_capture.get(cv2.CAP_PROP_FRAME_WIDTH))
height = int(video_capture.get(cv2.CAP_PROP_FRAME_HEIGHT))
fps = int(video_capture.get(cv2.CAP_PROP_FPS))
if fps <= 0:
    # An fps of 0 makes ffmpeg fail with "Error setting option framerate to value 0."
    # A local notebook webcam can report 0; 15, 20 or 25 all work in that case.
    fps = 25
print("width", width, "height", height, "fps:", fps)

# command = [r'D:\Softwares\ffmpeg-5.1-full_build\bin\ffmpeg.exe',  # on Windows, give the full ffmpeg path
command = ['ffmpeg',  # on Linux the path is not needed
           '-y', '-an',
           '-f', 'rawvideo',
           '-vcodec', 'rawvideo',
           '-pix_fmt', 'bgr24',  # pixel format of the OpenCV frames
           '-s', "{}x{}".format(width, height),
           '-r', str(fps),
           '-i', '-',  # read frames from stdin
           '-c:v', 'libx264',  # video codec
           '-pix_fmt', 'yuv420p',
           '-preset', 'ultrafast',
           '-f', 'rtsp',  # flv rtsp
           '-rtsp_transport', 'tcp',  # push over TCP; this line is required on Linux
           push_url]  # rtsp rtmp

pipe = subprocess.Popen(command, shell=False, stdin=subprocess.PIPE)


def frame_handler(frame):
    # Blur the frame with a 5x5 averaging kernel
    kernel = np.ones((5, 5), np.float32) / 25
    dst = cv2.filter2D(frame, -1, kernel)
    return dst


process_this_frame = True

while True:  # True or video_capture.isOpened():
    # Grab a single frame of video
    ret, frame = video_capture.read()
    if not ret:
        # No frame could be read (stream ended or connection lost)
        break

    # Handle the captured frame
    start = time.time()
    frame = frame_handler(frame)

    # Display the resulting image. On Linux, keep this line commented out.
    # cv2.imshow('Video', frame)

    # Hit 'q' on the keyboard to quit!
    # delay is in ms (100 ms = 0.1 s). If it is too long, e.g. 1000 ms, the push fails.
    if cv2.waitKey(delay=1) & 0xFF == ord('q'):
        break

    # tobytes() replaces the deprecated frame.tostring()
    pipe.stdin.write(frame.tobytes())

video_capture.release()
cv2.destroyAllWindows()
pipe.terminate()

6.2 Run the test

python3 pyopencv_ffmpeg.py

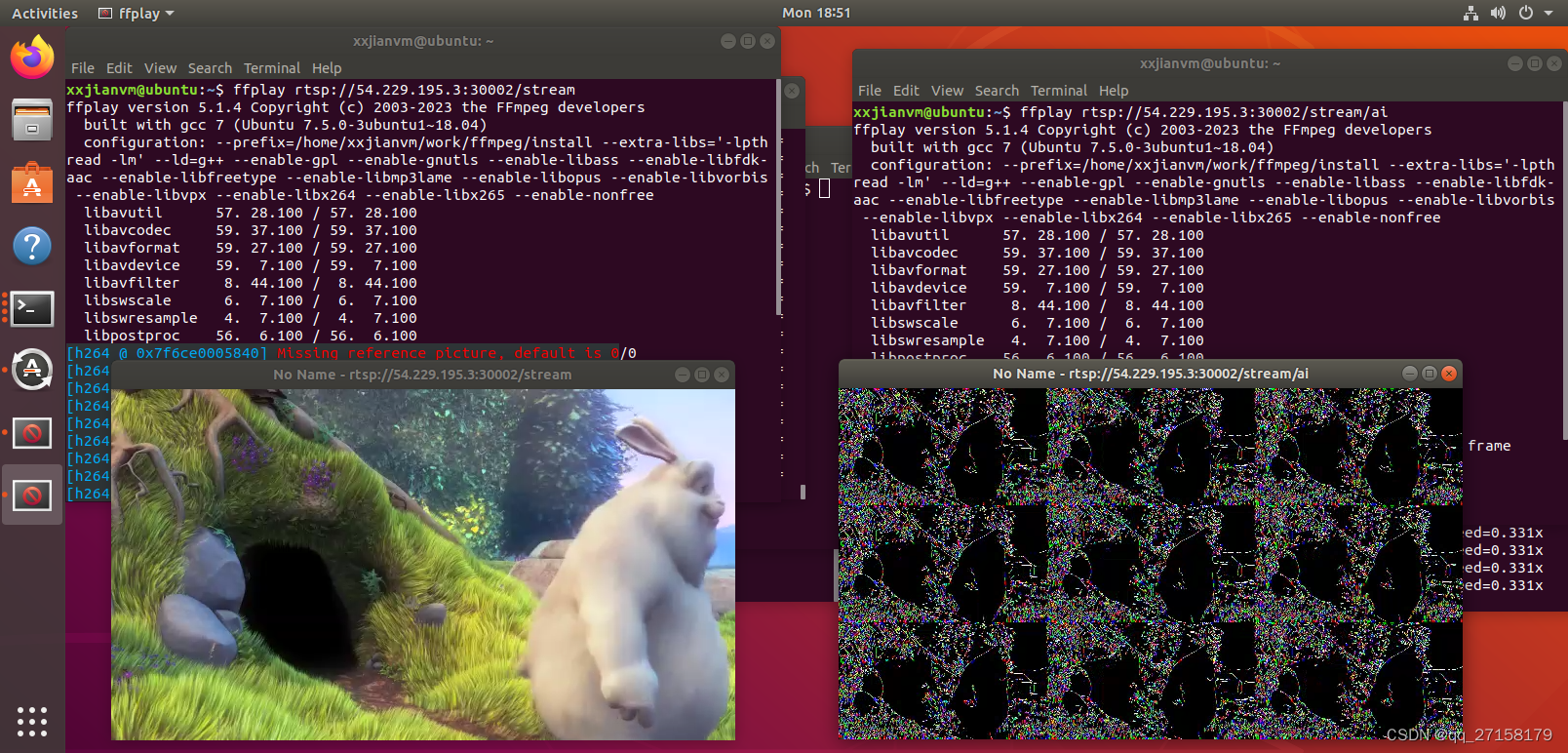

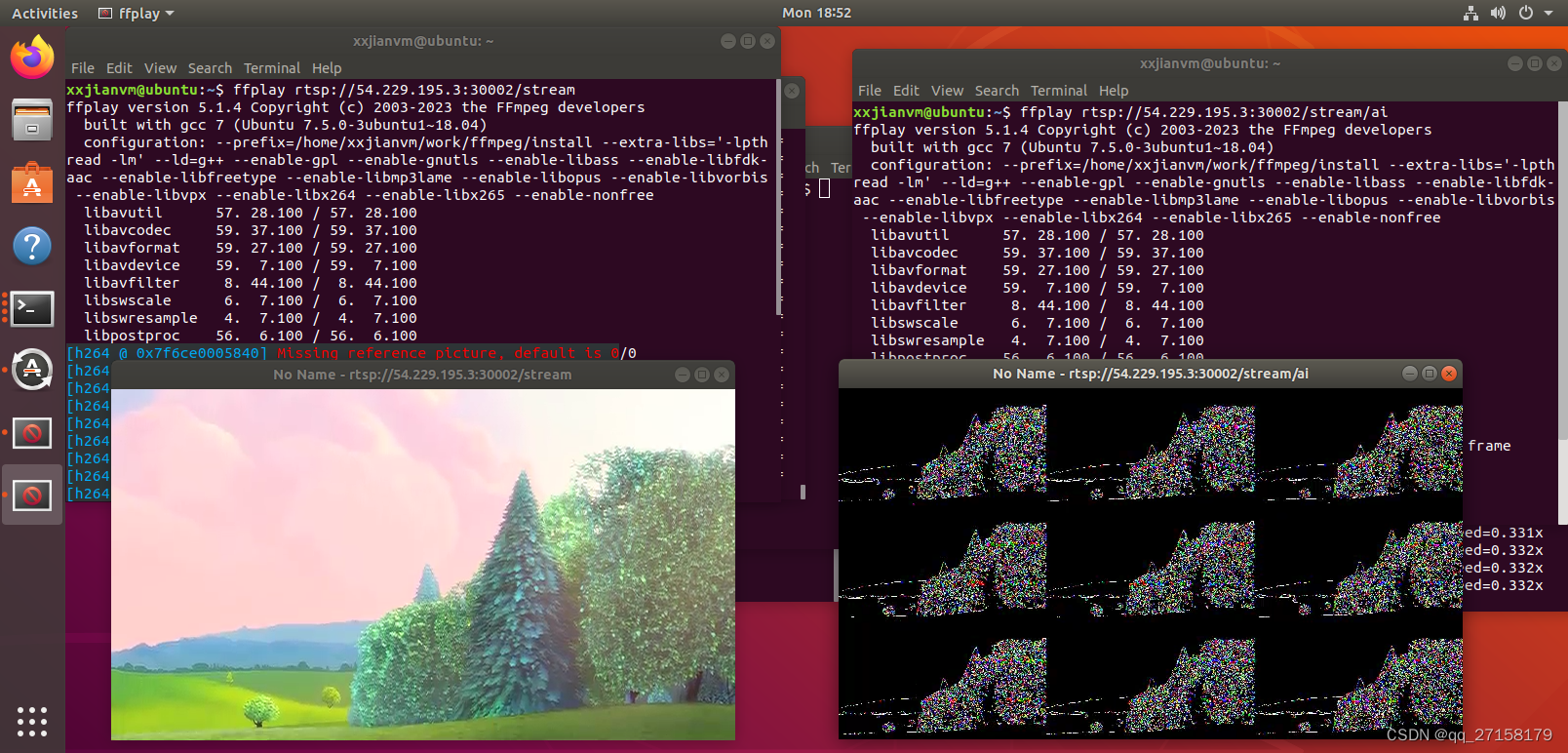

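7. Observing the result

Open two terminals and run one of the following commands in each:

ffplay rtsp://54.229.195.3:30002/stream

ffplay rtsp://54.229.195.3:30002/stream/ai

The machine used here has limited processing power, so adding too many operations to the processing function makes the push fail. Also, the stream pushed through OpenCV has no audio, because only video frames are piped to ffmpeg and the command passes -an.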
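To get a feel for why heavy processing breaks the push, it helps to look at the per-frame time budget: at a given frame rate, reading, processing and writing a frame together have to finish within 1000 / fps milliseconds, otherwise the pipe to ffmpeg falls behind the source. The sketch below is a back-of-the-envelope estimate only. The 1280x720 resolution and 25 fps are assumed values for illustration, not measurements from this setup, and the function names are hypothetical.

```python
def raw_frame_bytes(width, height, channels=3):
    """Size of one uncompressed bgr24 frame written to the ffmpeg pipe."""
    return width * height * channels


def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, to keep up with the source."""
    return 1000.0 / fps


def keeps_up(handler_ms, fps):
    """True if a handler taking handler_ms per frame fits within the frame budget."""
    return handler_ms <= frame_budget_ms(fps)


# Assumed example values: a 1280x720 stream at 25 fps.
print(raw_frame_bytes(1280, 720), "bytes per frame")
print(frame_budget_ms(25), "ms per frame")
print(keeps_up(30, 25), keeps_up(50, 25))
```

The `start = time.time()` line already present in the script could be paired with a second timestamp after `frame_handler` to measure the real per-frame cost and compare it against this budget.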