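I. Testing Date Regular Expressions

- There are many ways to write a regular expression that matches HH:mm:ss; two are listed here (the first both without and with anchors).

. (dot) matches any single character

^ anchors the match at the start of the input, e.g. ^[a-z] requires the input to begin with a letter from a to z; $ anchors it at the end

: is an exact match, the character must be a colon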

([0-1]?[0-9]|2[0-3]).([0-5][0-9]).([0-5][0-9])

^([0-1]?[0-9]|2[0-3]).([0-5][0-9]).([0-5][0-9])$

([0-1]?[0-9]|2[0-3]):([0-5][0-9]):([0-5][0-9])
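To make the difference between the dot-separated and colon-separated variants concrete, here is a minimal sketch. The class name `TimeRegexCheck` and its two helper methods are made up for illustration; only the patterns come from the list above (both shown in their anchored form).

```java
import java.util.regex.Pattern;

// Illustrative helper; the names are hypothetical and not from the original post.
final class TimeRegexCheck {
    // "." separators: any single character is accepted between the fields.
    static final Pattern LOOSE =
            Pattern.compile("^([0-1]?[0-9]|2[0-3]).([0-5][0-9]).([0-5][0-9])$");

    // ":" separators: only a literal colon is accepted between the fields.
    static final Pattern STRICT =
            Pattern.compile("^([0-1]?[0-9]|2[0-3]):([0-5][0-9]):([0-5][0-9])$");

    static boolean isLooseTime(String s) {
        return LOOSE.matcher(s).matches();
    }

    static boolean isStrictTime(String s) {
        return STRICT.matcher(s).matches();
    }

    public static void main(String[] args) {
        String[] samples = {"23:59:59", "23-59-59", "9:05:00", "24:00:00"};
        for (String s : samples) {
            System.out.println(s + " loose=" + isLooseTime(s) + " strict=" + isStrictTime(s));
        }
    }
}
```

Two things are worth noticing when running it: the dot variant also accepts inputs such as 23-59-59, and because of `[0-1]?` both variants accept a single-digit hour such as 9:05:00. If the hour must always be two digits, dropping the `?` would be one way to tighten the pattern.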

public class RegexDemo {public static void main(String[] args) {String str = "2023-10-10 00:00:00";String regex1 ="^\\d{4}-\\d{2}-\\d{2}.([0-1]?[0-9]|2[0-3]).([0-5][0-9]).([0-5][0-9])$";Pattern p = Pattern.compile(regex1);Matcher m = p.matcher(str);boolean flag = m.matches();System.out.println("yyyy-MM-dd HH:mm:ss格式正则表达式测试" + flag);String regex2 ="^\\d{4}-\\d{2}-\\d{2}.\\d{2}:\\d{2}:\\d{2}$";Pattern p2 = Pattern.compile(regex2);Matcher m2 = p2.matcher(str);boolean flag2 = m2.matches();System.out.println("yyyy-MM-dd HH:mm:ss忽略时分秒" + flag2);String regex3 ="^2023-10-10 \\d{2}:\\d{2}:\\d{2}$";Pattern p3 = Pattern.compile(regex3);Matcher m3 = p3.matcher(str);boolean flag3 = m3.matches();System.out.println("yyyy-MM-dd HH:mm:ss忽略时分秒精确匹配" + flag3);}

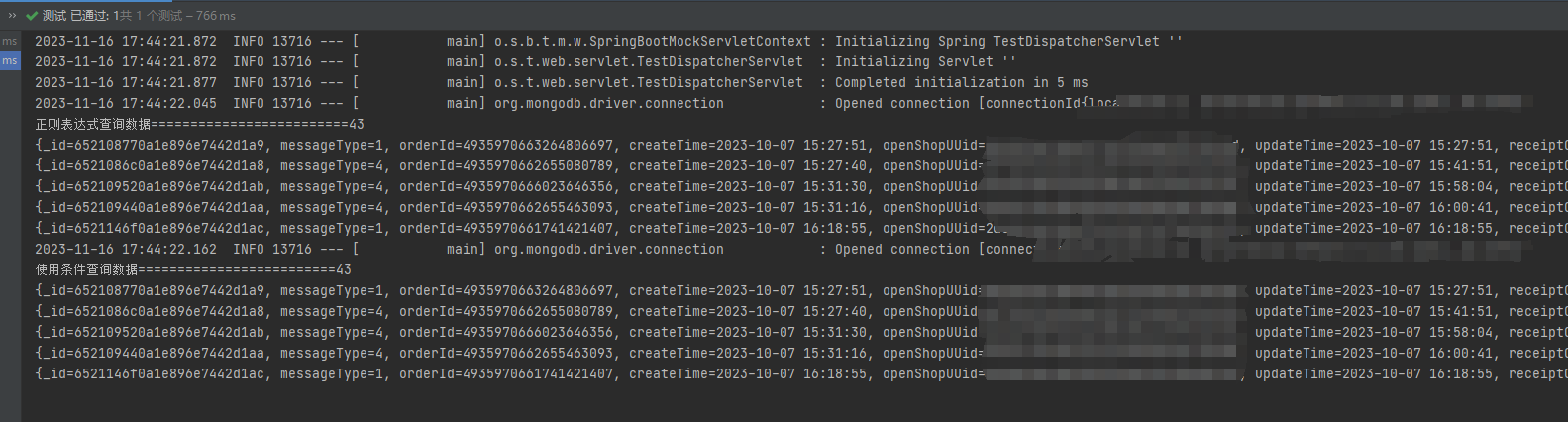

}二、Mongo正则查询与条件查询对比

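    import java.util.List;
    import java.util.Map;
    import java.util.regex.Pattern;
    import java.util.stream.Collectors;
    import org.springframework.data.domain.Sort;
    import org.springframework.data.mongodb.core.MongoTemplate;
    import org.springframework.data.mongodb.core.query.Criteria;
    import org.springframework.data.mongodb.core.query.Query;
    // @Resource and @Test need imports too; their packages depend on the JDK and JUnit versions in use.

    // Members of a Spring test class (the enclosing class is not shown here).
    @Resource
    private MongoTemplate mongoTemplate;

    @Test
    public void compareRegexToEquals() {
        // Regex query: every updateTime on 2023-10-07, whatever the time of day.
        String str = "^" + "2023-10-07" + ".\\d{2}:\\d{2}:\\d{2}$";
        Pattern pattern = Pattern.compile(str, Pattern.CASE_INSENSITIVE);
        List<Map> maps = mongoTemplate.find(
                new Query(Criteria.where("updateTime").regex(pattern))
                        .with(Sort.by(Sort.Order.asc("updateTime"))),
                Map.class, "tbopen_shop_uuid");
        System.out.println("Regex query result count=========================" + maps.size());
        // Print the first five rows of the regex query.
        maps = maps.stream().limit(5).collect(Collectors.toList());
        maps.forEach(System.out::println);

        // Conditional query: the same day expressed as a range on the string field.
        String startTime = "2023-10-07 00:00:00";
        String endTime = "2023-10-07 23:59:59";
        List<Map> shop = mongoTemplate.find(
                new Query(Criteria.where("updateTime").gte(startTime).lte(endTime))
                        .with(Sort.by(Sort.Order.asc("updateTime"))),
                Map.class, "tbopen_shop_uuid");
        System.out.println("Conditional query result count=========================" + shop.size());
        // Print the first five rows of the conditional query.
        shop = shop.stream().limit(5).collect(Collectors.toList());
        shop.forEach(System.out::println);
    }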

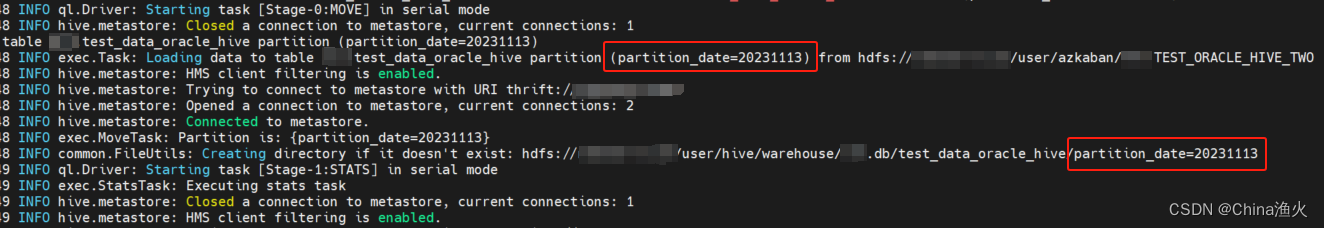

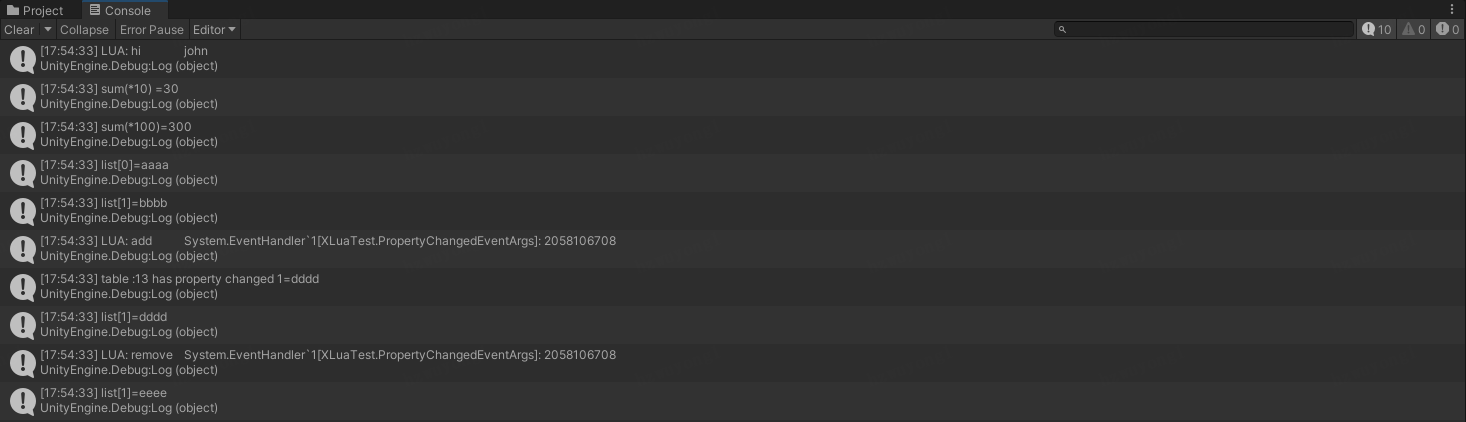

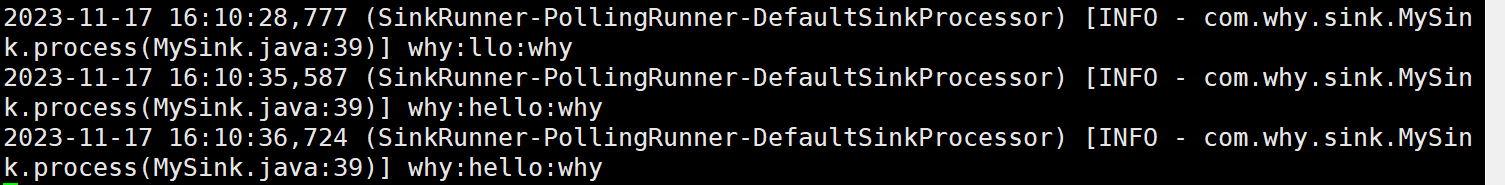

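Here I queried just one day of test data. Comparing the results showed that the two queries return the same data; I took the first five records of each for the comparison.

It turns out regular expressions are pretty powerful!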
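The agreement between the two queries can be reproduced without a database. The sketch below is an in-memory analogy, not the Mongo query itself: it assumes updateTime is stored as a fixed-width yyyy-MM-dd HH:mm:ss string, so plain string comparison orders values the same way as time order. The class and method names are hypothetical, and `Pattern.quote` is an addition of mine to keep the date literal.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

// In-memory analogy of the two queries; names are hypothetical.
final class DayFilterComparison {
    // Keep the values whose whole string matches "<day><any char>HH:mm:ss".
    static List<String> byRegex(List<String> times, String day) {
        Pattern p = Pattern.compile("^" + Pattern.quote(day) + ".\\d{2}:\\d{2}:\\d{2}$");
        return times.stream()
                .filter(t -> p.matcher(t).matches())
                .sorted()
                .collect(Collectors.toList());
    }

    // Keep the values between "<day> 00:00:00" and "<day> 23:59:59", compared as strings.
    static List<String> byRange(List<String> times, String day) {
        String start = day + " 00:00:00";
        String end = day + " 23:59:59";
        return times.stream()
                .filter(t -> t.compareTo(start) >= 0 && t.compareTo(end) <= 0)
                .sorted()
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> times = Arrays.asList(
                "2023-10-06 23:59:59",
                "2023-10-07 00:00:00",
                "2023-10-07 23:59:59",
                "2023-10-08 00:00:00");
        System.out.println(byRegex(times, "2023-10-07"));
        System.out.println(byRange(times, "2023-10-07"));
    }
}
```

The two filters agree only while every value follows the same format. A value written with a different separator between date and time would still pass the regex filter, since the dot accepts any character, but could fall outside the string range.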

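From here, concatenating each day's date into the pattern string, as was done for 2023-10-07, is enough to implement per-day grouped statistics.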
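As a rough sketch of that idea, the code below builds one pattern per day by string concatenation and counts the matching timestamps. It works on an in-memory list so that it runs on its own; the names `DailyRegexCount`, `dayPattern` and `countPerDay` are assumptions for illustration, and the day range is walked with `java.time.LocalDate`.

```java
import java.time.LocalDate;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

// Per-day counting with one concatenated regex per day; names are hypothetical.
final class DailyRegexCount {
    // Same shape as the pattern in the Mongo test: "^<day>.HH:mm:ss$".
    static Pattern dayPattern(String day) {
        return Pattern.compile("^" + Pattern.quote(day) + ".\\d{2}:\\d{2}:\\d{2}$");
    }

    // Count the timestamps for each day from 'from' to 'to' (both inclusive).
    static Map<String, Long> countPerDay(List<String> times, LocalDate from, LocalDate to) {
        Map<String, Long> counts = new LinkedHashMap<>(); // keeps the days in order
        for (LocalDate d = from; !d.isAfter(to); d = d.plusDays(1)) {
            String day = d.toString(); // ISO format, yyyy-MM-dd
            Pattern p = dayPattern(day);
            long n = times.stream().filter(t -> p.matcher(t).matches()).count();
            counts.put(day, n);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> times = Arrays.asList(
                "2023-10-06 08:00:00",
                "2023-10-07 00:00:00",
                "2023-10-07 12:30:00");
        System.out.println(countPerDay(times,
                LocalDate.parse("2023-10-06"), LocalDate.parse("2023-10-08")));
    }
}
```

Against MongoDB, each day's pattern would presumably be passed to `Criteria.where("updateTime").regex(...)` as in the test above, with the stream filter replaced by a query per day.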