1. k8s1.28.x 的概述

1.1 k8s 1.28.x 更新

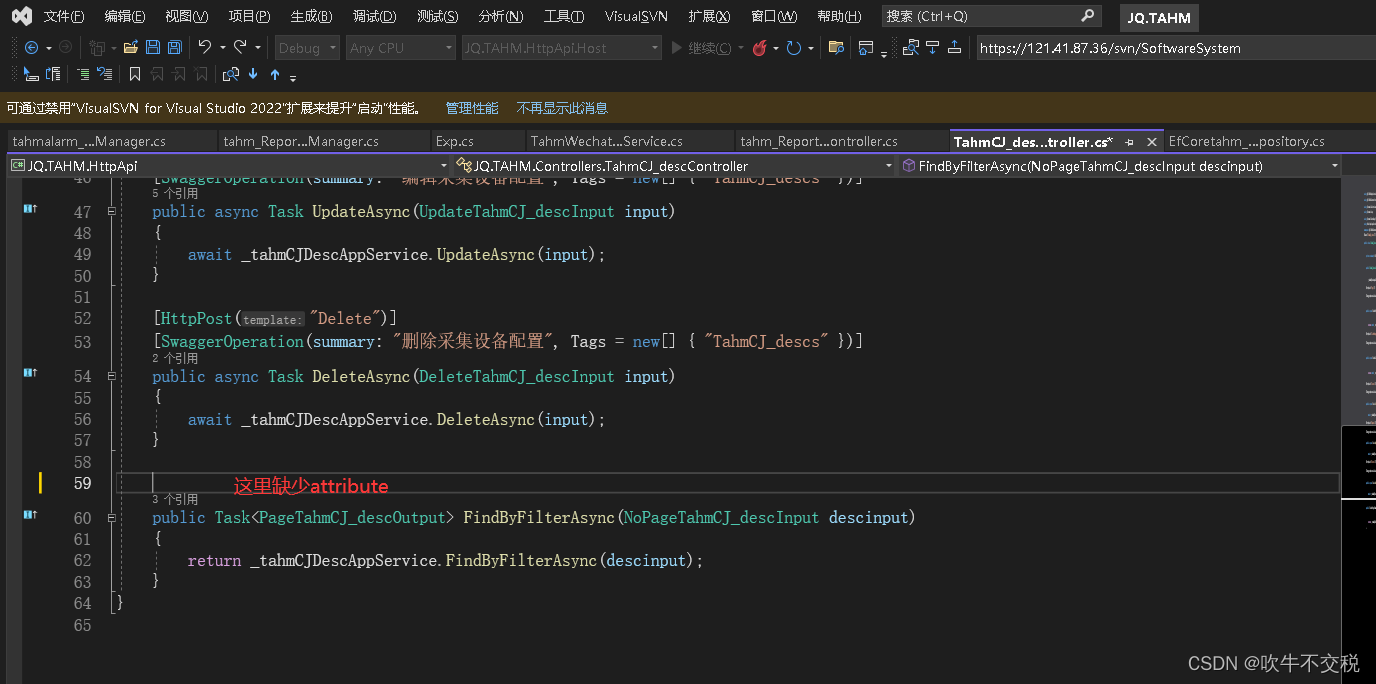

Kubernetes v1.28 是 2023 年的第二个大版本更新,包含了 46 项主要的更新。 而今年发布的第一个版本 v1.27 有近 60 项,所以可以看出来,在发布节奏调整后, 每个 Kubernetes 版本中都会包含很多新的变化。 其中 20 个增强功能正在进入 Alpha 阶段,14 个将升级到 Beta 阶段,而另外 12 个则将升级到稳定版。 可以看出来很多都是新特性。 更多内容查看k8s 更新介绍 https://zhuanlan.zhihu.com/p/649838674 logo 如下:

2. 系统准备

操作系统:OpenEuler23.03x64

主机名:cat /etc/hosts

---

172.16.10.51 flyfish51

172.16.10.52 flyfish52

172.16.10.53 flyfish53

172.16.10.54 flyfish54

172.16.10.55 flyfish55

----注: 本次安装前三台,flyfish51 作为 master flyfish52/flyfish53 作为worker系统关闭selinux / 关闭firewalld 清空iptables防火墙规则系统初始化

#修改时区,同步时间

yum install chrond -y

vim /etc/chrony.conf

-----

ntpdate ntp1.aliyun.com iburst

-----

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' > /etc/timezone#关闭防火墙,selinux

systemctl stop firewalld

systemctl disable firewalld

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0## 关闭swapswapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab#系统优化

cat > /etc/sysctl.d/k8s_better.conf << EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

modprobe br_netfilter

lsmod |grep conntrack

modprobe ip_conntrack

sysctl -p /etc/sysctl.d/k8s_better.conf#确保每台机器的uuid不一致,如果是克隆机器,修改网卡配置文件删除uuid那一行

cat /sys/class/dmi/id/product_uuid所有节点安装ipv4转发支持

###系统依赖包

yum -y install wget jq psmisc vim net-tools nfs-utils socat telnet device-mapper-persistent-data lvm2 git network-scripts tar curl -yyum install -y conntrack ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git### 开启ipvs 转发

modprobe br_netfilter cat > /etc/sysconfig/modules/ipvs.modules << EOF #!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF chmod 755 /etc/sysconfig/modules/ipvs.modules bash /etc/sysconfig/modules/ipvs.modules lsmod | grep -e ip_vs -e nf_conntrack全部节点安装 containerd

创建 /etc/modules-load.d/containerd.conf 配置文件:cat << EOF > /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOFmodprobe overlay

modprobe br_netfilter获取阿里云YUM源

vim /etc/yum.repos.d/docker-ce.repo

------------------

[docker-ce-stable]

name=Docker CE Stable - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/9/$basearch/stable

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

----------------------yum makecache 下载安装:yum install -y containerd.io生成containerd的配置文件

mkdir /etc/containerd -p

生成配置文件

containerd config default > /etc/containerd/config.toml

编辑配置文件

vim /etc/containerd/config.toml

-----

SystemdCgroup = false 改为 SystemdCgroup = true# sandbox_image = "k8s.gcr.io/pause:3.6"

改为:

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"------# systemctl enable containerd

Created symlink from /etc/systemd/system/multi-user.target.wants/containerd.service to /usr/lib/systemd/system/containerd.service.

# systemctl start containerd

# ctr images ls 安装 kubernetes 1.28.2

1.添加阿里云YUM软件源cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOFyum makecache ## 查看所有的可用版本

yum list kubelet --showduplicates | sort -r |grep 1.28 2.2 安装 kubeadm kubectl kubelet

目前最新版本是1.28.1,我们直接上最新版yum install -y kubectl kubelet kubeadm为了实现docker使用的cgroupdriver与kubelet使用的cgroup的一致性,建议修改如下文件内容。# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"设置kubelet为开机自启动即可,由于没有生成配置文件,集群初始化后自动启动

# systemctl enable kubelet准备k8s1.28.1 所需要的镜像kubeadm config images list --kubernetes-version=v1.28.1

## 使用以下命令从阿里云仓库拉取镜像

# kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers集群初始化

使用kubeadm init命令初始化在flyfish51上执行,报错请看k8s报错汇总kubeadm init --kubernetes-version=v1.28.1 --pod-network-cidr=10.224.0.0/16 --apiserver-advertise-address=172.16.10.51 --image-repository registry.aliyuncs.com/google_containers--apiserver-advertise-address 集群通告地址

--image-repository 由于默认拉取镜像地址k8s.gcr.io国内无法访问,这里指定阿里云镜像仓库地址

--kubernetes-version K8s版本,与上面安装的一致

--service-cidr 集群内部虚拟网络,Pod统一访问入口

--pod-network-cidr Pod网络,,与下面部署的CNI网络组件yaml中保持一致Your Kubernetes control-plane has initialized successfully!To start using your cluster, you need to run the following as a regular user:mkdir -p $HOME/.kubesudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/configsudo chown $(id -u):$(id -g) $HOME/.kube/configAlternatively, if you are the root user, you can run:export KUBECONFIG=/etc/kubernetes/admin.confYou should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:https://kubernetes.io/docs/concepts/cluster-administration/addons/Then you can join any number of worker nodes by running the following on each as root:kubeadm join 172.16.10.51:6443 --token l02pkw.2ccruhqj9qelkv3p \--discovery-token-ca-cert-hash sha256:a960721267396dd59a38d67a48be7e9afb42e6730ef666926ea535ccbb65591b

flyfish52/flyfish53 执行

kubeadm join 172.16.10.51:6443 --token l02pkw.2ccruhqj9qelkv3p \--discovery-token-ca-cert-hash sha256:a960721267396dd59a38d67a48be7e9afb42e6730ef666926ea535ccbb65591b# 查看集群节点:

kubectl get node 部署网络插件

网络组件有很多种,只需要部署其中一个即可,推荐Calico。Calico是一个纯三层的数据中心网络方案,Calico支持广泛的平台,包括Kubernetes、OpenStack等。Calico 在每一个计算节点利用 Linux Kernel 实现了一个高效的虚拟路由器( vRouter) 来负责数据转发,而每个 vRouter 通过 BGP 协议负责把自己上运行的 workload 的路由信息向整个 Calico 网络内传播。此外,Calico 项目还实现了 Kubernetes 网络策略,提供ACL功能。1.下载Calicowget https://docs.tigera.io/archive/v3.25/manifests/calico.yamlvim calico.yaml

...

- name: CALICO_IPV4POOL_CIDRvalue: "10.244.0.0/16"

...kubectl apply -f calico.yaml下载:ctr -n k8s.io i pull -k docker.io/calico/cni:v3.25.0

ctr -n k8s.io i pull -k docker.io/calico/node:v3.25.0

ctr -n k8s.io i pull -k docker.io/calico/kube-controllers:v3.25.0导出:ctr -n k8s.io i export cni.tar.gz docker.io/calico/cni:v3.25.0

ctr -n k8s.io i export kube-controllers.tar.gz docker.io/calico/kube-controllers:v3.25.0

ctr -n k8s.io i export node.tar.gz docker.io/calico/node:v3.25.0导入:ctr -n k8s.io i import cni.tar.gz

ctr -n k8s.io i import kube-controllers.tar.gz

ctr -n k8s.io i import node.tar.gz

kubectl get node

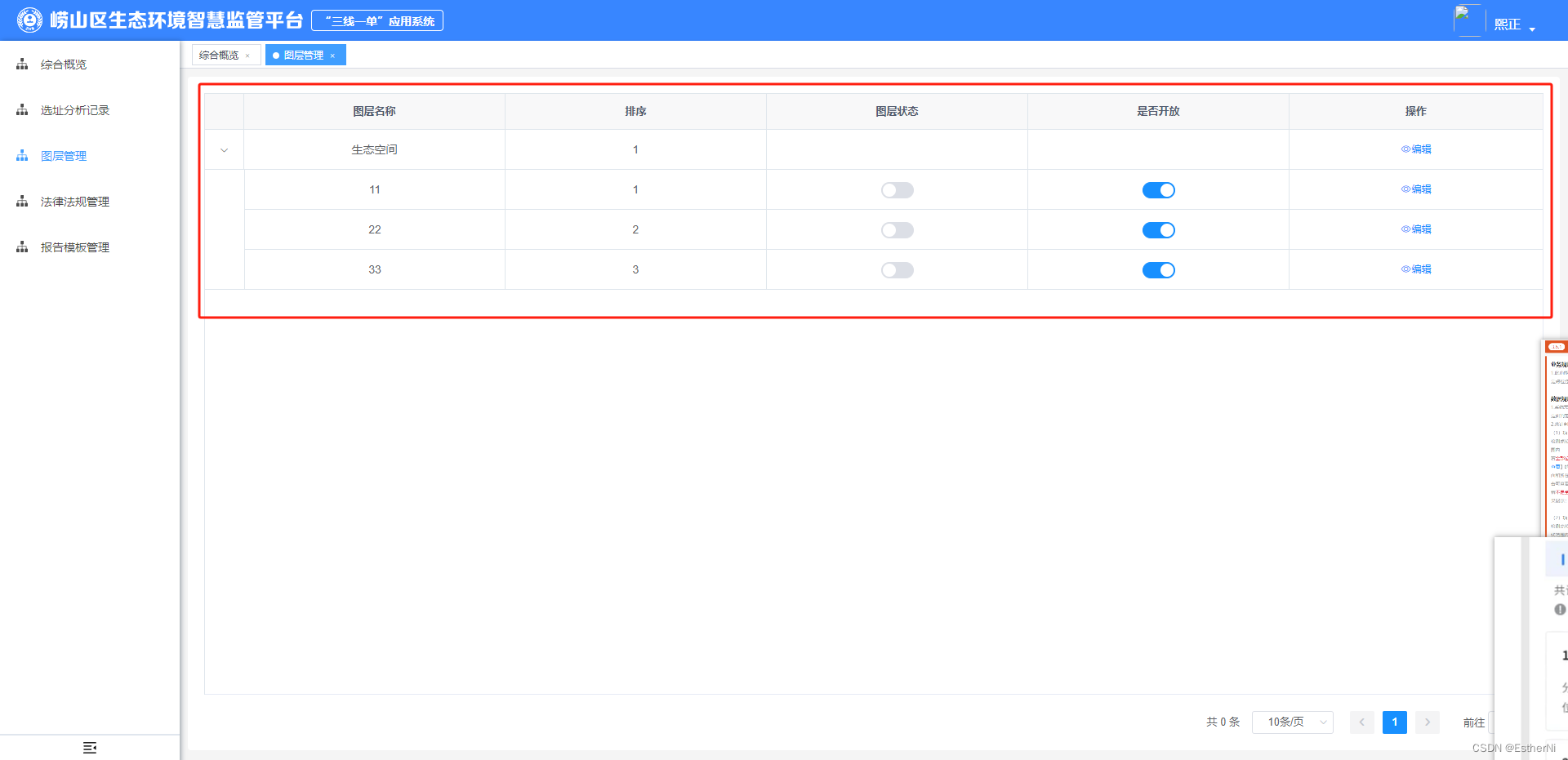

kubectl get node -o wide 2.3 部署dashboard

1.下载yaml文件官网下载地址目前最新版本为v2.7.0wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yamlvim recommended.yaml

----

kind: Service

apiVersion: v1

metadata:labels:k8s-app: kubernetes-dashboardname: kubernetes-dashboardnamespace: kubernetes-dashboard

spec:ports:- port: 443targetPort: 8443nodePort: 30001type: NodePort selector:k8s-app: kubernetes-dashboard

----

kubectl apply -f recommended.yamlkubectl get pods -n kubernetes-dashboard

kubectl get pods,svc -n kubernetes-dashboard创建用户:

wget https://raw.githubusercontent.com/cby-chen/Kubernetes/main/yaml/dashboard-user.yamlkubectl apply -f dashboard-user.yaml

创建token

kubectl -n kubernetes-dashboard create token admin-user转载自: CTO-安装k8s1.28.x