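In my earlier article "Detecting plagiarism with Elasticsearch (Part 1)", I described how to detect plagiarized articles. This is useful in many real-world settings. My own articles on CSDN are frequently quoted or copied, sometimes without any attribution, which is unfair to the author. The approach is equally relevant to many blogging sites. However, some developers may have had trouble getting the code in that article to run. In this article I use a local, self-managed deployment and a Jupyter notebook, so that developers can run the whole example step by step.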

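Installation

Installing Elasticsearch and Kibana

If you have not yet installed Elasticsearch and Kibana, refer to the following articles:

- How to install Elasticsearch on Linux, macOS, and Windows

- Kibana: How to install Kibana from the Elastic Stack on Linux, macOS, and Windows

Choose Elastic Stack 8.x for the installation. During installation, the following information is displayed: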

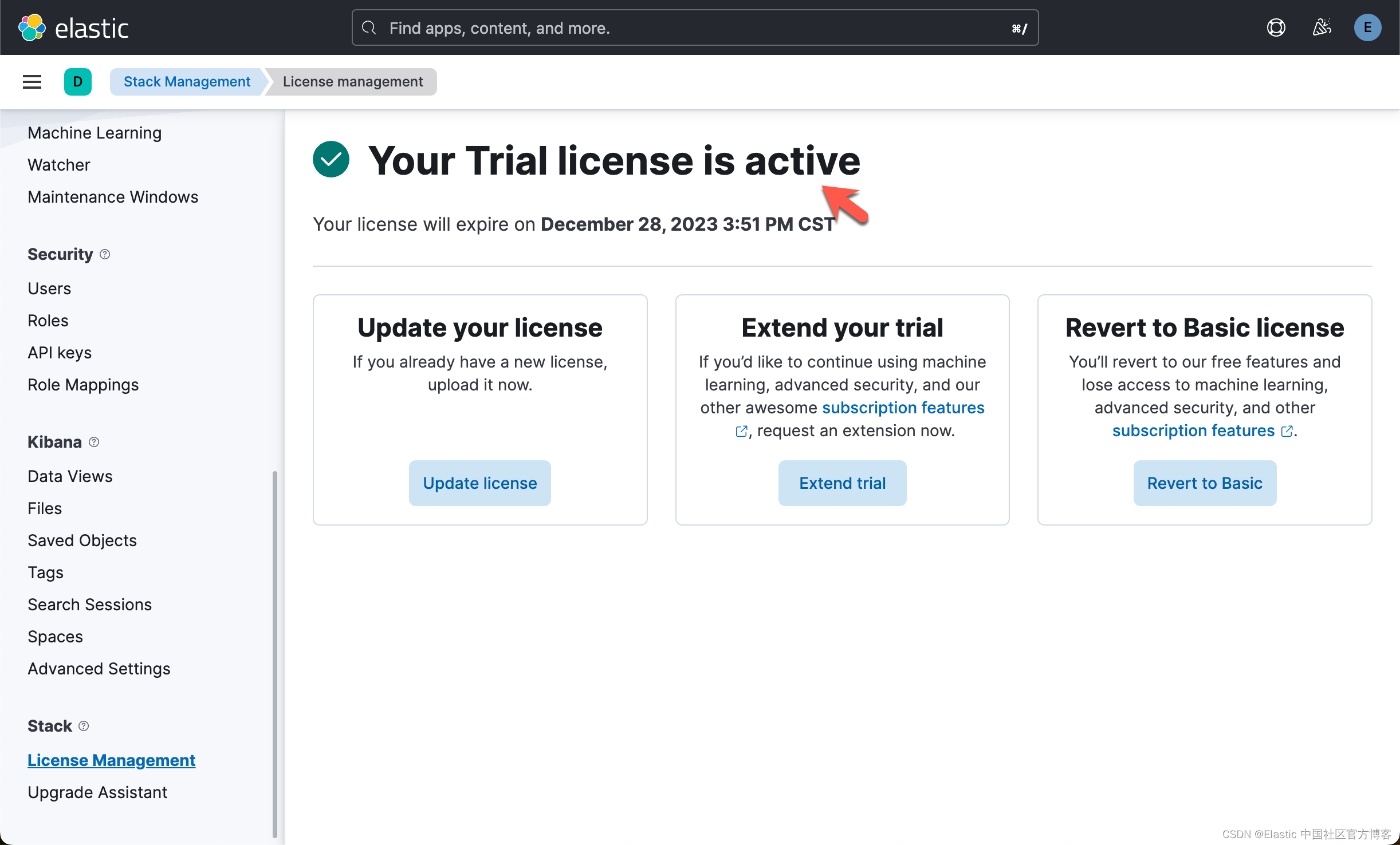

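To upload the vector models, we need a Platinum subscription or an active trial license.

Uploading the models

Note: if you upload the models from the command line as shown here, you do not need to upload them again in the code below and can skip those steps.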

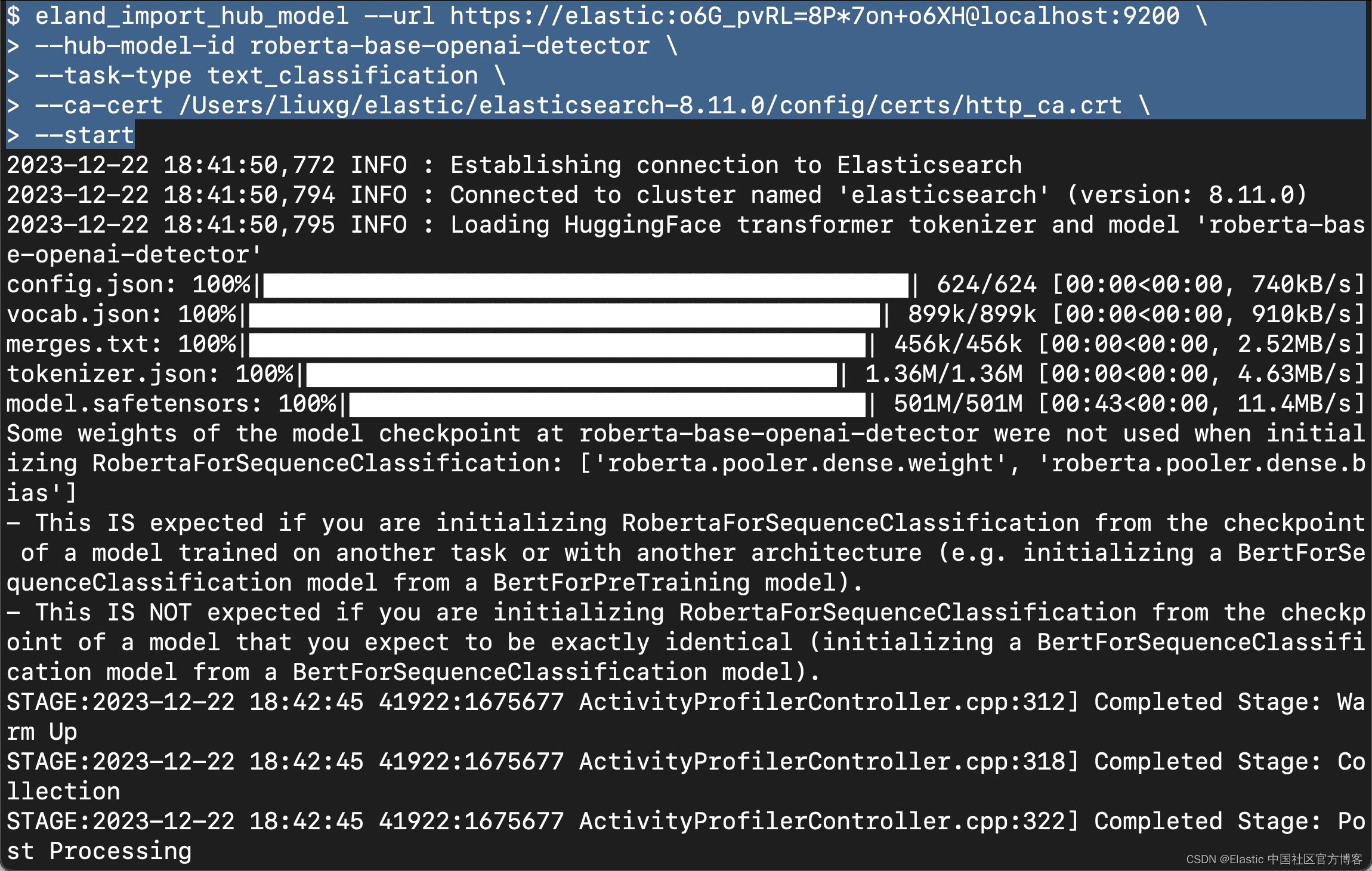

We can refer to the earlier article "Elasticsearch: Using an NLP question-answering model to talk with your favorite Christmas songs". The following command uploads the OpenAI detection model:

eland_import_hub_model --url https://elastic:o6G_pvRL=8P*7on+o6XH@localhost:9200 \
  --hub-model-id roberta-base-openai-detector \
  --task-type text_classification \
  --ca-cert /Users/liuxg/elastic/elasticsearch-8.11.0/config/certs/http_ca.crt \
  --start

In the command above, adjust the certificate path and the Elasticsearch address to match your own configuration.

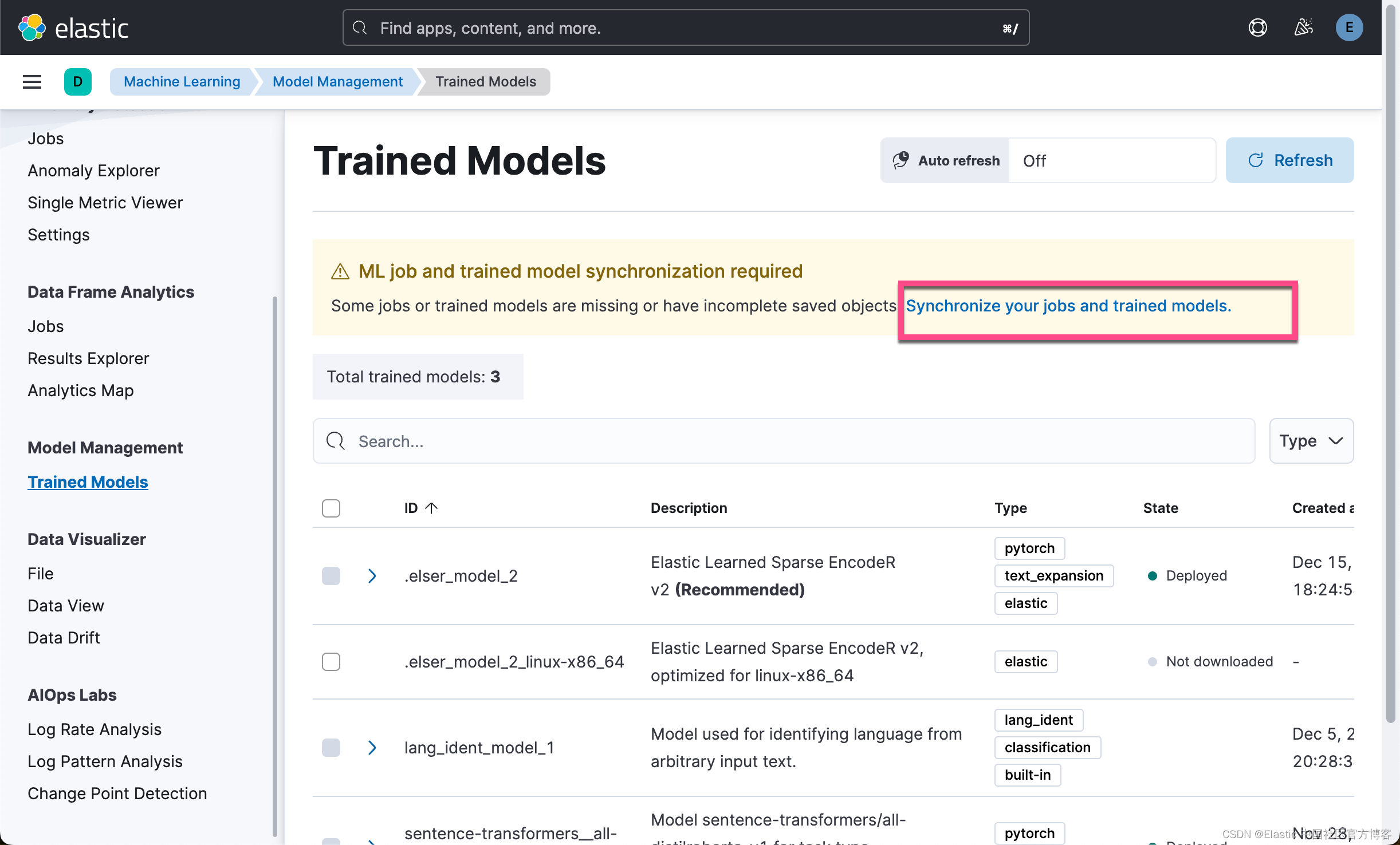

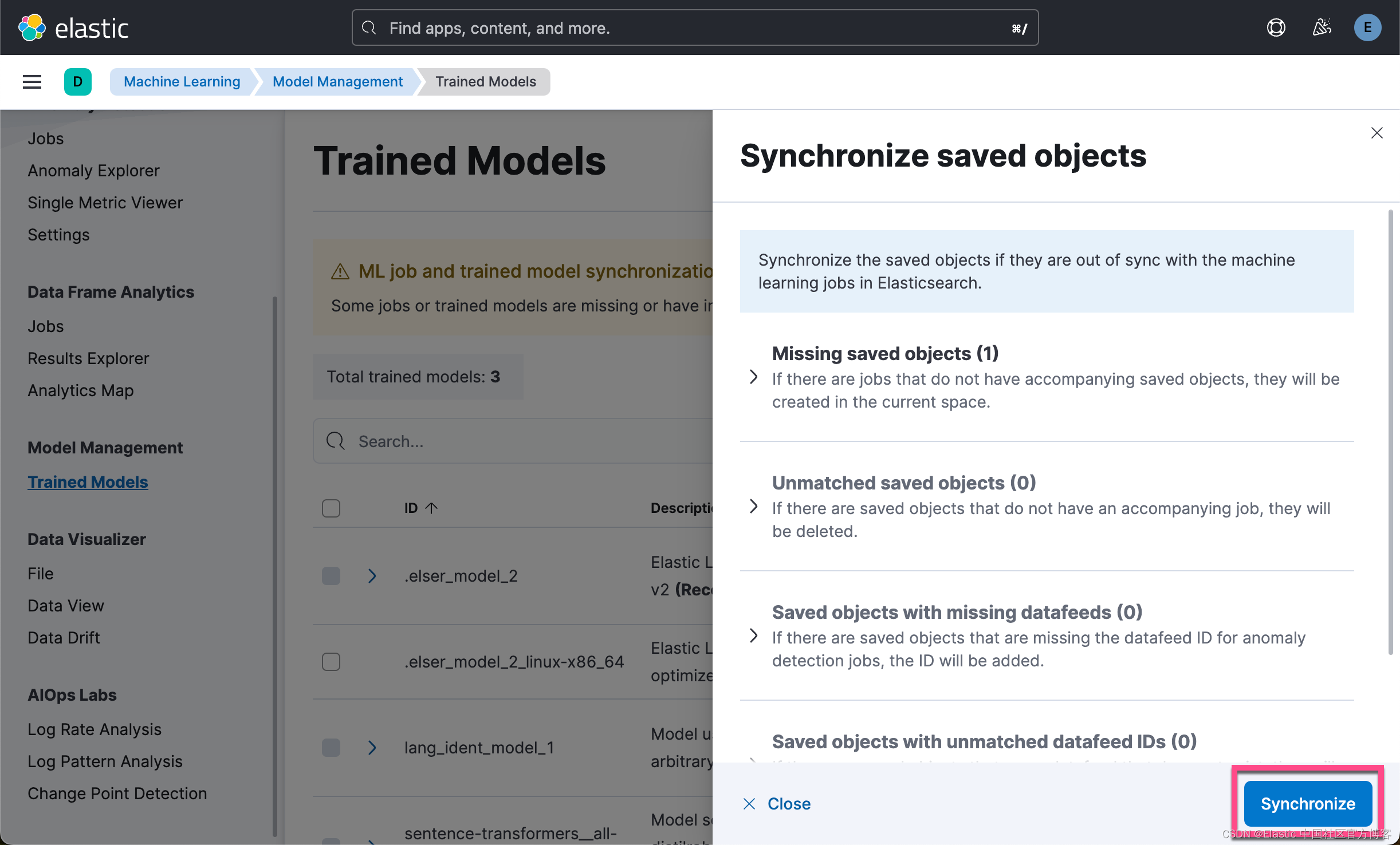

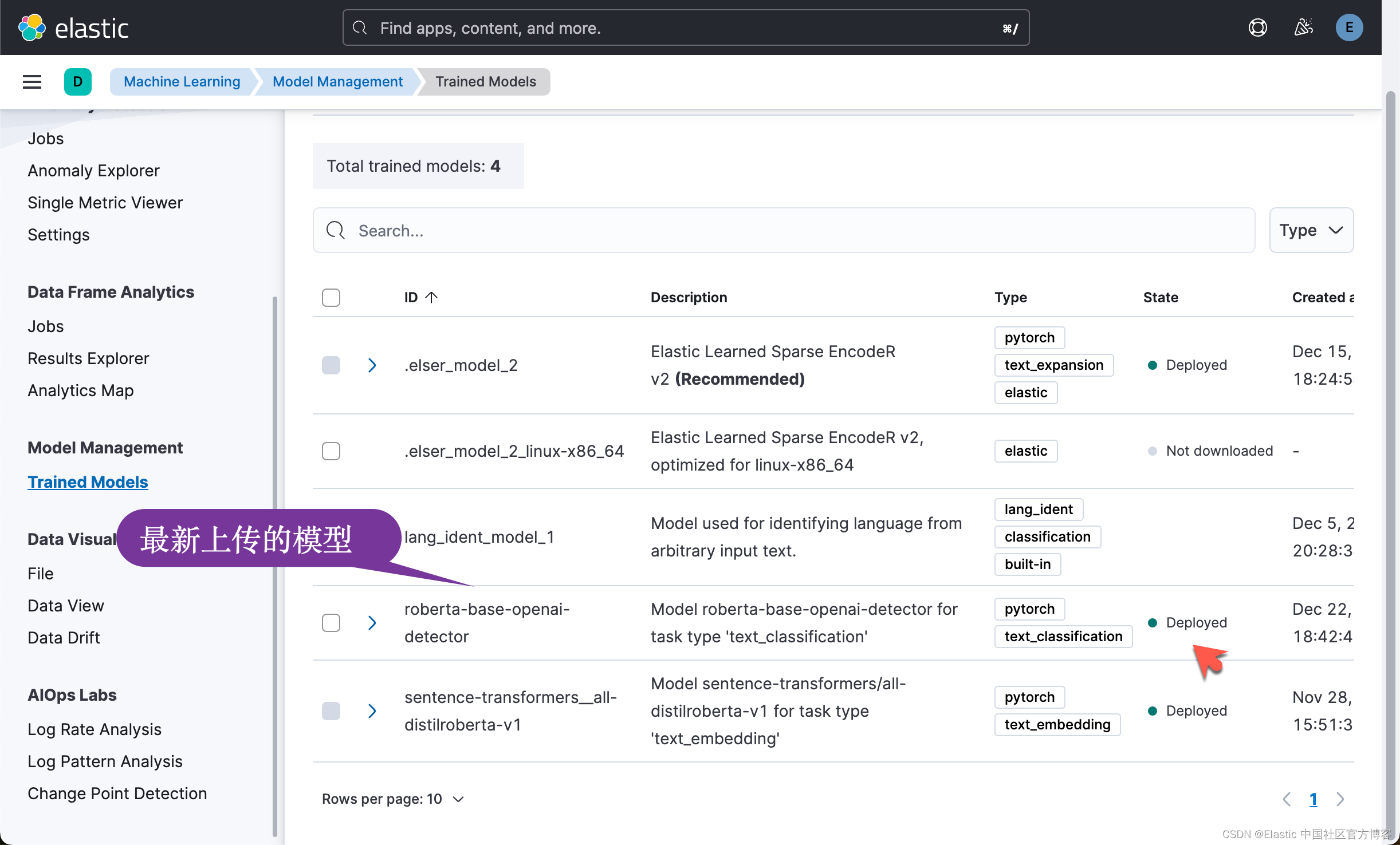

We can view the newly uploaded model in Kibana.

Next, we install the text embedding model in the same way:

eland_import_hub_model --url https://elastic:o6G_pvRL=8P*7on+o6XH@localhost:9200 \
  --hub-model-id sentence-transformers/all-mpnet-base-v2 \
  --task-type text_embedding \
  --ca-cert /Users/liuxg/elastic/elasticsearch-8.11.0/config/certs/http_ca.crt \
  --start

For convenience, the code can be downloaded with:

git clone https://github.com/liu-xiao-guo/elasticsearch-labs

The Jupyter notebook is in the following location:

$ pwd
/Users/liuxg/python/elasticsearch-labs/supporting-blog-content/plagiarism-detection-with-elasticsearch
$ ls
plagiarism_detection_es_self_managed.ipynb

Running the code

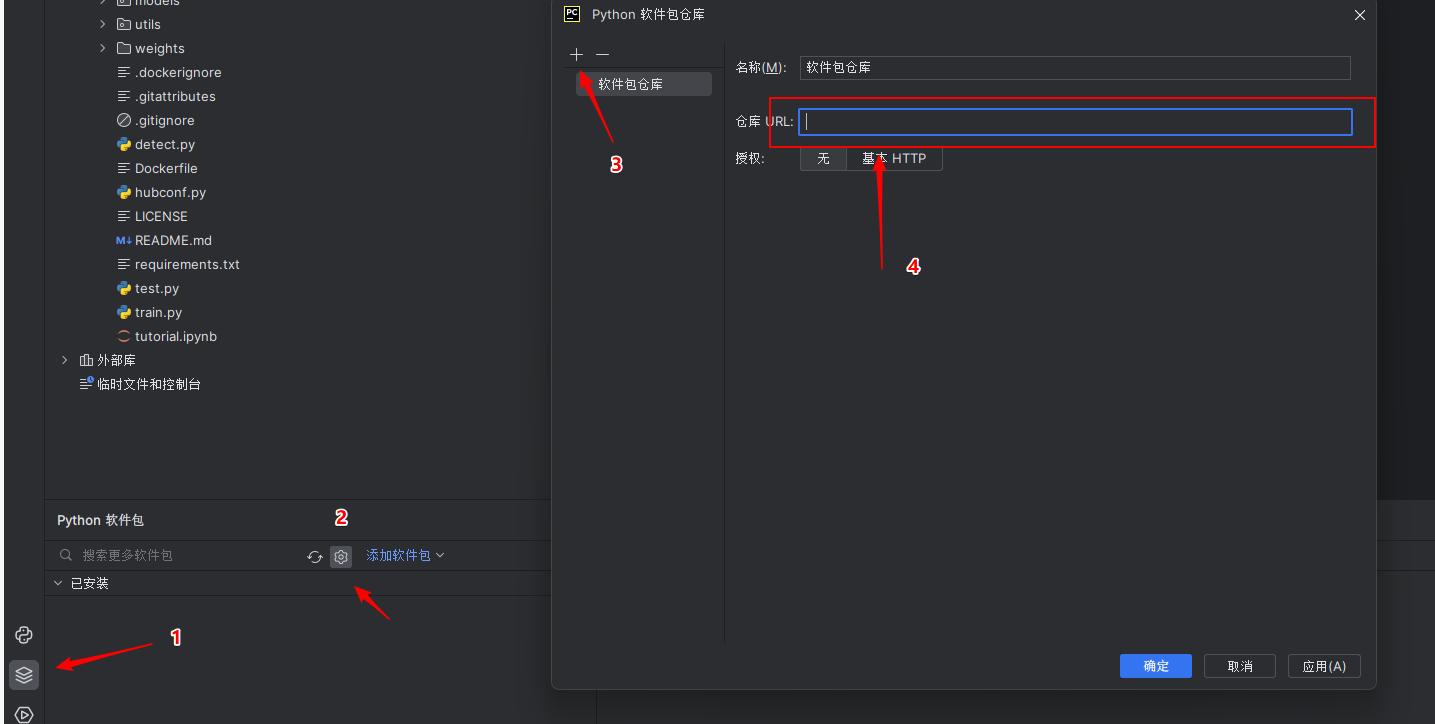

Next, we run the notebook. First, install the required Python packages:

pip3 install elasticsearch==8.11
pip3 -q install eland elasticsearch sentence_transformers transformers torch==2.1.0

Before running the code, set the following environment variables:

export ES_USER="elastic"
export ES_PASSWORD="o6G_pvRL=8P*7on+o6XH"
export ES_ENDPOINT="localhost"

We also need to copy the Elasticsearch certificate into the current directory:

$ pwd
/Users/liuxg/python/elasticsearch-labs/supporting-blog-content/plagiarism-detection-with-elasticsearch
$ cp ~/elastic/elasticsearch-8.11.0/config/certs/http_ca.crt .
$ ls
http_ca.crt plagiarism_detection_es_self_managed.ipynb
plagiarism_detection_es.ipynb

Import the packages:

from elasticsearch import Elasticsearch, helpers
from elasticsearch.client import MlClient
from eland.ml.pytorch import PyTorchModel
from eland.ml.pytorch.transformers import TransformerModel
from urllib.request import urlopen
import json
from pathlib import Path
import os

Connecting to Elasticsearch

elastic_user = os.getenv('ES_USER')
elastic_password = os.getenv('ES_PASSWORD')
elastic_endpoint = os.getenv("ES_ENDPOINT")

url = f"https://{elastic_user}:{elastic_password}@{elastic_endpoint}:9200"
client = Elasticsearch(url, ca_certs="./http_ca.crt", verify_certs=True)

print(client.info())
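The notebook embeds the user name and password directly in the URL. As an alternative, the `Elasticsearch` constructor in the 8.x client also accepts a `basic_auth` tuple, which keeps the password out of the URL so that special characters need no escaping. The sketch below only assembles the constructor arguments from the same three environment variables; the helper name `es_connection_kwargs` is hypothetical and not part of the original notebook.

```python
import os


def es_connection_kwargs(ca_certs: str = "./http_ca.crt", port: int = 9200) -> dict:
    """Build keyword arguments for elasticsearch.Elasticsearch(...) from the environment.

    Hypothetical helper for illustration; not part of the original notebook.
    """
    required = ("ES_USER", "ES_PASSWORD", "ES_ENDPOINT")
    missing = [name for name in required if not os.getenv(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return {
        "hosts": f"https://{os.environ['ES_ENDPOINT']}:{port}",
        # basic_auth keeps the password out of the URL.
        "basic_auth": (os.environ["ES_USER"], os.environ["ES_PASSWORD"]),
        "ca_certs": ca_certs,
        "verify_certs": True,
    }


# Usage (requires the elasticsearch package and a running cluster):
# from elasticsearch import Elasticsearch
# client = Elasticsearch(**es_connection_kwargs())
```

Failing early on a missing variable avoids a harder-to-read connection error later, since `os.getenv` silently returns `None` for unset names.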

Uploading the detector model

hf_model_id = 'roberta-base-openai-detector'
tm = TransformerModel(model_id=hf_model_id, task_type="text_classification")

# Set the model ID as it is named in Elasticsearch
es_model_id = tm.elasticsearch_model_id()

# Download the model from Hugging Face
tmp_path = "models"
Path(tmp_path).mkdir(parents=True, exist_ok=True)
model_path, config, vocab_path = tm.save(tmp_path)

# Load the model into Elasticsearch
ptm = PyTorchModel(client, es_model_id)
ptm.import_model(model_path=model_path, config_path=None, vocab_path=vocab_path, config=config)

# Start the model
s = MlClient.start_trained_model_deployment(client, model_id=es_model_id)
s.body

We can check the model in Kibana:

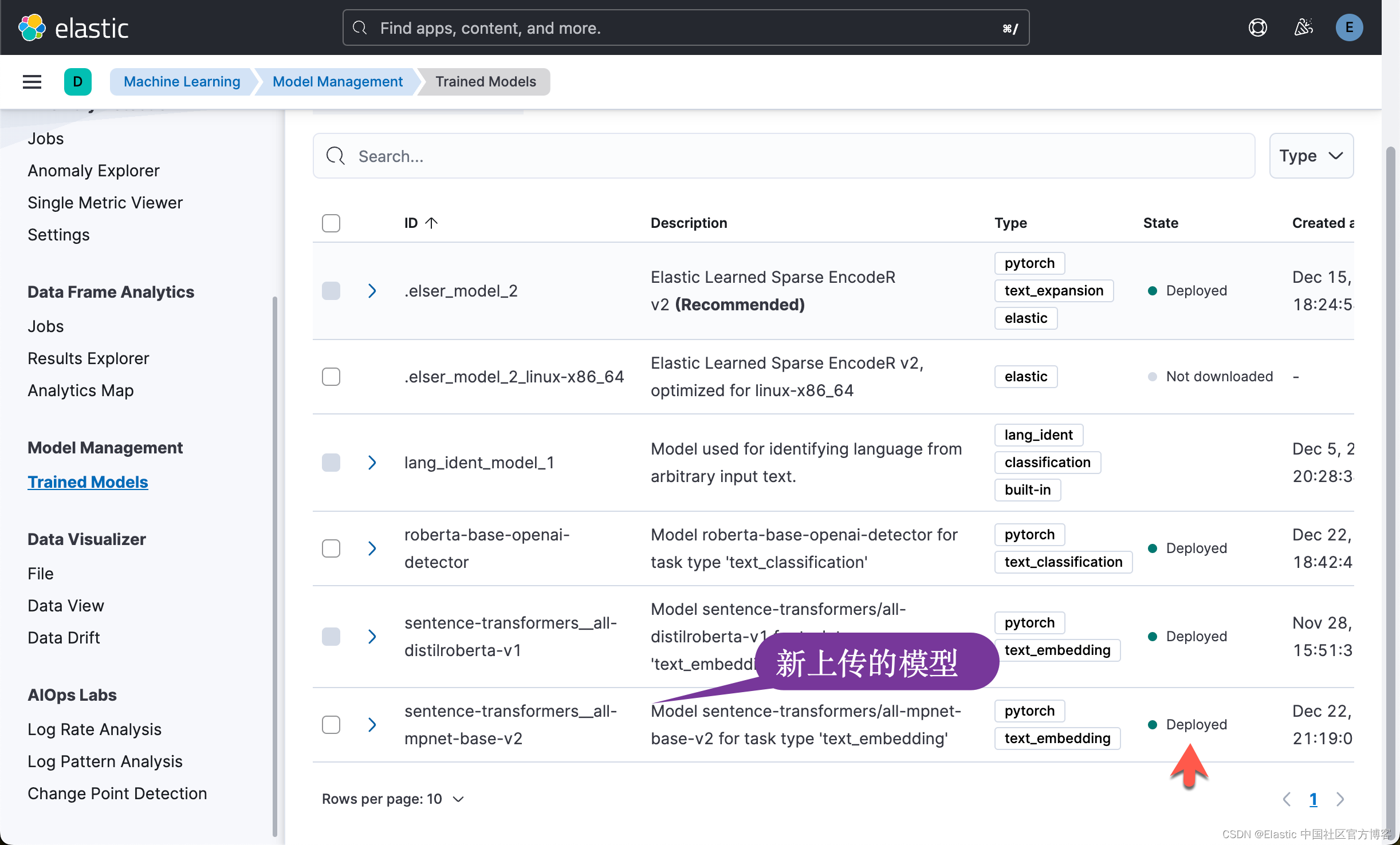

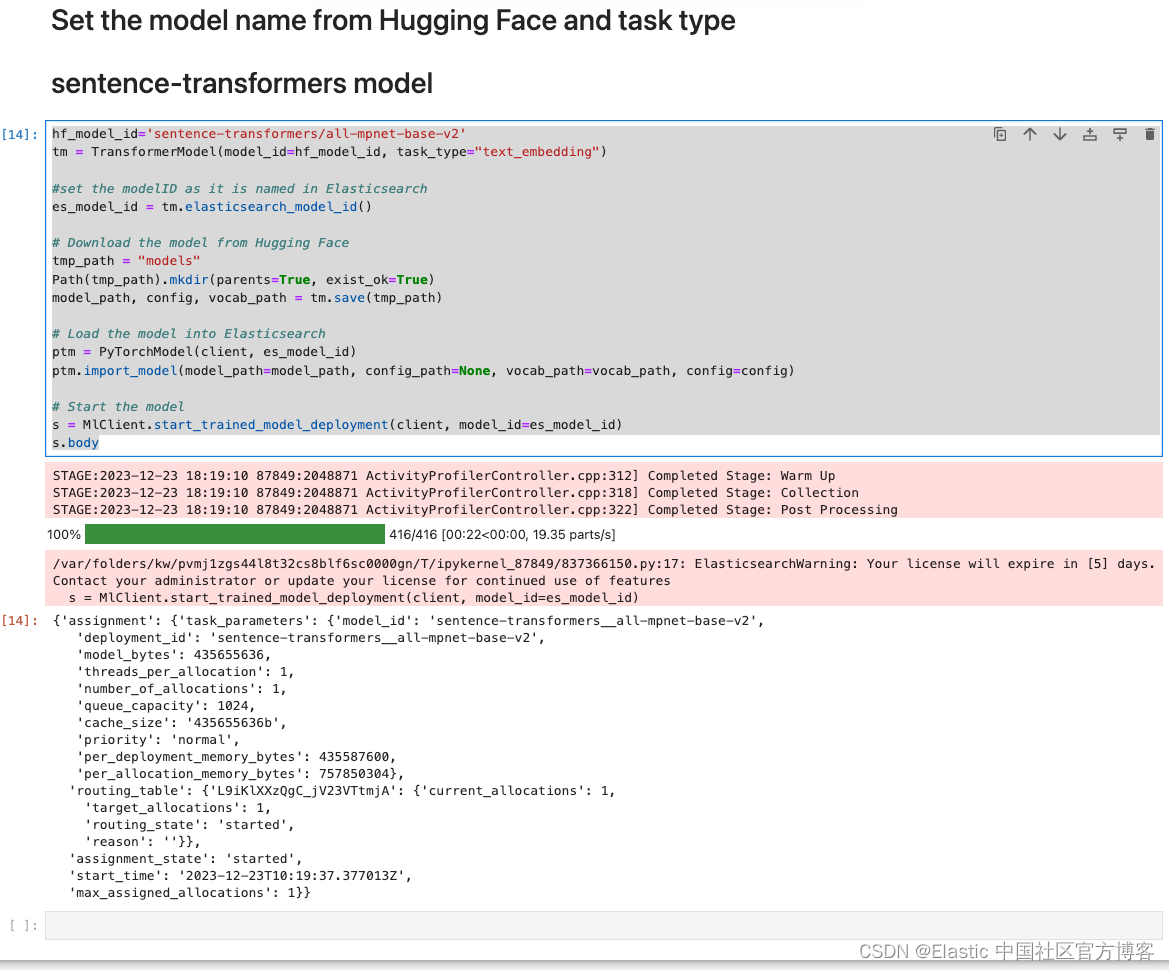

Uploading the text embedding model

hf_model_id = 'sentence-transformers/all-mpnet-base-v2'
tm = TransformerModel(model_id=hf_model_id, task_type="text_embedding")

# Set the model ID as it is named in Elasticsearch
es_model_id = tm.elasticsearch_model_id()

# Download the model from Hugging Face
tmp_path = "models"
Path(tmp_path).mkdir(parents=True, exist_ok=True)
model_path, config, vocab_path = tm.save(tmp_path)

# Load the model into Elasticsearch
ptm = PyTorchModel(client, es_model_id)
ptm.import_model(model_path=model_path, config_path=None, vocab_path=vocab_path, config=config)

# Start the model
s = MlClient.start_trained_model_deployment(client, model_id=es_model_id)
s.body
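The ingest pipeline and the search requests in this article refer to the embedding model as `sentence-transformers__all-mpnet-base-v2` rather than by its Hugging Face name, because `tm.elasticsearch_model_id()` returns an ID without the `/`. The sketch below approximates that mapping for the two models used here. It rests on a simplifying assumption (replace `/` with `__` and lowercase); eland's own implementation may cover more cases, and the function name `to_es_model_id` is hypothetical.

```python
def to_es_model_id(hf_model_id: str) -> str:
    """Approximate the Elasticsearch model ID derived from a Hugging Face ID.

    Simplified assumption: "/" becomes "__" and the result is lowercased.
    This is an illustration, not eland's actual implementation.
    """
    return hf_model_id.replace("/", "__").lower()


for hf_id in ("roberta-base-openai-detector", "sentence-transformers/all-mpnet-base-v2"):
    print(f"{hf_id} -> {to_es_model_id(hf_id)}")
```

In the notebook itself, prefer the value returned by `tm.elasticsearch_model_id()` over a hand-written string.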

我们可以在 Kibana 中查看:

创建源索引

client.indices.create(

index="plagiarism-docs",

mappings= {"properties": {"title": {"type": "text","fields": {"keyword": {"type": "keyword"}}},"abstract": {"type": "text","fields": {"keyword": {"type": "keyword"}}},"url": {"type": "keyword"},"venue": {"type": "keyword"},"year": {"type": "keyword"}}

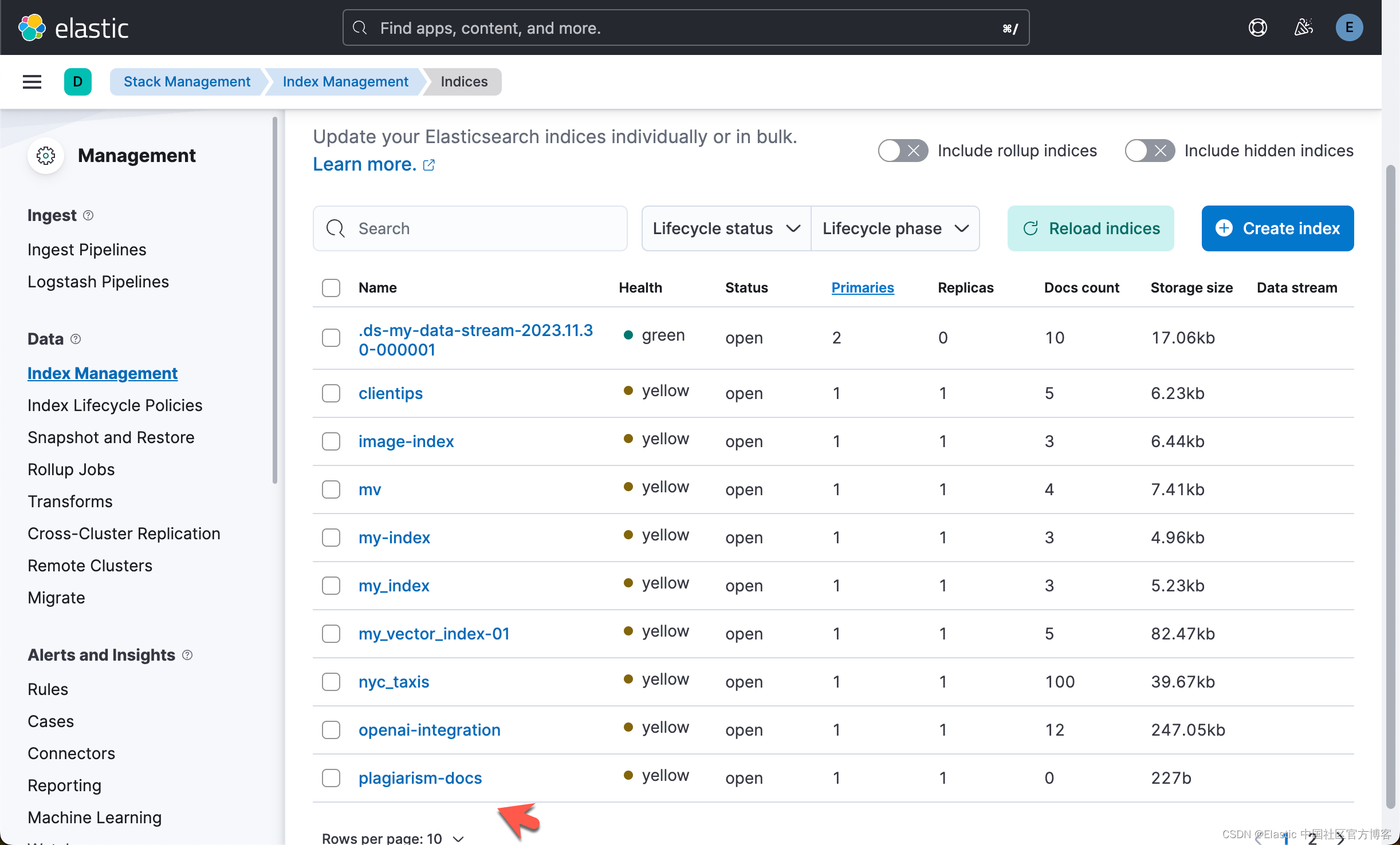

})我们可以在 Kibana 中进行查看:

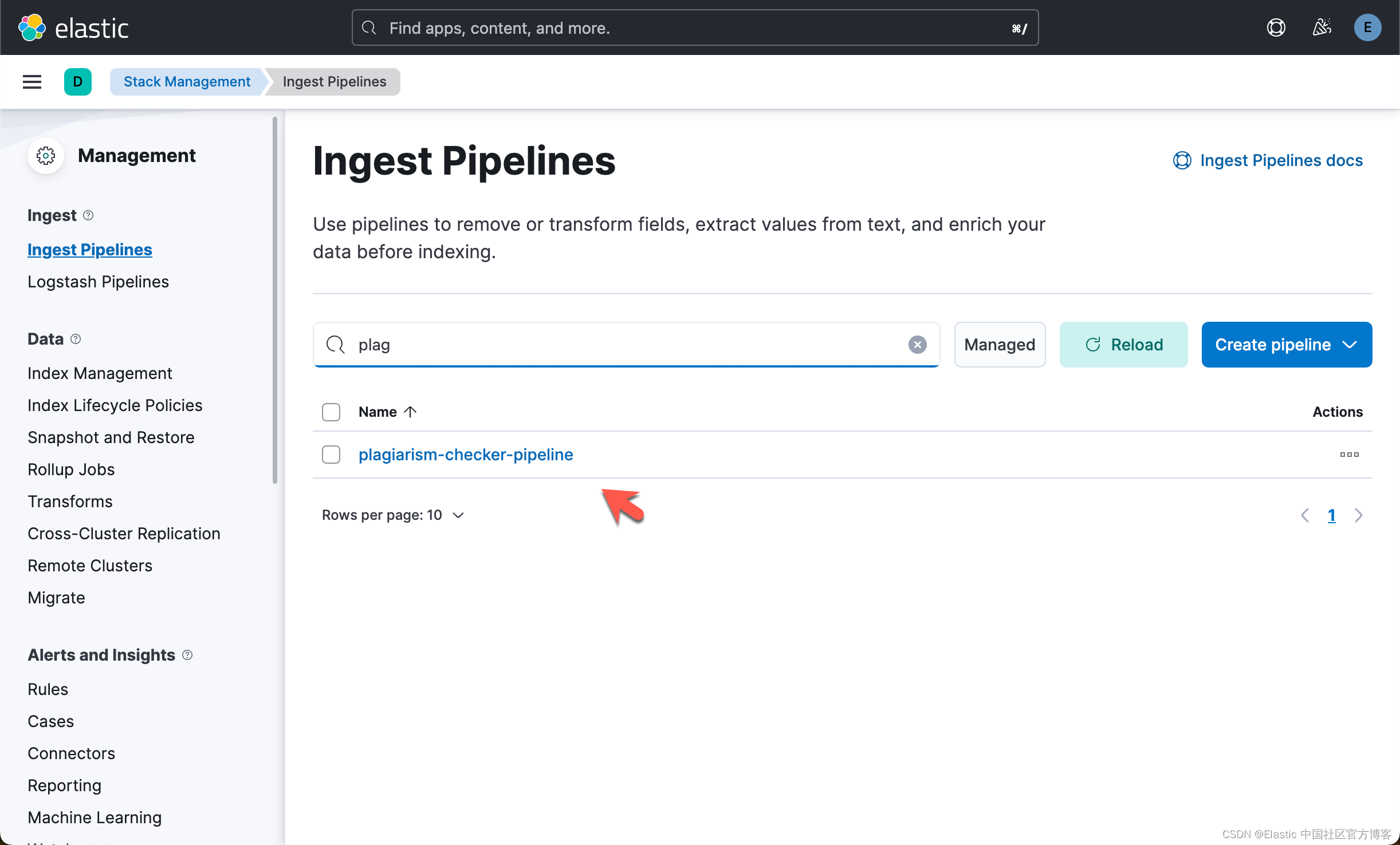

Creating the checker ingest pipeline

client.ingest.put_pipeline(
    id="plagiarism-checker-pipeline",
    processors=[
        {
            "inference": {  # for ML models: run inference on the data being ingested
                "model_id": "roberta-base-openai-detector",  # text classification model ID
                "target_field": "openai-detector",  # target field for the inference results
                "field_map": {  # maps document field names to the field names the model expects
                    "abstract": "text_field"  # model input field; for NLP models this is typically text_field
                },
            }
        },
        {
            "inference": {
                "model_id": "sentence-transformers__all-mpnet-base-v2",  # text embedding model ID
                "target_field": "abstract_vector",  # target field for the inference results
                "field_map": {  # maps document field names to the field names the model expects
                    "abstract": "text_field"  # model input field; for NLP models this is typically text_field
                },
            }
        },
    ],
)

We can check the pipeline in Kibana:

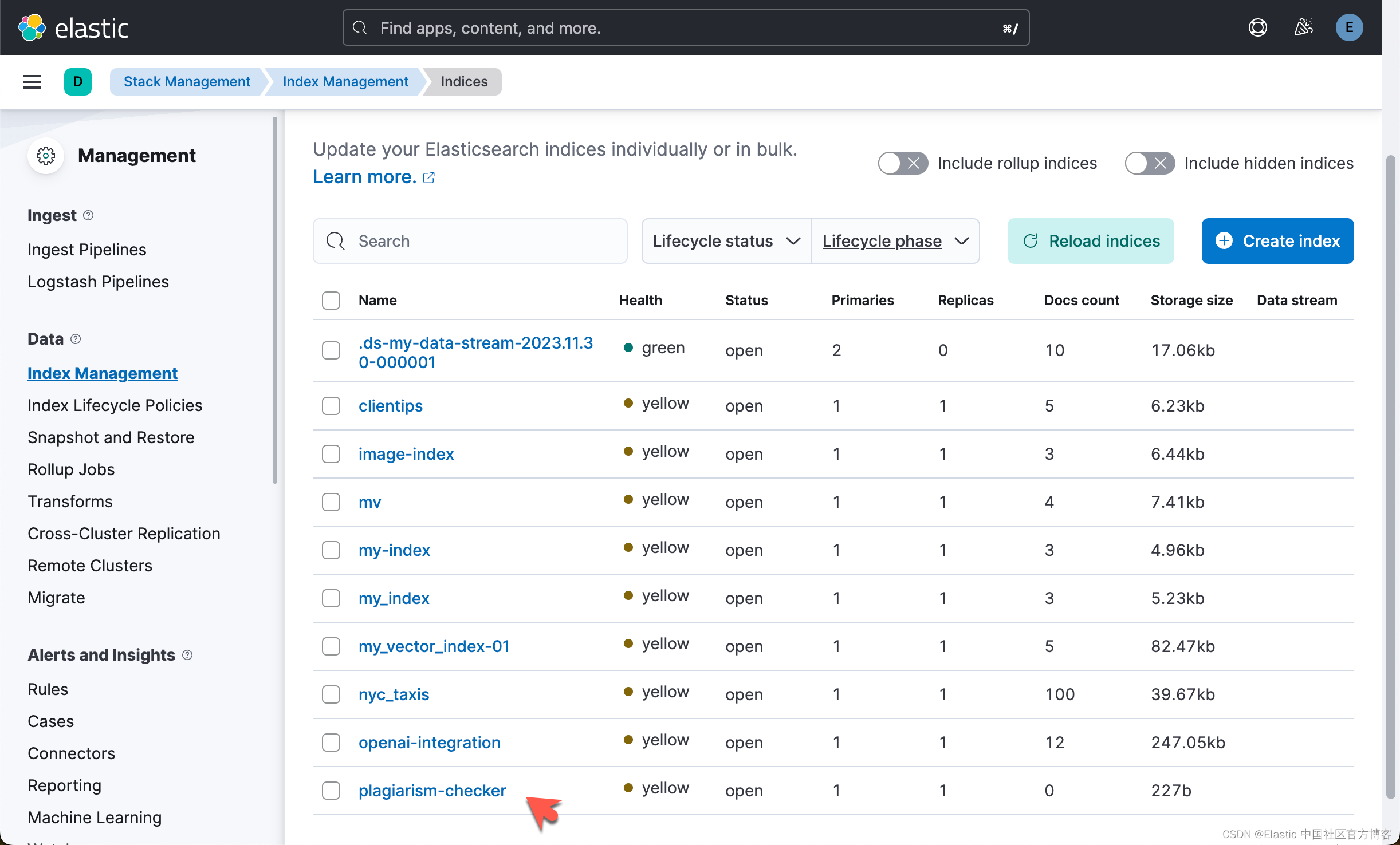

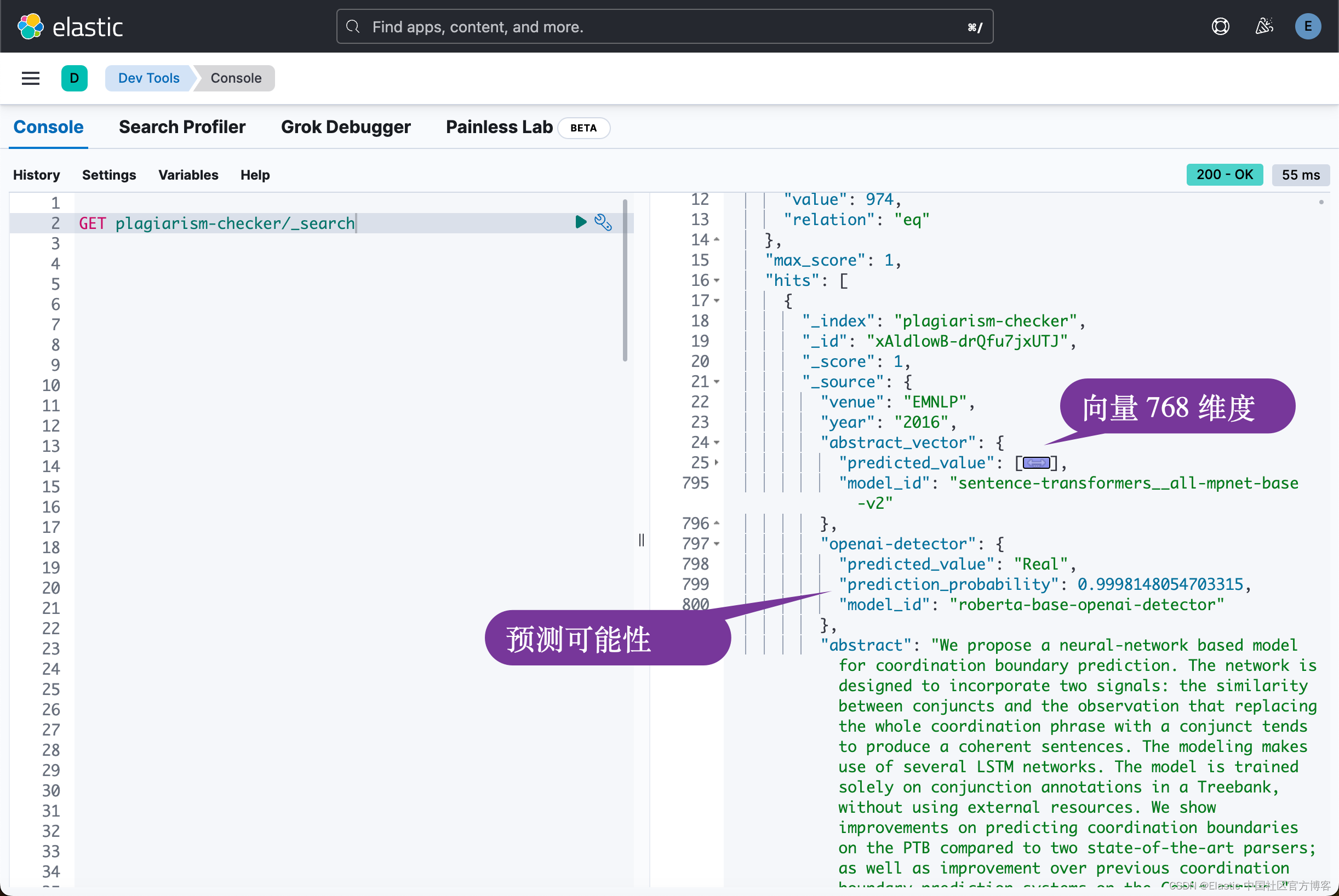

Creating the plagiarism checker index

client.indices.create(
    index="plagiarism-checker",
    mappings={
        "properties": {
            "title": {"type": "text", "fields": {"keyword": {"type": "keyword"}}},
            "abstract": {"type": "text", "fields": {"keyword": {"type": "keyword"}}},
            "url": {"type": "keyword"},
            "venue": {"type": "keyword"},
            "year": {"type": "keyword"},
            "abstract_vector.predicted_value": {  # inference results field: target_field.predicted_value
                "type": "dense_vector",
                "dims": 768,  # embedding size
                "index": "true",
                "similarity": "dot_product",  # similarity function used to compare vectors in approximate kNN search
            },
        }
    },
)

We can check the index in Kibana:

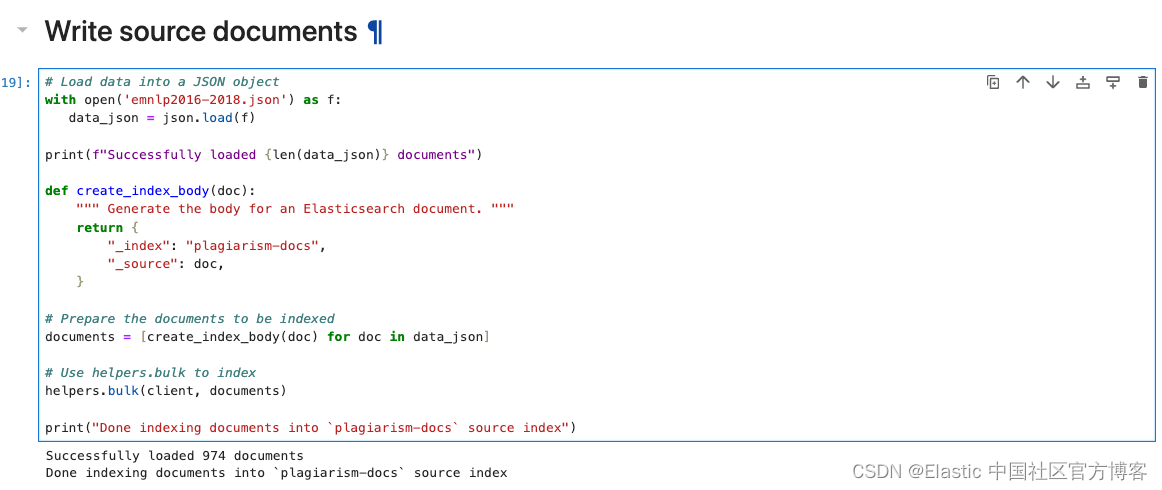
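The mapping above sets `similarity` to `dot_product`. This similarity expects unit-length vectors, and for float vectors the Elasticsearch documentation gives the kNN `_score` as `(1 + dot_product(query, vector)) / 2`. The following sketch uses made-up three-dimensional toy vectors (not real embeddings) to show how a raw dot product maps onto a score between 0 and 1; the function names are hypothetical.

```python
import math
from typing import Sequence, List


def normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length, as dot_product similarity expects."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def dot_product_score(query: Sequence[float], doc: Sequence[float]) -> float:
    """Map the dot product of two unit vectors to the score range [0, 1]."""
    dot = sum(q * d for q, d in zip(query, doc))
    return (1.0 + dot) / 2.0


# Toy vectors; the real index stores 768-dimensional embeddings.
query = normalize([1.0, 2.0, 2.0])
same_direction = normalize([2.0, 4.0, 4.0])
orthogonal = normalize([2.0, -1.0, 0.0])

print(dot_product_score(query, same_direction))  # close to 1.0
print(dot_product_score(query, orthogonal))      # close to 0.5
```

This is why the thresholds used later in the article (0.9 and 0.7) sit in the upper part of the 0 to 1 range: a score of 0.5 already corresponds to unrelated (orthogonal) vectors.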

Indexing the source documents

First, download the documents from https://public.ukp.informatik.tu-darmstadt.de/reimers/sentence-transformers/datasets/emnlp2016-2018.json into the current directory:

$ pwd
/Users/liuxg/python/elasticsearch-labs/supporting-blog-content/plagiarism-detection-with-elasticsearch
$ ls
emnlp2016-2018.json plagiarism_detection_es.ipynb
http_ca.crt plagiarism_detection_es_self_managed.ipynb
models

As shown above, emnlp2016-2018.json is the file we downloaded.
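The notebook already imports `urlopen`, so the download can also be done from Python. The following is a minimal sketch, assuming the file should be saved under the same name in the current directory; the helper name `download_if_missing` is hypothetical and not part of the original notebook.

```python
import shutil
from pathlib import Path
from urllib.request import urlopen

DATASET_URL = (
    "https://public.ukp.informatik.tu-darmstadt.de/reimers/"
    "sentence-transformers/datasets/emnlp2016-2018.json"
)


def download_if_missing(url: str, dest: Path) -> Path:
    """Download url to dest, skipping the request when dest already exists."""
    if not dest.exists():
        # Stream the response to disk instead of holding it all in memory.
        with urlopen(url) as response, open(dest, "wb") as out:
            shutil.copyfileobj(response, out)
    return dest


# Usage (performs a network request):
# download_if_missing(DATASET_URL, Path("emnlp2016-2018.json"))
```

Skipping the download when the file exists makes the notebook cell safe to re-run.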

# Load data into a JSON object
with open('emnlp2016-2018.json') as f:
    data_json = json.load(f)

print(f"Successfully loaded {len(data_json)} documents")


def create_index_body(doc):
    """Generate the body for an Elasticsearch document."""
    return {
        "_index": "plagiarism-docs",
        "_source": doc,
    }


# Prepare the documents to be indexed
documents = [create_index_body(doc) for doc in data_json]

# Use helpers.bulk to index
helpers.bulk(client, documents)

print("Done indexing documents into `plagiarism-docs` source index")

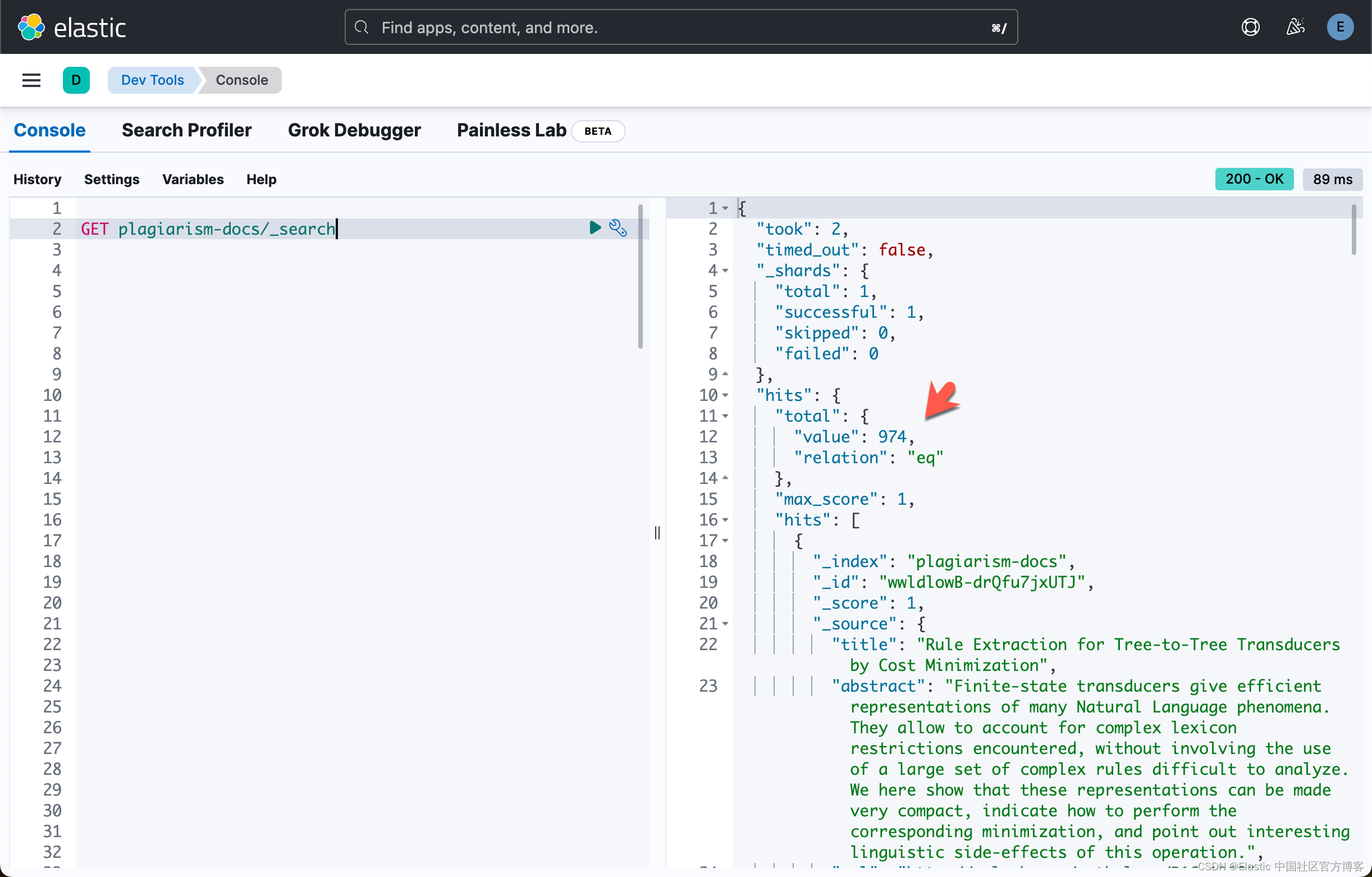
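The notebook builds the full list of actions before calling `helpers.bulk`. Since `helpers.bulk` accepts any iterable of actions, a generator can be used instead when the dataset is large. A minimal sketch follows; `generate_actions` is a hypothetical name and the sample documents are made up.

```python
from typing import Any, Dict, Iterable, Iterator


def generate_actions(docs: Iterable[Dict[str, Any]], index: str) -> Iterator[Dict[str, Any]]:
    """Yield one bulk action per document instead of building a full list."""
    for doc in docs:
        yield {"_index": index, "_source": doc}


# Made-up sample documents standing in for the entries of emnlp2016-2018.json.
sample_docs = [
    {"title": "Paper A", "abstract": "First abstract."},
    {"title": "Paper B", "abstract": "Second abstract."},
]
actions = list(generate_actions(sample_docs, "plagiarism-docs"))
print(len(actions))

# With a live client, the generator would be passed straight to the bulk helper:
# helpers.bulk(client, generate_actions(data_json, "plagiarism-docs"))
```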

We can check the documents in Kibana.

Reindexing with the ingest pipeline

client.reindex(
    wait_for_completion=False,
    source={"index": "plagiarism-docs"},
    dest={"index": "plagiarism-checker", "pipeline": "plagiarism-checker-pipeline"},
)

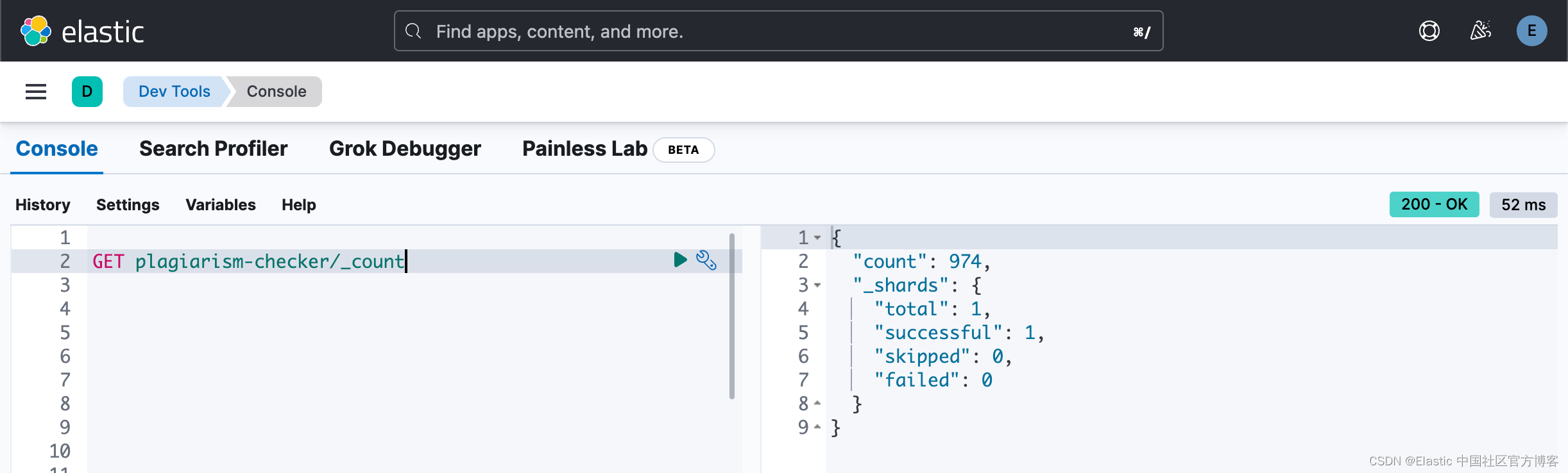

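Above, we set wait_for_completion=False, so the reindex runs asynchronously and we need to wait a while for it to finish. We can track its progress by comparing the document count of plagiarism-checker with that of plagiarism-docs.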
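One way to wait for the asynchronous reindex is to poll both indices until their counts agree. The sketch below is an assumption-based illustration rather than part of the original notebook: `wait_for_reindex` is a hypothetical helper, and it relies only on the client's `count` and `indices.refresh` calls. If some documents fail inference and never reach the destination index, the counts will not match and the helper raises after the timeout.

```python
import time


def wait_for_reindex(client, source: str, dest: str,
                     poll_seconds: float = 5.0, timeout_seconds: float = 600.0) -> int:
    """Poll until dest holds at least as many documents as source.

    Hypothetical helper; client is expected to be an elasticsearch.Elasticsearch instance.
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        # Refresh so that recently indexed documents are visible to count().
        client.indices.refresh(index=dest)
        source_count = client.count(index=source)["count"]
        dest_count = client.count(index=dest)["count"]
        if dest_count >= source_count:
            return dest_count
        if time.monotonic() >= deadline:
            raise TimeoutError(f"{dest} has {dest_count} of {source_count} documents")
        time.sleep(poll_seconds)


# Usage with the client created earlier:
# wait_for_reindex(client, "plagiarism-docs", "plagiarism-checker")
```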

Once the counts match, the reindex has finished. We can then inspect the documents in the plagiarism-checker index.

Checking for duplicated text

Direct plagiarism

model_text = 'Understanding and reasoning about cooking recipes is a fruitful research direction towards enabling machines to interpret procedural text. In this work, we introduce RecipeQA, a dataset for multimodal comprehension of cooking recipes. It comprises of approximately 20K instructional recipes with multiple modalities such as titles, descriptions and aligned set of images. With over 36K automatically generated question-answer pairs, we design a set of comprehension and reasoning tasks that require joint understanding of images and text, capturing the temporal flow of events and making sense of procedural knowledge. Our preliminary results indicate that RecipeQA will serve as a challenging test bed and an ideal benchmark for evaluating machine comprehension systems. The data and leaderboard are available at http://hucvl.github.io/recipeqa.'

response = client.search(
    index='plagiarism-checker',
    size=1,
    knn={
        "field": "abstract_vector.predicted_value",
        "k": 9,
        "num_candidates": 974,
        "query_vector_builder": {
            "text_embedding": {
                "model_id": "sentence-transformers__all-mpnet-base-v2",
                "model_text": model_text,
            }
        },
    },
)

for hit in response['hits']['hits']:
    score = hit['_score']
    title = hit['_source']['title']
    abstract = hit['_source']['abstract']
    openai = hit['_source']['openai-detector']['predicted_value']
    url = hit['_source']['url']

    if score > 0.9:
        print(f"\nHigh similarity detected! This might be plagiarism.")
        print(f"\nMost similar document: '{title}'\n\nAbstract: {abstract}\n\nurl: {url}\n\nScore:{score}\n")
        if openai == 'Fake':
            print("This document may have been created by AI.\n")
    elif score < 0.7:
        print(f"\nLow similarity detected. This might not be plagiarism.")
        if openai == 'Fake':
            print("This document may have been created by AI.\n")
    else:
        print(f"\nModerate similarity detected.")
        print(f"\nMost similar document: '{title}'\n\nAbstract: {abstract}\n\nurl: {url}\n\nScore:{score}\n")
        if openai == 'Fake':
            print("This document may have been created by AI.\n")

ml_client = MlClient(client)

model_id = 'roberta-base-openai-detector'  # OpenAI text classification model

document = [{"text_field": model_text}]

ml_response = ml_client.infer_trained_model(model_id=model_id, docs=document)

predicted_value = ml_response['inference_results'][0]['predicted_value']

if predicted_value == 'Fake':
    print("Note: The text query you entered may have been generated by AI.\n")
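In the search request above, `num_candidates` is the number of nearest-neighbour candidates considered on each shard, `k` is the number of neighbours returned as top hits, and `size=1` keeps only the best match. The `query_vector_builder` asks Elasticsearch to embed the query text with the deployed model, so no vector has to be computed on the client. The sketch below wraps the same request body in a hypothetical helper, `build_knn_query`, with the values from this article as defaults.

```python
from typing import Any, Dict


def build_knn_query(model_text: str,
                    model_id: str = "sentence-transformers__all-mpnet-base-v2",
                    field: str = "abstract_vector.predicted_value",
                    k: int = 9,
                    num_candidates: int = 974) -> Dict[str, Any]:
    """Build the knn section of a search request (hypothetical helper)."""
    if k > num_candidates:
        # Elasticsearch rejects requests where num_candidates is smaller than k.
        raise ValueError("num_candidates must be greater than or equal to k")
    return {
        "field": field,
        "k": k,
        "num_candidates": num_candidates,
        "query_vector_builder": {
            "text_embedding": {"model_id": model_id, "model_text": model_text}
        },
    }


# Usage with the client created earlier:
# response = client.search(index="plagiarism-checker", size=1,
#                          knn=build_knn_query("text to check"))
```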

Similar text: paraphrase plagiarism

model_text = 'Comprehending and deducing information from culinary instructions represents a promising avenue for research aimed at empowering artificial intelligence to decipher step-by-step text. In this study, we present CuisineInquiry, a database for the multifaceted understanding of cooking guidelines. It encompasses a substantial number of informative recipes featuring various elements such as headings, explanations, and a matched assortment of visuals. Utilizing an extensive set of automatically crafted question-answer pairings, we formulate a series of tasks focusing on understanding and logic that necessitate a combined interpretation of visuals and written content. This involves capturing the sequential progression of events and extracting meaning from procedural expertise. Our initial findings suggest that CuisineInquiry is poised to function as a demanding experimental platform.'

response = client.search(
    index='plagiarism-checker',
    size=1,
    knn={
        "field": "abstract_vector.predicted_value",
        "k": 9,
        "num_candidates": 974,
        "query_vector_builder": {
            "text_embedding": {
                "model_id": "sentence-transformers__all-mpnet-base-v2",
                "model_text": model_text,
            }
        },
    },
)

for hit in response['hits']['hits']:
    score = hit['_score']
    title = hit['_source']['title']
    abstract = hit['_source']['abstract']
    openai = hit['_source']['openai-detector']['predicted_value']
    url = hit['_source']['url']

    if score > 0.9:
        print(f"\nHigh similarity detected! This might be plagiarism.")
        print(f"\nMost similar document: '{title}'\n\nAbstract: {abstract}\n\nurl: {url}\n\nScore:{score}\n")
        if openai == 'Fake':
            print("This document may have been created by AI.\n")
    elif score < 0.7:
        print(f"\nLow similarity detected. This might not be plagiarism.")
        if openai == 'Fake':
            print("This document may have been created by AI.\n")
    else:
        print(f"\nModerate similarity detected.")
        print(f"\nMost similar document: '{title}'\n\nAbstract: {abstract}\n\nurl: {url}\n\nScore:{score}\n")
        if openai == 'Fake':
            print("This document may have been created by AI.\n")

ml_client = MlClient(client)

model_id = 'roberta-base-openai-detector'  # OpenAI text classification model

document = [{"text_field": model_text}]

ml_response = ml_client.infer_trained_model(model_id=model_id, docs=document)

predicted_value = ml_response['inference_results'][0]['predicted_value']

if predicted_value == 'Fake':
    print("Note: The text query you entered may have been generated by AI.\n")
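Both checks apply the same thresholds to the kNN score: above 0.9 is reported as high similarity, below 0.7 as low, and anything in between as moderate. As a sketch of how that rule could be factored out and reused, the hypothetical function `classify_similarity` below reproduces the same comparisons; the sample scores are made up.

```python
def classify_similarity(score: float, high: float = 0.9, low: float = 0.7) -> str:
    """Return "high", "low" or "moderate" using the thresholds from this article."""
    if score > high:
        return "high"
    if score < low:
        return "low"
    return "moderate"


# Made-up scores for illustration only.
for sample_score in (0.95, 0.8, 0.5):
    print(sample_score, classify_similarity(sample_score))
```

Note that a score of exactly 0.9 or 0.7 falls into the moderate band, matching the strict comparisons in the loops above.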

The complete code can be downloaded from: https://github.com/liu-xiao-guo/elasticsearch-labs/blob/main/supporting-blog-content/plagiarism-detection-with-elasticsearch/plagiarism_detection_es_self_managed.ipynb
