Code: GitHub - sstzal/DiffTalk: [CVPR2023] The implementation for "DiffTalk: Crafting Diffusion Models for Generalized Audio-Driven Portraits Animation"

Problem 1. ERROR: Failed building wheel for pysptk

    Cython.Compiler.Errors.CompileError: pysptk/_sptk.pyx
    [end of output]

    note: This error originates from a subprocess, and is likely not a problem with pip.
    ERROR: Failed building wheel for pysptk
    Failed to build pysptk
    ERROR: Could not build wheels for pysptk, which is required to install pyproject.toml-based projects

Problem 2. ModuleNotFoundError: No module named 'ldm'
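A likely cause of the ldm error (my assumption, not something confirmed in these notes) is that scripts/inference.py is launched in a way that leaves the repository root, where the ldm package lives, off Python's import path. The sketch below walks upward from a starting directory until it finds a folder containing ldm and prepends that folder to sys.path. The helper name add_repo_root_to_path is hypothetical.

```python
import sys
from pathlib import Path
from typing import Optional


def add_repo_root_to_path(start: Path, package: str = "ldm") -> Optional[Path]:
    """Search `start` and its parents for a directory that contains `package`.

    The first match is prepended to sys.path so that `import ldm` can resolve.
    Returns the matching directory, or None if no parent contains the package.
    """
    start = start.resolve()
    for candidate in [start, *start.parents]:
        if (candidate / package).is_dir():
            if str(candidate) not in sys.path:
                sys.path.insert(0, str(candidate))
            return candidate
    return None


# Hypothetical usage at the top of scripts/inference.py, before the ldm import:
# add_repo_root_to_path(Path(__file__).parent)
```

Setting PYTHONPATH to the repository root before running the script should have the same effect. If the repository ships a setup.py (an assumption), running pip install -e . from the root is another option.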

    Traceback (most recent call last):
      File "scripts/inference.py", line 7, in <module>
        from ldm.util import instantiate_from_config
    ModuleNotFoundError: No module named 'ldm'

Problem 3. Dependency installation fails

pip install -e git+https://github.com/CompVis/taming-transformers.git@24268930bf1dce879235a7fddd0b2355b84d7ea6#egg=taming_transformers

    Obtaining clip from git+https://github.com/openai/CLIP.git@d50d76daa670286dd6cacf3bcd80b5e4823fc8e1#egg=clip
      Cloning https://github.com/openai/CLIP.git (to revision d50d76daa670286dd6cacf3bcd80b5e4823fc8e1) to ./src/clip
      Running command git clone --filter=blob:none --quiet https://github.com/openai/CLIP.git /data/DiffTalk-main/src/clip
      fatal: unable to access 'https://github.com/openai/CLIP.git/': Failed to connect to github.com port 443: Connection timed out
      error: subprocess-exited-with-error

      × git clone --filter=blob:none --quiet https://github.com/openai/CLIP.git /data/DiffTalk-main/src/clip did not run successfully.
      │ exit code: 128
      ╰─> See above for output.

      note: This error originates from a subprocess, and is likely not a problem with pip.
    error: subprocess-exited-with-error

    × git clone --filter=blob:none --quiet https://github.com/openai/CLIP.git /data/DiffTalk-main/src/clip did not run successfully.
    │ exit code: 128
    ╰─> See above for output.

    note: This error originates from a subprocess, and is likely not a problem with pip.

Solution: install the failing dependency on its own and add a mirror index:

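pip install -e git+https://github.com/CompVis/taming-transformers.git@24268930bf1dce879235a7fddd0b2355b84d7ea6#egg=taming_transformers -i https://pypi.douban.com/simple/

Whether this succeeds depends on the network, so retry a few times. Note that -i only changes the package index used for PyPI dependencies; the git clone itself still goes to github.com.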
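Since the fix here amounts to retrying until the connection to GitHub holds, the retries can be automated. This is a minimal sketch: run_with_retries is a hypothetical helper, and the attempt count and delay are arbitrary assumptions.

```python
import subprocess
import sys
import time
from typing import Sequence


def run_with_retries(command: Sequence[str], attempts: int = 5, delay_seconds: float = 10.0) -> bool:
    """Run `command` until it exits with code 0 or `attempts` runs have failed."""
    for attempt in range(1, attempts + 1):
        result = subprocess.run(command)
        if result.returncode == 0:
            return True
        print(f"attempt {attempt}/{attempts} failed with exit code {result.returncode}")
        if attempt < attempts:
            time.sleep(delay_seconds)  # give a flaky connection time to recover
    return False


# Hypothetical usage with the pip command from this section:
# run_with_retries([
#     sys.executable, "-m", "pip", "install", "-e",
#     "git+https://github.com/CompVis/taming-transformers.git"
#     "@24268930bf1dce879235a7fddd0b2355b84d7ea6#egg=taming_transformers",
#     "-i", "https://pypi.douban.com/simple/",
# ])
```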

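Problem 4. Downloading the HDTF dataset

I found a download script someone else wrote on GitHub: "Modified version of the script to download both audio and video" by yukyeongleee, Pull Request #11 in MRzzm/HDTF.

It only needs the tqdm and youtube-dl libraries installed.

But it failed with: connect: network is unreachable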

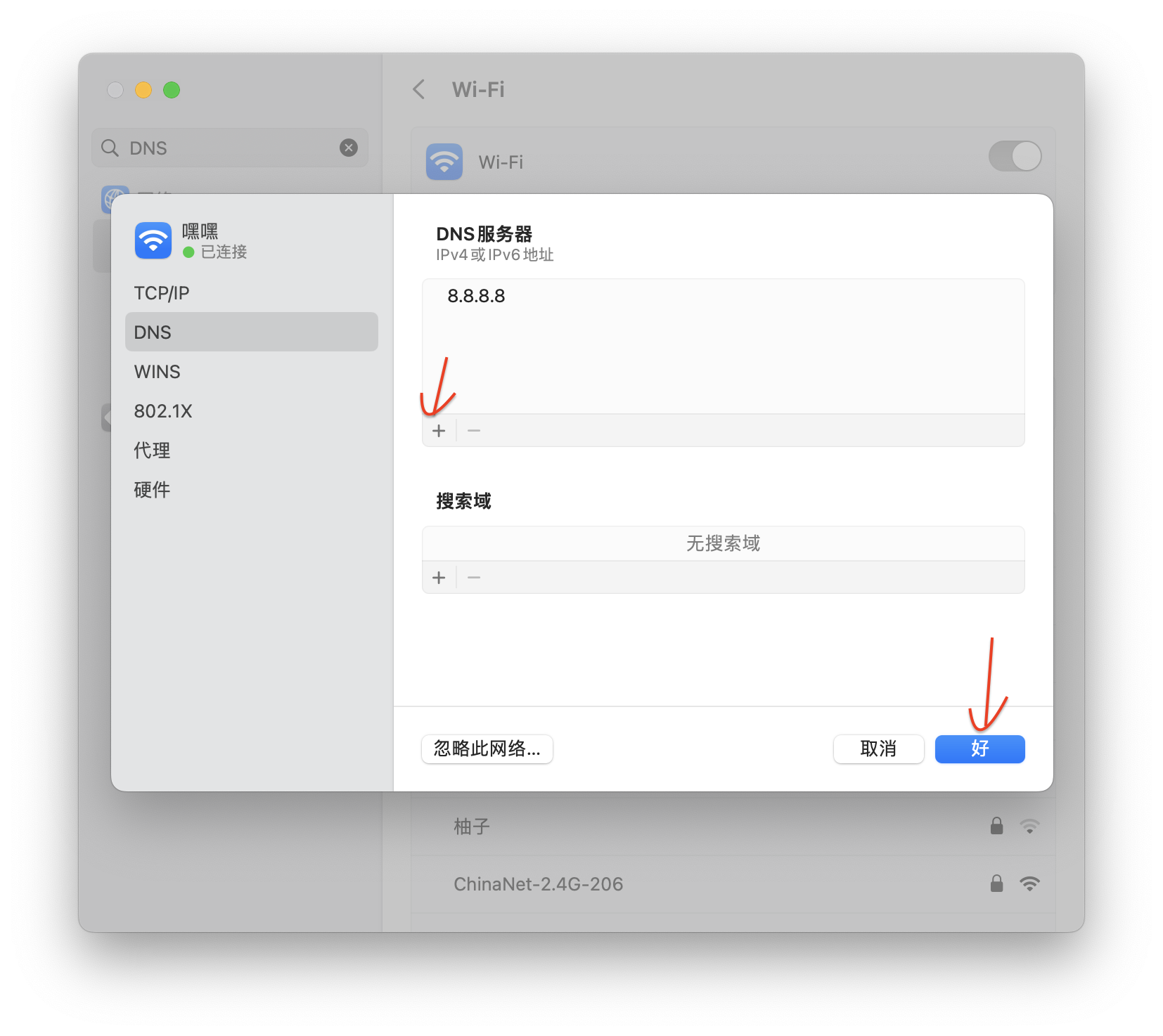

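I was stuck on this for two days and tried everything I could think of, including switching to a global proxy and adding a --proxy setting, without success. I then realized the cause might be that the server I was using through VS Code runs Linux, so I tried downloading on my own computer instead, and the error went away.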
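Before launching a long download, it can help to check whether the machine can reach the video host at all. The sketch below does that with a plain TCP connection attempt; can_connect is a hypothetical helper, and port 443 and the 5-second timeout are assumptions. It does not go through any proxy, so it only reports on direct reachability.

```python
import socket


def can_connect(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, timeouts and unreachable networks.
        return False


# Hypothetical usage on the machine that runs the download script:
# if not can_connect("www.youtube.com"):
#     print("No direct route to YouTube from this machine; try another machine or a proxy.")
```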

Error 2: ERROR: Unable to extract uploader id

    ERROR: Unable to extract uploader id; please report this issue on https://yt-dl.org/bug . Make sure you are using the latest version; see https://yt-dl.org/update on how to update. Be sure to call youtube-dl with the --verbose flag and include its complete output.

Solution: see the CSDN blog post on fixing youtube-dl's "unable to extract uploader id" error.

Simply replace youtube-dl with yt-dlp in the download function:

    import os
    import subprocess


    def download_video(video_id, download_path, resolution: int = None, video_format="mp4", log_file=None):
        """
        Download video from YouTube.
        :param video_id:        YouTube ID of the video.
        :param download_path:   Where to save the video.
        :param resolution:      Optional video height to request.
        :param video_format:    Format to download.
        :param log_file:        Path to a log file for yt-dlp.
        :return:                True if the download succeeded and the file exists.

        Copy-pasted from https://github.com/ytdl-org/youtube-dl
        """
        # if os.path.isfile(download_path): return True  # File already exists

        # Send yt-dlp's stderr to the log file if one is given, otherwise discard it.
        if log_file is None:
            stderr = subprocess.DEVNULL
        else:
            stderr = open(log_file, "a")

        # video_selection = f"bestvideo[ext={video_format}]"
        # video_selection = video_selection if resolution is None else f"{video_selection}[height={resolution}]"
        # Download video and audio together, falling back to the best single file.
        video_selection = f"bestvideo[ext={video_format}]+bestaudio[ext=m4a]/best[ext={video_format}]"
        video_selection = video_selection if resolution is None else f"{video_selection}[height={resolution}]"
        command = [
            "yt-dlp",
            "https://youtube.com/watch?v={}".format(video_id), "--quiet", "-f",
            video_selection,
            "--output", download_path,
            "--no-continue"
        ]
        return_code = subprocess.call(command, stderr=stderr)
        success = return_code == 0

        if log_file is not None:
            stderr.close()

        return success and os.path.isfile(download_path)
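One detail in the function above: the [height=...] filter is appended to the end of the selector string, so it attaches only to the best[...] fallback and not to the bestvideo[...] part. If the height should constrain both alternatives, the selector could be built as in this sketch. build_format_selector is a hypothetical helper and not part of the original script.

```python
from typing import Optional


def build_format_selector(video_format: str = "mp4", resolution: Optional[int] = None) -> str:
    """Build a yt-dlp -f selector with the height filter on both alternatives."""
    height = "" if resolution is None else f"[height={resolution}]"
    video = f"bestvideo[ext={video_format}]{height}"   # video-only stream
    fallback = f"best[ext={video_format}]{height}"     # single file with audio and video
    return f"{video}+bestaudio[ext=m4a]/{fallback}"


# Hypothetical usage inside download_video:
# video_selection = build_format_selector(video_format, resolution)
```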

The _videos_raw folder holds the original videos; the files with the 000 suffix are the processed videos.

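Problem 5: Video preprocessing

Following DiffTalk's requirements, the videos need further processing: convert them to 25 fps, then extract the audio signal and the facial landmarks.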
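The first two steps can be sketched with ffmpeg, assuming it is installed and on PATH. The helpers below only build the command lists; the function names are hypothetical, and the mono 16 kHz audio settings are my assumption rather than a documented DiffTalk requirement. Landmark extraction is not covered here.

```python
from typing import List


def ffmpeg_resample_command(src: str, dst: str, fps: int = 25) -> List[str]:
    """Command that re-encodes `src` to `dst` at a fixed frame rate."""
    return ["ffmpeg", "-y", "-i", src, "-r", str(fps), dst]


def ffmpeg_audio_command(src: str, dst_wav: str, sample_rate: int = 16000) -> List[str]:
    """Command that drops the video stream and writes mono audio to `dst_wav`."""
    return ["ffmpeg", "-y", "-i", src, "-vn", "-ac", "1", "-ar", str(sample_rate), dst_wav]


# Hypothetical usage (file names are placeholders):
# import subprocess
# subprocess.run(ffmpeg_resample_command("raw.mp4", "clip_25fps.mp4"), check=True)
# subprocess.run(ffmpeg_audio_command("clip_25fps.mp4", "clip.wav"), check=True)
```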

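Problem 6: How should the training and validation sets be split?

The data directory contains data_test.txt for validation and data_train.txt for training. How should the dataset be split: by portrait or by video? Splitting by portrait means the people in the training set never appear in the validation set. Splitting by video means all videos are assigned to the training and validation sets at random. And what is the relationship between train_name.txt and data_train.txt?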
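To make the two options concrete, here is a minimal sketch of both strategies. It does not claim to reproduce how the DiffTalk authors built their split files. The function names, the val_ratio default, and the identity_of callback (which maps a video name to a person) are all assumptions.

```python
import random
from collections import defaultdict
from typing import Callable, List, Sequence, Tuple


def split_by_video(videos: Sequence[str], val_ratio: float = 0.1, seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle all videos and cut off a validation share; identities may overlap."""
    shuffled = list(videos)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_ratio))
    return shuffled[n_val:], shuffled[:n_val]


def split_by_identity(videos: Sequence[str], identity_of: Callable[[str], str],
                      val_ratio: float = 0.1, seed: int = 0) -> Tuple[List[str], List[str]]:
    """Hold out whole identities, so no validation person appears in training."""
    groups = defaultdict(list)
    for video in videos:
        groups[identity_of(video)].append(video)
    identities = sorted(groups)
    random.Random(seed).shuffle(identities)
    n_val = max(1, int(len(identities) * val_ratio))
    val_ids = set(identities[:n_val])
    train = [v for v in videos if identity_of(v) not in val_ids]
    val = [v for v in videos if identity_of(v) in val_ids]
    return train, val
```

Splitting by identity measures generalization to unseen people, while splitting by video only measures generalization to unseen clips of people the model may already have seen.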

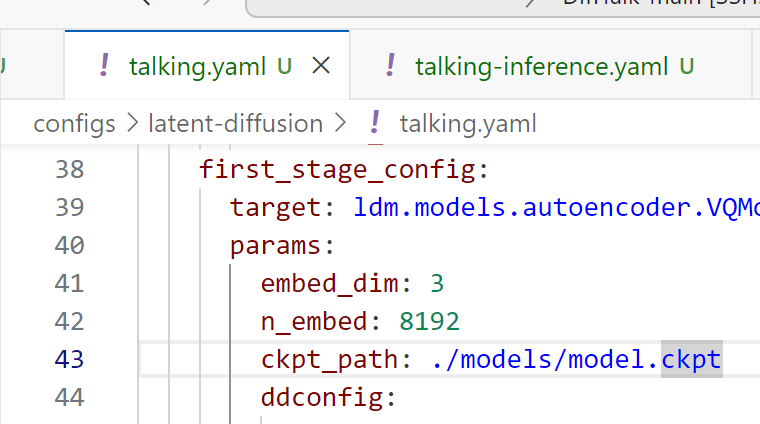

By the way, what is this model.ckpt? Did the authors not release pretrained weights?

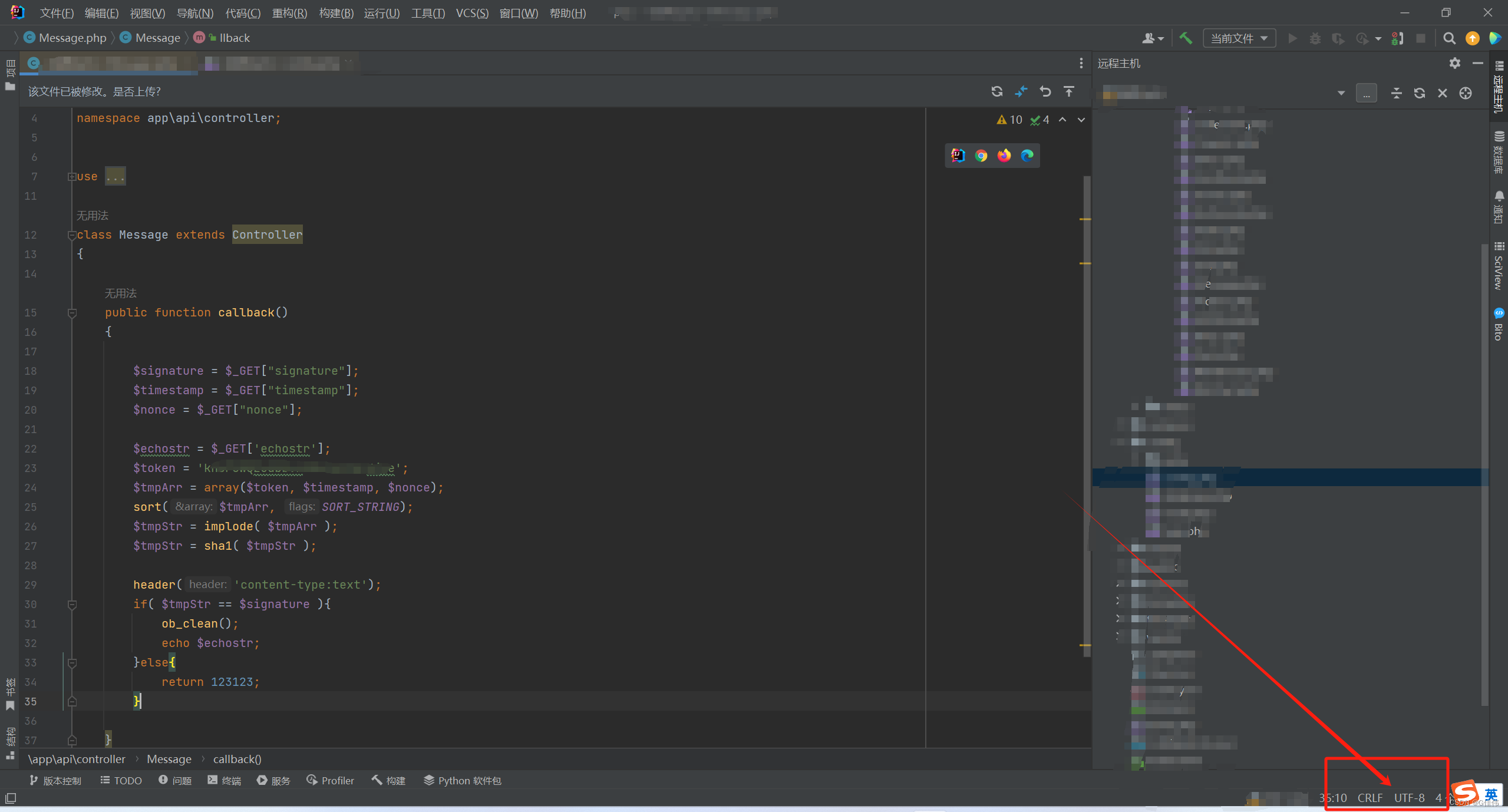

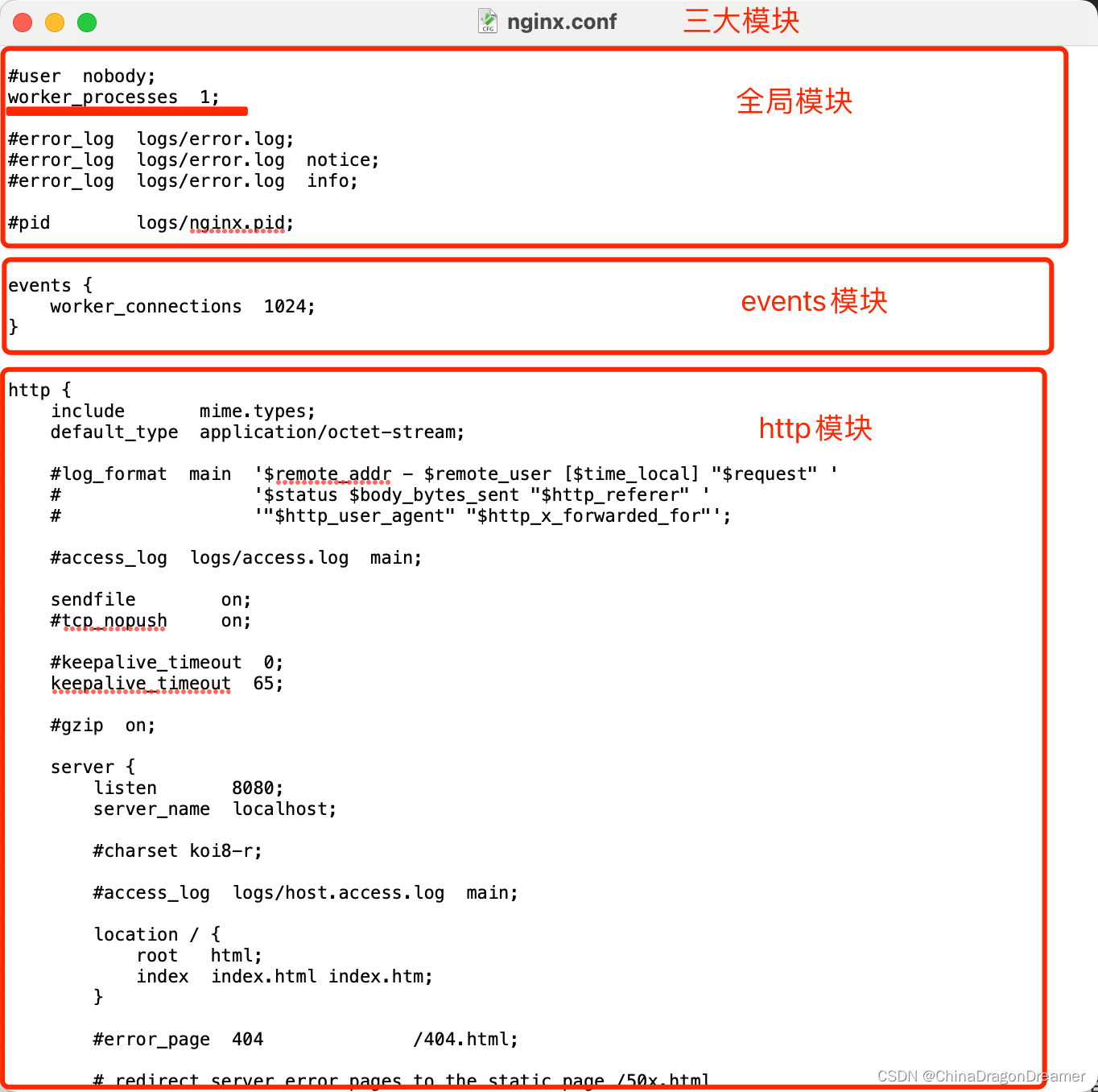

Also, the GPUs to run on are specified in the sh script, for example run.sh:

CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python main.py --base configs/latent-diffusion/talking.yaml -t --gpus 0,1,2,3,4,5,6,7,
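When only some of the GPUs should be used, note that CUDA renumbers the devices listed in CUDA_VISIBLE_DEVICES starting from 0 inside the process, so the --gpus value should use those renumbered indices. The sketch below builds both pieces from a list of physical GPU ids. build_train_command is a hypothetical helper, and keeping the trailing comma in --gpus simply follows the command above.

```python
from typing import Dict, List, Sequence, Tuple


def build_train_command(
    physical_gpu_ids: Sequence[int],
    config: str = "configs/latent-diffusion/talking.yaml",
) -> Tuple[Dict[str, str], List[str]]:
    """Return (extra environment variables, argv) for a training run."""
    env = {"CUDA_VISIBLE_DEVICES": ",".join(str(i) for i in physical_gpu_ids)}
    # Inside the process the visible devices are indexed 0..N-1.
    logical_ids = ",".join(str(i) for i in range(len(physical_gpu_ids))) + ","
    argv = ["python", "main.py", "--base", config, "-t", "--gpus", logical_ids]
    return env, argv


# Hypothetical usage: train on physical GPUs 2 and 3 only.
# import os, subprocess
# env, argv = build_train_command([2, 3])
# subprocess.run(argv, env={**os.environ, **env}, check=True)
```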