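Domain-Specific Paper Subscription

Follow {晓理紫|小李子} for daily paper updates. If you find them interesting, please forward them to anyone who might need them. Thank you for your support.

If you find this helpful, follow me and I will send you the latest papers on schedule every day.

Categories:

- Large language models (LLM)

- Vision models (VLM)

- Diffusion models

- Visual navigation

- Embodied AI, robotics

- Reinforcement learning

- Open vocabulary, detection and segmentation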

== LLM ==

Title: Mind Your Format: Towards Consistent Evaluation of In-Context Learning Improvements

Authors: Anton Voronov, Lena Wolf, Max Ryabinin

PubTime: 2024-01-22

Downlink: http://arxiv.org/abs/2401.06766v2

GitHub: https://github.com/yandex-research/mind-your-format

Abstract: Large language models demonstrate a remarkable capability for learning to solve new tasks from a few examples. The prompt template, or the way the input examples are formatted to obtain the prompt, is an important yet often overlooked aspect of in-context learning. In this work, we conduct a comprehensive study of the template format’s influence on the in-context learning performance. We evaluate the impact of the prompt template across models (from 770M to 70B parameters) and 4 standard classification datasets. We show that a poor choice of the template can reduce the performance of the strongest models and inference methods to a random guess level. More importantly, the best templates do not transfer between different setups and even between models of the same family. Our findings show that the currently prevalent approach to evaluation, which ignores template selection, may give misleading results due to different templates in different works. As a first step towards mitigating this issue, we propose Template Ensembles that aggregate model predictions across several templates. This simple test-time augmentation boosts average performance while being robust to the choice of a random set of templates.
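The idea of aggregating predictions across templates can be illustrated with a minimal sketch. It assumes that aggregation means averaging per-class probabilities, which the abstract does not specify; the function name `template_ensemble` and the probability values are hypothetical and are not taken from the paper or its repository.

```python
from collections import defaultdict


def template_ensemble(per_template_probs):
    """Average class probabilities over templates; return (label, averaged probs).

    Assumption: "aggregate" is implemented here as a plain mean over templates.
    """
    if not per_template_probs:
        raise ValueError("need predictions from at least one template")
    totals = defaultdict(float)
    for probs in per_template_probs:
        for label, p in probs.items():
            totals[label] += p
    n = len(per_template_probs)
    averaged = {label: total / n for label, total in totals.items()}
    best = max(averaged, key=averaged.get)
    return best, averaged


# Hypothetical class probabilities for one input under three prompt templates.
predictions = [
    {"positive": 0.9, "negative": 0.1},
    {"positive": 0.4, "negative": 0.6},
    {"positive": 0.7, "negative": 0.3},
]
label, averaged = template_ensemble(predictions)
print(label, averaged)
```

In this toy input the second template alone would predict a different class than the other two, while the averaged prediction follows the majority, which is the kind of stabilising effect the abstract describes.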

Title: Large Language Models Should Ask Clarifying Questions to Increase Confidence in Generated Code

Authors: Jie JW Wu

PubTime: 2024-01-22

Downlink: http://arxiv.org/abs/2308.13507v2

Project: https://mapsworkshop.github.io/

Abstract: Large language models (LLMs) have significantly improved the ability to perform tasks in the field of code generation. However, there is still a gap between LLMs being capable coders and being top-tier software engineers. Based on the observation that top-level software engineers often ask clarifying questions to reduce ambiguity in both requirements and coding solutions, I argue that the same should be applied to LLMs for code generation tasks. By asking probing questions in various topics before generating the final code, the challenges of programming with LLMs, such as unclear intent specification, lack of computational thinking, and undesired code quality, may be alleviated. This, in turn, increases confidence in the generated code. In this work, I explore how to leverage better communication skills to achieve greater confidence in generated code. I propose a communication-centered process that uses an LLM-generated communicator to identify issues with high ambiguity or low confidence in problem descriptions and generated code. I then ask clarifying questions to obtain responses from users for refining the code.

Title: Beyond Task Performance: Evaluating and Reducing the Flaws of Large Multimodal Models with In-Context Learning

Authors: Mustafa Shukor, Alexandre Rame, Corentin Dancette

PubTime: 2024-01-22

Downlink: http://arxiv.org/abs/2310.00647v2

Project: https://evalign-icl.github.io/

GitHub: https://github.com/mshukor/EvALign-ICL

Abstract: Following the success of Large Language Models (LLMs), Large Multimodal Models (LMMs), such as the Flamingo model and its subsequent competitors, have started to emerge as natural steps towards generalist agents. However, interacting with recent LMMs reveals major limitations that are hardly captured by the current evaluation benchmarks. Indeed, task performances (e.g., VQA accuracy) alone do not provide enough clues to understand their real capabilities, limitations, and to what extent such models are aligned to human expectations. To refine our understanding of those flaws, we deviate from the current evaluation paradigm, and (1) evaluate 10 recent open-source LMMs from 3B up to 80B parameter scale, on 5 different axes: hallucinations, abstention, compositionality, explainability and instruction following. Our evaluation on these axes reveals major flaws in LMMs. While the current go-to solution to align these models is based on training, such as instruction tuning or RLHF, we rather (2) explore the training-free in-context learning (ICL) as a solution, and study how it affects these limitations. Based on our ICL study, (3) we push ICL further and propose new multimodal ICL variants such as Multitask-ICL, Chain-of-Hindsight-ICL, and Self-Correcting-ICL. Our findings are as follows. (1) Despite their success, LMMs have flaws that remain unsolved with scaling alone. (2) The effect of ICL on LMMs' flaws is nuanced: despite its effectiveness for improved explainability and answer abstention, ICL only slightly improves instruction following, does not improve compositional abilities, and actually even amplifies hallucinations. (3) The proposed ICL variants are promising as post-hoc approaches to efficiently tackle some of those flaws. The code is available here: https://github.com/mshukor/EvALign-ICL.

Title: CheXagent: Towards a Foundation Model for Chest X-Ray Interpretation

Authors: Zhihong Chen, Maya Varma, Jean-Benoit Delbrouck

PubTime: 2024-01-22

Downlink: http://arxiv.org/abs/2401.12208v1

Project: https://stanford-aimi.github.io/chexagent.html

Abstract: Chest X-rays (CXRs) are the most frequently performed imaging test in clinical practice. Recent advances in the development of vision-language foundation models (FMs) give rise to the possibility of performing automated CXR interpretation, which can assist physicians with clinical decision-making and improve patient outcomes. However, developing FMs that can accurately interpret CXRs is challenging due to the (1) limited availability of large-scale vision-language datasets in the medical image domain, (2) lack of vision and language encoders that can capture the complexities of medical data, and (3) absence of evaluation frameworks for benchmarking the abilities of FMs on CXR interpretation. In this work, we address these challenges by first introducing CheXinstruct - a large-scale instruction-tuning dataset curated from 28 publicly-available datasets. We then present CheXagent - an instruction-tuned FM capable of analyzing and summarizing CXRs. To build CheXagent, we design a clinical large language model (LLM) for parsing radiology reports, a vision encoder for representing CXR images, and a network to bridge the vision and language modalities. Finally, we introduce CheXbench - a novel benchmark designed to systematically evaluate FMs across 8 clinically-relevant CXR interpretation tasks. Extensive quantitative evaluations and qualitative reviews with five expert radiologists demonstrate that CheXagent outperforms previously-developed general- and medical-domain FMs on CheXbench tasks. Furthermore, in an effort to improve model transparency, we perform a fairness evaluation across factors of sex, race and age to highlight potential performance disparities. Our project is at https://stanford-aimi.github.io/chexagent.html.

Title: Knowledge Fusion of Large Language Models

Authors: Fanqi Wan, Xinting Huang, Deng Cai

PubTime: 2024-01-22

Downlink: http://arxiv.org/abs/2401.10491v2

GitHub: https://github.com/fanqiwan/FuseLLM

Abstract: While training large language models (LLMs) from scratch can generate models with distinct functionalities and strengths, it comes at significant costs and may result in redundant capabilities. Alternatively, a cost-effective and compelling approach is to merge existing pre-trained LLMs into a more potent model. However, due to the varying architectures of these LLMs, directly blending their weights is impractical. In this paper, we introduce the notion of knowledge fusion for LLMs, aimed at combining the capabilities of existing LLMs and transferring them into a single LLM. By leveraging the generative distributions of source LLMs, we externalize their collective knowledge and unique strengths, thereby potentially elevating the capabilities of the target model beyond those of any individual source LLM. We validate our approach using three popular LLMs with different architectures (Llama-2, MPT, and OpenLLaMA) across various benchmarks and tasks. Our findings confirm that the fusion of LLMs can improve the performance of the target model across a range of capabilities such as reasoning, commonsense, and code generation. Our code, model weights, and data are public at https://github.com/fanqiwan/FuseLLM.

Title: Temporal Blind Spots in Large Language Models

Authors: Jonas Wallat, Adam Jatowt, Avishek Anand

PubTime: 2024-01-22

Downlink: http://arxiv.org/abs/2401.12078v1

GitHub: https://github.com/jwallat/temporalblindspots

Abstract: Large language models (LLMs) have recently gained significant attention due to their unparalleled ability to perform various natural language processing tasks. These models, benefiting from their advanced natural language understanding capabilities, have demonstrated impressive zero-shot performance. However, the pre-training data utilized in LLMs is often confined to a specific corpus, resulting in inherent freshness and temporal scope limitations. Consequently, this raises concerns regarding the effectiveness of LLMs for tasks involving temporal intents. In this study, we aim to investigate the underlying limitations of general-purpose LLMs when deployed for tasks that require a temporal understanding. We pay particular attention to handling factual temporal knowledge through three popular temporal QA datasets. Specifically, we observe low performance on detailed questions about the past and, surprisingly, for rather new information. In manual and automatic testing, we find multiple temporal errors and characterize the conditions under which QA performance deteriorates. Our analysis contributes to understanding LLM limitations and offers valuable insights into developing future models that can better cater to the demands of temporally-oriented tasks. The code is available at https://github.com/jwallat/temporalblindspots.

== VLM ==

Title: Exploring Simple Open-Vocabulary Semantic Segmentation

Authors: Zihang Lai

PubTime: 2024-01-22

Downlink: http://arxiv.org/abs/2401.12217v1

GitHub: https://github.com/zlai0/S-Seg

Abstract: Open-vocabulary semantic segmentation models aim to accurately assign a semantic label to each pixel in an image from a set of arbitrary open-vocabulary texts. In order to learn such pixel-level alignment, current approaches typically rely on a combination of (i) image-level VL models (e.g. CLIP), (ii) ground truth masks, and (iii) custom grouping encoders. In this paper, we introduce S-Seg, a novel model that can achieve surprisingly strong performance without depending on any of the above elements. S-Seg leverages pseudo-masks and language to train a MaskFormer, and can be easily trained from publicly available image-text datasets. Contrary to prior works, our model directly trains for pixel-level features and language alignment. Once trained, S-Seg generalizes well to multiple testing datasets without requiring fine-tuning. In addition, S-Seg has the extra benefits of scalability with data and consistent improvement when augmented with self-training. We believe that our simple yet effective approach will serve as a solid baseline for future research.

Title: Interpreting CLIP's Image Representation via Text-Based Decomposition

Authors: Yossi Gandelsman, Alexei A. Efros, Jacob Steinhardt

PubTime: 2024-01-22

Downlink: http://arxiv.org/abs/2310.05916v3

Project: https://yossigandelsman.github.io/clip_decomposition/

Abstract: We investigate the CLIP image encoder by analyzing how individual model components affect the final representation. We decompose the image representation as a sum across individual image patches, model layers, and attention heads, and use CLIP’s text representation to interpret the summands. Interpreting the attention heads, we characterize each head’s role by automatically finding text representations that span its output space, which reveals property-specific roles for many heads (e.g. location or shape). Next, interpreting the image patches, we uncover an emergent spatial localization within CLIP. Finally, we use this understanding to remove spurious features from CLIP and to create a strong zero-shot image segmenter. Our results indicate that a scalable understanding of transformer models is attainable and can be used to repair and improve models.

Title: SpatialVLM: Endowing Vision-Language Models with Spatial Reasoning Capabilities

Authors: Boyuan Chen, Zhuo Xu, Sean Kirmani

PubTime: 2024-01-22

Downlink: http://arxiv.org/abs/2401.12168v1

Project: https://spatial-vlm.github.io/

Abstract: Understanding and reasoning about spatial relationships is a fundamental capability for Visual Question Answering (VQA) and robotics. While Vision Language Models (VLM) have demonstrated remarkable performance in certain VQA benchmarks, they still lack capabilities in 3D spatial reasoning, such as recognizing quantitative relationships of physical objects like distances or size differences. We hypothesize that VLMs’ limited spatial reasoning capability is due to the lack of 3D spatial knowledge in training data and aim to solve this problem by training VLMs with Internet-scale spatial reasoning data. To this end, we present a system to facilitate this approach. We first develop an automatic 3D spatial VQA data generation framework that scales up to 2 billion VQA examples on 10 million real-world images. We then investigate various factors in the training recipe, including data quality, training pipeline, and VLM architecture. Our work features the first internet-scale 3D spatial reasoning dataset in metric space. By training a VLM on such data, we significantly enhance its ability on both qualitative and quantitative spatial VQA. Finally, we demonstrate that this VLM unlocks novel downstream applications in chain-of-thought spatial reasoning and robotics due to its quantitative estimation capability. Project website: https://spatial-vlm.github.io/

Title: Towards the Detection of Diffusion Model Deepfakes

Authors: Jonas Ricker, Simon Damm, Thorsten Holz

PubTime: 2024-01-22

Downlink: http://arxiv.org/abs/2210.14571v4

GitHub: https://github.com/jonasricker/diffusion-model-deepfake-detection

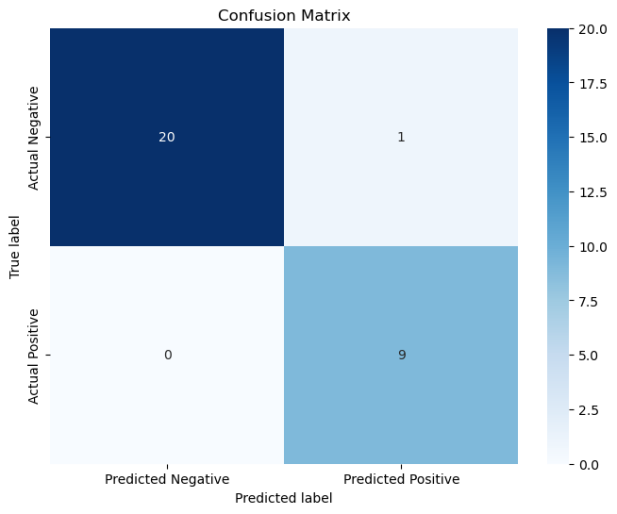

Abstract: In the course of the past few years, diffusion models (DMs) have reached an unprecedented level of visual quality. However, relatively little attention has been paid to the detection of DM-generated images, which is critical to prevent adverse impacts on our society. In contrast, generative adversarial networks (GANs) have been extensively studied from a forensic perspective. In this work, we therefore take the natural next step to evaluate whether previous methods can be used to detect images generated by DMs. Our experiments yield two key findings: (1) state-of-the-art GAN detectors are unable to reliably distinguish real from DM-generated images, but (2) re-training them on DM-generated images allows for almost perfect detection, which remarkably even generalizes to GANs. Together with a feature space analysis, our results lead to the hypothesis that DMs produce fewer detectable artifacts and are thus more difficult to detect compared to GANs. One possible reason for this is the absence of grid-like frequency artifacts in DM-generated images, which are a known weakness of GANs. However, we make the interesting observation that diffusion models tend to underestimate high frequencies, which we attribute to the learning objective.

Title: MathVista: Evaluating Mathematical Reasoning of Foundation Models in Visual Contexts

Authors: Pan Lu, Hritik Bansal, Tony Xia

PubTime: 2024-01-21

Downlink: http://arxiv.org/abs/2310.02255v3

Project: https://mathvista.github.io/

Abstract: Large Language Models (LLMs) and Large Multimodal Models (LMMs) exhibit impressive problem-solving skills in many tasks and domains, but their ability in mathematical reasoning in visual contexts has not been systematically studied. To bridge this gap, we present MathVista, a benchmark designed to combine challenges from diverse mathematical and visual tasks. It consists of 6,141 examples, derived from 28 existing multimodal datasets involving mathematics and 3 newly created datasets (i.e., IQTest, FunctionQA, and PaperQA). Completing these tasks requires fine-grained, deep visual understanding and compositional reasoning, which all state-of-the-art foundation models find challenging. With MathVista, we have conducted a comprehensive, quantitative evaluation of 12 prominent foundation models. The best-performing GPT-4V model achieves an overall accuracy of 49.9%, substantially outperforming Bard, the second-best performer, by 15.1%. Our in-depth analysis reveals that the superiority of GPT-4V is mainly attributed to its enhanced visual perception and mathematical reasoning. However, GPT-4V still falls short of human performance by 10.4%, as it often struggles to understand complex figures and perform rigorous reasoning. This significant gap underscores the critical role that MathVista will play in the development of general-purpose AI agents capable of tackling mathematically intensive and visually rich real-world tasks. We further explore the new ability of self-verification, the application of self-consistency, and the interactive chatbot capabilities of GPT-4V, highlighting its promising potential for future research. The project is available at https://mathvista.github.io/.
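The accuracy figures quoted in the abstract imply two numbers that are not stated outright. The short calculation below derives them, under the assumption that the 15.1% and 10.4% margins are absolute percentage-point differences rather than relative ones; the variable names are illustrative only.

```python
# Figures quoted in the abstract (percent).
gpt4v_accuracy = 49.9   # overall accuracy of GPT-4V
lead_over_bard = 15.1   # margin of GPT-4V over Bard
gap_to_human = 10.4     # shortfall of GPT-4V relative to humans

# Assumption: both margins are absolute differences in percentage points.
bard_accuracy = round(gpt4v_accuracy - lead_over_bard, 1)
human_accuracy = round(gpt4v_accuracy + gap_to_human, 1)

print(f"Implied Bard accuracy:  {bard_accuracy}%")
print(f"Implied human accuracy: {human_accuracy}%")
```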

Title: Pixel-Wise Recognition for Holistic Surgical Scene Understanding

Authors: Nicolás Ayobi, Santiago Rodríguez, Alejandra Pérez

PubTime: 2024-01-20

Downlink: http://arxiv.org/abs/2401.11174v1

Project: https://link.springer.com/chapter/10.1007/978-3-031-16449-1_42, https://ieeexplore.ieee.org/document/10230819

Abstract: This paper presents the Holistic and Multi-Granular Surgical Scene Understanding of Prostatectomies (GraSP) dataset, a curated benchmark that models surgical scene understanding as a hierarchy of complementary tasks with varying levels of granularity. Our approach enables a multi-level comprehension of surgical activities, encompassing long-term tasks such as surgical phases and steps recognition and short-term tasks including surgical instrument segmentation and atomic visual actions detection. To exploit our proposed benchmark, we introduce the Transformers for Actions, Phases, Steps, and Instrument Segmentation (TAPIS) model, a general architecture that combines a global video feature extractor with localized region proposals from an instrument segmentation model to tackle the multi-granularity of our benchmark. Through extensive experimentation, we demonstrate the impact of including segmentation annotations in short-term recognition tasks, highlight the varying granularity requirements of each task, and establish TAPIS’s superiority over previously proposed baselines and conventional CNN-based models. Additionally, we validate the robustness of our method across multiple public benchmarks, confirming the reliability and applicability of our dataset. This work represents a significant step forward in Endoscopic Vision, offering a novel and comprehensive framework for future research towards a holistic understanding of surgical procedures.

== diffusion model ==

Title: DITTO: Diffusion Inference-Time T-Optimization for Music Generation

Authors: Zachary Novack, Julian McAuley, Taylor Berg-Kirkpatrick

PubTime: 2024-01-22

Downlink: http://arxiv.org/abs/2401.12179v1

Project: https://DITTO-Music.github.io/web/

Abstract: We propose Diffusion Inference-Time T-Optimization (DITTO), a general-purpose framework for controlling pre-trained text-to-music diffusion models at inference-time via optimizing initial noise latents. Our method can be used to optimize through any differentiable feature matching loss to achieve a target (stylized) output and leverages gradient checkpointing for memory efficiency. We demonstrate a surprisingly wide range of applications for music generation including inpainting, outpainting, and looping as well as intensity, melody, and musical structure control - all without ever fine-tuning the underlying model. When we compare our approach against related training, guidance, and optimization-based methods, we find DITTO achieves state-of-the-art performance on nearly all tasks, including outperforming comparable approaches on controllability, audio quality, and computational efficiency, thus opening the door for high-quality, flexible, training-free control of diffusion models. Sound examples can be found at https://DITTO-Music.github.io/web/.
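The core idea of steering a frozen generator by optimizing only its initial latent can be shown with a deliberately tiny toy. This is a sketch under strong assumptions, not the DITTO implementation: the "sampler" is a fixed scalar recurrence rather than a diffusion model, the feature extractor is hypothetical, and gradients are estimated with central finite differences instead of backpropagation with gradient checkpointing.

```python
def frozen_sampler(z, steps=4):
    """Toy stand-in for a frozen, deterministic sampler (no trainable weights)."""
    x = z
    for _ in range(steps):
        x = 0.5 * x + 0.1 * x * x  # fixed update applied at every step
    return x


def feature(x):
    """Hypothetical differentiable feature of the output (e.g. an intensity measure)."""
    return 2.0 * x


def loss(z, target):
    """Feature-matching loss between the generated output and a target feature."""
    return (feature(frozen_sampler(z)) - target) ** 2


def optimize_initial_latent(z0, target, lr=0.5, iters=200, eps=1e-5):
    """Gradient descent on the initial latent only; the sampler is never changed."""
    z = z0
    for _ in range(iters):
        # Central finite difference, swapped in here for backpropagation.
        grad = (loss(z + eps, target) - loss(z - eps, target)) / (2 * eps)
        z -= lr * grad
    return z


z_init = 1.0
z_opt = optimize_initial_latent(z_init, target=0.5)
print("loss before:", loss(z_init, 0.5))
print("loss after: ", loss(z_opt, 0.5))
```

Only `z` is updated, so the generator's behaviour is controlled without any fine-tuning, which is the property the abstract emphasises.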

Title: Towards the Detection of Diffusion Model Deepfakes

Authors: Jonas Ricker, Simon Damm, Thorsten Holz

PubTime: 2024-01-22

Downlink: http://arxiv.org/abs/2210.14571v4

GitHub: https://github.com/jonasricker/diffusion-model-deepfake-detection

Abstract: In the course of the past few years, diffusion models (DMs) have reached an unprecedented level of visual quality. However, relatively little attention has been paid to the detection of DM-generated images, which is critical to prevent adverse impacts on our society. In contrast, generative adversarial networks (GANs) have been extensively studied from a forensic perspective. In this work, we therefore take the natural next step to evaluate whether previous methods can be used to detect images generated by DMs. Our experiments yield two key findings: (1) state-of-the-art GAN detectors are unable to reliably distinguish real from DM-generated images, but (2) re-training them on DM-generated images allows for almost perfect detection, which remarkably even generalizes to GANs. Together with a feature space analysis, our results lead to the hypothesis that DMs produce fewer detectable artifacts and are thus more difficult to detect compared to GANs. One possible reason for this is the absence of grid-like frequency artifacts in DM-generated images, which are a known weakness of GANs. However, we make the interesting observation that diffusion models tend to underestimate high frequencies, which we attribute to the learning objective.

Title: MaskDiff: Modeling Mask Distribution with Diffusion Probabilistic Model for Few-Shot Instance Segmentation

Authors: Minh-Quan Le, Tam V. Nguyen, Trung-Nghia Le

PubTime: 2024-01-21

Downlink: http://arxiv.org/abs/2303.05105v2

GitHub: https://github.com/minhquanlecs/MaskDiff

Abstract: Few-shot instance segmentation extends the few-shot learning paradigm to the instance segmentation task, which tries to segment instance objects from a query image with a few annotated examples of novel categories. Conventional approaches have attempted to address the task via prototype learning, known as point estimation. However, this mechanism depends on prototypes (e.g. the mean of K-shot) for prediction, leading to performance instability. To overcome the disadvantage of the point estimation mechanism, we propose a novel approach, dubbed MaskDiff, which models the underlying conditional distribution of a binary mask, which is conditioned on an object region and K-shot information. Inspired by augmentation approaches that perturb data with Gaussian noise for populating low data density regions, we model the mask distribution with a diffusion probabilistic model. We also propose to utilize classifier-free guided mask sampling to integrate category information into the binary mask generation process. Without bells and whistles, our proposed method consistently outperforms state-of-the-art methods on both base and novel classes of the COCO dataset while simultaneously being more stable than existing methods. The source code is available at: https://github.com/minhquanlecs/MaskDiff.

Title: Scalable High-Resolution Pixel-Space Image Synthesis with Hourglass Diffusion Transformers

Authors: Katherine Crowson, Stefan Andreas Baumann, Alex Birch

PubTime: 2024-01-21

Downlink: http://arxiv.org/abs/2401.11605v1

Project: https://crowsonkb.github.io/hourglass-diffusion-transformers/

Abstract: We present the Hourglass Diffusion Transformer (HDiT), an image generative model that exhibits linear scaling with pixel count, supporting training at high-resolution (e.g. $1024 \times 1024$) directly in pixel-space. Building on the Transformer architecture, which is known to scale to billions of parameters, it bridges the gap between the efficiency of convolutional U-Nets and the scalability of Transformers. HDiT trains successfully without typical high-resolution training techniques such as multiscale architectures, latent autoencoders or self-conditioning. We demonstrate that HDiT performs competitively with existing models on ImageNet $256^2$, and sets a new state-of-the-art for diffusion models on FFHQ-$1024^2$.

Title: Promptable Game Models: Text-Guided Game Simulation via Masked Diffusion Models

Authors: Willi Menapace, Aliaksandr Siarohin, Stéphane Lathuilière

PubTime: 2024-01-21

Downlink: http://arxiv.org/abs/2303.13472v3

Project: https://snap-research.github.io/promptable-game-models/, http://dx.doi.org/10.1145/3635705

中文摘要: 神经视频游戏模拟器作为生成和编辑视频的强大工具出现。他们的想法是将游戏表现为由代理人的行动驱动的环境状态的演变。虽然这种范式使用户能够一个动作一个动作地玩游戏,但它的僵化排除了更多语义形式的控制。为了克服这一限制,我们用指定为一组自然语言动作和期望状态的提示来扩充游戏模型。其结果——一个可提示的游戏模型(PGM)——使用户可以通过用高级和低级动作序列来提示游戏。最吸引人的是,我们的PGM解锁了导演模式,通过提示的形式为代理指定目标来玩游戏。这需要学习由我们的动画模型封装的“游戏人工智能”,使用高级约束来导航场景,与对手比赛,并设计策略来赢得一分。为了呈现结果状态,我们使用封装在合成模型中的合成NeRF表示。为了促进未来的研究,我们提出了新收集的,注释和校准的网球和《我的世界》数据集。我们的方法在渲染质量方面明显优于现有的神经视频游戏模拟器,并解锁了超出当前技术水平能力的应用程序。我们的框架、数据和模型可在https://snap-research.github.io/promptable-game-models/。获得

摘要: Neural video game simulators emerged as powerful tools to generate and edit videos. Their idea is to represent games as the evolution of an environment’s state driven by the actions of its agents. While such a paradigm enables users to play a game action-by-action, its rigidity precludes more semantic forms of control. To overcome this limitation, we augment game models with prompts specified as a set of natural language actions and desired states. The result, a Promptable Game Model (PGM), makes it possible for a user to play the game by prompting it with high- and low-level action sequences. Most captivatingly, our PGM unlocks the director’s mode, where the game is played by specifying goals for the agents in the form of a prompt. This requires learning “game AI”, encapsulated by our animation model, to navigate the scene using high-level constraints, play against an adversary, and devise a strategy to win a point. To render the resulting state, we use a compositional NeRF representation encapsulated in our synthesis model. To foster future research, we present newly collected, annotated and calibrated Tennis and Minecraft datasets. Our method significantly outperforms existing neural video game simulators in terms of rendering quality and unlocks applications beyond the capabilities of the current state of the art. Our framework, data, and models are available at https://snap-research.github.io/promptable-game-models/.

标题: DiffuMask: Synthesizing Images with Pixel-level Annotations for Semantic Segmentation Using Diffusion Models

作者: Weijia Wu, Yuzhong Zhao, Mike Zheng Shou

PubTime: 2024-01-21

Downlink: http://arxiv.org/abs/2303.11681v4

Project: https://weijiawu.github.io/DiffusionMask/

中文摘要: 收集图像并进行像素级标注既费时又费力。相比之下,使用生成模型(如DALL-E、Stable Diffusion)可以免费获得合成数据。在本文中,我们表明,可以为现成的Stable Diffusion模型生成的合成图像自动获得准确的语义掩码,而该模型在训练期间仅使用文本-图像对。我们的方法称为DiffuMask,它利用了文本和图像之间交叉注意力图的潜力,可以自然、无缝地将文本驱动的图像合成扩展到语义掩码生成。DiffuMask使用文本引导的交叉注意力信息来定位特定于类别/单词的区域,并结合一些实用技术,生成新颖的高分辨率、具有类别区分性的像素级掩码。这些方法有助于明显降低数据采集和标注的成本。实验表明,在DiffuMask的合成数据上训练的现有分割方法可以取得与使用真实数据(VOC 2012,Cityscapes)训练相当的性能。对于某些类别(如bird),DiffuMask表现出良好的性能,接近真实数据上的最新结果(mIoU差距在3%以内)。此外,在开放词汇分割(零样本)设置中,DiffuMask在VOC 2012的未见类别上取得了新的SOTA结果。该项目的网址是 https://weijiawu.github.io/DiffusionMask/ 。

摘要: Collecting and annotating images with pixel-wise labels is time-consuming and laborious. In contrast, synthetic data can be freely available using a generative model (e.g., DALL-E, Stable Diffusion). In this paper, we show that it is possible to automatically obtain accurate semantic masks of synthetic images generated by the off-the-shelf Stable Diffusion model, which uses only text-image pairs during training. Our approach, called DiffuMask, exploits the potential of the cross-attention map between text and image, which is natural and seamless to extend the text-driven image synthesis to semantic mask generation. DiffuMask uses text-guided cross-attention information to localize class/word-specific regions, which are combined with practical techniques to create a novel high-resolution and class-discriminative pixel-wise mask. The methods help to reduce data collection and annotation costs obviously. Experiments demonstrate that the existing segmentation methods trained on synthetic data of DiffuMask can achieve a competitive performance over the counterpart of real data (VOC 2012, Cityscapes). For some classes (e.g., bird), DiffuMask presents promising performance, close to the state-of-the-art result of real data (within 3% mIoU gap). Moreover, in the open-vocabulary segmentation (zero-shot) setting, DiffuMask achieves a new SOTA result on Unseen class of VOC 2012. The project website can be found at https://weijiawu.github.io/DiffusionMask/.
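
As a rough illustration of how a text-conditioned cross-attention map could be turned into a binary mask, the sketch below min-max normalizes a toy 2D attention map and applies a fixed threshold. This is only a minimal sketch and not the DiffuMask pipeline: the abstract says the attention information is combined with further "practical techniques" that are not reproduced here, and the function name `attention_to_mask`, the fixed threshold of 0.5, and the toy values are all assumptions made for illustration.

```python
from typing import List


def attention_to_mask(attn: List[List[float]], threshold: float = 0.5) -> List[List[int]]:
    """Binarize a 2D attention map: min-max normalize to [0, 1], then threshold.

    The fixed threshold is a hypothetical choice for this sketch.
    """
    flat = [value for row in attn for value in row]
    lowest, highest = min(flat), max(flat)
    span = highest - lowest
    if span == 0:
        # A constant map carries no localization signal; return an empty mask.
        return [[0 for _ in row] for row in attn]
    return [
        [1 if (value - lowest) / span >= threshold else 0 for value in row]
        for row in attn
    ]


# Toy 3x3 attention map for a single class word (hypothetical values).
toy_attention = [
    [0.05, 0.10, 0.08],
    [0.12, 0.90, 0.70],
    [0.07, 0.65, 0.80],
]

mask = attention_to_mask(toy_attention)
for row in mask:
    print(row)
```

In this toy case the high-attention block in the lower right is marked as foreground and everything else as background.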

== Visual Navigation ==

标题: Spatial and Temporal Hierarchy for Autonomous Navigation using Active Inference in Minigrid Environment

作者: Daria de Tinguy, Toon van de Maele, Tim Verbelen

PubTime: 2024-01-22

Downlink: http://arxiv.org/abs/2312.05058v2

中文摘要: 强有力的证据表明,人类结合使用拓扑地标和粗粒度路径整合来探索环境。这种方法依赖于可识别的环境特征(拓扑地标)以及对距离和方向的估计(粗粒度路径整合)来构建周围环境的认知地图。这种认知地图被认为具有层次结构,从而在解决复杂的导航任务时能够进行高效的规划。受人类行为的启发,本文提出了一个可扩展的分层主动推理模型,用于自主导航、探索和面向目标的行为。该模型使用视觉观察和运动感知,将好奇心驱动的探索与目标导向的行为结合起来。运动规划使用不同层次的推理,即从上下文到地点再到运动。这使得模型能够在新的空间中高效导航,并快速向目标前进。通过结合这些人类导航策略及其对环境的分层表示,该模型为自主导航和探索提出了一种新的解决方案。该方法通过在Minigrid环境中的仿真得到了验证。

摘要: Robust evidence suggests that humans explore their environment using a combination of topological landmarks and coarse-grained path integration. This approach relies on identifiable environmental features (topological landmarks) in tandem with estimations of distance and direction (coarse-grained path integration) to construct cognitive maps of the surroundings. This cognitive map is believed to exhibit a hierarchical structure, allowing efficient planning when solving complex navigation tasks. Inspired by human behaviour, this paper presents a scalable hierarchical active inference model for autonomous navigation, exploration, and goal-oriented behaviour. The model uses visual observation and motion perception to combine curiosity-driven exploration with goal-oriented behaviour. Motion is planned using different levels of reasoning, i.e., from context to place to motion. This allows for efficient navigation in new spaces and rapid progress toward a target. By incorporating these human navigational strategies and their hierarchical representation of the environment, this model proposes a new solution for autonomous navigation and exploration. The approach is validated through simulations in a mini-grid environment.
