机器学习笔记-聚类算法

- 聚类算法K-means

- k-means的模型评估

- k-means的优化

- PCA降维

- 主成分分析-PCA降维

- PCA+K-means例子

聚类算法K-means

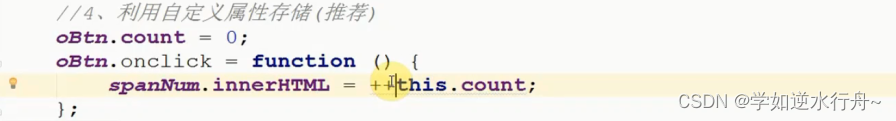

- 代码

import matplotlib.pyplot as plt

from sklearn.datasets.samples_generator import make_blobs

from sklearn.cluster import KMeans

from sklearn.metrics import calinski_harabaz_score# 创建数据

X, y = make_blobs(n_samples=1000, n_features=2, centers=[[-1, -1], [0, 0], [1, 1], [2, 2]], cluster_std=[0.4, 0.2, 0.2, 0.2], random_state=9)plt.scatter(X[:, 0], X[:, 1], marker="o")

plt.show()

## 图# kmeans训练,且可视化 聚类=2

y_pre = KMeans(n_clusters=2, random_state=9).fit_predict(X)# 可视化展示

plt.scatter(X[:, 0], X[:, 1], c=y_pre)

plt.show()# 用ch_scole查看最后效果

print(calinski_harabaz_score(X, y_pre))## 图# kmeans训练,且可视化 聚类=3

y_pre = KMeans(n_clusters=3, random_state=9).fit_predict(X)# 可视化展示

plt.scatter(X[:, 0], X[:, 1], c=y_pre)

plt.show()# 用ch_scole查看最后效果

print(calinski_harabaz_score(X, y_pre))## 图# kmeans训练,且可视化 聚类=4

y_pre = KMeans(n_clusters=4, random_state=9).fit_predict(X)# 可视化展示

plt.scatter(X[:, 0], X[:, 1], c=y_pre)

plt.show()# 用ch_scole查看最后效果

print(calinski_harabaz_score(X, y_pre))

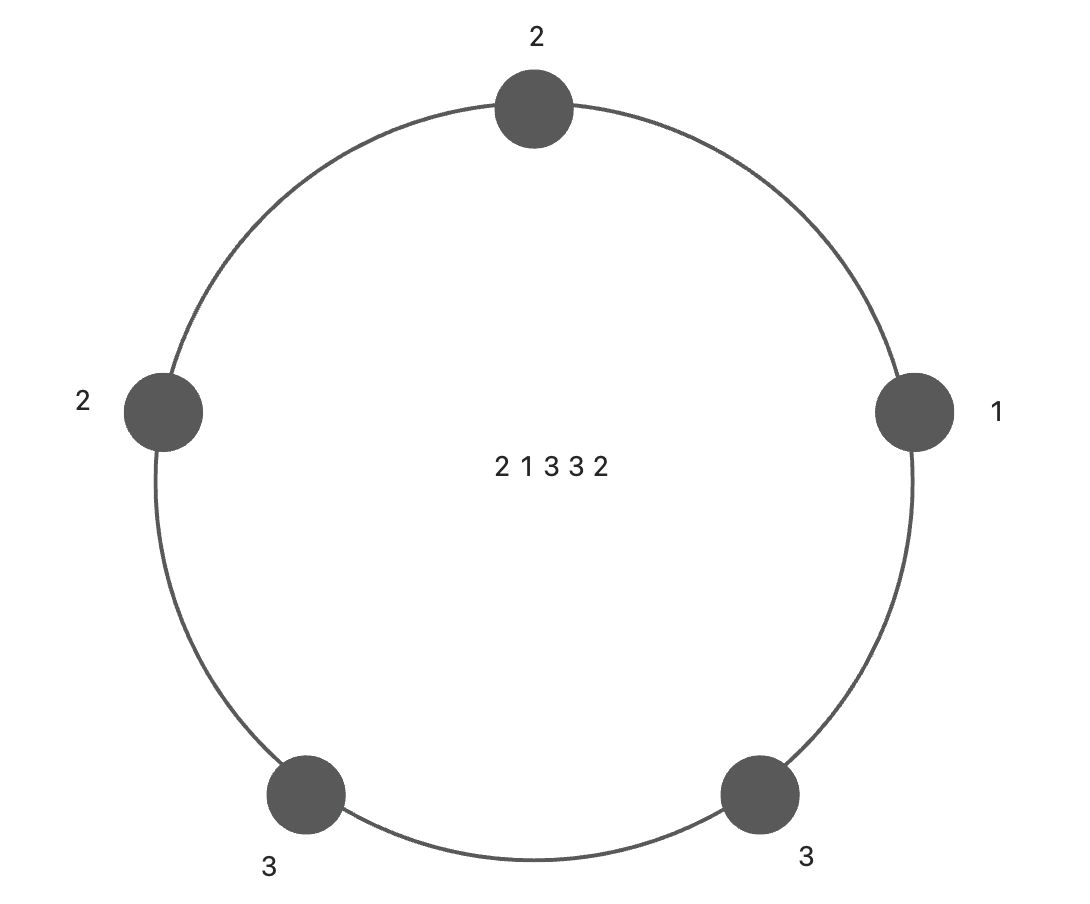

- K-means流程:随机设置k=3,3个值,然后用这三个点和其他点进行欧式距离的计算,然后看哪个点和其他点的距离最小,然后取平均值。循环往复,直到最后一个平均值的点和上一个选取的点重合,完毕。

k-means的模型评估

- 方法一SSE

k-means的优化

PCA降维

-

去除一些无关变量

-

API

-

代码

import pandas as pd

from sklearn.feature_selection import VarianceThreshold

from scipy.stats import pearsonr, spearmanrdef var_thr():"""特征选择:低方差特征过滤:return:"""data = pd.read_csv("./data/factor_returns.csv")# print(data)print(data.shape)# 实例化一个对象transfer = VarianceThreshold(threshold=10)# 转换transfer_data = transfer.fit_transform(data.iloc[:, 1:10])print(transfer_data)print(data.iloc[:, 1:10].shape)print(transfer_data.shape)def pea_demo():"""皮尔逊相关系数:return:"""# 准备数据x1 = [12.5, 15.3, 23.2, 26.4, 33.5, 34.4, 39.4, 45.2, 55.4, 60.9]x2 = [21.2, 23.9, 32.9, 34.1, 42.5, 43.2, 49.0, 52.8, 59.4, 63.5]# 判断ret = pearsonr(x1, x2)print("皮尔逊相关系数的结果是:\n", ret)def spea_demo():"""斯皮尔曼相关系数:return:"""# 准备数据x1 = [12.5, 15.3, 23.2, 26.4, 33.5, 34.4, 39.4, 45.2, 55.4, 60.9]x2 = [21.2, 23.9, 32.9, 34.1, 42.5, 43.2, 49.0, 52.8, 59.4, 63.5]# 判断ret = spearmanr(x1, x2)print("斯皮尔曼相关系数的结果是:\n", ret)if __name__ == '__main__':# var_thr()# pea_demo()# spea_demo()主成分分析-PCA降维

import pandas as pd

from sklearn.feature_selection import VarianceThreshold

from scipy.stats import pearsonr, spearmanr

from sklearn.decomposition import PCAdef pca_demo():"""pca降维:return:"""data = [[2, 8, 4, 5], [6, 3, 0, 8], [5, 4, 9, 1]]# pca小数保留百分比transfer = PCA(n_components=0.9)trans_data = transfer.fit_transform(data)print("保留0.9的数据最后维度为:\n", trans_data)# pca小数保留百分比transfer = PCA(n_components=3)trans_data = transfer.fit_transform(data)print("保留三列数据:\n", trans_data)if __name__ == '__main__':pca_demo()