Contents

- Series index

- I. Overview

- 1. Version compatibility

- 2. Dependencies

- II. Implementation

- 1. Basic usage

- 2. More configuration

- 3. Custom deserializer

- 4. Flink SQL

- III. Pitfalls

- 1. The MySQL server has a timezone offset (0 seconds ahead of UTC) which does not match the configured timezone Asia/Shanghai.

- References

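Series index

Flink from Beginner to Practice (1): Getting Started with Flink, Deploying Flink

Flink from Beginner to Practice (2): Flink DataStream API

Flink from Beginner to Practice (3): Real-Time Data Capture - Flink MySQL CDC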

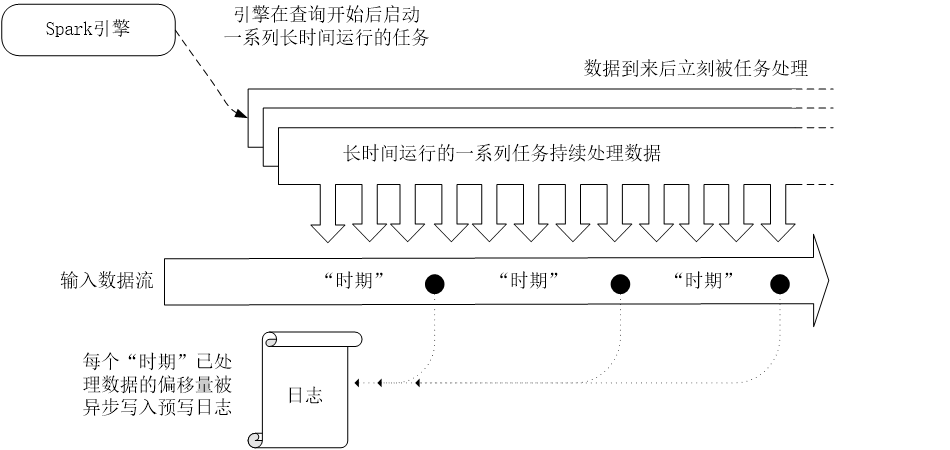

I. Overview

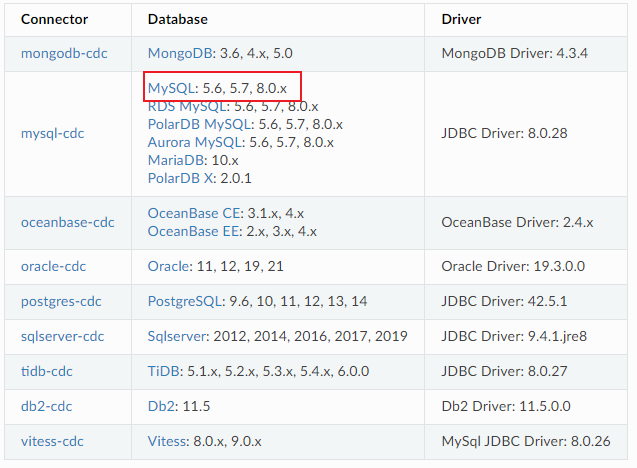

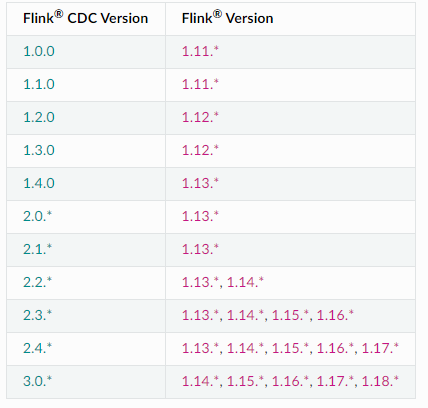

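1. Version compatibility

Pay attention to the MySQL version. This walkthrough uses MySQL 8.0.

Flink CDC also supports many other databases.

The Flink and Flink CDC versions must match as well. This walkthrough uses Flink 1.18 with Flink CDC 3.0.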

2. Dependencies

    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-clients</artifactId>
        <version>1.18.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-streaming-java</artifactId>
        <version>1.18.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-connector-base</artifactId>
        <version>1.18.0</version>
    </dependency>
    <dependency>
        <groupId>com.ververica</groupId>
        <artifactId>flink-connector-mysql-cdc</artifactId>
        <version>3.0.0</version>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>8.0.27</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-table-runtime</artifactId>
        <version>1.18.0</version>
    </dependency>

II. Implementation

1. Basic usage

    import org.apache.flink.api.common.eventtime.WatermarkStrategy;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import com.ververica.cdc.debezium.JsonDebeziumDeserializationSchema;
    import com.ververica.cdc.connectors.mysql.source.MySqlSource;

    /**
     * Flink MySQL CDC.
     * On each fresh start, all existing data is read once (initial snapshot) before binlog changes.
     */
    public class FlinkCDC01 {
        public static void main(String[] args) throws Exception {
            MySqlSource<String> mySqlSource = MySqlSource.<String>builder()
                    .hostname("192.168.56.10")
                    .port(3306)
                    .databaseList("testdb") // databases to monitor; several may be given, regex supported
                    .tableList("testdb.access") // tables to monitor, as db.table; several may be given, regex supported
                    .username("root")
                    .password("root")
                    .deserializer(new JsonDebeziumDeserializationSchema()) // converts SourceRecord to JSON String
                    .build();

            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
            // enable checkpointing every 3000 ms
            env.enableCheckpointing(3000);

            env.fromSource(mySqlSource, WatermarkStrategy.noWatermarks(), "MySQL Source")
                    .setParallelism(1) // one parallel source task
                    .print()
                    .setParallelism(1); // use parallelism 1 for the sink to keep message ordering

            env.execute("Print MySQL Snapshot + Binlog");
        }
    }

The output is JSON:

    {
      "before": null,
      "after": {
        "id": 1,
        "name": "1"
      },
      "source": {
        "version": "1.9.7.Final",
        "connector": "mysql",
        "name": "mysql_binlog_source",
        "ts_ms": 1707353812000,
        "snapshot": "false",
        "db": "testdb", // database name
        "sequence": null,
        "table": "access", // table name
        "server_id": 1,
        "gtid": null,
        "file": "binlog.000005",
        "pos": 374,
        "row": 0,
        "thread": 9,
        "query": null
      },
      "op": "c", // operation: c = create, u = update, d = delete, r = read
      "ts_ms": 1707353812450,
      "transaction": null
    }
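
Downstream code usually wants a readable operation name rather than the one-letter op code. The following is a minimal JDK-only sketch of such a mapping. The ChangeOp enum and its fromCode method are hypothetical names made up for illustration; they are not part of Flink CDC or Debezium.

```java
import java.util.Arrays;

/** Hypothetical helper: maps the "op" code of a change event to a readable operation. */
enum ChangeOp {
    CREATE("c"), UPDATE("u"), DELETE("d"), READ("r");

    private final String code;

    ChangeOp(String code) {
        this.code = code;
    }

    /** Returns the operation for a code such as "c"; throws on unknown codes. */
    static ChangeOp fromCode(String code) {
        return Arrays.stream(values())
                .filter(op -> op.code.equals(code))
                .findFirst()
                .orElseThrow(() -> new IllegalArgumentException("Unknown op code: " + code));
    }

    public static void main(String[] args) {
        System.out.println(fromCode("c")); // prints CREATE
        System.out.println(fromCode("r")); // prints READ
    }
}
```

Failing fast on an unknown code is a design choice for this sketch; a production job might prefer to log and skip such records.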

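2. More configuration

https://ververica.github.io/flink-cdc-connectors/master/content/connectors/mysql-cdc%28ZH%29.html

The scan.startup.mode option specifies the startup mode of the MySQL CDC consumer. Valid values are:

initial (default): takes an initial snapshot of the monitored tables on first startup, then continues reading the latest binlog.

earliest-offset: skips the snapshot phase and reads from the earliest available binlog offset.

latest-offset: never takes a snapshot of the monitored tables on first startup. The connector reads only from the end of the binlog, so it sees only changes made after it started.

specific-offset: skips the snapshot phase and reads from a specified binlog offset. The offset is given either as a binlog file name and position or, if GTID is enabled on the cluster, as a GTID set.

timestamp: skips the snapshot phase and reads binlog events starting from a specified timestamp.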
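
The timestamp mode needs a concrete point in time. Assuming the connector expects it as epoch milliseconds (in the DataStream API this would presumably be handed to something like StartupOptions.timestamp(long) on the source builder, which this article does not show), a wall-clock time can be converted with java.time. The class and method names below are illustrative only, and the sample time is chosen to correspond to the source ts_ms value in the JSON output above.

```java
import java.time.LocalDateTime;
import java.time.ZoneId;

/** Illustrative helper: converts a local date-time in a given zone to epoch milliseconds. */
class StartupTimestamp {

    static long toEpochMillis(LocalDateTime localTime, String zoneId) {
        return localTime.atZone(ZoneId.of(zoneId)).toInstant().toEpochMilli();
    }

    public static void main(String[] args) {
        // 2024-02-08 08:56:52 wall-clock time in Asia/Shanghai (UTC+8)
        long millis = toEpochMillis(LocalDateTime.of(2024, 2, 8, 8, 56, 52), "Asia/Shanghai");
        System.out.println(millis); // prints 1707353812000
    }
}
```

Being explicit about the zone avoids depending on the JVM default time zone, which may differ between a laptop and the cluster.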

3. Custom deserializer

    import com.ververica.cdc.debezium.DebeziumDeserializationSchema;
    import io.debezium.data.Envelope;
    import org.apache.flink.api.common.typeinfo.BasicTypeInfo;
    import org.apache.flink.api.common.typeinfo.TypeInformation;
    import org.apache.flink.util.Collector;
    import org.apache.kafka.connect.data.Field;
    import org.apache.kafka.connect.data.Struct;
    import org.apache.kafka.connect.source.SourceRecord;

    import java.util.List;

    public class DomainDeserializationSchema implements DebeziumDeserializationSchema<String> {

        @Override
        public void deserialize(SourceRecord sourceRecord, Collector<String> collector) throws Exception {
            // the topic is expected to look like serverName.database.table
            String topic = sourceRecord.topic();
            String[] split = topic.split("\\.");
            System.out.println("database: " + split[1]);
            System.out.println("table: " + split[2]);

            Struct value = (Struct) sourceRecord.value();

            // row state before the change (null for inserts and snapshot reads)
            Struct before = value.getStruct("before");
            System.out.println("before: " + before);
            if (before != null) {
                // all fields
                List<Field> fields = before.schema().fields();
                for (Field field : fields) {
                    System.out.println("before field: " + field.name() + " value: " + before.get(field));
                }
            }

            // row state after the change (null for deletes)
            Struct after = value.getStruct("after");
            System.out.println("after: " + after);
            if (after != null) {
                // all fields
                List<Field> fields = after.schema().fields();
                for (Field field : fields) {
                    System.out.println("after field: " + field.name() + " value: " + after.get(field));
                }
            }

            // operation type
            Envelope.Operation operation = Envelope.operationFor(sourceRecord);
            System.out.println("operation: " + operation);

            // emit the deserialized result (a placeholder string in this demo)
            collector.collect("aaaaaaaaaaaaa");
        }

        @Override
        public TypeInformation<String> getProducedType() {
            return BasicTypeInfo.STRING_TYPE_INFO; // type of the emitted records
        }
    }

Use it when building the source:

    MySqlSource<String> mySqlSource = MySqlSource.<String>builder()
            .hostname("192.168.56.10")
            .port(3306)
            .databaseList("testdb") // databases to monitor; several may be given
            .tableList("testdb.access") // tables to monitor; several may be given
            .username("root")
            .password("root")
            .deserializer(new DomainDeserializationSchema()) // custom deserializer
            .build();
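
The deserializer above reads split[1] and split[2] directly, which assumes every topic has the form serverName.database.table. A more defensive variant can be sketched with plain JDK code. TopicParser and TableId are hypothetical names for illustration, and the sample topic assumes the server name mysql_binlog_source seen in the JSON output earlier.

```java
import java.util.Optional;

/** Hypothetical helper that extracts database and table from a "server.database.table" topic. */
class TopicParser {

    /** Simple value holder for a database/table pair. */
    static final class TableId {
        final String database;
        final String table;

        TableId(String database, String table) {
            this.database = database;
            this.table = table;
        }
    }

    /** Returns the table id, or empty if the topic does not have exactly three dot-separated parts. */
    static Optional<TableId> parse(String topic) {
        if (topic == null) {
            return Optional.empty();
        }
        String[] parts = topic.split("\\.");
        if (parts.length != 3) {
            return Optional.empty();
        }
        return Optional.of(new TableId(parts[1], parts[2]));
    }

    public static void main(String[] args) {
        parse("mysql_binlog_source.testdb.access")
                .ifPresent(id -> System.out.println(id.database + " / " + id.table));
    }
}
```

Returning Optional lets the deserializer skip records whose topic has a different shape instead of failing with an ArrayIndexOutOfBoundsException.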

4. Flink SQL

For CDC, Flink SQL is used less often; the DataStream API is the more common choice.

III. Pitfalls

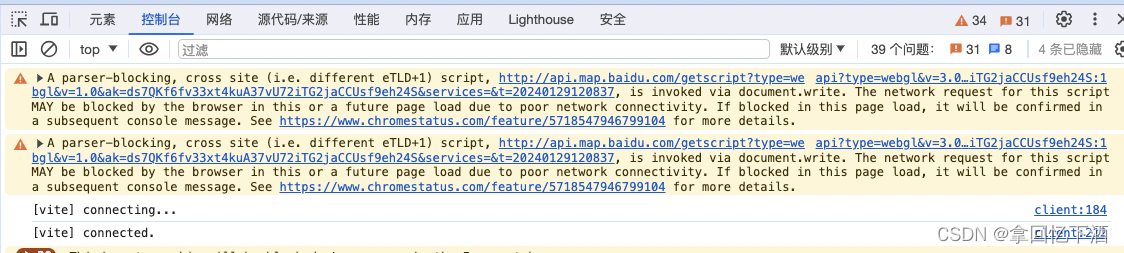

1. The MySQL server has a timezone offset (0 seconds ahead of UTC) which does not match the configured timezone Asia/Shanghai.

    2024-02-08 08:36:33 INFO 5217 --- [lt-dispatcher-6] o.a.f.r.executiongraph.ExecutionGraph : Source: MySQL Source -> Sink: Print to Std. Out (1/1) (e2371dabd0c952a5dfa7c053cbde80c3_cbc357ccb763df2852fee8c4fc7d55f2_0_2) switched from CREATED to SCHEDULED.
    2024-02-08 08:36:33 INFO 5217 --- [lt-dispatcher-8] o.a.f.r.r.s.FineGrainedSlotManager : Received resource requirements from job 369b1c979674a0444f679dd13264ea88: [ResourceRequirement{resourceProfile=ResourceProfile{UNKNOWN}, numberOfRequiredSlots=1}]
    2024-02-08 08:36:33 INFO 5218 --- [lt-dispatcher-6] o.a.flink.runtime.jobmaster.JobMaster : Trying to recover from a global failure.
    org.apache.flink.util.FlinkException: Global failure triggered by OperatorCoordinator for 'Source: MySQL Source -> Sink: Print to Std. Out' (operator cbc357ccb763df2852fee8c4fc7d55f2).
        at org.apache.flink.runtime.operators.coordination.OperatorCoordinatorHolder$LazyInitializedCoordinatorContext.failJob(OperatorCoordinatorHolder.java:624)
        at org.apache.flink.runtime.operators.coordination.RecreateOnResetOperatorCoordinator$QuiesceableContext.failJob(RecreateOnResetOperatorCoordinator.java:248)
        at org.apache.flink.runtime.source.coordinator.SourceCoordinatorContext.failJob(SourceCoordinatorContext.java:395)
        at org.apache.flink.runtime.source.coordinator.SourceCoordinator.start(SourceCoordinator.java:225)
        at org.apache.flink.runtime.operators.coordination.RecreateOnResetOperatorCoordinator$DeferrableCoordinator.resetAndStart(RecreateOnResetOperatorCoordinator.java:416)
        at org.apache.flink.runtime.operators.coordination.RecreateOnResetOperatorCoordinator.lambda$resetToCheckpoint$7(RecreateOnResetOperatorCoordinator.java:156)
        at java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:774)
        at java.util.concurrent.CompletableFuture.uniWhenCompleteStage(CompletableFuture.java:792)
        at java.util.concurrent.CompletableFuture.whenComplete(CompletableFuture.java:2153)
        at org.apache.flink.runtime.operators.coordination.RecreateOnResetOperatorCoordinator.resetToCheckpoint(RecreateOnResetOperatorCoordinator.java:143)
        at org.apache.flink.runtime.operators.coordination.OperatorCoordinatorHolder.resetToCheckpoint(OperatorCoordinatorHolder.java:284)
        at org.apache.flink.runtime.checkpoint.CheckpointCoordinator.restoreStateToCoordinators(CheckpointCoordinator.java:2044)
        at org.apache.flink.runtime.checkpoint.CheckpointCoordinator.restoreLatestCheckpointedStateInternal(CheckpointCoordinator.java:1719)
        at org.apache.flink.runtime.checkpoint.CheckpointCoordinator.restoreLatestCheckpointedStateToAll(CheckpointCoordinator.java:1647)
        at org.apache.flink.runtime.scheduler.SchedulerBase.restoreState(SchedulerBase.java:434)
        at org.apache.flink.runtime.scheduler.DefaultScheduler.restartTasks(DefaultScheduler.java:419)
        at org.apache.flink.runtime.scheduler.DefaultScheduler.lambda$null$2(DefaultScheduler.java:379)
        at java.util.concurrent.CompletableFuture.uniRun(CompletableFuture.java:719)
        at java.util.concurrent.CompletableFuture$UniRun.tryFire(CompletableFuture.java:701)
        at java.util.concurrent.CompletableFuture$Completion.run(CompletableFuture.java:456)
        at org.apache.flink.runtime.rpc.pekko.PekkoRpcActor.lambda$handleRunAsync$4(PekkoRpcActor.java:451)
        at org.apache.flink.runtime.concurrent.ClassLoadingUtils.runWithContextClassLoader(ClassLoadingUtils.java:68)
        at org.apache.flink.runtime.rpc.pekko.PekkoRpcActor.handleRunAsync(PekkoRpcActor.java:451)
        at org.apache.flink.runtime.rpc.pekko.PekkoRpcActor.handleRpcMessage(PekkoRpcActor.java:218)
        at org.apache.flink.runtime.rpc.pekko.FencedPekkoRpcActor.handleRpcMessage(FencedPekkoRpcActor.java:85)
        at org.apache.flink.runtime.rpc.pekko.PekkoRpcActor.handleMessage(PekkoRpcActor.java:168)
        at org.apache.pekko.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:33)
        at org.apache.pekko.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:29)
        at scala.PartialFunction.applyOrElse(PartialFunction.scala:127)
        at scala.PartialFunction.applyOrElse$(PartialFunction.scala:126)
        at org.apache.pekko.japi.pf.UnitCaseStatement.applyOrElse(CaseStatements.scala:29)
        at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:175)
        at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:176)
        at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:176)
        at org.apache.pekko.actor.Actor.aroundReceive(Actor.scala:547)
        at org.apache.pekko.actor.Actor.aroundReceive$(Actor.scala:545)
        at org.apache.pekko.actor.AbstractActor.aroundReceive(AbstractActor.scala:229)
        at org.apache.pekko.actor.ActorCell.receiveMessage(ActorCell.scala:590)
        at org.apache.pekko.actor.ActorCell.invoke(ActorCell.scala:557)
        at org.apache.pekko.dispatch.Mailbox.processMailbox(Mailbox.scala:280)
        at org.apache.pekko.dispatch.Mailbox.run(Mailbox.scala:241)
        at org.apache.pekko.dispatch.Mailbox.exec(Mailbox.scala:253)
        at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289)
        at java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1067)
        at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1703)
        at java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:172)
    Caused by: org.apache.flink.table.api.ValidationException: The MySQL server has a timezone offset (0 seconds ahead of UTC) which does not match the configured timezone Asia/Shanghai. Specify the right server-time-zone to avoid inconsistencies for time-related fields.
        at com.ververica.cdc.connectors.mysql.MySqlValidator.checkTimeZone(MySqlValidator.java:184)
        at com.ververica.cdc.connectors.mysql.MySqlValidator.validate(MySqlValidator.java:72)
        at com.ververica.cdc.connectors.mysql.source.MySqlSource.createEnumerator(MySqlSource.java:197)
        at org.apache.flink.runtime.source.coordinator.SourceCoordinator.start(SourceCoordinator.java:221)
        ... 42 common frames omitted

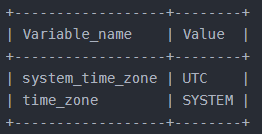

Check the time zone settings in MySQL:

    show variables like '%time_zone%';

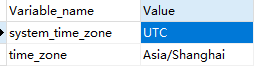

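Solution: set the MySQL time zone to match the one the connector is configured with. If MySQL rejects the named zone because its time zone tables are not loaded, the offset '+08:00' can be used instead.

    SET time_zone = 'Asia/Shanghai';
    SET @@global.time_zone = 'Asia/Shanghai';

    # Check again
    SELECT @@global.time_zone;
    show variables like '%time_zone%';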
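
The error boils down to comparing two UTC offsets: the server reports 0 seconds, while Asia/Shanghai is 8 hours ahead. The comparison can be reproduced with java.time as a rough sketch. This is not the connector's actual validation code, and the class and method names are made up for illustration. The error message also points to an alternative to changing MySQL: configure the connector's server-time-zone to match the server (on the DataStream builder this is presumably a serverTimeZone setting; treat the exact call as an assumption and check it against the connector documentation).

```java
import java.time.Instant;
import java.time.ZoneId;

/** Illustrative check: does a server's UTC offset match a configured time zone? */
class TimeZoneCheck {

    /** Returns true if the zone's offset at the given instant equals serverOffsetSeconds. */
    static boolean offsetsMatch(int serverOffsetSeconds, String configuredZone, Instant at) {
        int configuredOffset = ZoneId.of(configuredZone).getRules().getOffset(at).getTotalSeconds();
        return configuredOffset == serverOffsetSeconds;
    }

    public static void main(String[] args) {
        Instant now = Instant.now();
        // Server on UTC (offset 0) vs. connector configured with Asia/Shanghai: mismatch
        System.out.println(offsetsMatch(0, "Asia/Shanghai", now)); // prints false
        // Server switched to UTC+8 (28800 seconds): match
        System.out.println(offsetsMatch(8 * 3600, "Asia/Shanghai", now)); // prints true
    }
}
```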

References

Source code: https://github.com/ververica/flink-cdc-connectors

Documentation: https://ververica.github.io/flink-cdc-connectors/master/content/overview/cdc-connectors.html

Website: https://ververica.github.io/flink-cdc-connectors/