spark-submit fails with "Promise already completed" when submitting a job

The full log is as follows:

    File "/data5/hadoop/yarn/local/usercache/processuser/appcache/application_1706192609294_136972/container_e41_1706192609294_136972_02_000001/py4j-0.10.6-src.zip/py4j/protocol.py", line 320, in get_return_value

    py4j.protocol.Py4JJavaError: An error occurred while calling None.org.apache.spark.api.java.JavaSparkContext.

    : java.lang.IllegalStateException: Promise already completed.
        at scala.concurrent.Promise$class.complete(Promise.scala:55)
        at scala.concurrent.impl.Promise$DefaultPromise.complete(Promise.scala:153)
        at scala.concurrent.Promise$class.success(Promise.scala:86)
        at scala.concurrent.impl.Promise$DefaultPromise.success(Promise.scala:153)
        at org.apache.spark.deploy.yarn.ApplicationMaster.org$apache$spark$deploy$yarn$ApplicationMaster$$sparkContextInitialized(ApplicationMaster.scala:421)
        at org.apache.spark.deploy.yarn.ApplicationMaster$.sparkContextInitialized(ApplicationMaster.scala:828)
        at org.apache.spark.scheduler.cluster.YarnClusterScheduler.postStartHook(YarnClusterScheduler.scala:32)
        at org.apache.spark.SparkContext.<init>(SparkContext.scala:563)
        at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
        at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)
        at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)
        at py4j.Gateway.invoke(Gateway.java:238)
        at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)
        at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)
        at py4j.GatewayConnection.run(GatewayConnection.java:214)
        at java.lang.Thread.run(Thread.java:748)

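In this case, the error was caused by a mismatch between the Python version and the PySpark version, i.e. the job ran on a Python version that this PySpark release does not support.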
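To confirm a mismatch, a minimal sketch like the one below can be run with the same Python interpreter the job uses (for example, on a YARN node). It reports the interpreter version and the pip-installed pyspark version through `importlib.metadata` (Python 3.8+). The function name `report_versions` is hypothetical, chosen only for illustration.

```python
import sys
from importlib import metadata


def report_versions(package: str = "pyspark") -> dict:
    """Return the running Python version and the installed version of `package`.

    `package_version` is None when the package is not installed (via pip/conda)
    in this interpreter.
    """
    python_version = "{}.{}.{}".format(*sys.version_info[:3])
    try:
        package_version = metadata.version(package)
    except metadata.PackageNotFoundError:
        package_version = None
    return {"python": python_version, "package": package, "package_version": package_version}


if __name__ == "__main__":
    info = report_versions()
    print(f"Python {info['python']}, {info['package']} {info['package_version'] or 'not installed'}")
```

If pyspark is picked up from `$SPARK_HOME/python` rather than installed with pip, `importlib.metadata` will not see it; in that case `import pyspark; print(pyspark.__version__)` is the usual alternative.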

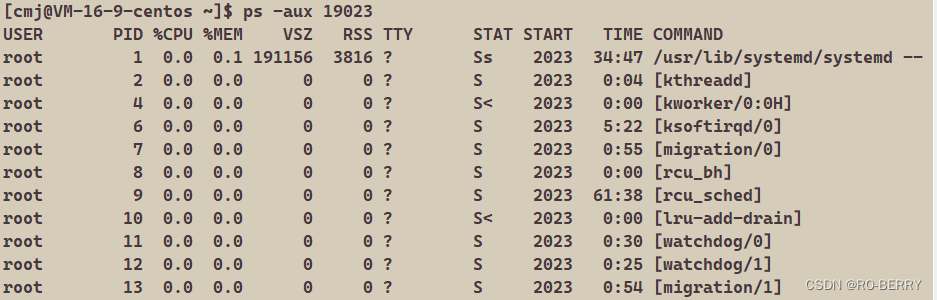

Here is how to look up which Python versions a given PySpark release supports.

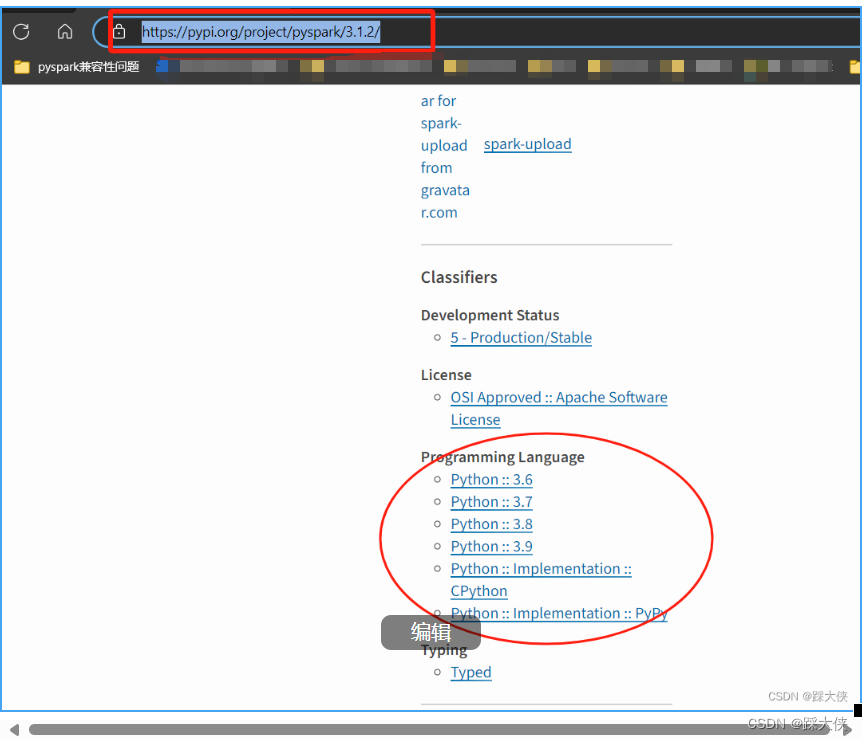

Visit the pyspark project page on PyPI (pyspark · PyPI, https://pypi.org/project/pyspark/).

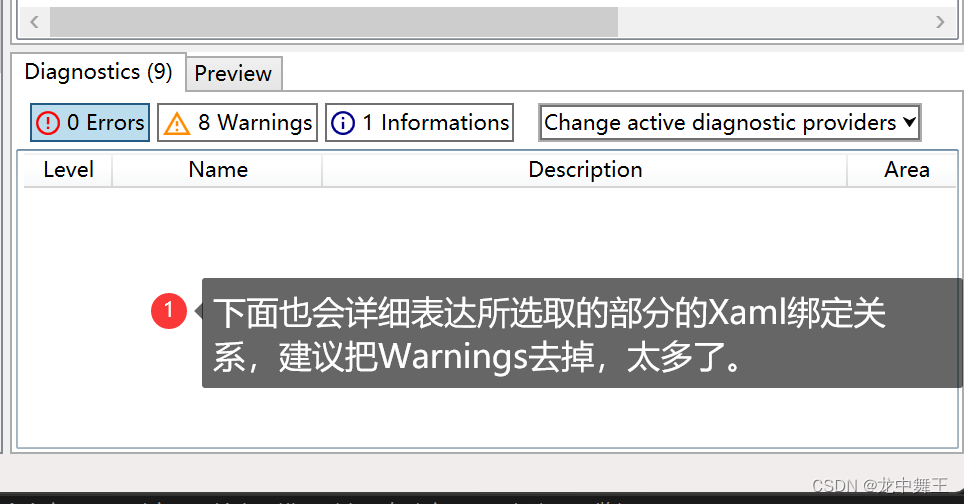

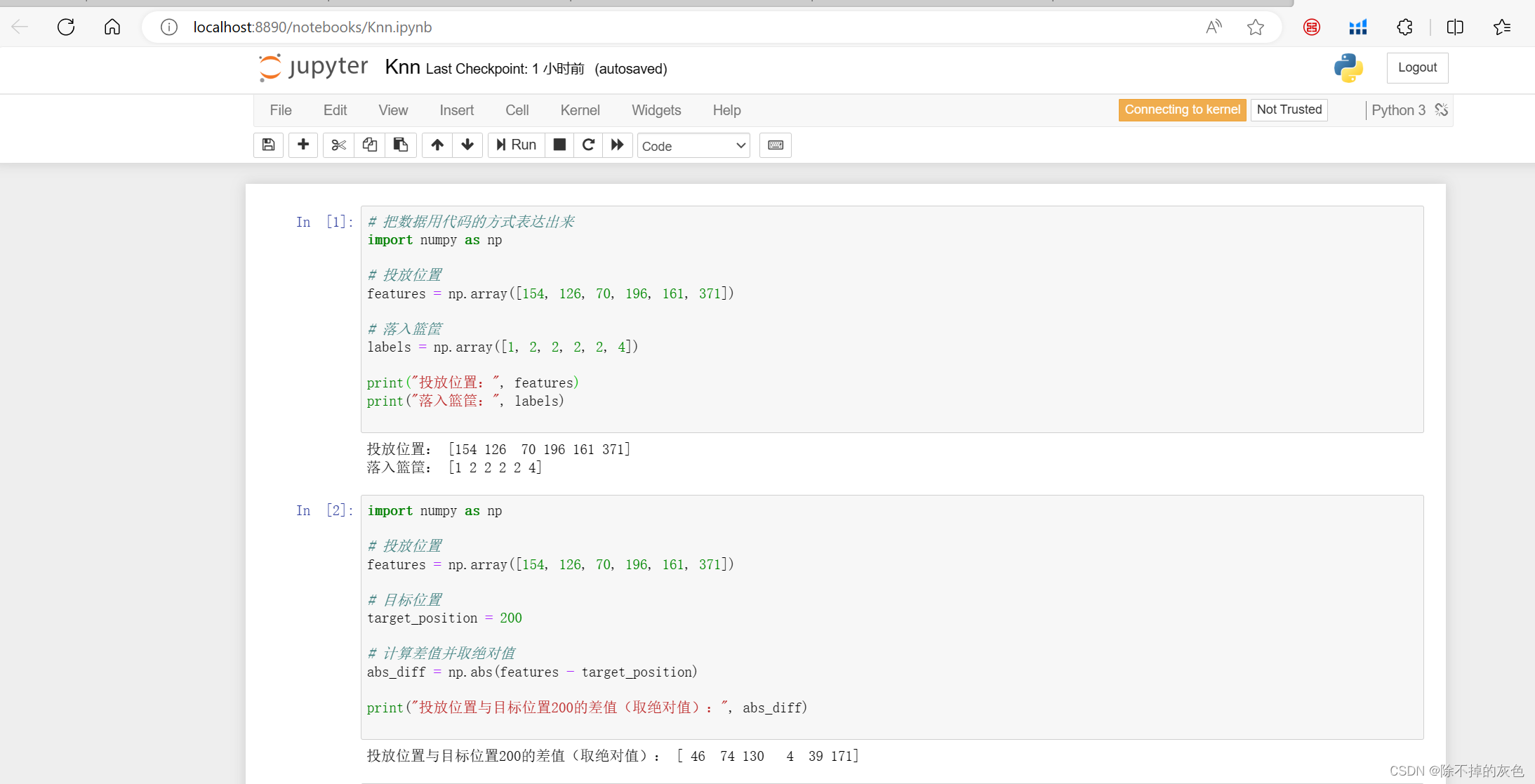

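Then check the list of supported Python versions in the lower-left part of the page (the `Programming Language :: Python` classifiers). The screenshot shows the Python versions supported by pyspark 3.1.2.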
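The same list can also be read programmatically. PyPI serves each release's metadata as JSON at `https://pypi.org/pypi/<project>/<version>/json`, and the supported versions appear as `Programming Language :: Python :: X.Y` entries in `info.classifiers`. The sketch below assumes that layout; `fetch_classifiers`, `supported_python_versions` and `is_python_supported` are hypothetical helper names, and the network request is only made when you call `fetch_classifiers` yourself.

```python
import json
import re
import sys
from urllib.request import urlopen

# Matches classifiers such as "Programming Language :: Python :: 3.8" (major.minor only);
# broader ones like "Programming Language :: Python :: 3" are ignored.
_PY_CLASSIFIER = re.compile(r"^Programming Language :: Python :: (\d+)\.(\d+)$")


def fetch_classifiers(project: str, version: str, timeout: float = 10.0) -> list:
    """Download the trove classifiers of one release from the PyPI JSON API."""
    url = f"https://pypi.org/pypi/{project}/{version}/json"
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)["info"]["classifiers"]


def supported_python_versions(classifiers: list) -> list:
    """Extract sorted (major, minor) tuples from 'Programming Language :: Python :: X.Y' classifiers."""
    versions = set()
    for classifier in classifiers:
        match = _PY_CLASSIFIER.match(classifier)
        if match:
            versions.add((int(match.group(1)), int(match.group(2))))
    return sorted(versions)


def is_python_supported(classifiers: list, python=sys.version_info[:2]) -> bool:
    """True if the given (major, minor) Python version is listed in the classifiers.

    Defaults to the interpreter running this script.
    """
    return tuple(python) in supported_python_versions(classifiers)
```

For example, `supported_python_versions(fetch_classifiers("pyspark", "3.1.2"))` should list the same versions as the screenshot above. Classifiers are declared by the package maintainers, so treat them as a guide rather than a hard guarantee.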