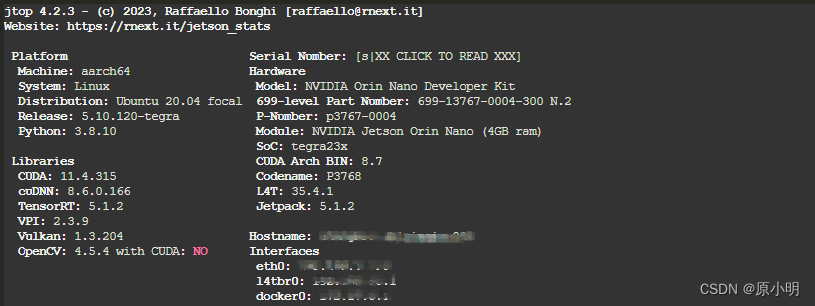

1. Device

2. Environment

```shell
sudo apt-get install protobuf-compiler libprotoc-dev
export PATH=/usr/local/cuda/bin:${PATH}
export CUDA_PATH=/usr/local/cuda
export cuDNN_PATH=/usr/lib/aarch64-linux-gnu
export CMAKE_ARGS="-DONNX_CUSTOM_PROTOC_EXECUTABLE=/usr/bin/protoc"
```
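Before starting a long build, it is worth confirming that these variables are actually set in the current shell. A minimal Python sketch that checks only what this post exports; `check_build_env` is a hypothetical helper, not part of onnxruntime's build scripts:

```python
import os
import shutil
from typing import Callable, List, Mapping

# Variables exported above that must point at existing directories.
DIR_VARS = ("CUDA_PATH", "cuDNN_PATH")


def check_build_env(env: Mapping[str, str] = os.environ,
                    is_dir: Callable[[str], bool] = os.path.isdir) -> List[str]:
    """Return a list of problems; an empty list means the setup looks complete.

    Hypothetical helper: it checks only the settings from this post, not every
    prerequisite of onnxruntime's build.sh.
    """
    problems = []
    for name in DIR_VARS:
        value = env.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif not is_dir(value):
            problems.append(f"{name}={value} is not a directory")
    # CMAKE_ARGS should point ONNX at the protoc installed via apt-get.
    if "-DONNX_CUSTOM_PROTOC_EXECUTABLE=" not in env.get("CMAKE_ARGS", ""):
        problems.append("CMAKE_ARGS does not set ONNX_CUSTOM_PROTOC_EXECUTABLE")
    # nvcc must be reachable via the PATH in `env` (/usr/local/cuda/bin above).
    if shutil.which("nvcc", path=env.get("PATH", "")) is None:
        problems.append("nvcc not found on PATH")
    return problems


if __name__ == "__main__":
    for problem in check_build_env():
        print("WARNING:", problem)
```

Run it in the same shell where the exports were made; an empty output means none of these checks failed.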

3. Source code

```shell
mkdir /code
cd /code
git clone --recursive https://github.com/Microsoft/onnxruntime.git
cd /code/onnxruntime
# Create a local branch from tag v1.16.0 and build from it
git checkout -b v1.16.0 v1.16.0
git submodule update --init --recursive --progress
```

4. Build

```shell
# --parallel 2 runs only 2 parallel build jobs, so that limited memory and CPU do not make the build fail
./build.sh --config Release --update --build --parallel 2 --build_wheel \
  --use_tensorrt --cuda_home /usr/local/cuda --cudnn_home /usr/lib/aarch64-linux-gnu \
  --tensorrt_home /usr/lib/aarch64-linux-gnu
```
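When scripting several build variants, the same flags can be assembled programmatically. A minimal Python sketch; `build_args` is a hypothetical helper that only reproduces the flags used in this post (plus the optional `--build_shared_lib` switch), it does not know about other build.sh options:

```python
import shlex
from typing import List

CUDA_HOME = "/usr/local/cuda"
# On JetPack, cuDNN and TensorRT live in the multiarch library directory.
LIB_DIR = "/usr/lib/aarch64-linux-gnu"


def build_args(parallel: int = 2, shared_lib: bool = False) -> List[str]:
    """Assemble the ./build.sh invocation used in this post.

    parallel: number of parallel build jobs; keep it small on memory-limited boards.
    shared_lib: also build libonnxruntime.so (adds --build_shared_lib).
    """
    args = ["./build.sh", "--config", "Release", "--update", "--build",
            "--parallel", str(parallel)]
    if shared_lib:
        args.append("--build_shared_lib")
    args += ["--build_wheel",
             "--use_tensorrt", "--cuda_home", CUDA_HOME,
             "--cudnn_home", LIB_DIR,
             "--tensorrt_home", LIB_DIR]
    return args


if __name__ == "__main__":
    # Print a copy-pasteable command line; run it from /code/onnxruntime.
    print(shlex.join(build_args()))
```

Passing the list to `subprocess.run(build_args(), cwd="/code/onnxruntime", check=True)` would run the build directly instead of printing it.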

If the build fails because the system runs out of memory, enlarge the swap space as described here:

https://labelnet.blog.csdn.net/article/details/136538479
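If memory is the bottleneck, the `--parallel` value can be chosen from the available RAM plus swap. A rough Python sketch; the 2 GB-per-job budget (`GB_PER_JOB`) is an assumption of this sketch, not a number from onnxruntime, so lower the job count further if the build still runs out of memory:

```python
import os

# ASSUMPTION: memory budget per parallel compile job. This is a rough guess,
# not a figure from onnxruntime; heavy CUDA/TensorRT sources may need more.
GB_PER_JOB = 2.0


def parse_meminfo(text: str) -> dict:
    """Parse /proc/meminfo content into {field: value in kB}."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            info[key.strip()] = int(fields[0])
    return info


def suggest_parallel(meminfo: dict, cpus: int) -> int:
    """Suggest a --parallel value limited by both RAM + swap and CPU count."""
    total_gb = (meminfo.get("MemTotal", 0) + meminfo.get("SwapTotal", 0)) / (1024 * 1024)
    by_memory = int(total_gb // GB_PER_JOB)
    return max(1, min(cpus, by_memory))


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        info = parse_meminfo(f.read())
    print("--parallel", suggest_parallel(info, os.cpu_count() or 1))
```

Swap counts toward the budget here because it is what keeps the build alive, although swapping makes it much slower.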

When the build succeeds, the output ends with:

```
...
build complete!
```

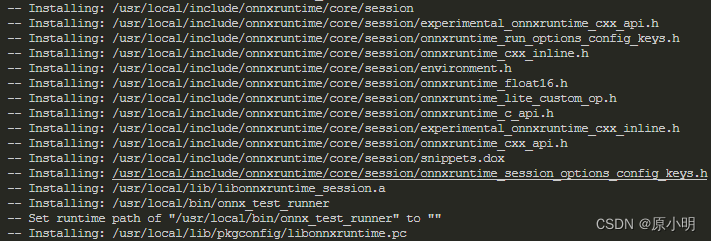

5. Install

```shell
cd /code/onnxruntime/build/Linux/Release
sudo make install
```

6. Verify

Check the installed files under /usr/local.
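A quick way to do that check is to search the install prefix for the library, the C++ header and the CMake package file. A minimal Python sketch; the file-name patterns are assumptions of this sketch, and the whole prefix is searched so that no particular subdirectory layout is assumed:

```python
from pathlib import Path
from typing import Dict, List

# "onnxruntime*onfig.cmake" matches onnxruntimeConfig.cmake or
# onnxruntime-config.cmake, the two names find_package() looks for.
PATTERNS = ("libonnxruntime*.so*", "onnxruntime_cxx_api.h", "onnxruntime*onfig.cmake")


def find_installed(prefix: str = "/usr/local") -> Dict[str, List[str]]:
    """Map each pattern to the matching files found anywhere under `prefix`."""
    root = Path(prefix)
    return {pattern: sorted(str(p) for p in root.rglob(pattern)) for pattern in PATTERNS}


if __name__ == "__main__":
    for pattern, hits in find_installed().items():
        print(pattern, "->", hits if hits else "NOT FOUND")
```

A missing `libonnxruntime*.so*` usually means the shared library was not built, i.e. `--build_shared_lib` was not passed.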

7. Downloads

(1) The entire build directory, including build/Linux/Release:

https://download.csdn.net/download/LABLENET/88943160

(2) The Python 3.8 wheel only, onnxruntime_gpu-1.16.0-cp38-cp38-linux_aarch64.whl:

https://download.csdn.net/download/LABLENET/88943155
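The wheel name encodes the interpreter and platform it targets (CPython 3.8, linux_aarch64), and pip rejects it anywhere else. A small standard-library sketch for checking a downloaded wheel against the current interpreter before installing; `parse_wheel_name` and `matches_interpreter` are hypothetical helpers, and the check is deliberately simpler than pip's own tag matching:

```python
import platform
import sys
from typing import NamedTuple


class WheelTags(NamedTuple):
    name: str
    version: str
    python: str
    abi: str
    platform: str


def parse_wheel_name(filename: str) -> WheelTags:
    """Split a wheel file name following the PEP 427 convention
    {name}-{version}(-{build})?-{python}-{abi}-{platform}.whl."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) not in (5, 6):
        raise ValueError(f"unexpected wheel name: {filename}")
    # An optional build tag (6-part form) sits after the version and is ignored.
    python_tag, abi_tag, platform_tag = parts[-3:]
    return WheelTags(parts[0], parts[1], python_tag, abi_tag, platform_tag)


def matches_interpreter(tags: WheelTags) -> bool:
    """Rough check: CPython tag (e.g. cp38) and machine (e.g. aarch64) must match.
    Ignores finer rules pip applies, such as manylinux compatibility."""
    current_py = f"cp{sys.version_info.major}{sys.version_info.minor}"
    machine = platform.machine()
    return bool(machine) and tags.python == current_py and tags.platform.endswith(machine)


if __name__ == "__main__":
    tags = parse_wheel_name("onnxruntime_gpu-1.16.0-cp38-cp38-linux_aarch64.whl")
    print(tags, "compatible:", matches_interpreter(tags))
```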

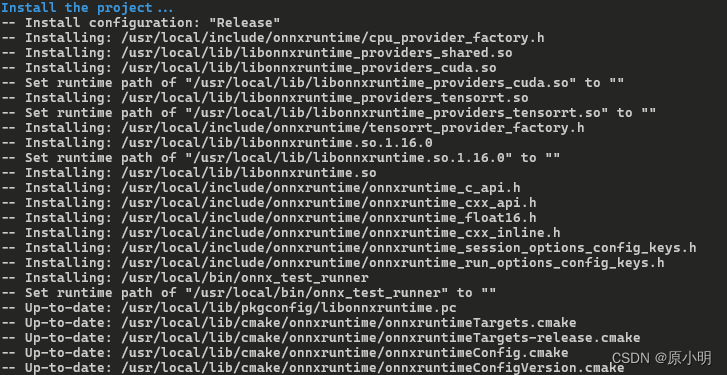

8. Building and installing the shared library

1) Build

Add --build_shared_lib:

```shell
./build.sh --config Release --update --build --parallel --build_shared_lib --build_wheel \
  --use_tensorrt --cuda_home /usr/local/cuda --cudnn_home /usr/lib/aarch64-linux-gnu \
  --tensorrt_home /usr/lib/aarch64-linux-gnu
```

2) Install

```shell
cd /code/onnxruntime/build/Linux/Release
sudo make install
```

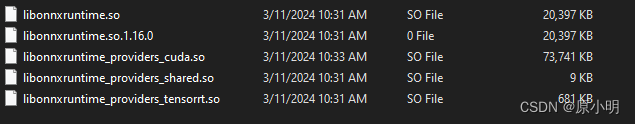

9. Shared library download and usage

For C++, see the file at https://download.csdn.net/download/LABLENET/88943411

10. C++ development

Configure it in CMakeLists.txt:

```cmake
# ...

# onnxruntime
find_package(onnxruntime REQUIRED)
message(STATUS "onnxruntime_DIR: ${onnxruntime_DIR}")
target_link_libraries(${MODULE_NAME} PUBLIC onnxruntime::onnxruntime)
```

C++ code:

```cpp
#include <iostream>
#include <onnxruntime_cxx_api.h>

int main()
{
    std::cout << Ort::GetVersionString() << std::endl;
    for (const auto &provider : Ort::GetAvailableProviders())
        std::cout << provider << std::endl;
    return 0;
}
```

11. Python development

Install the wheel:

```shell
pip3 install onnxruntime_gpu-1.16.0-cp38-cp38-linux_aarch64.whl -i https://pypi.tuna.tsinghua.edu.cn/simple/
```

Usage:

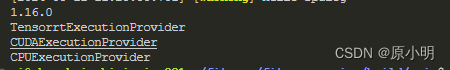

```python
import onnxruntime

print("OnnxRuntime Provider : ", onnxruntime.get_available_providers())
```

Output:

```
OnnxRuntime Provider : ['TensorrtExecutionProvider', 'CUDAExecutionProvider', 'CPUExecutionProvider']
```
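With these providers available, a session can be asked to try TensorRT first and fall back to CUDA and then CPU. A minimal sketch; `pick_providers`, `make_session` and any model path are hypothetical, while `get_available_providers()` and the `providers=` argument of `InferenceSession` are part of the onnxruntime Python API:

```python
from typing import List, Sequence

# Preference order: TensorRT first, then CUDA, with CPU as the final fallback.
PREFERRED = ["TensorrtExecutionProvider", "CUDAExecutionProvider", "CPUExecutionProvider"]


def pick_providers(available: Sequence[str]) -> List[str]:
    """Keep the preferred providers this onnxruntime build actually offers."""
    chosen = [p for p in PREFERRED if p in available]
    return chosen or ["CPUExecutionProvider"]


def make_session(model_path: str):
    """Create an InferenceSession that tries TensorRT, then CUDA, then CPU."""
    import onnxruntime  # imported lazily so pick_providers works without it

    providers = pick_providers(onnxruntime.get_available_providers())
    return onnxruntime.InferenceSession(model_path, providers=providers)
```

For example, `make_session("model.onnx").get_providers()` shows which providers the session actually registered.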