For PyTorch installation, see my previous blog post.

Reading images

from PIL import Image

img_path = "D:\\pythonProject\\learn_pytorch\\dataset\\train\\ants\\0013035.jpg"
img = Image.open(img_path)
img.show()

dir_path = "dataset/train/ants"

import os

img_path_list = os.listdir(dir_path)
img_path_list[0]
# Out[16]: '0013035.jpg'

from torch.utils.data import Dataset
from PIL import Image
import os


class MyData(Dataset):

    def __init__(self, root_dir, label_dir):
        self.root_dir = root_dir
        self.label_dir = label_dir
        self.path = os.path.join(self.root_dir, self.label_dir)
        self.img_path_list = os.listdir(self.path)  # all image file names in the folder

    def __getitem__(self, idx):
        img_name = self.img_path_list[idx]
        img_item_path = os.path.join(self.root_dir, self.label_dir, img_name)
        img = Image.open(img_item_path)
        label = self.label_dir  # the folder name doubles as the label
        return img, label

    def __len__(self):
        return len(self.img_path_list)


root_dir = "dataset/train"
ants_label_dir = "ants"
ants_dataset = MyData(root_dir, ants_label_dir)
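To train on ants and bees together, one option is to concatenate two MyData instances: torch.utils.data.Dataset defines __add__, so ants_dataset + bees_dataset returns a ConcatDataset whose indices continue past the end of the first dataset. The sketch below assumes a hypothetical in-memory class, ToyData, with made-up file names standing in for MyData, so it runs without any image files.

```python
from torch.utils.data import Dataset, ConcatDataset


class ToyData(Dataset):
    """Hypothetical stand-in for MyData: items are (file_name, label) pairs kept in memory."""

    def __init__(self, names, label):
        self.names = list(names)
        self.label = label

    def __getitem__(self, idx):
        return self.names[idx], self.label

    def __len__(self):
        return len(self.names)


ants = ToyData(["a1.jpg", "a2.jpg", "a3.jpg"], "ants")  # hypothetical file names
bees = ToyData(["b1.jpg", "b2.jpg"], "bees")

# Dataset.__add__ wraps both datasets in a ConcatDataset
train_dataset = ants + bees
print(len(train_dataset))  # 5
print(train_dataset[3])    # ('b1.jpg', 'bees'): index 3 is the first bees item
```

With the real class this would read train_dataset = ants_dataset + bees_dataset, where bees_dataset is a second MyData built from the bees folder.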

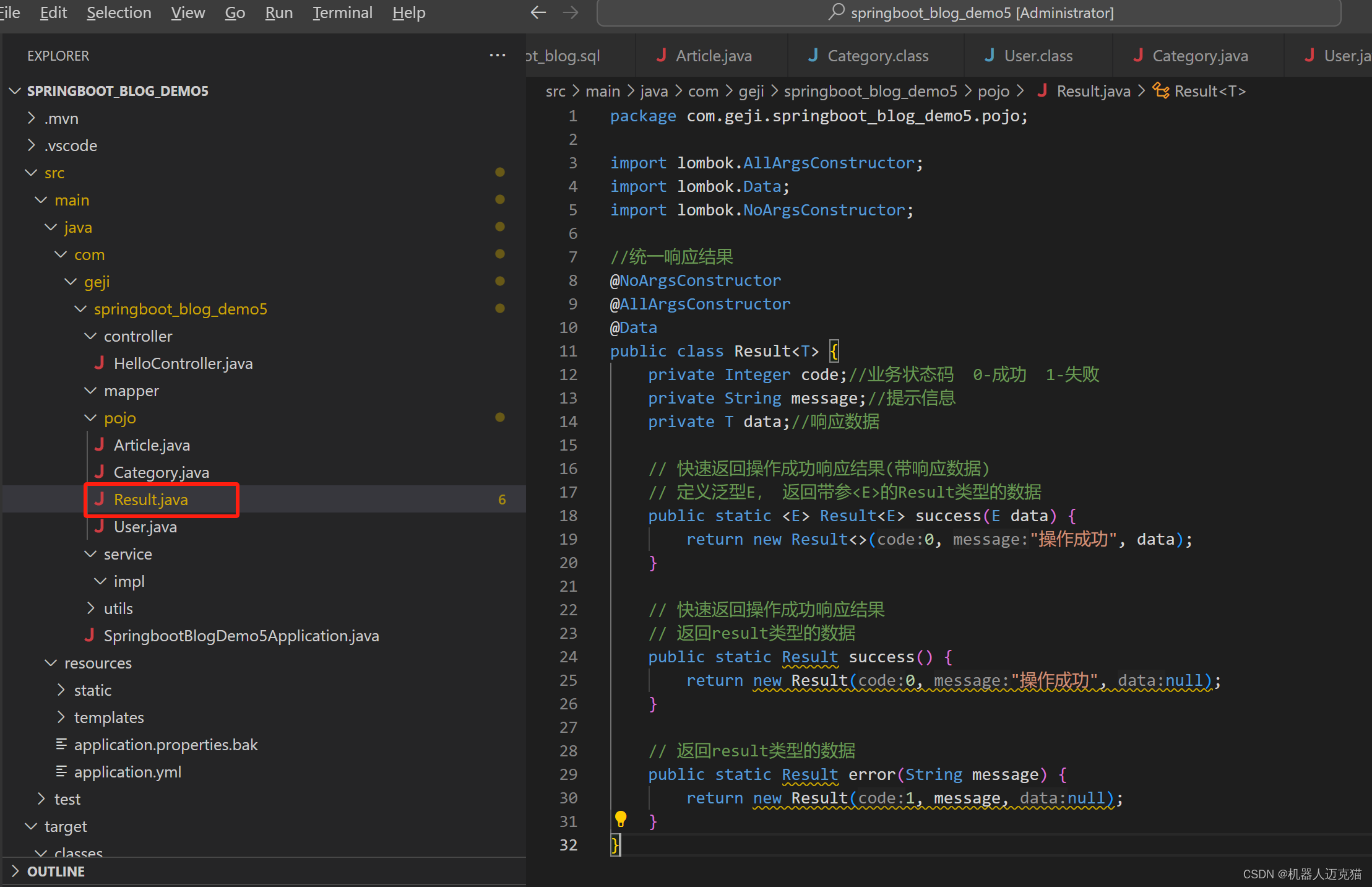

Using TensorBoard

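from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter("logs")  # event files are written to the logs folder
for i in range(100):
    # add_scalar(tag, scalar_value, global_step): plots y = 2x
    writer.add_scalar("y=2x", 2 * i, i)
writer.close()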

Event files then appear in the logs folder, as shown in the figure below.

Run the command tensorboard --logdir=logs in a terminal. It prints a URL; open it in a browser to see a visualization of the fitting process.

import numpy as np
from torch.utils.tensorboard import SummaryWriter
from PIL import Image

writer = SummaryWriter("logs")
image_path = "dataset/train/ants_image/0013035.jpg"
img_PIL = Image.open(image_path)
img_array = np.array(img_PIL)
print(type(img_array))
print(img_array.shape)  # (H, W, 3): the channel dimension comes last, so add_image needs dataformats="HWC"
# The second argument of add_image can be either a numpy array or a torch tensor.
writer.add_image("test", img_array, 1, dataformats="HWC")
writer.close()
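An alternative to dataformats="HWC" is to move the channel axis to the front yourself, because add_image expects CHW by default. A minimal NumPy sketch; the 4 x 6 all-black array with one red pixel is a hypothetical stand-in for np.array(img_PIL):

```python
import numpy as np

# Hypothetical stand-in for np.array(img_PIL): a 4 x 6 RGB image, uint8, HWC layout
img_array = np.zeros((4, 6, 3), dtype=np.uint8)
img_array[0, 0] = [255, 0, 0]  # make the top-left pixel red

# (H, W, C) -> (C, H, W)
img_chw = np.transpose(img_array, (2, 0, 1))
print(img_array.shape, img_chw.shape)  # (4, 6, 3) (3, 4, 6)
print(img_chw[:, 0, 0])                # the same red pixel, now indexed channel-first
```

With the array in CHW order, writer.add_image("test", img_chw, 1) works without the dataformats argument.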

Using Transforms

from torchvision import transforms
from PIL import Image
from torch.utils.tensorboard import SummaryWriter

img_path = "dataset/train/bees_image/16838648_415acd9e3f.jpg"
img_PIL = Image.open(img_path)
writer = SummaryWriter("logs")

# 1. How to use ToTensor?
tool = transforms.ToTensor()
img_tensor = tool(img_PIL)  # PIL image -> float tensor of shape (C, H, W)
writer.add_image("Tensor_img", img_tensor, 1)
writer.close()

from torchvision import transforms
from PIL import Image
from torch.utils.tensorboard import SummaryWriter

img_path = "dataset/train/bees_image/16838648_415acd9e3f.jpg"
img_PIL = Image.open(img_path)
writer = SummaryWriter("logs")

# 1. How to use ToTensor?
trans_totensor = transforms.ToTensor()
img_tensor = trans_totensor(img_PIL)
writer.add_image("Tensor_img", img_tensor)

# Normalize: output[channel] = (input[channel] - mean[channel]) / std[channel]
trans_norm = transforms.Normalize([8, 3, 6], [5, 2, 7])
img_norm = trans_norm(img_tensor)
writer.add_image("Normal_img", img_norm, 2)

# Resize to a fixed (height, width); takes a PIL image here
trans_resize = transforms.Resize((512, 512))
img_resize = trans_resize(img_PIL)
img_resize = trans_totensor(img_resize)
writer.add_image("Resize_img", img_resize, 0)

# Resize2: a single int scales the shorter side to 512 and keeps the aspect ratio
trans_resize2 = transforms.Resize(512)
trans_compose = transforms.Compose([trans_resize2, trans_totensor])
img_resize2 = trans_compose(img_PIL)
writer.add_image("Resize_img", img_resize2, 1)

# Random crop: a different 256 x 256 patch each call
trans_randomcrop = transforms.RandomCrop(256)
trans_compose_2 = transforms.Compose([trans_randomcrop, trans_totensor])
for i in range(10):
    img_crop_2 = trans_compose_2(img_PIL)
    writer.add_image("RandomCrop_img", img_crop_2, i)
writer.close()

Using the datasets in torchvision

import torchvision
from torch.utils.tensorboard import SummaryWriter

transforms_compose = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor()
])
train_set = torchvision.datasets.CIFAR10(root="./dataset", train=True, transform=transforms_compose, download=True)
test_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=transforms_compose, download=True)

print(test_set[0])
# print(test_set.classes)
# img, target = test_set[0]
# print(img)
# print(target)
# print(test_set.classes[target])
# img.show()

writer = SummaryWriter("logs")
for i in range(10):
    img, target = test_set[i]
    writer.add_image("test_set", img, i)
writer.close()

Using DataLoader

How DataLoader relates to Dataset

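Dataset is the data set itself; DataLoader is the loader that reads from it. The parameters you pass to DataLoader specify which dataset to read, how many samples to take at a time, and so on.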

import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Prepare the test data
test_data = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor())
# batch_size: samples per batch; shuffle: reshuffle every epoch;
# num_workers=0: load in the main process; drop_last: discard the final incomplete batch
test_loader = DataLoader(test_data, batch_size=64, shuffle=True, num_workers=0, drop_last=True)

writer = SummaryWriter("dataloader")
step = 0
for data in test_loader:
    imgs, targets = data
    print(imgs.shape)  # torch.Size([64, 3, 32, 32])
    print(targets)
    writer.add_images("test_dataloader", imgs, step)  # add_images (plural) takes a whole NCHW batch
    step = step + 1
writer.close()
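The effect of batch_size and drop_last can be seen without downloading CIFAR10. This sketch swaps in a hypothetical TensorDataset of 10 random 3 x 32 x 32 tensors and uses batch_size=4 so the leftover batch is easy to spot:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in for CIFAR10: 10 random "images" with labels 0..9
images = torch.rand(10, 3, 32, 32)
labels = torch.arange(10)
dataset = TensorDataset(images, labels)

# drop_last=True: 10 samples / 4 per batch -> 2 full batches, the last 2 samples are discarded
loader = DataLoader(dataset, batch_size=4, shuffle=False, drop_last=True)
batch_shapes = [tuple(imgs.shape) for imgs, targets in loader]
print(len(loader), batch_shapes)  # 2 [(4, 3, 32, 32), (4, 3, 32, 32)]

# drop_last=False keeps the short final batch
loader_keep = DataLoader(dataset, batch_size=4, drop_last=False)
print([len(targets) for _, targets in loader_keep])  # [4, 4, 2]
```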

nn.Module: the basic skeleton of a neural network

import torch
from torch import nn


class Tudui(nn.Module):
    def __init__(self) -> None:
        super().__init__()

    # nn.Module.__call__ runs forward() for you, so forward() is never called explicitly here
    def forward(self, input):
        output = input + 1
        return output


tudui = Tudui()
x = torch.tensor(1.0)
output = tudui(x)
print(output)

Output: tensor(2.)
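One way to see that tudui(x) goes through nn.Module.__call__ rather than straight to forward is a forward hook, which PyTorch runs only on the __call__ path. A minimal sketch; AddOne is a hypothetical copy of Tudui, and register_forward_hook is a standard nn.Module method:

```python
import torch
from torch import nn


class AddOne(nn.Module):
    # Hypothetical copy of Tudui's forward
    def forward(self, input):
        return input + 1


model = AddOne()
calls = []
# The hook receives (module, inputs, output); returning None leaves the output unchanged
model.register_forward_hook(lambda module, inp, out: calls.append(out))

x = torch.tensor(1.0)
y_call = model(x)            # via __call__: runs forward() and then the hook
y_direct = model.forward(x)  # bypasses __call__: the hook does not fire
print(y_call, y_direct, len(calls))  # tensor(2.) tensor(2.) 1
```

This is also why calling the module, rather than forward() directly, is the usual style: hooks and other module machinery only run on that path.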

Convolution

import torch
import torch.nn.functional as F

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]])
kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]])

# F.conv2d expects 4-D tensors: (batch, channels, height, width)
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))
output = F.conv2d(input, kernel, stride=1)
print(output)

Output:
tensor([[[[10, 12, 12],
          [18, 16, 16],
          [13,  9,  3]]]])
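To see where these numbers come from, here is a plain-Python sketch of the same sliding-window computation for a single channel. Like F.conv2d, it does not flip the kernel (strictly a cross-correlation). Each output cell is the sum of the element-wise products of the kernel with the window under it, and the output size is (H + 2 * padding - k) // stride + 1. The function name conv2d here is illustrative, not a library API.

```python
def conv2d(inp, kernel, stride=1, padding=0):
    """Single-channel 2-D cross-correlation on nested lists (illustrative sketch)."""
    if padding > 0:
        # Surround the input with `padding` rows/columns of zeros
        width = len(inp[0]) + 2 * padding
        inp = ([[0] * width for _ in range(padding)]
               + [[0] * padding + row + [0] * padding for row in inp]
               + [[0] * width for _ in range(padding)])
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(inp) - kh) // stride + 1
    out_w = (len(inp[0]) - kw) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel with the window whose top-left corner is (i*stride, j*stride)
            s = 0
            for a in range(kh):
                for b in range(kw):
                    s += inp[i * stride + a][j * stride + b] * kernel[a][b]
            row.append(s)
        out.append(row)
    return out


inp = [[1, 2, 0, 3, 1],
       [0, 1, 2, 3, 1],
       [1, 2, 1, 0, 0],
       [5, 2, 3, 1, 1],
       [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]

print(conv2d(inp, kernel, stride=1))         # [[10, 12, 12], [18, 16, 16], [13, 9, 3]]
print(conv2d(inp, kernel, stride=2))         # [[10, 12], [13, 3]]
print(len(conv2d(inp, kernel, padding=1)))   # 5: padding=1 keeps the 5 x 5 size
```

For example, the top-left 10 is 1*1 + 2*2 + 0*1 + 0*0 + 1*1 + 2*0 + 1*2 + 2*1 + 1*0.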

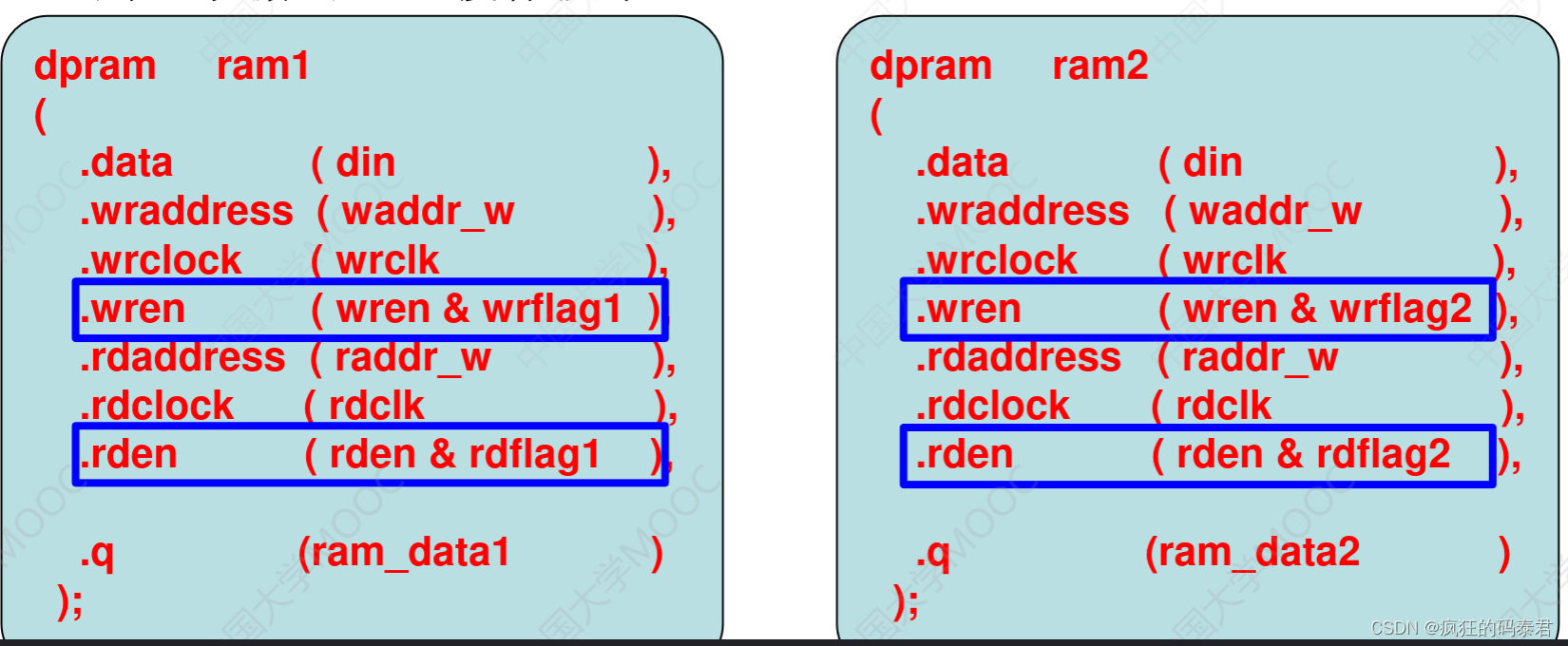

Neural networks: convolutional layers

The number of output channels equals the number of convolution kernels (out_channels); it does not depend on the kernel size.

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Tudui(nn.Module):
    def __init__(self) -> None:
        super().__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x


tudui = Tudui()
writer = SummaryWriter("dataloader")
step = 0
for data in dataloader:
    imgs, targets = data
    output = tudui(imgs)
    print(imgs.shape)    # torch.Size([64, 3, 32, 32]) for a full batch
    print(output.shape)  # torch.Size([64, 6, 30, 30]) for a full batch
    writer.add_images("input", imgs, step)
    # TensorBoard cannot display 6-channel images, so fold them into 3-channel ones:
    # [64, 6, 30, 30] -> [128, 3, 30, 30]; -1 lets reshape infer the batch dimension
    output = torch.reshape(output, (-1, 3, 30, 30))
    writer.add_images("output", output, step)
    step = step + 1
writer.close()

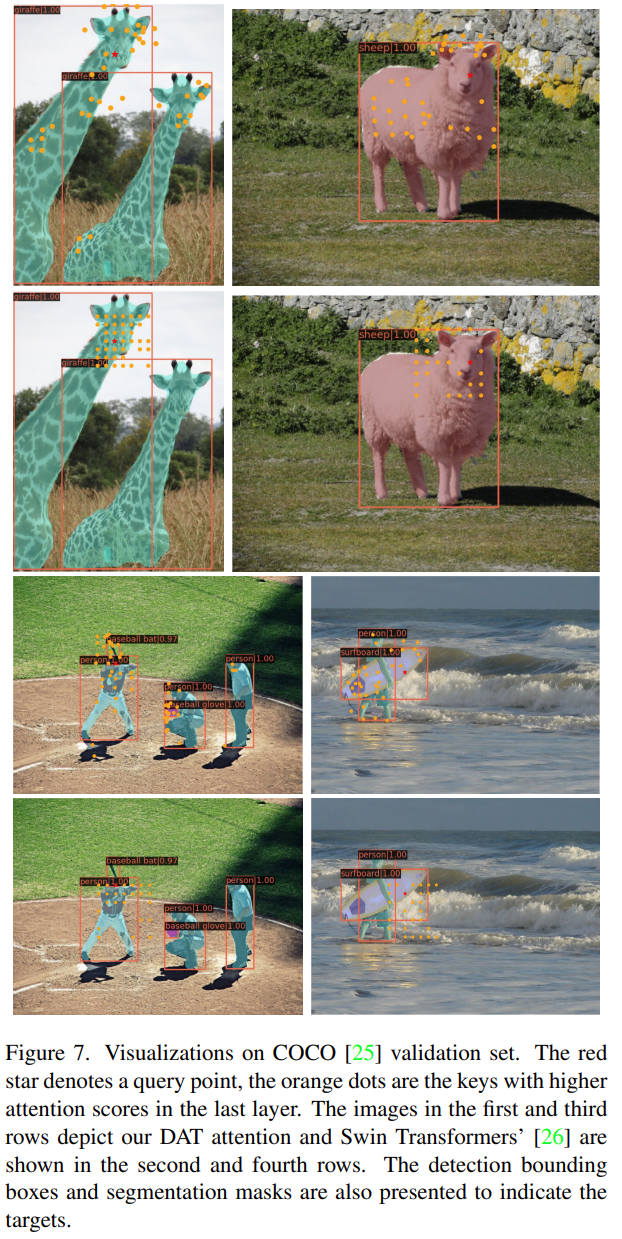
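A quick way to confirm that out_channels, not kernel_size, sets the channel count: compare two Conv2d layers that differ only in kernel size. Each output channel has its own kernel spanning all input channels, so the weight has shape (out_channels, in_channels, k, k). The ones tensor below is a hypothetical CIFAR10-shaped batch.

```python
import torch
from torch.nn import Conv2d

x = torch.ones((64, 3, 32, 32))  # hypothetical batch shaped like CIFAR10

conv3 = Conv2d(in_channels=3, out_channels=6, kernel_size=3)
conv5 = Conv2d(in_channels=3, out_channels=6, kernel_size=5)

# One (3, k, k) kernel per output channel
print(tuple(conv3.weight.shape), tuple(conv5.weight.shape))  # (6, 3, 3, 3) (6, 3, 5, 5)

# Both give 6 channels; only the spatial size changes: (32 + 2*0 - k) // 1 + 1
print(tuple(conv3(x).shape), tuple(conv5(x).shape))  # (64, 6, 30, 30) (64, 6, 28, 28)
```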

Neural networks: non-linear activations

Reference: "On non-linear activation functions in neural networks: the ReLU function" (zhihu.com)

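In a multi-layer neural network, the output of one layer's neurons is passed through a function before it becomes the input to the next layer; that function is the activation function.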

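Non-linear activation functions are introduced so that the network can fit non-linear relationships, which makes the model more expressive. Without them, a stack of linear layers is equivalent to a single linear layer.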
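A short sketch of two common activations, nn.ReLU (max(0, x)) and nn.Sigmoid (1 / (1 + e^-x)), applied to a hypothetical 2 x 2 tensor. As a contrast, it also checks that two bias-free linear layers with nothing in between reduce to a single matrix product, which is why the non-linearity is needed.

```python
import torch
from torch import nn

x = torch.tensor([[1.0, -0.5],
                  [-1.0, 3.0]])

relu = nn.ReLU()        # negatives become 0, positives pass through
sigmoid = nn.Sigmoid()  # squashes any value into (0, 1)

print(relu(x).tolist())                       # [[1.0, 0.0], [0.0, 3.0]]
print(sigmoid(torch.tensor([0.0])).tolist())  # [0.5]

# Contrast: two linear layers without an activation collapse into one matrix
lin1 = nn.Linear(2, 3, bias=False)
lin2 = nn.Linear(3, 2, bias=False)
merged = lin2.weight @ lin1.weight  # shape (2, 2)
collapsed = torch.allclose(lin2(lin1(x)), x @ merged.T, atol=1e-6)
print(collapsed)  # True
```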

Neural networks: linear layers

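# Neural networks: linear layers
import torch
import torchvision
from torch import nn
from torch.nn import Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10(root="./dataset", train=True, transform=torchvision.transforms.ToTensor(), download=True)
# drop_last=True: the last batch of the 50,000 training images holds only 16 samples,
# which would not flatten to the 196608 inputs that linear1 expects
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)


class Tudui(nn.Module):
    def __init__(self) -> None:
        super().__init__()
        self.linear1 = Linear(196608, 10)  # 196608 = 64 * 3 * 32 * 32

    def forward(self, x):
        output = self.linear1(x)
        return output


tudui = Tudui()
for data in dataloader:
    imgs, targets = data
    print(imgs.shape)             # torch.Size([64, 3, 32, 32])
    output = torch.flatten(imgs)  # flattens the whole batch into one vector
    print(output.shape)           # torch.Size([196608])
    output = tudui(output)
    print(output.shape)           # torch.Size([10])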
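torch.flatten(imgs) with no extra arguments merges the batch dimension too, which is why the layer above needs 196608 inputs. Keeping the batch dimension (start_dim=1, which is also nn.Flatten's default) gives one 3072-long vector per image instead. A sketch on a hypothetical all-ones batch:

```python
import torch
from torch import nn

imgs = torch.ones((64, 3, 32, 32))  # hypothetical batch shaped like CIFAR10

whole = torch.flatten(imgs)                    # batch dim included: one long vector
per_sample = torch.flatten(imgs, start_dim=1)  # batch dim kept: (64, 3 * 32 * 32)
print(tuple(whole.shape), tuple(per_sample.shape))  # (196608,) (64, 3072)

# nn.Flatten() defaults to start_dim=1, matching the per-sample version
print(tuple(nn.Flatten()(imgs).shape))  # (64, 3072)

# A per-sample linear layer then maps every image to 10 scores
linear = nn.Linear(3 * 32 * 32, 10)
print(tuple(linear(per_sample).shape))  # (64, 10)
```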

Neural networks: building a small model in practice

# Build the network model
import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.tensorboard import SummaryWriter


class Tudui(nn.Module):
    def __init__(self) -> None:
        super().__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),  # 1024 = 64 channels * 4 * 4
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


tudui = Tudui()
print(tudui)
input = torch.ones((64, 3, 32, 32))
output = tudui(input)
print(output.shape)  # torch.Size([64, 10])

writer = SummaryWriter("logs_seq")
writer.add_graph(tudui, input)  # view the model graph in TensorBoard
writer.close()
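Where does Linear(1024, 64) come from? Iterating over a Sequential lets you trace the shape after every layer. The sketch below rebuilds the same stack as a standalone Sequential (the variable name model is illustrative) and feeds it a dummy batch:

```python
import torch
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential

model = Sequential(
    Conv2d(3, 32, 5, padding=2), MaxPool2d(2),
    Conv2d(32, 32, 5, padding=2), MaxPool2d(2),
    Conv2d(32, 64, 5, padding=2), MaxPool2d(2),
    Flatten(), Linear(1024, 64), Linear(64, 10),
)

x = torch.ones((64, 3, 32, 32))
shapes = []
for layer in model:  # a Sequential yields its layers in order
    x = layer(x)
    shapes.append(tuple(x.shape))
    print(type(layer).__name__, tuple(x.shape))
```

The 5 x 5 convolutions with padding=2 keep the spatial size, and each MaxPool2d(2) halves it (32 -> 16 -> 8 -> 4), so Flatten produces 64 * 4 * 4 = 1024 features per image.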

Summary

import time

import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

start_time = time.time()

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10(root="./dataset", train=True, transform=torchvision.transforms.ToTensor(), download=True)
test_data = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)

# Dataset lengths, printed with str.format
train_data_size = len(train_data)
test_data_size = len(test_data)
print("Training set length: {}".format(train_data_size))
print("Test set length: {}".format(test_data_size))

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)


# Build the neural network
class Tudui(nn.Module):
    def __init__(self) -> None:
        super().__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        return self.model(x)


if __name__ == '__main__':
    # Sanity check: 64 images in, 64 x 10 class scores out
    tudui = Tudui()
    input = torch.ones((64, 3, 32, 32))
    output = tudui(input)
    print(output.shape)

# Create the network model (on the GPU if one is available)
tudui = Tudui()
if torch.cuda.is_available():
    tudui = tudui.cuda()

# Loss function
loss_fn = nn.CrossEntropyLoss()
if torch.cuda.is_available():
    loss_fn = loss_fn.cuda()

# Optimizer
learning_rate = 0.01
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# Training settings
total_train_step = 0  # number of training steps so far
total_test_step = 0   # number of evaluation rounds so far
epoch = 10            # number of training epochs

# Add TensorBoard
writer = SummaryWriter("P28_logs_train")
for i in range(epoch):
    print("----- Epoch {} training starts -----".format(i + 1))

    # Training phase
    tudui.train()
    for data in train_dataloader:
        imgs, targets = data
        if torch.cuda.is_available():
            imgs = imgs.cuda()
            targets = targets.cuda()
        outputs = tudui(imgs)
        loss = loss_fn(outputs, targets)
        # Let the optimizer update the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            end_time = time.time()
            print(end_time - start_time)  # seconds elapsed since the start
            print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Evaluation phase
    tudui.eval()
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            if torch.cuda.is_available():
                imgs = imgs.cuda()
                targets = targets.cuda()
            outputs = tudui(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
            # Number of correct predictions in this batch
            accuracy = (outputs.argmax(1) == targets).sum().item()
            total_accuracy = total_accuracy + accuracy
    print("Total loss on the test set: {}".format(total_test_loss))
    print("Accuracy on the test set: {}".format(total_accuracy / test_data_size))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", total_accuracy / test_data_size, total_test_step)
    total_test_step = total_test_step + 1

    torch.save(tudui, "tudui_{}.pth".format(i))
    print("Model saved")
writer.close()
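The accuracy bookkeeping in the evaluation loop relies on argmax and an element-wise comparison. Here is a worked example on a hypothetical batch of 2 samples and 2 classes. argmax(1) picks the highest-scoring class in each row, and summing the boolean matches counts the correct predictions; .max() would only report whether at least one prediction was right.

```python
import torch

# Hypothetical scores for 2 samples x 2 classes, and the true labels
outputs = torch.tensor([[0.1, 0.2],
                        [0.3, 0.4]])
targets = torch.tensor([0, 1])

preds = outputs.argmax(1)     # highest score per row -> classes [1, 1]
correct = preds == targets    # [False, True]
print(preds.tolist(), correct.tolist())     # [1, 1] [False, True]
print(correct.sum().item())                 # 1: number of correct predictions
print(correct.sum().item() / len(targets))  # 0.5: accuracy on this batch
print(correct.max().item())                 # True: only says "at least one was right"
```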