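Introduction

In an age of information overload, timely financial news matters to investors and finance professionals. Collecting articles by browsing web pages by hand is slow and tedious. To address this, this article shows how to write a Python crawler that automatically collects the finance articles published on NetEase Finance (网易财经, money.163.com).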

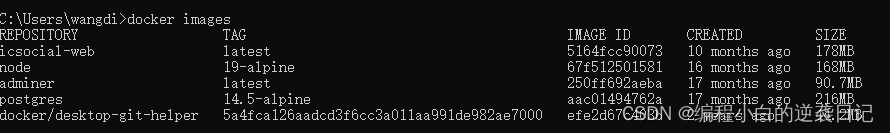

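1. Crawler overview

The crawler is written in Python. It collects the finance articles on NetEase Finance and saves the results as JSON files. Its main steps are:

- Set the request headers so that requests look like they come from a browser

- Define a crawl function that requests the URL for each article category

- Parse the responses and extract each article's title, link, and other fields

- Save the results as JSON files

- Fetch the article bodies concurrently with multiple threads and save them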
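The concurrent step at the end of this list can be sketched with the standard library alone. This is a minimal sketch, not the crawler itself: `fetch_article` is a hypothetical stand-in that makes no network request, and `fetch_all` is a name chosen here for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_article(record):
    """Hypothetical stand-in for the download step; makes no network request."""
    title, url = record
    return {"title": title, "url": url, "content": f"(body of {url})"}


def fetch_all(records, max_workers=4):
    """Submit one task per record and collect results in submission order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(fetch_article, r) for r in records]
        # Calling result() in list order keeps the output aligned with the input,
        # even if the tasks finish in a different order.
        return [f.result() for f in futures]


if __name__ == "__main__":
    demo = [("A", "https://example.com/a"), ("B", "https://example.com/b")]
    for row in fetch_all(demo):
        print(row["title"], row["url"])
```

Collecting the futures in a list and reading them back in order is a simple way to keep results matched to their inputs; `as_completed` would be an alternative when order does not matter.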

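2. Page analysis and URL discovery

Before crawling NetEase Finance, we need to analyze the page structure and find the URLs to request. The analysis shows that the finance articles fall into five categories: stocks, business, funds, real estate, and wealth management. Each category has its own feed URL, which returns a JavaScript response wrapped in a `data_callback` callback, and we crawl the articles through these URLs: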

    base_url = [
        'https://money.163.com/special/00259BVP/news_flow_index.js?callback=data_callback',
        'https://money.163.com/special/00259BVP/news_flow_biz.js?callback=data_callback',
        'https://money.163.com/special/00259BVP/news_flow_fund.js?callback=data_callback',
        'https://money.163.com/special/00259BVP/news_flow_house.js?callback=data_callback',
        'https://money.163.com/special/00259BVP/news_flow_licai.js?callback=data_callback',
    ]
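The `get_url` function in section 3 requests later pages of a feed by inserting a two-digit page number before `.js` (for example `_02`), and stops at the first 404. The helper below isolates that rule as a small sketch; `page_url` and `SUFFIX` are names introduced here for illustration and do not appear in the original code.

```python
SUFFIX = ".js?callback=data_callback"


def page_url(first_page_url, num):
    """Return the URL of page `num` (1-based) of a category feed."""
    if num == 1:
        return first_page_url
    stem = first_page_url.replace(SUFFIX, "")
    # Pages 2-9 are zero-padded (_02 ... _09); 10 and above are used as-is.
    return f"{stem}_{num:02d}{SUFFIX}"


if __name__ == "__main__":
    first = "https://money.163.com/special/00259BVP/news_flow_index.js?callback=data_callback"
    for n in (1, 2, 10):
        print(page_url(first, n))
```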

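3. Crawler implementation

We send HTTP requests with the requests library. The feed responses are picked apart with regular expressions, and the article pages are parsed with BeautifulSoup. The main code is as follows: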

    import requests
    import re
    from bs4 import BeautifulSoup
    from tqdm import tqdm
    import os
    import bag
    from concurrent.futures import ThreadPoolExecutor

    # Set the request headers so that requests look like they come from a browser
    session = requests.session()
    session.headers['User-Agent'] = r'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.0.0 Safari/537.36'
    session.headers['Referer'] = r'https://money.163.com/'
    session.headers['Accept-Language'] = 'zh-CN,zh;q=0.9'


    # Crawl function: walk every page of one category feed
    def get_url(url, kind):
        num = 1
        result = []
        while True:
            # Page 1 is the base URL; later pages carry a suffix such as _02 or _10
            if num == 1:
                resp = session.get(url)
            else:
                if num < 10:
                    resp = session.get(url.replace('.js?callback=data_callback', '') + f'_0{num}' + '.js?callback=data_callback')
                else:
                    resp = session.get(url.replace('.js?callback=data_callback', '') + f'_{num}' + '.js?callback=data_callback')
            # A 404 means there are no more pages
            if resp.status_code == 404:
                break
            num += 1
            title = re.findall(r'"title":"(.*?)"', resp.text, re.S)
            docurl = re.findall(r'"docurl":"(.*?)"', resp.text, re.S)
            label = re.findall('"label":"(.*?)"', resp.text, re.S)
            keyword = re.findall(r'"keywords":\[(.*?)]', resp.text, re.S)
            # Join the keyword names of each article into one comma-separated string
            mid = []
            for k in keyword:
                mid1 = []
                for j in re.findall(r'"keyname":"(.*?)"', str(k), re.S):
                    mid1.append(j.strip())
                mid.append(','.join(mid1))
            # One row per article: [title, docurl, label, kind, keywords]
            for i in range(len(title)):
                result.append([title[i], docurl[i], label[i], kind, mid[i]])
        return result


    # Fetch the body of one article
    def get_data(ls):
        resp = session.get(ls[1])
        resp.encoding = 'utf8'
        resp.close()
        html = BeautifulSoup(resp.text, 'lxml')
        content = []
        p = re.compile(r'<p.*?>(.*?)</p>', re.S)
        contents = html.find_all('div', class_='post_body')
        # Take each <p> inside the article body and strip any remaining tags
        for info in re.findall(p, str(contents)):
            content.append(re.sub('<.*?>', '', info))
        # Returned row: [keywords, title, content, kind, docurl]
        return [ls[-1], ls[0], '\n'.join(content), ls[-2], ls[1]]


    # Main function
    def main():
        base_url = [
            'https://money.163.com/special/00259BVP/news_flow_index.js?callback=data_callback',
            'https://money.163.com/special/00259BVP/news_flow_biz.js?callback=data_callback',
            'https://money.163.com/special/00259BVP/news_flow_fund.js?callback=data_callback',
            'https://money.163.com/special/00259BVP/news_flow_house.js?callback=data_callback',
            'https://money.163.com/special/00259BVP/news_flow_licai.js?callback=data_callback',
        ]
        # Category labels: stocks, business, funds, real estate, wealth management
        kind = ['股票', '商业', '基金', '房产', '理财']
        path = r'./财经(根数据).json'   # article metadata ("root data")
        save_path = r'./财经.json'      # article bodies
        if os.path.isfile(path):
            source_ls = bag.Bag.read_json(path)
        else:
            source_ls = []
        index = 0
        urls = []
        for url in base_url:
            result = get_url(url, kind[index])
            index += 1
            urls = urls + result
        # Keep only the articles whose docurl has not been saved before
        newly_added = []
        if len(source_ls) == 0:
            bag.Bag.save_json(urls, path)
            newly_added = urls
        else:
            flag = [i[1] for i in source_ls]
            for link in urls:
                if link[1] in flag:
                    pass
                else:
                    newly_added.append(link)
        if len(newly_added) == 0:
            print('No new data')
        else:
            bag.Bag.save_json(newly_added + source_ls, path)
            if os.path.isfile(save_path):
                data_result = bag.Bag.read_json(save_path)
            else:
                data_result = []
            # Download the new article bodies concurrently
            with ThreadPoolExecutor(max_workers=20) as t:
                tasks = []
                for url in tqdm(newly_added, desc='网易财经'):
                    tasks.append(t.submit(get_data, url))
                end = []
                for task in tqdm(tasks, desc='网易财经'):
                    end.append(task.result())
            bag.Bag.save_json(end + data_result, save_path)


    if __name__ == '__main__':
        main()
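`get_url` extracts `title`, `docurl`, `label`, and `keywords` with four independent regular expressions and then pairs the results by index, which can misalign or raise `IndexError` if one pattern matches more often than another. As a hedged alternative, the sketch below strips the `data_callback(...)` wrapper and decodes the payload with the standard `json` module. It assumes the payload inside the wrapper is strictly valid JSON, which has not been verified against the live feed; `parse_jsonp`, `to_rows`, and the sample string are illustrative and not part of the original code.

```python
import json


def parse_jsonp(text, callback="data_callback"):
    """Strip the `callback( ... )` wrapper and decode the JSON payload."""
    body = text.strip()
    prefix = callback + "("
    if not body.startswith(prefix):
        raise ValueError("response does not start with the expected callback")
    body = body[len(prefix):].rstrip(";").rstrip()
    if not body.endswith(")"):
        raise ValueError("response does not end with a closing parenthesis")
    return json.loads(body[:-1])


def to_rows(items, kind):
    """Build rows in the same layout as get_url: [title, docurl, label, kind, keywords]."""
    rows = []
    for item in items:
        names = [k.get("keyname", "").strip() for k in item.get("keywords", [])]
        rows.append([
            item.get("title", ""),
            item.get("docurl", ""),
            item.get("label", ""),
            kind,
            ",".join(names),
        ])
    return rows


if __name__ == "__main__":
    # Made-up sample that imitates the field names used by the regexes above.
    sample = ('data_callback([{"title":"T1","docurl":"https://example.com/a.html",'
              '"label":"other","keywords":[{"keyname":" A "},{"keyname":"B"}]}]);')
    print(to_rows(parse_jsonp(sample), "stocks"))
```

Because each article is decoded as one object, its fields cannot drift out of step with one another. If the real feed turns out not to be strict JSON, the regular-expression approach in the original code remains the fallback.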

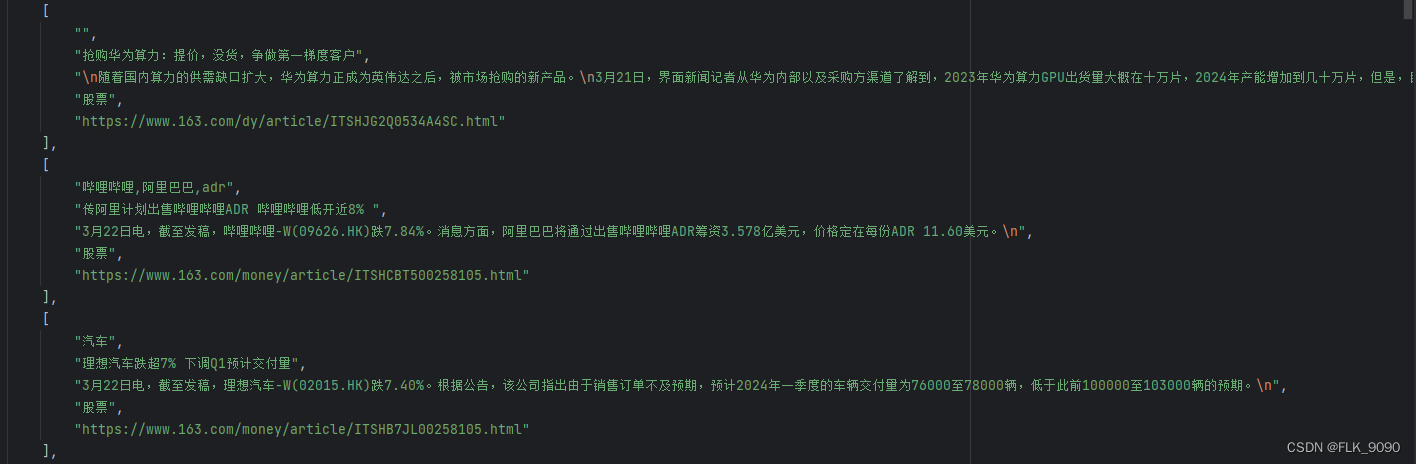

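4. Saving and viewing the results

The results are saved as JSON files so that they are easy to process and analyze later. The bag library is used to save and load the JSON files. The code that saves the results is excerpted from `main` below: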

    import os
    import bag

    # Save the results
    path = r'./财经(根数据).json'   # article metadata ("root data")
    save_path = r'./财经.json'      # article bodies
    if os.path.isfile(path):
        source_ls = bag.Bag.read_json(path)
    else:
        source_ls = []

    ...

    ...
    if len(newly_added) == 0:
        print('No new data')
    else:
        # Prepend the new metadata rows to the ones saved earlier
        bag.Bag.save_json(newly_added + source_ls, path)
        if os.path.isfile(save_path):
            data_result = bag.Bag.read_json(save_path)
        else:
            data_result = []
        # Download the new article bodies concurrently
        with ThreadPoolExecutor(max_workers=20) as t:
            tasks = []
            for url in tqdm(newly_added, desc='网易财经'):
                tasks.append(t.submit(get_data, url))
            end = []
            for task in tqdm(tasks, desc='网易财经'):
                end.append(task.result())
        bag.Bag.save_json(end + data_result, save_path)
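For readers who do not have the bag library installed, the same load, de-duplicate, and save cycle can be sketched with the standard `json` module. The functions below are stand-ins written for this illustration and are not the bag API. Note one deliberate difference: `merge_new` also drops duplicate URLs within a single batch, which the original loop does not do.

```python
import json
import os
import tempfile


def read_json(path):
    """Return the list stored at `path`, or an empty list if the file is missing."""
    if not os.path.isfile(path):
        return []
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def save_json(rows, path):
    """Write `rows` as UTF-8 JSON, keeping non-ASCII text readable."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(rows, fh, ensure_ascii=False, indent=2)


def merge_new(fetched, stored):
    """Return (new_rows, merged); a row is new if its URL (index 1) is unseen."""
    seen = {row[1] for row in stored}
    new_rows = []
    for row in fetched:
        if row[1] not in seen:
            seen.add(row[1])
            new_rows.append(row)
    # New rows go first, matching `newly_added + source_ls` in the article.
    return new_rows, new_rows + stored


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "meta.json")
        stored = read_json(path)  # empty on the first run
        fetched = [["t1", "https://example.com/1", "", "stocks", ""]]
        new_rows, merged = merge_new(fetched, stored)
        save_json(merged, path)
        print(len(new_rows), len(read_json(path)))
```

Using a set for the seen URLs keeps each membership check at constant time, which matters once the stored file grows to thousands of rows.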

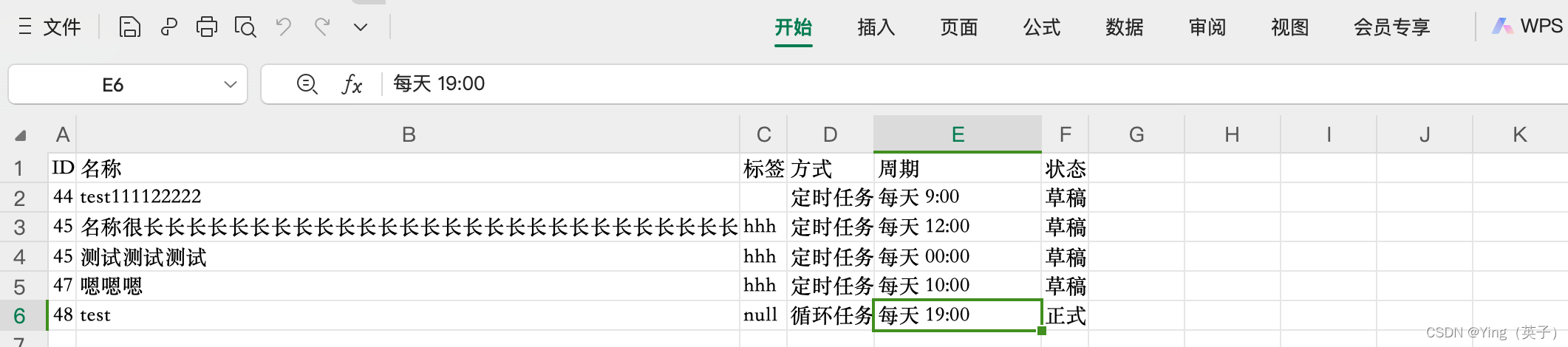

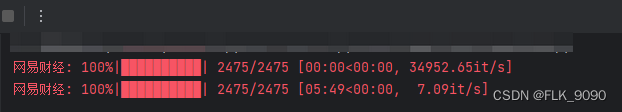

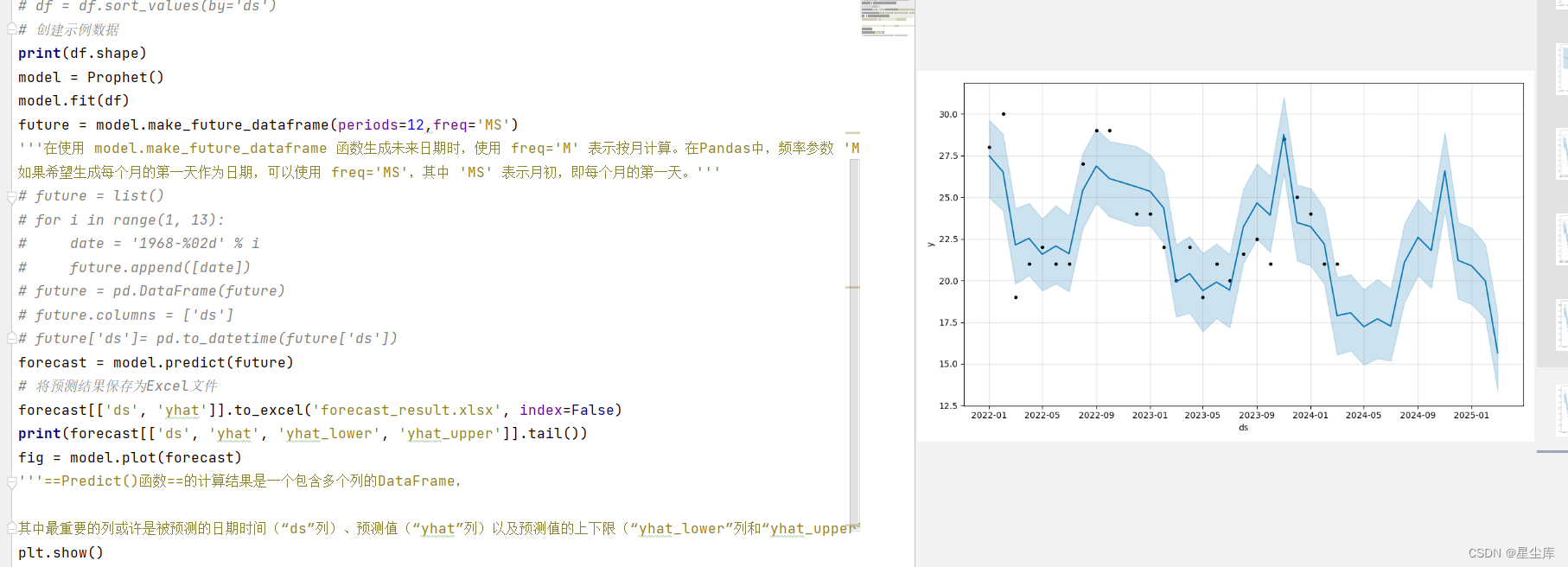

5. Results

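6. Summary

This article showed how to write a Python crawler for the finance articles on NetEase Finance. We analyzed the page structure to find the feed URLs, sent HTTP requests with requests, parsed the article pages with BeautifulSoup, and saved the results as JSON files. The crawler can help investors and finance professionals collect financial news quickly and work more efficiently.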

