Contents

- 4. Calling the API
- 4.1 Creating and configuring the C# project
- 4.2 Adding the inference code
- 4.3 Project demo
- 5. Summary

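4. Calling the API

4.1 Creating and configuring the C# project

First, create a simple C# project, then configure it.

Start by adding a reference to the TensorRT C# API project: as shown in the figure below, add the DLL file (TensorRtSharp.dll) generated by the C# project built earlier in this article.

Next, add OpenCvSharp, which can be installed through NuGet. Two packages are needed:

- OpenCvSharp4.Extensions
- OpenCvSharp4.Windows

Once the project is configured, its project file looks like this: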

    <Project Sdk="Microsoft.NET.Sdk">
      <PropertyGroup>
        <OutputType>Exe</OutputType>
        <TargetFramework>net6.0</TargetFramework>
        <RootNamespace>TensorRT_CSharp_API_demo</RootNamespace>
        <ImplicitUsings>enable</ImplicitUsings>
        <Nullable>enable</Nullable>
      </PropertyGroup>
      <ItemGroup>
        <PackageReference Include="OpenCvSharp4.Extensions" Version="4.9.0.20240103" />
        <PackageReference Include="OpenCvSharp4.Windows" Version="4.9.0.20240103" />
      </ItemGroup>
      <ItemGroup>
        <Reference Include="TensorRtSharp">
          <HintPath>E:\GitSpace\TensorRT-CSharp-API\src\TensorRtSharp\bin\Release\net6.0\TensorRtSharp.dll</HintPath>
        </Reference>
      </ItemGroup>
    </Project>

4.2 Adding the inference code

This section demonstrates a simple image classification project, using Yolov8-cls as the example:

    using System;
    using System.Collections.Generic;
    using System.Runtime.InteropServices;
    using OpenCvSharp;
    using OpenCvSharp.Dnn;
    // Assumed namespace (taken from the assembly name); adjust if Nvinfer or Dims live elsewhere in your build.
    using TensorRtSharp;

    internal class Program
    {
        static void Main(string[] args)
        {
            // Load the serialized engine and read the batch size from the input binding.
            Nvinfer predictor = new Nvinfer("E:\\Model\\yolov8\\yolov8s-cls_2.engine");
            Dims inputDims = predictor.GetBindingDimensions("images");
            int batchSize = inputDims.d[0];

            Mat image1 = Cv2.ImRead("E:\\ModelData\\image\\demo_4.jpg");
            Mat image2 = Cv2.ImRead("E:\\ModelData\\image\\demo_5.jpg");
            List<Mat> images = new List<Mat>() { image1, image2 };

            int imageLen = 3 * 224 * 224; // floats per image (3 channels, 224 x 224)
            for (int begImgNo = 0; begImgNo < images.Count; begImgNo += batchSize)
            {
                DateTime start = DateTime.Now;
                int endImgNo = Math.Min(images.Count, begImgNo + batchSize);
                int batchCount = endImgNo - begImgNo;
                // Size the buffer from the engine's batch dimension instead of hard-coding 2.
                float[] inputData = new float[batchSize * imageLen];
                for (int ino = begImgNo; ino < endImgNo; ino++)
                {
                    // Scale to [0, 1], resize to 224 x 224, swap B and R channels, no crop.
                    Mat input_mat = CvDnn.BlobFromImage(images[ino], 1.0 / 255.0, new OpenCvSharp.Size(224, 224), 0, true, false);
                    float[] data = new float[imageLen];
                    Marshal.Copy(input_mat.Ptr(0), data, 0, imageLen);
                    // The offset is relative to the start of this batch, not to the whole image list.
                    Array.Copy(data, 0, inputData, (ino - begImgNo) * imageLen, imageLen);
                }
                predictor.LoadInferenceData("images", inputData);
                DateTime end = DateTime.Now;
                Console.WriteLine("[ INFO ] Input image data processing time: " + (end - start).TotalMilliseconds + " ms.");

                predictor.infer(); // warm-up run, not timed
                start = DateTime.Now;
                predictor.infer();
                end = DateTime.Now;
                Console.WriteLine("[ INFO ] Model inference time: " + (end - start).TotalMilliseconds + " ms.");

                start = DateTime.Now;
                float[] outputData = predictor.GetInferenceResult("output0");
                for (int i = 0; i < batchCount; ++i)
                {
                    Console.WriteLine(string.Format("\n[ INFO ] Classification Top {0} result : \n", 10));
                    Console.WriteLine("[ INFO ] classid probability");
                    Console.WriteLine("[ INFO ] ------- -----------");
                    // Each image produces 1000 class scores.
                    float[] data = new float[1000];
                    Array.Copy(outputData, i * 1000, data, 0, 1000);
                    List<int> sortResult = Argsort(new List<float>(data));
                    for (int j = 0; j < 10; ++j)
                    {
                        string msg = "";
                        msg += ("index: " + sortResult[j] + "\t");
                        msg += ("score: " + data[sortResult[j]] + "\t");
                        Console.WriteLine("[ INFO ] " + msg);
                    }
                }
                end = DateTime.Now;
                Console.WriteLine("[ INFO ] Inference result processing time: " + (end - start).TotalMilliseconds + " ms.");
            }
        }

        // Returns the indices of the input values, ordered from highest value to lowest.
        public static List<int> Argsort(List<float> array)
        {
            int arrayLen = array.Count;
            // Pair each value with its index: item[0] is the value, item[1] is the index.
            List<float[]> newArray = new List<float[]> { };
            for (int i = 0; i < arrayLen; i++)
            {
                newArray.Add(new float[] { array[i], i });
            }
            newArray.Sort((a, b) => b[0].CompareTo(a[0]));
            List<int> arrayIndex = new List<int>();
            foreach (float[] item in newArray)
            {
                arrayIndex.Add((int)item[1]);
            }
            return arrayIndex;
        }
    }
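The Argsort helper above stores each index in a float next to its score. That works for 1000 classes, but an alternative is to sort integer indices directly by score. The following is a minimal sketch of that idea, not part of the TensorRT C# API; the class name TopK and the method name Indices are made up for this illustration.

```csharp
using System;
using System.Linq;

public static class TopK
{
    // Returns the indices of the k largest scores, highest score first.
    // Indices stay integers throughout, so nothing is round-tripped through float.
    public static int[] Indices(float[] scores, int k)
    {
        if (scores == null) throw new ArgumentNullException(nameof(scores));

        // Clamp k to the valid range [0, scores.Length].
        k = Math.Max(0, Math.Min(k, scores.Length));

        return Enumerable.Range(0, scores.Length)
                         .OrderByDescending(i => scores[i]) // stable: ties keep index order
                         .Take(k)
                         .ToArray();
    }
}
```

With this helper, the inner loop could call TopK.Indices(data, 10) in place of Argsort(new List<float>(data)) and read the ten returned indices in order.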

4.3 Project demo

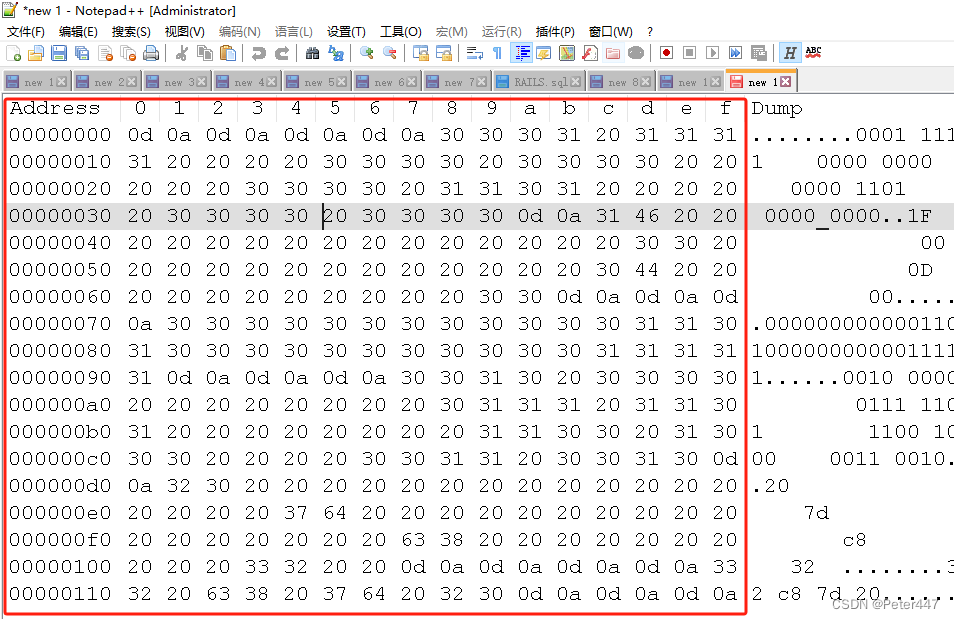

After configuring the project and writing the code, run it. The program prints the following output:

    [03/31/2024-22:27:44] [I] [TRT] Loaded engine size: 15 MiB
    [03/31/2024-22:27:44] [I] [TRT] [MemUsageChange] TensorRT-managed allocation in engine deserialization: CPU +0, GPU +12, now: CPU 0, GPU 12 (MiB)
    [03/31/2024-22:27:44] [I] [TRT] [MemUsageChange] TensorRT-managed allocation in IExecutionContext creation: CPU +0, GPU +4, now: CPU 0, GPU 16 (MiB)
    [03/31/2024-22:27:44] [W] [TRT] CUDA lazy loading is not enabled. Enabling it can significantly reduce device memory usage and speed up TensorRT initialization. See "Lazy Loading" section of CUDA documentation https://docs.nvidia.com/cuda/cuda-c-programming-guide/index.html#lazy-loading
    [ INFO ] Input image data processing time: 6.6193 ms.
    [ INFO ] Model inference time: 1.1434 ms.
    [ INFO ] Classification Top 10 result :
    [ INFO ] classid probability
    [ INFO ] ------- -----------
    [ INFO ] index: 386 score: 0.87328124
    [ INFO ] index: 385 score: 0.082506955
    [ INFO ] index: 101 score: 0.04416279
    [ INFO ] index: 51 score: 3.5818E-05
    [ INFO ] index: 48 score: 4.2115275E-06
    [ INFO ] index: 354 score: 3.5188648E-06
    [ INFO ] index: 474 score: 5.789438E-07
    [ INFO ] index: 490 score: 5.655325E-07
    [ INFO ] index: 343 score: 5.1091644E-07
    [ INFO ] index: 340 score: 4.837259E-07
    [ INFO ] Classification Top 10 result :
    [ INFO ] classid probability
    [ INFO ] ------- -----------
    [ INFO ] index: 293 score: 0.89423335
    [ INFO ] index: 276 score: 0.052870292
    [ INFO ] index: 288 score: 0.021361532
    [ INFO ] index: 290 score: 0.009259541
    [ INFO ] index: 275 score: 0.0066174944
    [ INFO ] index: 355 score: 0.0025512716
    [ INFO ] index: 287 score: 0.0024535337
    [ INFO ] index: 210 score: 0.00083151844
    [ INFO ] index: 184 score: 0.0006893527
    [ INFO ] index: 272 score: 0.00054959994

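As the output above shows, model inference took only 1.1434 ms for the two-image batch in this run, so inference is very fast with TensorRT acceleration.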
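The 1.1434 ms figure comes from a single call timed with DateTime.Now, whose resolution can be coarse relative to an interval of about 1 ms. For a steadier number, one option is to time several calls with System.Diagnostics.Stopwatch after a few warm-up runs and report the mean. A minimal sketch follows; Bench and AverageMs are hypothetical names, and it assumes predictor.infer() returns only after the GPU work has finished.

```csharp
using System;
using System.Diagnostics;

public static class Bench
{
    // Runs `action` `warmup` times untimed, then `runs` times timed,
    // and returns the mean wall-clock time per timed run in milliseconds.
    public static double AverageMs(Action action, int warmup = 3, int runs = 20)
    {
        if (action == null) throw new ArgumentNullException(nameof(action));
        if (runs <= 0) throw new ArgumentOutOfRangeException(nameof(runs));

        // Warm-up calls absorb one-time costs such as JIT compilation.
        for (int i = 0; i < warmup; i++) action();

        Stopwatch sw = Stopwatch.StartNew();
        for (int i = 0; i < runs; i++) action();
        sw.Stop();

        return sw.Elapsed.TotalMilliseconds / runs;
    }
}
```

In the demo program this could be used as double ms = Bench.AverageMs(() => predictor.infer()); after the input data has been loaded.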

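5. Summary

In this project we developed version 2.0 of the TensorRT C# API and re-wrapped the inference interface. Using the deployment workflow of a classification model, we showed how to use the TensorRT C# API so that you can get started quickly.

To make things easier for developers, companion demo projects are also provided. They are based on Yolov8 and cover object detection, segmentation, human keypoint detection, image classification, and rotated object detection. Due to time constraints, the accompanying technical documentation has not been written yet, so the source code is provided first for you to use as needed: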

- Yolov8 Det object detection source code:

https://github.com/guojin-yan/TensorRT-CSharp-API-Samples/blob/master/model_samples/yolov8_custom/Yolov8Det.cs

- Yolov8 Seg segmentation source code:

https://github.com/guojin-yan/TensorRT-CSharp-API-Samples/blob/master/model_samples/yolov8_custom/Yolov8Seg.cs

- Yolov8 Pose human keypoint detection source code:

https://github.com/guojin-yan/TensorRT-CSharp-API-Samples/blob/master/model_samples/yolov8_custom/Yolov8Pose.cs

- Yolov8 Cls image classification source code:

https://github.com/guojin-yan/TensorRT-CSharp-API-Samples/blob/master/model_samples/yolov8_custom/Yolov8Cls.cs

- Yolov8 Obb rotated object detection source code:

https://github.com/guojin-yan/TensorRT-CSharp-API-Samples/blob/master/model_samples/yolov8_custom/Yolov8Obb.cs

The samples developed in this project were also timed. The times below come from a single run of each program. The test environment was:

- CPU: i7-165G7
- CUDA version: 12.2
- cuDNN: 8.9.3
- TensorRT: 8.6.1.6

| Model | Batch | Preprocessing | Inference | Postprocessing |
|---|---|---|---|---|
| Yolov8s-Det | 2 | 25 ms | 7 ms | 20 ms |
| Yolov8s-Obb | 2 | 49 ms | 15 ms | 32 ms |
| Yolov8s-Seg | 2 | 23 ms | 8 ms | 128 ms |
| Yolov8s-Pose | 2 | 27 ms | 7 ms | 20 ms |
| Yolov8s-Cls | 2 | 16 ms | 1 ms | 3 ms |
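To compare the rows more easily, the three stage times can be summed into an end-to-end time per batch and per image. The sketch below does that arithmetic on the numbers in the table; the StageTimes class and its member names are hypothetical and exist only for this illustration.

```csharp
using System;
using System.Collections.Generic;

public static class StageTimes
{
    // One table row: stage times in milliseconds for one batch, measured in a single run.
    public record Row(string Model, int Batch, double PreMs, double InferMs, double PostMs)
    {
        public double TotalMs => PreMs + InferMs + PostMs; // end-to-end time per batch
        public double PerImageMs => TotalMs / Batch;       // end-to-end time per image
        public double InferShare => InferMs / TotalMs;     // fraction spent in inference
    }

    // Values copied from the table above.
    public static readonly IReadOnlyList<Row> Rows = new[]
    {
        new Row("Yolov8s-Det", 2, 25, 7, 20),
        new Row("Yolov8s-Obb", 2, 49, 15, 32),
        new Row("Yolov8s-Seg", 2, 23, 8, 128),
        new Row("Yolov8s-Pose", 2, 27, 7, 20),
        new Row("Yolov8s-Cls", 2, 16, 1, 3),
    };

    // Formats one row as a single summary line.
    public static string Summarize(Row r) =>
        $"{r.Model}: total {r.TotalMs} ms, {r.PerImageMs} ms/image, inference share {r.InferShare:P0}";
}
```

For example, Yolov8s-Det totals 25 + 7 + 20 = 52 ms per batch (26 ms per image), and Yolov8s-Cls totals 16 + 1 + 3 = 20 ms per batch (10 ms per image), so in these single-run measurements inference is a small part of the end-to-end time.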

Finally, if you run into any problems while using the project, feel free to contact me.