[LLM] CPU Inference for Large Models with llama.cpp

- llama.cpp

- Installing llama.cpp

- Memory/Disk Requirements

- Quantization

- Testing inference

- Downloading the model

- Testing

- References

llama.cpp

- Description

The main goal of llama.cpp is to enable LLM inference with minimal setup and state-of-the-art performance on a wide variety of hardware - locally and in the cloud.

- Plain C/C++ implementation without any dependencies

- Apple silicon is a first-class citizen - optimized via ARM NEON, Accelerate and Metal frameworks

- AVX, AVX2 and AVX512 support for x86 architectures

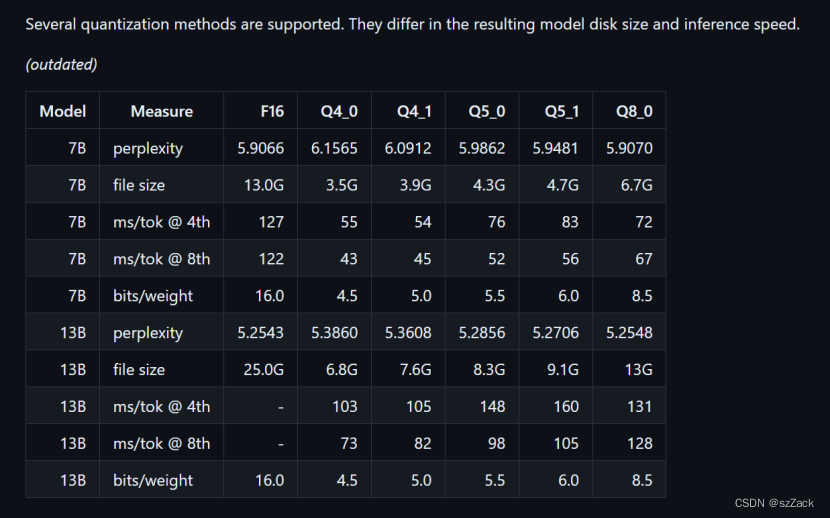

- 1.5-bit, 2-bit, 3-bit, 4-bit, 5-bit, 6-bit, and 8-bit integer quantization for faster inference and reduced memory use

- Custom CUDA kernels for running LLMs on NVIDIA GPUs (support for AMD GPUs via HIP)

- Vulkan, SYCL, and (partial) OpenCL backend support

- CPU+GPU hybrid inference to partially accelerate models larger than the total VRAM capacity
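To get a feel for how the quantization bit width affects memory use, here is a rough back-of-the-envelope sketch in Python. It assumes weight storage is simply parameters × bits per weight / 8, which ignores the KV cache and runtime buffers, so it is only a lower bound on what a run needs. The function name `weight_size_gib` and the 7-billion-parameter example are illustrative assumptions, not part of llama.cpp.

```python
GIB = 1024 ** 3  # bytes per GiB


def weight_size_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GiB for a parameter count and bit width."""
    return n_params * bits_per_weight / 8 / GIB


if __name__ == "__main__":
    n_params = 7e9  # hypothetical 7B-parameter model, for illustration only
    for bits in (16, 8, 6, 5, 4, 3, 2):
        print(f"{bits:>2}-bit: {weight_size_gib(n_params, bits):6.2f} GiB")
```

As a sanity check, the load log later in this post reports 1.84 B parameters at 5.28 bits per weight and a model size of 1.13 GiB; `weight_size_gib(1.84e9, 5.28)` comes out at roughly 1.13, which is consistent with that line.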

- Official repository: https://github.com/ggerganov/llama.cpp

- Supported platforms:

Mac OS

Linux

Windows (via CMake)

Docker

FreeBSD

- Supported models:

- Typically finetunes of the base models below are supported as well.

LLaMA 🦙

LLaMA 2 🦙🦙

Mistral 7B

Mixtral MoE

Falcon

Chinese LLaMA / Alpaca and Chinese LLaMA-2 / Alpaca-2

Vigogne (French)

Koala

Baichuan 1 & 2 + derivations

Aquila 1 & 2

Starcoder models

Refact

Persimmon 8B

MPT

Bloom

Yi models

StableLM models

Deepseek models

Qwen models

PLaMo-13B

Phi models

GPT-2

Orion 14B

InternLM2

CodeShell

Gemma

Mamba

Xverse

Command-R

- Multimodal models:

LLaVA 1.5 models, LLaVA 1.6 models

BakLLaVA

Obsidian

ShareGPT4V

MobileVLM 1.7B/3B models

Yi-VL

Installing llama.cpp

- Download the code

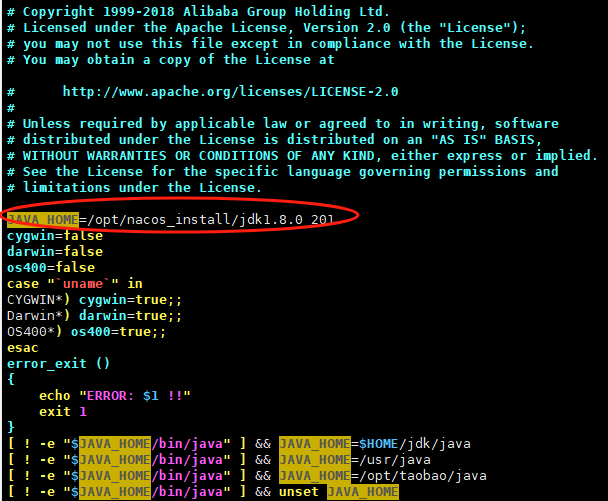

git clone https://github.com/ggerganov/llama.cpp

- Build

On Linux or MacOS:

cd llama.cpp

make

For other build methods, see the official repository: https://github.com/ggerganov/llama.cpp

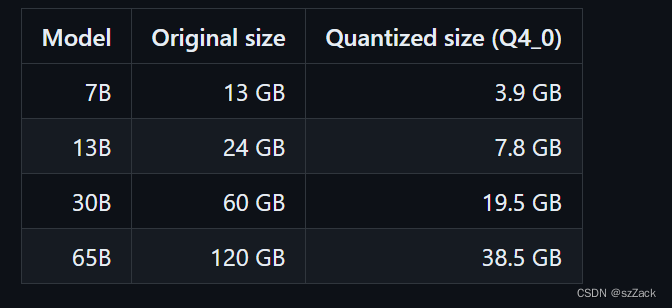

Memory/Disk Requirements

Quantization

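Testing inference

Downloading the model

For faster model downloads, see: "Download LLM models from Hugging Face at high speed without a VPN".

Here I download qwen/Qwen1.5-1.8B-Chat-GGUF for testing: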

huggingface-cli download --resume-download qwen/Qwen1.5-1.8B-Chat-GGUF --local-dir qwen/Qwen1.5-1.8B-Chat-GGUF
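The same download can also be scripted from Python. The following is a minimal sketch, assuming the `huggingface_hub` package is installed (it is the package that provides `huggingface-cli`). The helper names `build_download_args` and `download_model` are hypothetical, and restricting the download to matching files via `allow_patterns` is my own addition rather than something the command above does.

```python
def build_download_args(repo_id: str, local_dir: str, pattern: str = "*.gguf") -> dict:
    """Collect keyword arguments for huggingface_hub.snapshot_download."""
    return {
        "repo_id": repo_id,
        "local_dir": local_dir,
        # Only fetch files whose names match this glob pattern.
        "allow_patterns": [pattern],
    }


def download_model(repo_id: str, local_dir: str, pattern: str = "*.gguf") -> str:
    """Download the matching files and return the local directory path."""
    # Imported lazily so build_download_args works without the package installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(**build_download_args(repo_id, local_dir, pattern))


# Example call (needs network access), fetching only the Q4_K_M file:
# download_model("qwen/Qwen1.5-1.8B-Chat-GGUF",
#                "qwen/Qwen1.5-1.8B-Chat-GGUF",
#                pattern="*q4_k_m.gguf")
```

Filtering by pattern avoids pulling every quantization variant in the repository when only one is needed for the test.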

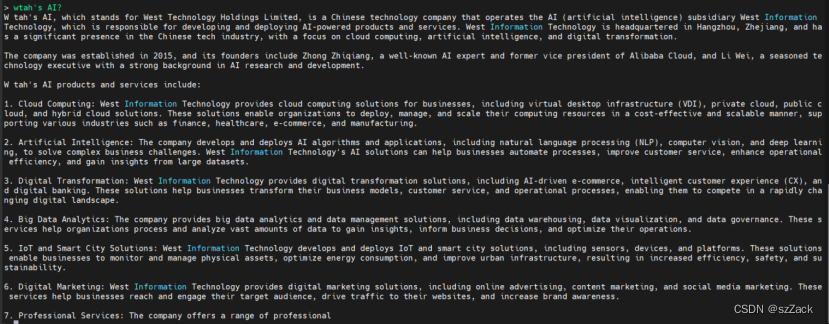

Testing

cd ./llama.cpp

./main -m /your/path/qwen/Qwen1.5-1.8B-Chat-GGUF/qwen1_5-1_8b-chat-q4_k_m.gguf -n 512 --color -i -cml -f ./prompts/chat-with-qwen.txt

To change the prompt, edit ./prompts/chat-with-qwen.txt.
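The `-cml` flag runs the model in ChatML mode, which is why the log in this post shows `<|im_start|>user` as the reverse prompt and `<|im_end|>` as the EOS token. To illustrate how a system prompt and a user turn are laid out in that format, here is a small Python sketch. The function name `chatml_prompt` is hypothetical, and this only illustrates the text layout; it is not llama.cpp's internal code.

```python
def chatml_prompt(messages: list[dict]) -> str:
    """Render a list of {'role', 'content'} messages as a ChatML prompt string."""
    parts = [
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n"
        for m in messages
    ]
    # Leave an open assistant turn for the model to complete.
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


if __name__ == "__main__":
    print(chatml_prompt([
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "What's AI?"},
    ]))
```

In interactive mode you do not type these markers yourself; the sketch is only meant to show where the system text from the prompt file ends up.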

Output when loading the model:

llama.cpp# ./main -m /mnt/data/llm/Qwen1.5-1.8B-Chat-GGUF/qwen1_5-1_8b-chat-q4_k_m.gguf -n 512 --color -i -cml -f ./prompts/chat-with-qwen.txt

Log start

main: build = 2527 (ad3a0505)

main: built with cc (Ubuntu 11.4.0-1ubuntu1~22.04) 11.4.0 for x86_64-linux-gnu

main: seed = 1711760850

llama_model_loader: loaded meta data with 21 key-value pairs and 291 tensors from /mnt/data/llm/Qwen1.5-1.8B-Chat-GGUF/qwen1_5-1_8b-chat-q4_k_m.gguf (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = qwen2

llama_model_loader: - kv 1: general.name str = Qwen1.5-1.8B-Chat-AWQ-fp16

llama_model_loader: - kv 2: qwen2.block_count u32 = 24

llama_model_loader: - kv 3: qwen2.context_length u32 = 32768

llama_model_loader: - kv 4: qwen2.embedding_length u32 = 2048

llama_model_loader: - kv 5: qwen2.feed_forward_length u32 = 5504

llama_model_loader: - kv 6: qwen2.attention.head_count u32 = 16

llama_model_loader: - kv 7: qwen2.attention.head_count_kv u32 = 16

llama_model_loader: - kv 8: qwen2.attention.layer_norm_rms_epsilon f32 = 0.000001

llama_model_loader: - kv 9: qwen2.rope.freq_base f32 = 1000000.000000

llama_model_loader: - kv 10: qwen2.use_parallel_residual bool = true

llama_model_loader: - kv 11: tokenizer.ggml.model str = gpt2

llama_model_loader: - kv 12: tokenizer.ggml.tokens arr[str,151936] = ["!", "\"", "#", "$", "%", "&", "'", ...

llama_model_loader: - kv 13: tokenizer.ggml.token_type arr[i32,151936] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

llama_model_loader: - kv 14: tokenizer.ggml.merges arr[str,151387] = ["Ġ Ġ", "ĠĠ ĠĠ", "i n", "Ġ t",...

llama_model_loader: - kv 15: tokenizer.ggml.eos_token_id u32 = 151645

llama_model_loader: - kv 16: tokenizer.ggml.padding_token_id u32 = 151643

llama_model_loader: - kv 17: tokenizer.ggml.bos_token_id u32 = 151643

llama_model_loader: - kv 18: tokenizer.chat_template str = {% for message in messages %}{{'<|im_...

llama_model_loader: - kv 19: general.quantization_version u32 = 2

llama_model_loader: - kv 20: general.file_type u32 = 15

llama_model_loader: - type f32: 121 tensors

llama_model_loader: - type q5_0: 12 tensors

llama_model_loader: - type q8_0: 12 tensors

llama_model_loader: - type q4_K: 133 tensors

llama_model_loader: - type q6_K: 13 tensors

llm_load_vocab: special tokens definition check successful ( 293/151936 ).

llm_load_print_meta: format = GGUF V3 (latest)

llm_load_print_meta: arch = qwen2

llm_load_print_meta: vocab type = BPE

llm_load_print_meta: n_vocab = 151936

llm_load_print_meta: n_merges = 151387

llm_load_print_meta: n_ctx_train = 32768

llm_load_print_meta: n_embd = 2048

llm_load_print_meta: n_head = 16

llm_load_print_meta: n_head_kv = 16

llm_load_print_meta: n_layer = 24

llm_load_print_meta: n_rot = 128

llm_load_print_meta: n_embd_head_k = 128

llm_load_print_meta: n_embd_head_v = 128

llm_load_print_meta: n_gqa = 1

llm_load_print_meta: n_embd_k_gqa = 2048

llm_load_print_meta: n_embd_v_gqa = 2048

llm_load_print_meta: f_norm_eps = 0.0e+00

llm_load_print_meta: f_norm_rms_eps = 1.0e-06

llm_load_print_meta: f_clamp_kqv = 0.0e+00

llm_load_print_meta: f_max_alibi_bias = 0.0e+00

llm_load_print_meta: f_logit_scale = 0.0e+00

llm_load_print_meta: n_ff = 5504

llm_load_print_meta: n_expert = 0

llm_load_print_meta: n_expert_used = 0

llm_load_print_meta: causal attn = 1

llm_load_print_meta: pooling type = 0

llm_load_print_meta: rope type = 2

llm_load_print_meta: rope scaling = linear

llm_load_print_meta: freq_base_train = 1000000.0

llm_load_print_meta: freq_scale_train = 1

llm_load_print_meta: n_yarn_orig_ctx = 32768

llm_load_print_meta: rope_finetuned = unknown

llm_load_print_meta: ssm_d_conv = 0

llm_load_print_meta: ssm_d_inner = 0

llm_load_print_meta: ssm_d_state = 0

llm_load_print_meta: ssm_dt_rank = 0

llm_load_print_meta: model type = 1B

llm_load_print_meta: model ftype = Q4_K - Medium

llm_load_print_meta: model params = 1.84 B

llm_load_print_meta: model size = 1.13 GiB (5.28 BPW)

llm_load_print_meta: general.name = Qwen1.5-1.8B-Chat-AWQ-fp16

llm_load_print_meta: BOS token = 151643 '<|endoftext|>'

llm_load_print_meta: EOS token = 151645 '<|im_end|>'

llm_load_print_meta: PAD token = 151643 '<|endoftext|>'

llm_load_print_meta: LF token = 148848 'ÄĬ'

llm_load_tensors: ggml ctx size = 0.11 MiB

llm_load_tensors: CPU buffer size = 1155.67 MiB

...................................................................

llama_new_context_with_model: n_ctx = 512

llama_new_context_with_model: n_batch = 512

llama_new_context_with_model: n_ubatch = 512

llama_new_context_with_model: freq_base = 1000000.0

llama_new_context_with_model: freq_scale = 1

llama_kv_cache_init: CPU KV buffer size = 96.00 MiB

llama_new_context_with_model: KV self size = 96.00 MiB, K (f16): 48.00 MiB, V (f16): 48.00 MiB

llama_new_context_with_model: CPU output buffer size = 296.75 MiB

llama_new_context_with_model: CPU compute buffer size = 300.75 MiB

llama_new_context_with_model: graph nodes = 868

llama_new_context_with_model: graph splits = 1

system_info: n_threads = 4 / 4 | AVX = 1 | AVX_VNNI = 1 | AVX2 = 1 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 1 | SSSE3 = 1 | VSX = 0 | MATMUL_INT8 = 0 |

main: interactive mode on.

Reverse prompt: '<|im_start|>user

'

sampling:

repeat_last_n = 64, repeat_penalty = 1.000, frequency_penalty = 0.000, presence_penalty = 0.000

top_k = 40, tfs_z = 1.000, top_p = 0.950, min_p = 0.050, typical_p = 1.000, temp = 0.800

mirostat = 0, mirostat_lr = 0.100, mirostat_ent = 5.000

sampling order:

CFG -> Penalties -> top_k -> tfs_z -> typical_p -> top_p -> min_p -> temperature

generate: n_ctx = 512, n_batch = 2048, n_predict = 512, n_keep = 10

== Running in interactive mode. ==

- Press Ctrl+C to interject at any time.

- Press Return to return control to LLaMa.

- To return control without starting a new line, end your input with '/'.

- If you want to submit another line, end your input with '\'.

system

You are a helpful assistant.

user

>

Input text: What’s AI?
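Looking back at the load log above, the KV cache line (96.00 MiB, split into 48.00 MiB each for K and V) can be reproduced from the printed hyperparameters. The sketch below assumes the cache stores one f16 value (2 bytes) per layer, per context position, per K or V embedding dimension; the function name `kv_cache_mib` is hypothetical and the calculation is my reading of the log, not code taken from llama.cpp.

```python
MIB = 1024 ** 2  # bytes per MiB


def kv_cache_mib(n_layer: int, n_ctx: int, n_embd_k_gqa: int,
                 n_embd_v_gqa: int, bytes_per_elem: int = 2) -> tuple[float, float]:
    """Return (K, V) cache sizes in MiB, assuming f16 storage by default."""
    k_bytes = n_layer * n_ctx * n_embd_k_gqa * bytes_per_elem
    v_bytes = n_layer * n_ctx * n_embd_v_gqa * bytes_per_elem
    return k_bytes / MIB, v_bytes / MIB


# Values taken from the log: n_layer = 24, n_ctx = 512,
# n_embd_k_gqa = n_embd_v_gqa = 2048.
k, v = kv_cache_mib(24, 512, 2048, 2048)
print(f"K: {k:.2f} MiB, V: {v:.2f} MiB, total: {k + v:.2f} MiB")
# prints "K: 48.00 MiB, V: 48.00 MiB, total: 96.00 MiB"
```

Under the same assumption, running at the full training context of 32768 tokens would need a cache 64 times larger, about 6 GiB, which is worth keeping in mind on a CPU-only machine with limited RAM.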

Example output:

References

- https://github.com/ggerganov/llama.cpp