<!-- This crawler targets JDK 1.8 -->

<!-- Dependency the crawler needs (goes inside <dependencies> in pom.xml) -->
<dependency>
    <groupId>org.apache.httpcomponents</groupId>
    <artifactId>httpclient</artifactId>
    <version>4.5.2</version>
</dependency>

<!-- Logging dependency used by the crawler -->
<dependency>
    <groupId>org.slf4j</groupId>
    <artifactId>slf4j-log4j12</artifactId>
    <version>1.7.25</version>
</dependency>

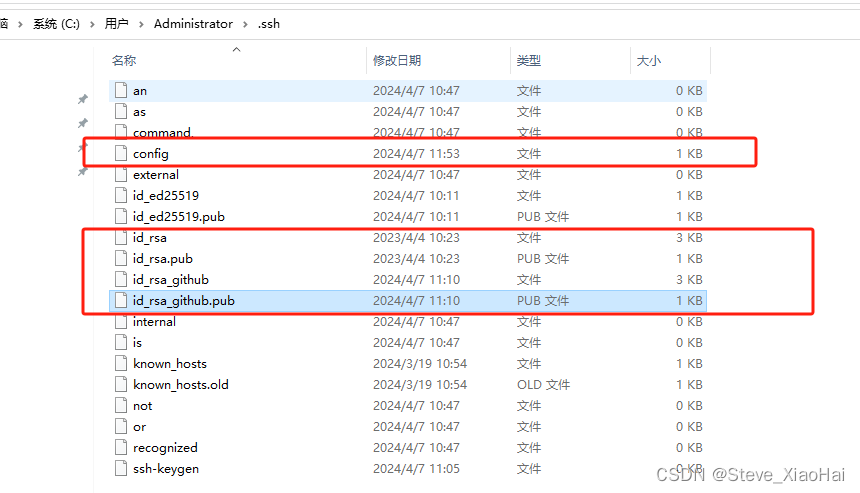

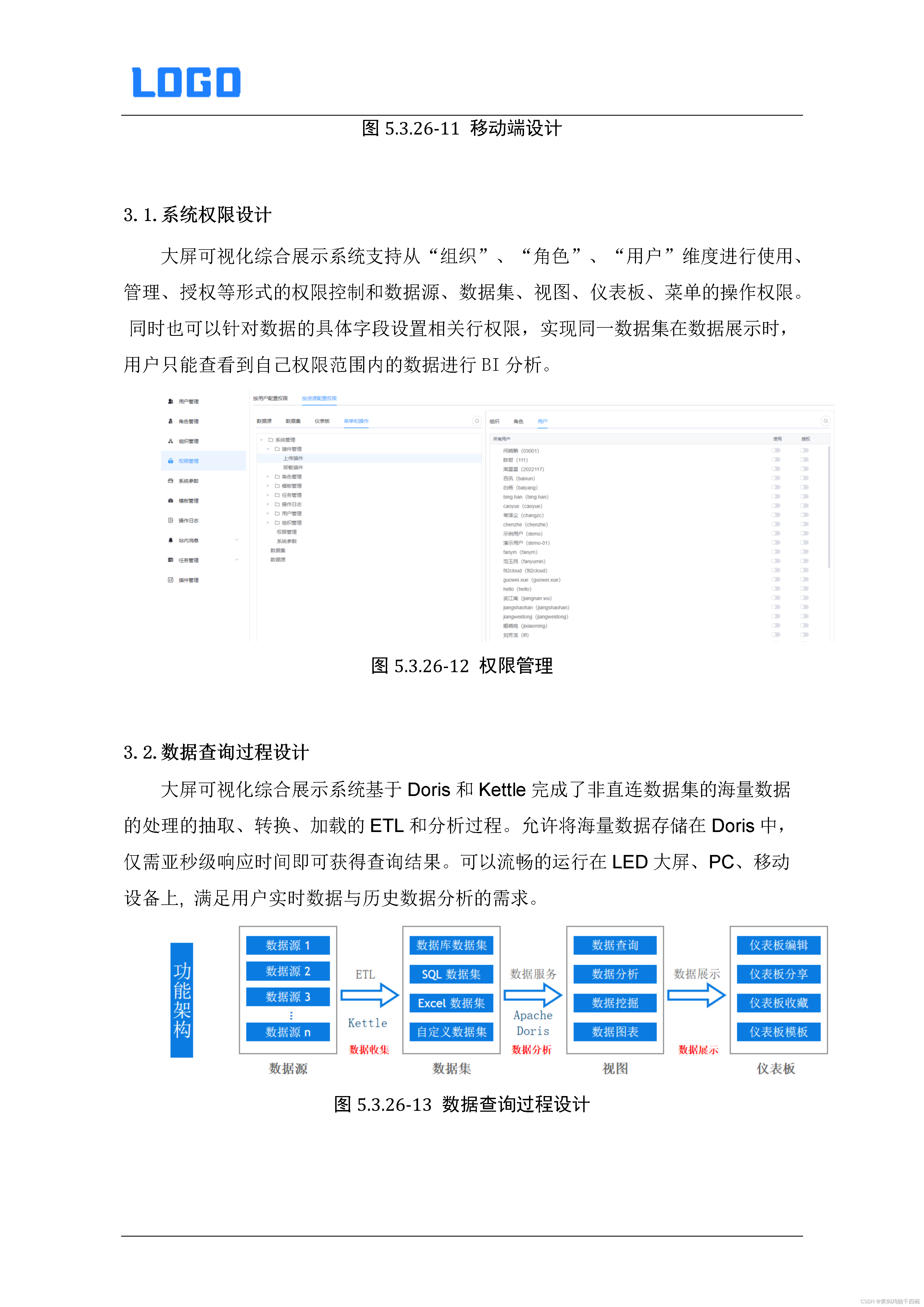

Where the crawler's configuration files are located and where to put them
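The screenshots are not reproduced in text, so the following rests on an assumption: with the slf4j-log4j12 dependency, the configuration file is most likely `log4j.properties`, which log4j 1.2 loads from the root of the classpath (in a Maven project, files under `src/main/resources` are copied there at build time). A minimal sketch, using only the JDK, to check whether such a file is actually visible at runtime; the class name `ClasspathConfigCheck` and the file name are illustrative, not taken from the original.

```java
import java.net.URL;

public class ClasspathConfigCheck {

    // Returns true if the named resource is visible at the root of the classpath.
    // In a Maven project, files under src/main/resources end up there after a build.
    public static boolean onClasspath(String name) {
        URL url = ClasspathConfigCheck.class.getClassLoader().getResource(name);
        return url != null;
    }

    public static void main(String[] args) {
        // Hypothetical file name: assumes the config file in the screenshots is log4j.properties.
        String name = "log4j.properties";
        if (onClasspath(name)) {
            System.out.println(name + " found on the classpath");
        } else {
            System.out.println(name + " NOT found; place it under src/main/resources");
        }
    }
}
```

If the file is missing, log4j 1.2 typically prints a "No appenders could be found" warning instead of the crawler's log output.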

package day02;

import org.apache.http.HttpEntity;
import org.apache.http.NameValuePair;
import org.apache.http.client.entity.UrlEncodedFormEntity;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpPost;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.message.BasicNameValuePair;
import org.apache.http.util.EntityUtils;

import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

public class pacohngde {
    public static void main(String[] args) throws IOException {
        // Note: this approach fetches the whole page at the URL.
        // 1. "Open the browser": create the HttpClient object.
        //    try-with-resources closes the client ("closes the browser") when done.
        try (CloseableHttpClient aDefault = HttpClients.createDefault()) {
            // Example of a combined URL: https://search.bilibili.com/all?keyword=药水哥&search_source=1
            // Put simply, the steps below combine the site address with parameters,
            // mainly so the site's search function can be used.
            // Note: the example URL passes the parameters in the query string (GET);
            // this code instead sends them as a POST form body.
            // 2. Create the HttpPost object and set the URL to request.
            HttpPost httpPost = new HttpPost("https://search.bilibili.com/all");
            // Declare a List that holds the form parameters.
            List<NameValuePair> params = new ArrayList<>();
            params.add(new BasicNameValuePair("keyword", "药水哥"));
            // Create the form entity: the first argument is the form data, the second the encoding.
            UrlEncodedFormEntity urlEncodedFormEntity = new UrlEncodedFormEntity(params, StandardCharsets.UTF_8);
            httpPost.setEntity(urlEncodedFormEntity);
            System.out.println("URL to crawl: " + httpPost);
            // 3. "Press Enter": send the request with the HttpClient object and get the response.
            //    try-with-resources releases the response even if reading it throws.
            try (CloseableHttpResponse response = aDefault.execute(httpPost)) {
                // 4. Parse the response: 200 means OK, any other status is treated as an error.
                if (response.getStatusLine().getStatusCode() == 200) {
                    // Get the crawled data.
                    HttpEntity httpEntity = response.getEntity();
                    // Read the body as a String, falling back to UTF-8 if the response declares no charset.
                    String content = EntityUtils.toString(httpEntity, StandardCharsets.UTF_8);
                    // Print it.
                    System.out.println(content);
                }
            }
        }
    }
}
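The example URL in the code's comments (`https://search.bilibili.com/all?keyword=药水哥&search_source=1`) carries the search parameters in the query string, which means a GET request. The sketch below shows that variant with only the JDK 8 standard library: `URLEncoder` builds the query string using the same `application/x-www-form-urlencoded` encoding that `UrlEncodedFormEntity` applies to a POST body, and `HttpURLConnection` stands in for HttpClient's `HttpGet` (a swapped-in API, not the author's code). The class and method names are hypothetical, and whether the site returns full results to a non-browser client is not verified here.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UnsupportedEncodingException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

public class SearchGetSketch {

    // Form-encodes one value (space becomes '+', non-ASCII becomes %XX UTF-8 bytes).
    private static String enc(String s) {
        try {
            return URLEncoder.encode(s, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // Every JVM must support UTF-8, so this should never happen.
            throw new IllegalStateException(e);
        }
    }

    // Appends key=value pairs to the base URL: base?k1=v1&k2=v2 (in map iteration order).
    public static String buildSearchUrl(String base, Map<String, String> params) {
        StringBuilder sb = new StringBuilder(base);
        char sep = '?';
        for (Map.Entry<String, String> e : params.entrySet()) {
            sb.append(sep).append(enc(e.getKey())).append('=').append(enc(e.getValue()));
            sep = '&';
        }
        return sb.toString();
    }

    // Sends a GET request; returns the body if the status is 200, otherwise null.
    public static String fetch(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        try {
            conn.setRequestMethod("GET");
            if (conn.getResponseCode() != 200) {
                return null;
            }
            try (InputStream in = conn.getInputStream()) {
                ByteArrayOutputStream out = new ByteArrayOutputStream();
                byte[] buf = new byte[8192];
                int n;
                while ((n = in.read(buf)) != -1) {
                    out.write(buf, 0, n);
                }
                // Assumes a UTF-8 page; a real crawler should honor the Content-Type charset.
                return new String(out.toByteArray(), StandardCharsets.UTF_8);
            }
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        Map<String, String> params = new LinkedHashMap<>();
        params.put("keyword", "\u836F\u6C34\u54E5"); // same keyword as the original example
        params.put("search_source", "1");
        String url = buildSearchUrl("https://search.bilibili.com/all", params);
        System.out.println("URL to crawl: " + url);
        String content = fetch(url);
        System.out.println(content != null ? content : "Request failed (status was not 200)");
    }
}
```

For the keyword above, `buildSearchUrl` produces `https://search.bilibili.com/all?keyword=%E8%8D%AF%E6%B0%B4%E5%93%A5&search_source=1`. Staying with HttpClient instead, the same URL could be built with `new URIBuilder("https://search.bilibili.com/all").addParameter("keyword", ...).build()` and passed to `new HttpGet(uri)`.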