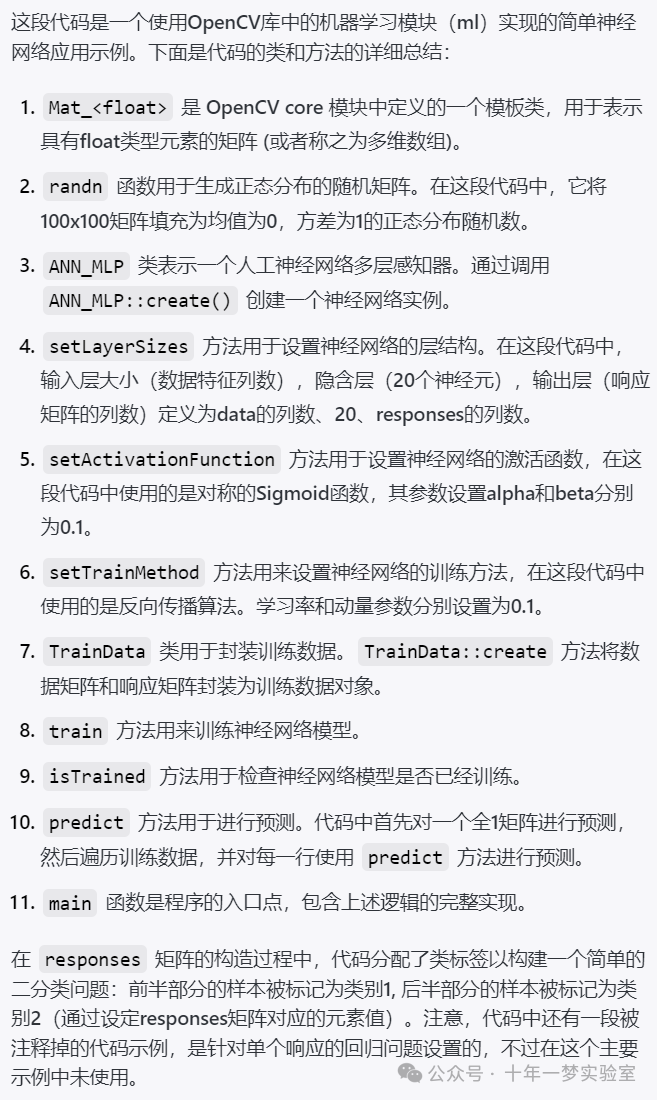

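```cpp
#include <opencv2/ml/ml.hpp> // OpenCV machine learning module
#include <cstdio>            // printf
#include <iostream>          // cout

using namespace std;    // use the standard namespace
using namespace cv;     // use the OpenCV namespace
using namespace cv::ml; // use the OpenCV machine learning namespace

int main()
{
    // Create random training data
    Mat_<float> data(100, 100); // 100 x 100 float matrix used as the data
    // Fill the data from a normal distribution with mean 0 and standard deviation 1
    randn(data, Mat::zeros(1, 1, data.type()), Mat::ones(1, 1, data.type()));

    // Assign half of the samples to each class
    Mat_<float> responses(data.rows, 2); // same row count as data, 2 columns, used as the responses (outputs)
    for (int i = 0; i < data.rows; ++i) // iterate over all samples
    {
        if (i < data.rows / 2)
        {
            responses(i, 0) = 1; // first half of the samples: first class
            responses(i, 1) = 0;
        }
        else
        {
            responses(i, 0) = 0; // second half of the samples: second class
            responses(i, 1) = 1;
        }
    }

    /*
    // If only a single response is needed (regression), use the following instead
    Mat_<float> responses(data.rows, 1); // single-column response matrix
    for (int i = 0; i < responses.rows; ++i)
        responses(i, 0) = i < responses.rows / 2 ? 0 : 1; // first half labeled 0, second half labeled 1
    */

    // Create the neural network
    Mat_<int> layerSizes(1, 3);        // 1 x 3 integer matrix giving the size of each layer
    layerSizes(0, 0) = data.cols;      // input layer: one neuron per data column
    layerSizes(0, 1) = 20;             // hidden layer: 20 neurons
    layerSizes(0, 2) = responses.cols; // output layer: one neuron per response column

    Ptr<ANN_MLP> network = ANN_MLP::create(); // create the multilayer perceptron
    network->setLayerSizes(layerSizes);       // set the layer sizes
    network->setActivationFunction(ANN_MLP::SIGMOID_SYM, 0.1, 0.1); // symmetric sigmoid, activation parameters 0.1
    network->setTrainMethod(ANN_MLP::BACKPROP, 0.1, 0.1);           // backpropagation, learning rate and momentum both 0.1

    Ptr<TrainData> trainData = TrainData::create(data, ROW_SAMPLE, responses); // build training data from data and responses
    network->train(trainData); // train the network

    if (network->isTrained()) // only predict if training succeeded
    {
        printf("Predict one-vector:\n");
        Mat result; // holds the prediction result
        network->predict(Mat::ones(1, data.cols, data.type()), result); // predict the output for an all-ones vector
        cout << result << endl;

        printf("Predict training data:\n");
        for (int i = 0; i < data.rows; ++i) // iterate over all training samples
        {
            network->predict(data.row(i), result); // predict each row
            cout << result << endl;                // print the prediction for that row
        }
    }

    return 0;
}
```

This code uses OpenCV's machine learning module to create and train a simple multilayer perceptron (neural network). It generates random data with corresponding labels, sets the network's layer structure and training parameters, and trains the network on the random data. Finally, it uses the trained model to predict the output for an all-ones vector and for each training sample.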

Terminal output:

Predict one-vector:

[0.49815065, 0.49921253]

Predict training data:

[0.49815512, 0.4992235]

[0.49815148, 0.4992148]

[0.49816421, 0.49922886]

[0.49815488, 0.49921444]

[0.4981553, 0.49921337]

[0.49815708, 0.49922335]

[0.49815473, 0.49921861]

[0.49815747, 0.49921554]

[0.49815911, 0.49922082]

[0.4981544, 0.49921104]

[0.49815312, 0.49920845]

[0.49815834, 0.4992134]

[0.4981578, 0.499221]

[0.49815252, 0.49921796]

[0.49815798, 0.49920991]

[0.4981553, 0.49921247]

[0.49815372, 0.49922264]

[0.49815732, 0.49922493]

[0.49815607, 0.49922168]

[0.49814662, 0.49921048]

[0.49815735, 0.49921829]

[0.49815637, 0.4992227]

[0.49815381, 0.49921602]

[0.49815139, 0.49921939]

[0.49815428, 0.49921471]

[0.4981533, 0.49922156]

[0.49815425, 0.49922514]

[0.49815568, 0.4992153]

[0.49816135, 0.49922994]

[0.49815443, 0.49921793]

[0.49815369, 0.49921811]

[0.49815857, 0.4992213]

[0.49815249, 0.49921635]

[0.49815717, 0.49922404]

[0.49815562, 0.49922115]

[0.49815992, 0.49922723]

[0.49816322, 0.49921566]

[0.49815544, 0.49922326]

[0.4981539, 0.49922061]

[0.49815902, 0.49923074]

[0.49816567, 0.49922168]

[0.49816078, 0.49921575]

[0.49815938, 0.49921471]

[0.49815077, 0.4992201]

[0.49815583, 0.49921182]

[0.49815345, 0.49921283]

[0.4981598, 0.49921614]

[0.49815416, 0.49921855]

[0.49815762, 0.4992173]

[0.49815825, 0.49921978]

[0.49815825, 0.49922138]

[0.49815908, 0.49921981]

[0.49815544, 0.49921504]

[0.49816117, 0.49923223]

[0.49816099, 0.49921462]

[0.49815351, 0.49922308]

[0.49816227, 0.49923256]

[0.49815354, 0.49922392]

[0.49815038, 0.49922356]

[0.49816525, 0.49922138]

[0.49815342, 0.49922705]

[0.49815989, 0.49922326]

[0.49815401, 0.49921766]

[0.49815825, 0.49921879]

[0.49815637, 0.49921662]

[0.49815908, 0.49922174]

[0.49815243, 0.49921563]

[0.49815255, 0.49921766]

[0.49815673, 0.49921358]

[0.49816242, 0.49922019]

[0.49816835, 0.4992229]

[0.49815518, 0.49920934]

[0.49815202, 0.49921903]

[0.49815437, 0.49922141]

[0.49815497, 0.49921486]

[0.49815184, 0.49921608]

[0.49815631, 0.49922335]

[0.49815813, 0.49921325]

[0.49815223, 0.49922213]

[0.49815005, 0.49921677]

[0.49815634, 0.49921936]

[0.49816167, 0.49923047]

[0.49815112, 0.49921957]

[0.49815676, 0.49921891]

[0.49815649, 0.49922562]

[0.49815223, 0.49921489]

[0.49815086, 0.49922147]

[0.49815762, 0.49921981]

[0.49815792, 0.49921963]

[0.49815729, 0.49921337]

[0.49815807, 0.49922234]

[0.49815661, 0.49921706]

[0.49815363, 0.49922809]

[0.49815917, 0.49921337]

[0.4981555, 0.49922144]

[0.49816197, 0.49922115]

[0.49815977, 0.49922109]

[0.49816063, 0.4992297]

[0.49815196, 0.49921131]

[0.49814603, 0.49921173]