Title

Fully end-to-end deep-learning-based diagnosis of pancreatic tumors

01

Literature Digest: Introduction

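Pancreatic cancer is one of the most common tumors; its prognosis is poor and it is usually fatal. Patients without tumors need only further observation, whereas a diagnosis of pancreatic tumor calls for urgent action and a definite surgical plan. Delayed treatment increases the risk of deterioration and death, so accurate diagnosis of pancreatic tumors is critical to successful surgical treatment.

Artificial intelligence can help improve the accuracy of image interpretation and make diagnostic expertise more widely available. However, AI methods for pancreatic tumor diagnosis are not yet mature, because the task is especially challenging. First, the target is highly variable in shape, size, and location, and occupies only a very small part of the whole CT image. In our CT dataset, the pancreas occupies only about 1.3% of each CT image. The rest of the image comes from other organs, such as the liver, stomach, and intestines, and from the image background, and contributes almost nothing to the AI model's diagnosis. Second, the high similarity between tumors and the surrounding tissue further reduces diagnostic accuracy and efficiency. Third, suitable pancreatic image datasets are lacking, which directly hampers the development of AI models.

Previous studies have tried to address these problems. One effective approach is pancreas segmentation. Chakraborty et al. used random forests and support vector machines, trained on manually segmented CT images, to predict high-risk intraductal papillary mucinous neoplasms (IPMN) of the pancreas. Wei et al. proposed a support vector machine system with 24 guideline-based features and 385 high-throughput radiomics features, combined with regions of interest (ROI) marked by radiologists, to diagnose pancreatic serous cystic neoplasms (SCN). With the development of deep-learning frameworks, researchers have been able to build effective deep encoder-decoder networks for pancreas segmentation, which has improved diagnostic accuracy. Zhu et al. reported a multi-scale segmentation method that screens for pancreatic ductal adenocarcinoma (PDAC) by checking whether a sufficient number of voxels are segmented as tumor. Liu et al. first segmented the pancreas and then classified abnormalities to detect PDAC. However, obtaining an immediate diagnosis and treatment recommendation efficiently, without increasing the workload of medical experts or the cost of the procedure, remains a major problem. Because the original patient data (from hospital records) include diagnostic reports of the CT examinations as well as images from different imaging planes and angiography phases, only a small proportion of the images are valid CT images usable for diagnosis. The key to applying a deep-learning framework successfully therefore lies in detailed, automatic preprocessing of the original data.

This study proposes a fully end-to-end deep-learning (FEE-DL) model for automatically diagnosing pancreatic tumors from original abdominal CT images. The method uses four steps to locate pancreatic tumors in the original data: image screening, pancreas location, pancreas segmentation, and pancreatic tumor diagnosis.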

Abstract-Background

Artificial intelligence can facilitate clinical decision making by considering massive amounts of medical imaging data. Various algorithms have been implemented for different clinical applications. Accurate diagnosis and treatment require reliable and interpretable data. For pancreatic tumor diagnosis, only 58.5% of the images from the First Affiliated Hospital and the Second Affiliated Hospital, Zhejiang University School of Medicine, are used; manually filtering out the images that the diagnostic model does not use directly adds labor and time costs.

Results

We established a fully end-to-end deep-learning model for diagnosing pancreatic tumors and proposing treatment. The model considers original abdominal CT images without any manual preprocessing. Our artificial-intelligence-based system achieved an area under the curve of 0.871 and an F1 score of 88.5% using an independent testing dataset containing 107,036 clinical CT images from 347 patients. The average accuracy for all tumor types was 82.7%, and the independent accuracies of identifying intraductal papillary mucinous neoplasm and pancreatic ductal adenocarcinoma were 100% and 87.6%, respectively. The average test time per patient was 18.6 s, compared with at least 8 min for manual reviewing. Furthermore, the model provided a transparent and interpretable diagnosis by producing saliency maps highlighting the regions relevant to its decision.
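
As a reminder of how metrics such as the F1 score and accuracy are derived, the following minimal sketch computes them from the four cells of a binary confusion matrix. The counts passed to `binary_metrics` are hypothetical and are not the paper's data; only the two timing figures (18.6 s and 8 min) come from the text above.

```python
def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Precision, recall, F1 and accuracy from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}


# Hypothetical counts, chosen only to illustrate the formulas.
metrics = binary_metrics(tp=80, fp=10, fn=10, tn=100)

# Speed-up implied by the reported times: at least 8 min of manual
# review versus 18.6 s of model time per patient.
speedup = (8 * 60) / 18.6
```

With the reported times, the ratio works out to roughly 26, so the model is at least about 26 times faster per patient than manual review.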

Conclusions

The proposed model can potentially deliver efficient and accurate preoperative diagnoses that could aid the surgical management of pancreatic tumors.

Method

This study used a training dataset of 143,945 dynamic contrast-enhanced CT images of the abdomen from 319 patients. The proposed model contained four stages: image screening, pancreas location, pancreas segmentation, and pancreatic tumor diagnosis.
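
The four stages can be read as a chain in which each stage filters or transforms the slices passed on by the previous one. The sketch below shows that control flow only. The `Stages` container, its four callables, the `threshold` parameter, and the averaging of slice scores into a patient-level decision are all assumptions made for illustration; the paper's actual networks and fusion step are not reproduced here.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence

Image = List[List[float]]  # placeholder type for one 2-D CT slice


@dataclass
class Stages:
    """Hypothetical callables standing in for the trained sub-networks."""
    is_valid: Callable[[Image], bool]      # stage 1: image screening
    has_pancreas: Callable[[Image], bool]  # stage 2: pancreas location
    segment: Callable[[Image], Image]      # stage 3: pancreas segmentation
    tumor_score: Callable[[Image], float]  # stage 4: tumor diagnosis


def diagnose_patient(slices: Sequence[Image], stages: Stages,
                     threshold: float = 0.5) -> Optional[bool]:
    """Run the four stages in order; return None if no slice survives."""
    valid = [s for s in slices if stages.is_valid(s)]
    located = [s for s in valid if stages.has_pancreas(s)]
    if not located:
        return None
    scores = [stages.tumor_score(stages.segment(s)) for s in located]
    # Assumption: slice scores are averaged into one patient-level score.
    return sum(scores) / len(scores) >= threshold


# Toy stand-ins so the sketch runs without any trained model.
toy = Stages(
    is_valid=lambda s: len(s) > 0,
    has_pancreas=lambda s: s[0][0] > 0,
    segment=lambda s: s,
    tumor_score=lambda s: s[0][0],
)
result = diagnose_patient([[[0.9]], [[0.7]], []], toy)
```

Keeping the stages as interchangeable callables makes it possible to test the control flow separately from the networks.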

Figure

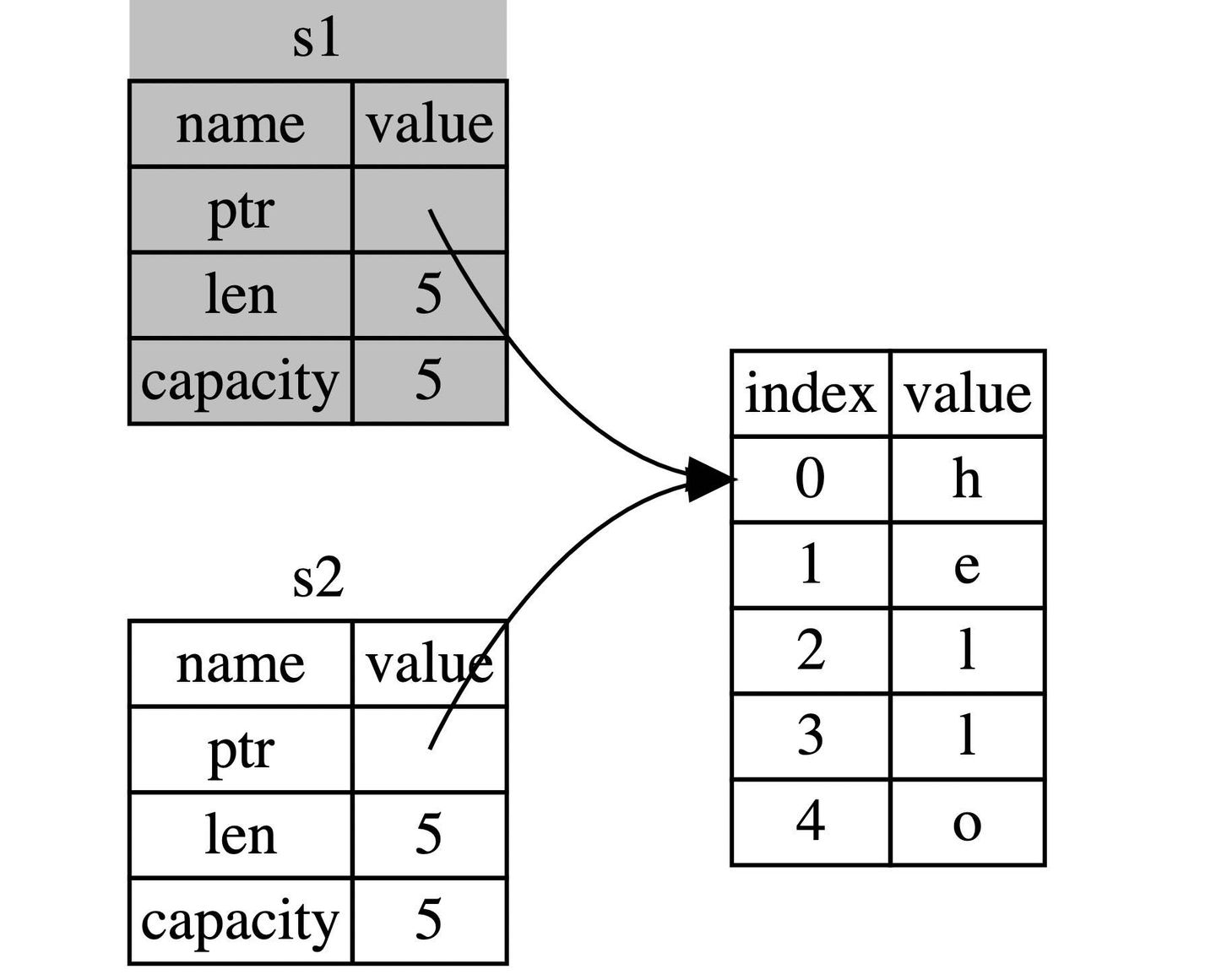

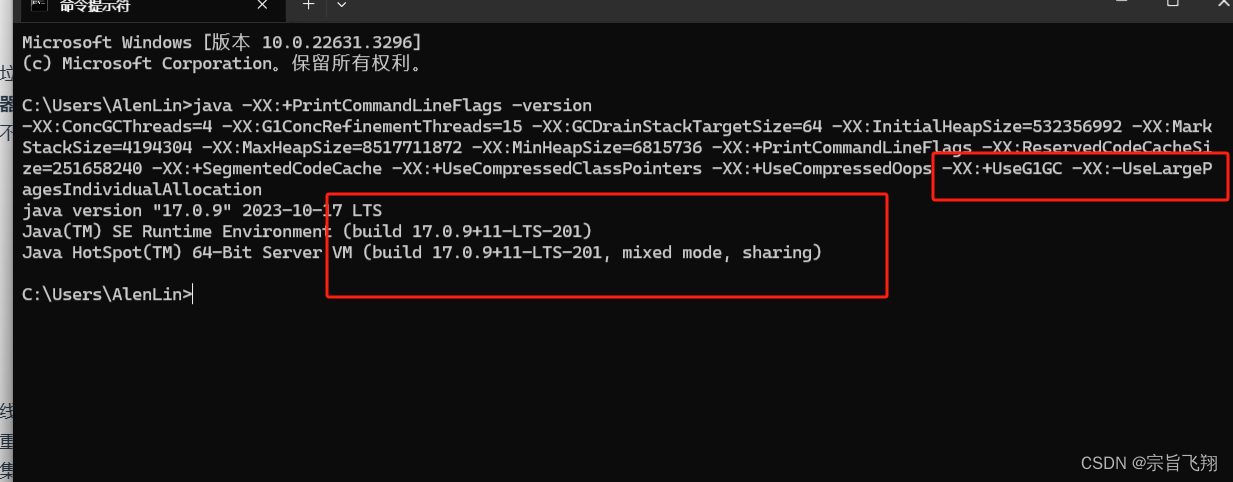

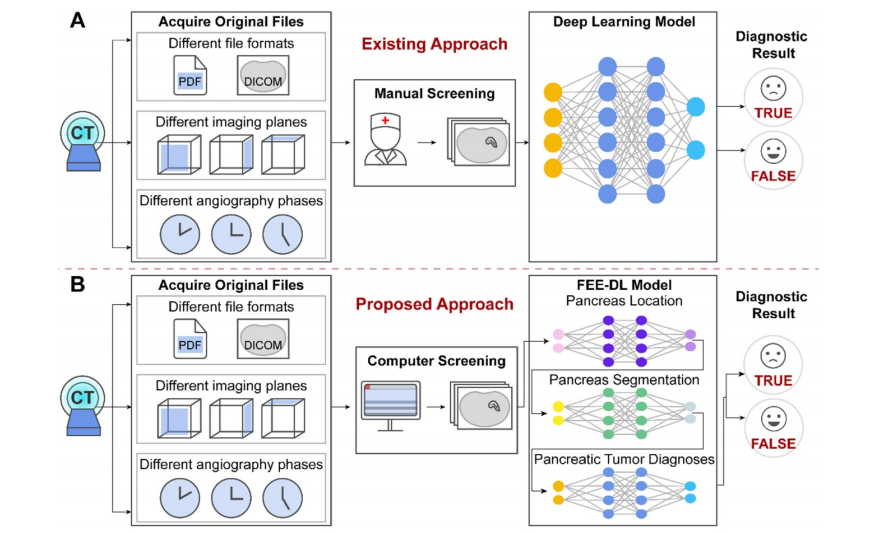

Figure 1. The original files obtained from the hospitals contain different file formats, different imaging planes and different angiography phases. (A) Artificial intelligence approaches currently used for pancreatic diagnosis focus on the analysis of valid CT images, and ignore the importance of screening the original data at an early stage. (B) Our proposed FEE-DL model first screens out transverse plane CT images containing the pancreas from complex original files before deep-learning diagnosis.

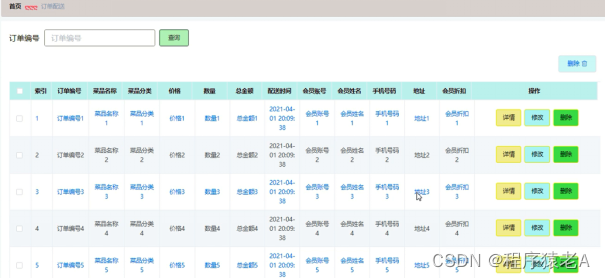

Figure 2. Multiplex original clinical data. (A-C) Images not directly used by the FEE-DL model containing (A) coronal plane CT scan, (B) sagittal plane CT scan, and (C) CT scan without pancreas. (D) Arterial, (E) venous, and (F) delayed phase CT scans.
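
The text does not say how the screening stage tells imaging planes apart. As one possible illustration, and not the authors' method, the sketch below infers the plane from slice metadata. It assumes the files are DICOM and carry the standard Image Orientation (Patient) attribute, which holds six direction cosines: three for the image rows and three for the columns. The function name `imaging_plane` is hypothetical.

```python
def imaging_plane(orientation):
    """Classify a slice as transverse, coronal or sagittal.

    `orientation` is the six-value DICOM Image Orientation (Patient)
    attribute: row direction cosines followed by column direction cosines.
    """
    rx, ry, rz, cx, cy, cz = orientation
    # The slice normal is the cross product of the row and column vectors.
    normal = (ry * cz - rz * cy, rz * cx - rx * cz, rx * cy - ry * cx)
    # The dominant axis of the normal identifies the plane:
    # x -> sagittal, y -> coronal, z -> transverse.
    axis = max(range(3), key=lambda i: abs(normal[i]))
    return ("sagittal", "coronal", "transverse")[axis]


planes = [
    imaging_plane([1, 0, 0, 0, 1, 0]),   # typical transverse slice
    imaging_plane([1, 0, 0, 0, 0, -1]),  # typical coronal slice
    imaging_plane([0, 1, 0, 0, 0, -1]),  # typical sagittal slice
]
```

A metadata check like this cannot tell whether a transverse slice contains the pancreas, as in panel (C); that still needs an image-based step.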

Figure 3. Workflow diagram of the model’s training and testing phases. In the training phase, after valid images are screened from the original abdominal CT images and augmented, we constructed a deep-learning model involving pancreas location, pancreas segmentation, image fusion, and pancreatic tumor diagnosis. The loss function is calculated from the prediction and the label, and the weights of the neural networks are updated by the back-propagation algorithm. The best weights are fixed for subsequent use on the testing dataset to diagnose pancreatic tumors.
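
The training cycle in the caption (compute a loss from prediction and label, update the weights from its gradient, keep the best weights) can be shown on a deliberately tiny stand-in. The sketch below trains a one-feature logistic classifier on synthetic data rather than a deep network on CT images. The data, learning rate, epoch count, and the choice of validation loss as the criterion for "best" are all assumptions made for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def bce_loss(w, b, data):
    """Mean binary cross-entropy between predictions and labels."""
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = sigmoid(w * x + b)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def train(train_data, val_data, epochs=200, lr=0.5):
    """Gradient descent that remembers the weights with the lowest val loss."""
    w, b = 0.0, 0.0
    best = (bce_loss(w, b, val_data), w, b)
    n = len(train_data)
    for _ in range(epochs):
        # Gradient of the mean cross-entropy with respect to w and b.
        gw = sum((sigmoid(w * x + b) - y) * x for x, y in train_data) / n
        gb = sum((sigmoid(w * x + b) - y) for x, y in train_data) / n
        w, b = w - lr * gw, b - lr * gb
        val = bce_loss(w, b, val_data)
        if val < best[0]:
            best = (val, w, b)  # keep the best weights seen so far
    return best


def make_data(n, rng):
    """Synthetic 1-D samples: label 1 when the feature is positive."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 1 if x > 0 else 0) for x in xs]


rng = random.Random(0)
train_data, val_data = make_data(200, rng), make_data(50, rng)
best_val, best_w, best_b = train(train_data, val_data)
```

In a deep network the two gradient lines are replaced by back-propagation through all layers, but the cycle of loss, update, and checkpointing is the same.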

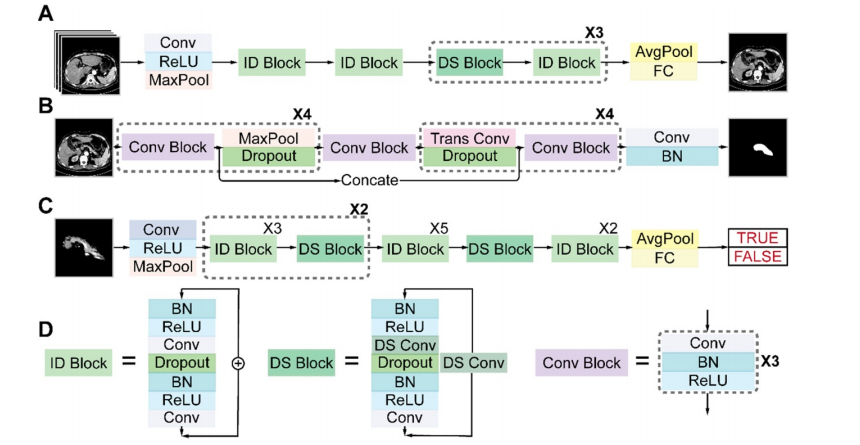

Figure 4. Architectures of the three sub-networks: (A) ResNet18 for pancreas location, (B) U-Net32 for pancreas segmentation, and (C) ResNet34 for pancreatic tumor diagnosis. (D) Detailed structures of the identity (ID), down sampling (DS), and convolution (Conv) blocks. (AvgPool, average-pooling; BN, batch normalization; Concate, concatenation; FC, fully connected; MaxPool, max-pooling; ReLU, rectified linear unit; Trans, transposed).

Figure 5. Performance of each sub-network in the training and validation datasets. (A) ResNet18 for pancreas location. (B) U-Net32 for pancreas segmentation. (C) ResNet34 for pancreatic tumor diagnoses. (D) Representative results of pancreas segmentation. Rows from top to bottom are input CT images, ground truth, prediction, fusion results, and pancreas contours in CT, respectively, where radiologists’ annotations are shown in green and computerized segmentation is displayed in red. Higher resolution images are also shown on the lower left side.

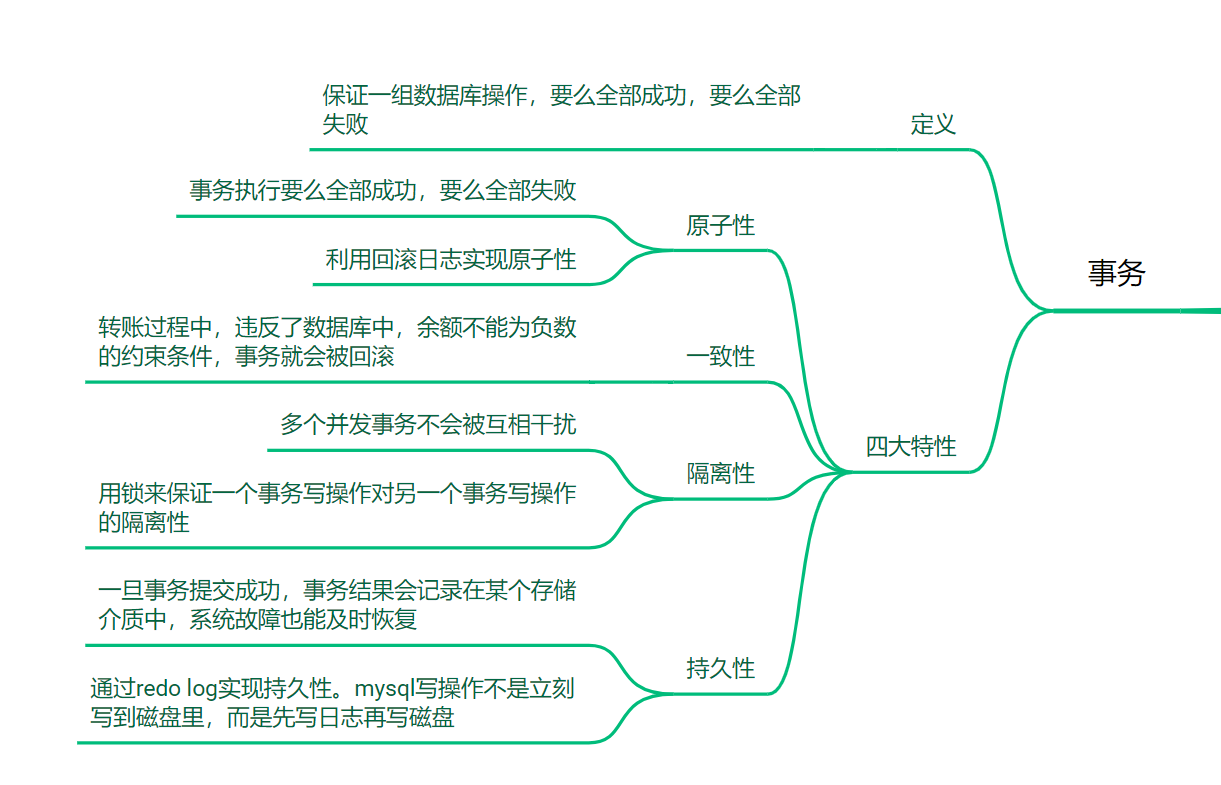

Figure 6. Performance of the FEE-DL model. (A) Confusion matrix. (B) Receiver operating characteristic (ROC) curves of the model and random prediction for comparison. The area under the curve (AUC) was 0.871. (C) Prediction accuracy of different pancreatic tumors with respect to the average accuracy (82.7%). (IPMN, intraductal papillary mucinous neoplasm; PDAC, pancreatic ductal adenocarcinoma; SCN, serous cystic neoplasm).
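
The AUC in panel (B) can be read as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The sketch below computes it by direct pairwise comparison. The labels and scores are made up for illustration, and the function name `roc_auc` is not from the paper.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))


# Illustrative values only.
auc_example = roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1])
auc_constant = roc_auc([1, 1, 0, 0], [0.5, 0.5, 0.5, 0.5])
```

A model that gives every case the same score gets 0.5, which corresponds to the random-prediction line in the figure. The pairwise loop is quadratic in the number of cases; a rank-based formula gives the same value faster on large test sets.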

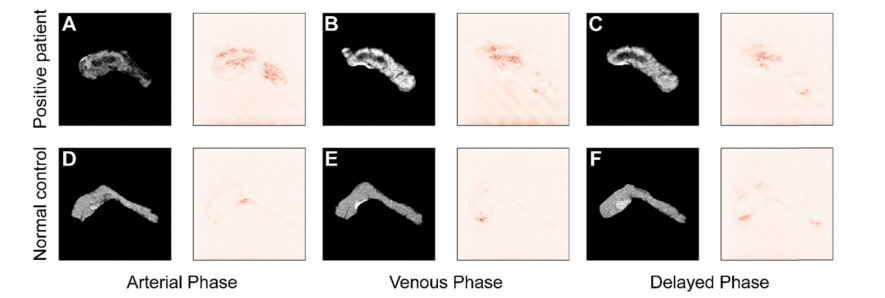

Figure 7. Comparison of saliency maps for (A-C) a tumor patient and (D-F) a normal control in different angiography phases: left, arterial phase; center, venous phase; and right, delayed phase.

Table

Table 1 lists the types of pancreatic tumor and their frequency in the training and testing datasets. Pancreatic cancer (PDAC) and pancreatic tumors such as IPMN, pancreatic neuroendocrine tumors (PNET), SCN, and ‘Other’ are considered as positive cases. Rare cases or lesions on the pancreas caused by

Table 2. Patient characteristics in the training and testing datasets

Table 3. Performance of each sub-network
