Code example and how it works

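- The idea: the browser connects to our backend over WebSocket, and the backend uses client-go to open a long-lived `exec` connection to the remote apiserver. The pod container's input and output are redirected to our own I/O methods, which relay them over the WebSocket, so the browser behaves like a virtual terminal.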

- The message structures are as follows:

```go
type Connection struct {
	WsSocket    *websocket.Conn // main WebSocket connection
	OutWsSocket *websocket.Conn
	InChan      chan *WsMessage // input message channel
	OutChan     chan *WsMessage // output message channel
	Mutex       sync.Mutex      // concurrency control
	IsClosed    bool            // whether the connection is closed
	CloseChan   chan byte       // channel signaling that the connection is closed
}

// Message body
type WsMessage struct {
	MessageType int    `json:"messageType"`
	Data        []byte `json:"data"`
}

// Terminal rows and columns
type XtermMessage struct {
	Rows uint16 `json:"rows"`
	Cols uint16 `json:"cols"`
}
```
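The browser reports the terminal size as rows and columns (`XtermMessage`), while client-go's `TerminalSize` uses `Width`/`Height`, so columns map to width and rows to height. A minimal sketch of that conversion, assuming the front end sends a JSON payload like `{"rows":24,"cols":80}` (that wire format, the helper `parseResize`, and the zero-size check are assumptions; `terminalSize` is a local stand-in for `remotecommand.TerminalSize` so the example runs without client-go):

```go
package main

import (
	"encoding/json"
	"fmt"
)

// XtermMessage mirrors the struct above: terminal rows and columns.
type XtermMessage struct {
	Rows uint16 `json:"rows"`
	Cols uint16 `json:"cols"`
}

// terminalSize is a local stand-in for remotecommand.TerminalSize
// (same field names and types), so only the standard library is needed.
type terminalSize struct {
	Width  uint16
	Height uint16
}

// parseResize (hypothetical helper) decodes a resize payload from the browser
// (assumed JSON format) and converts it to the width/height form client-go expects.
func parseResize(payload []byte) (terminalSize, error) {
	var msg XtermMessage
	if err := json.Unmarshal(payload, &msg); err != nil {
		return terminalSize{}, fmt.Errorf("decode resize message: %w", err)
	}
	// A zero-sized terminal is almost certainly a bad message; reject it.
	if msg.Rows == 0 || msg.Cols == 0 {
		return terminalSize{}, fmt.Errorf("invalid terminal size %dx%d", msg.Cols, msg.Rows)
	}
	// Columns are the terminal width, rows are its height.
	return terminalSize{Width: msg.Cols, Height: msg.Rows}, nil
}
```

The resulting value is what would be pushed into `ResizeEvent`. Note also that `encoding/json` encodes a `[]byte` field such as `WsMessage.Data` as a base64 string, so the front end has to encode and decode it accordingly.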

- Next we need a handler that drives the terminal and receives messages; `ResizeEvent` is the channel that carries terminal resize events:

```go
type ContainerStreamHandler struct {
	WsConn      *Connection
	ResizeEvent chan remotecommand.TerminalSize
}
```

- To drive terminal resizing, we need to implement the `Next` method of the `TerminalSizeQueue` interface defined in client-go:

```go
/*
Copyright 2017 The Kubernetes Authors.

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
*/

package remotecommand

// TerminalSize and TerminalSizeQueue was a part of k8s.io/kubernetes/pkg/util/term
// and were moved in order to decouple client from other term dependencies

// TerminalSize represents the width and height of a terminal.
type TerminalSize struct {
	Width  uint16
	Height uint16
}

// TerminalSizeQueue is capable of returning terminal resize events as they occur.
type TerminalSizeQueue interface {
	// Next returns the new terminal size after the terminal has been resized. It returns nil when
	// monitoring has been stopped.
	Next() *TerminalSize
}
```

- Our implementation of `Next`:

```go
func (handler *ContainerStreamHandler) Next() (size *remotecommand.TerminalSize) {
	select {
	case ret := <-handler.ResizeEvent:
		size = &ret
	case <-handler.WsConn.CloseChan:
		return nil // this is important; see the explanation at the end
	}
	return
}
```
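To see both branches of `Next` in isolation, here is a self-contained sketch. `TerminalSize`, `TerminalSizeQueue` and the trimmed-down `Connection` are local mirrors of the client-go and post's types (assumptions, not the real definitions) so it runs with the standard library only. The compile-time assertion checks that the handler satisfies the interface.

```go
package main

// Local mirrors of client-go's remotecommand types (same shape, simplified).
type TerminalSize struct {
	Width  uint16
	Height uint16
}

type TerminalSizeQueue interface {
	Next() *TerminalSize
}

// Connection keeps only the field Next needs.
type Connection struct {
	CloseChan chan byte
}

type ContainerStreamHandler struct {
	WsConn      *Connection
	ResizeEvent chan TerminalSize
}

// Compile-time check that the handler implements the queue interface.
var _ TerminalSizeQueue = (*ContainerStreamHandler)(nil)

// Next blocks until either a resize event arrives or the connection is closed.
func (handler *ContainerStreamHandler) Next() *TerminalSize {
	select {
	case ret := <-handler.ResizeEvent:
		return &ret
	case <-handler.WsConn.CloseChan:
		return nil // tells the consumer loop to stop
	}
}
```

If a resize event and the close signal are both ready, `select` picks one at random; that is harmless here, because once no resize event is pending, a later call returns `nil`.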

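- When a resize event arrives on the `ResizeEvent` channel, `Next` returns it to client-go, which encodes it onto the resize stream to the apiserver. The relevant client-go source: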

```go
func (p *streamProtocolV3) handleResizes() {
	if p.resizeStream == nil || p.TerminalSizeQueue == nil {
		return
	}
	go func() {
		defer runtime.HandleCrash()

		encoder := json.NewEncoder(p.resizeStream)
		for {
			size := p.TerminalSizeQueue.Next() // receives our resize event
			if size == nil {
				return
			}
			if err := encoder.Encode(&size); err != nil {
				runtime.HandleError(err)
			}
		}
	}()
}
```

- Finally, here is the core implementation (only a rough skeleton; feel free to leave a comment if any detail is unclear):

```go
func ExecCommandInContainer(ctx context.Context, conn *tty.Connection, podName, namespace, containerName string) (err error) {
	kubeClient, err := k8s.CreateClientFromConfig([]byte(env.Kubeconfig))
	if err != nil {
		return
	}
	restConfig, err := clientcmd.RESTConfigFromKubeConfig([]byte(env.Kubeconfig))
	if err != nil {
		return
	}

	// Build the exec request
	req := kubeClient.CoreV1().RESTClient().Post().
		Resource("pods").
		Name(podName).
		Namespace(namespace).
		SubResource("exec").
		VersionedParams(&corev1.PodExecOptions{
			Command:   []string{"/bin/sh", "-c", "export LANG=\"en_US.UTF-8\"; [ -x /bin/bash ] && exec /bin/bash || exec /bin/sh"},
			Container: containerName,
			Stdin:     true,
			Stdout:    true,
			Stderr:    true,
			TTY:       true,
		}, scheme.ParameterCodec)

	// Upgrade the HTTP connection to the SPDY protocol
	exec, err := remotecommand.NewSPDYExecutor(restConfig, "POST", req.URL())
	if err != nil {
		return err
	}

	handler := &tty.ContainerStreamHandler{
		WsConn:      conn,
		ResizeEvent: make(chan remotecommand.TerminalSize),
	}

	// Core call: redirect stdin/stdout/stderr to handler, which therefore
	// must also implement io.Reader and io.Writer (not shown here).
	err = exec.StreamWithContext(ctx, remotecommand.StreamOptions{
		Stdin:             handler,
		Stdout:            handler,
		Stderr:            handler,
		TerminalSizeQueue: handler,
		Tty:               true,
	})
	return
}
```

- The core of the whole function is `StreamWithContext`, a client-go method. Let's look at it in detail:

```go
// StreamWithContext opens a protocol streamer to the server and streams until a client closes
// the connection or the server disconnects or the context is done.
func (e *spdyStreamExecutor) StreamWithContext(ctx context.Context, options StreamOptions) error {
	conn, streamer, err := e.newConnectionAndStream(ctx, options)
	if err != nil {
		return err
	}
	defer conn.Close()

	panicChan := make(chan any, 1)   // panic channel
	errorChan := make(chan error, 1) // error channel
	go func() {
		defer func() {
			if p := recover(); p != nil {
				panicChan <- p
			}
		}()
		errorChan <- streamer.stream(conn)
	}()

	select {
	case p := <-panicChan:
		panic(p)
	case err := <-errorChan:
		return err
	case <-ctx.Done():
		return ctx.Err()
	}
}
```

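- The method first opens the connection, then creates two channels, one for panics and one for errors, and runs the streamer in a goroutine. It returns as soon as that goroutine panics or returns, or the context is done. The core is `streamer.stream()`, which we analyze next: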

```go
func (p *streamProtocolV4) stream(conn streamCreator) error {
	// Create the streams to the apiserver
	if err := p.createStreams(conn); err != nil {
		return err
	}

	// now that all the streams have been created, proceed with reading & copying

	// Watch the error stream
	errorChan := watchErrorStream(p.errorStream, &errorDecoderV4{})

	// Listen for terminal resize events
	p.handleResizes()

	// Copy our stdin into remoteStdin, i.e. the remote standard input
	p.copyStdin()

	var wg sync.WaitGroup
	// Copy the remote stdout/stderr into our stdout/stderr
	p.copyStdout(&wg)
	p.copyStderr(&wg)

	// we're waiting for stdout/stderr to finish copying
	wg.Wait()

	// waits for errorStream to finish reading with an error or nil
	return <-errorChan
}
```

- The overall logic is quite clear; see the source code for the implementation details.

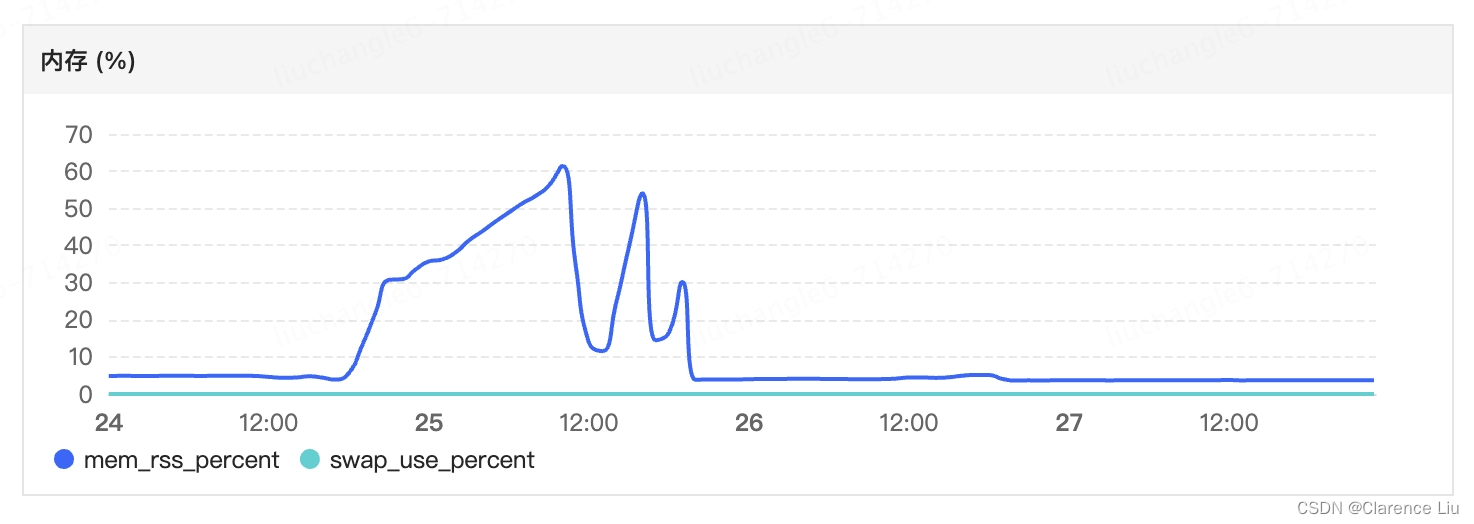

The OOM problem

- After this feature went live, memory usage kept climbing, as shown below (the sharp drops are where I restarted the service):

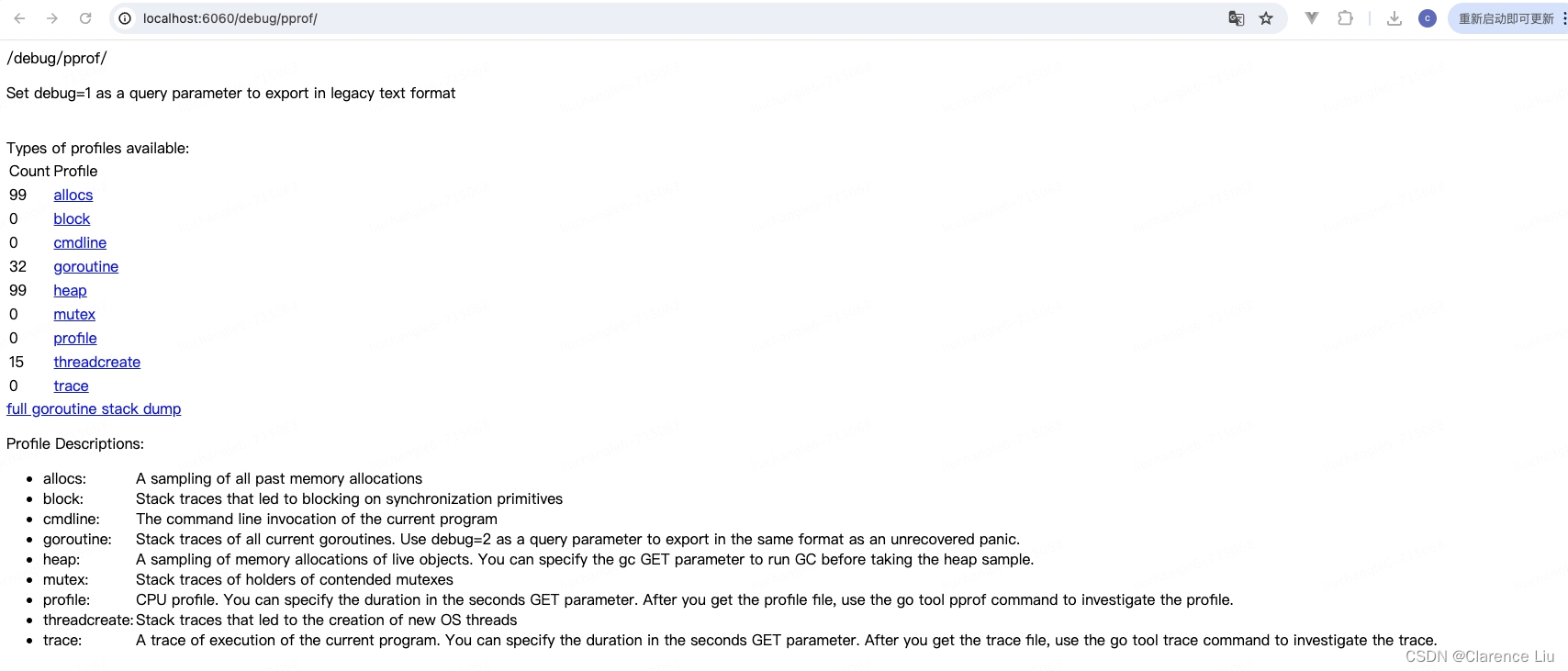

- Use `pprof` to track it down. Add the following to your `main.go`:

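```go
import (
	"net/http"
	_ "net/http/pprof" // registers the /debug/pprof/ handlers on http.DefaultServeMux
)

func main() {
	go func() {
		http.ListenAndServe("localhost:6060", nil)
	}()
	// ... the rest of the service
}
```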

- Then open http://localhost:6060/debug/pprof/ in a browser and you will see the following page:

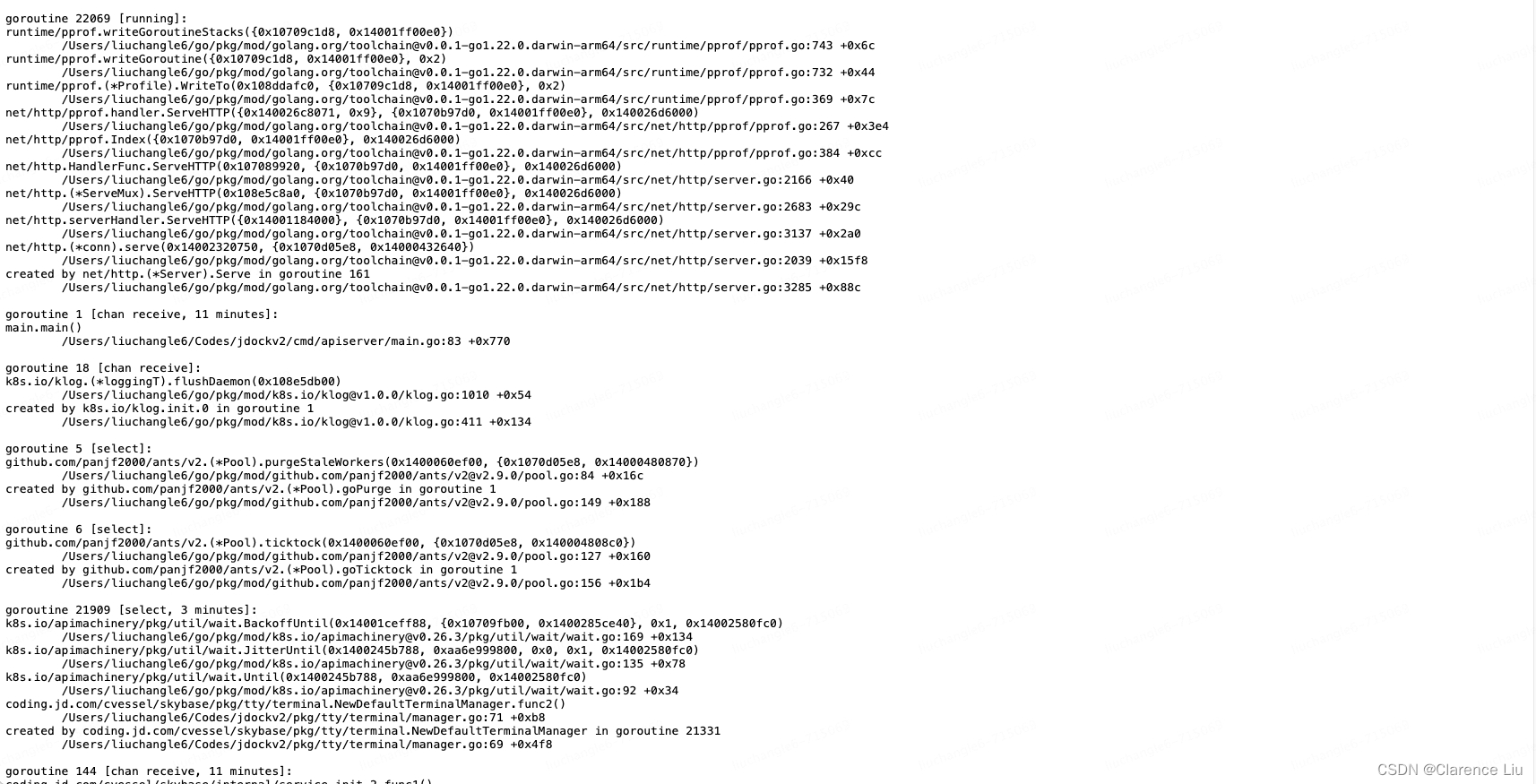

- 点击

full goroutine stack dump,你会看到goroutine的堆栈存储情况,如下图

- 查看之后发现出现了很多的残留

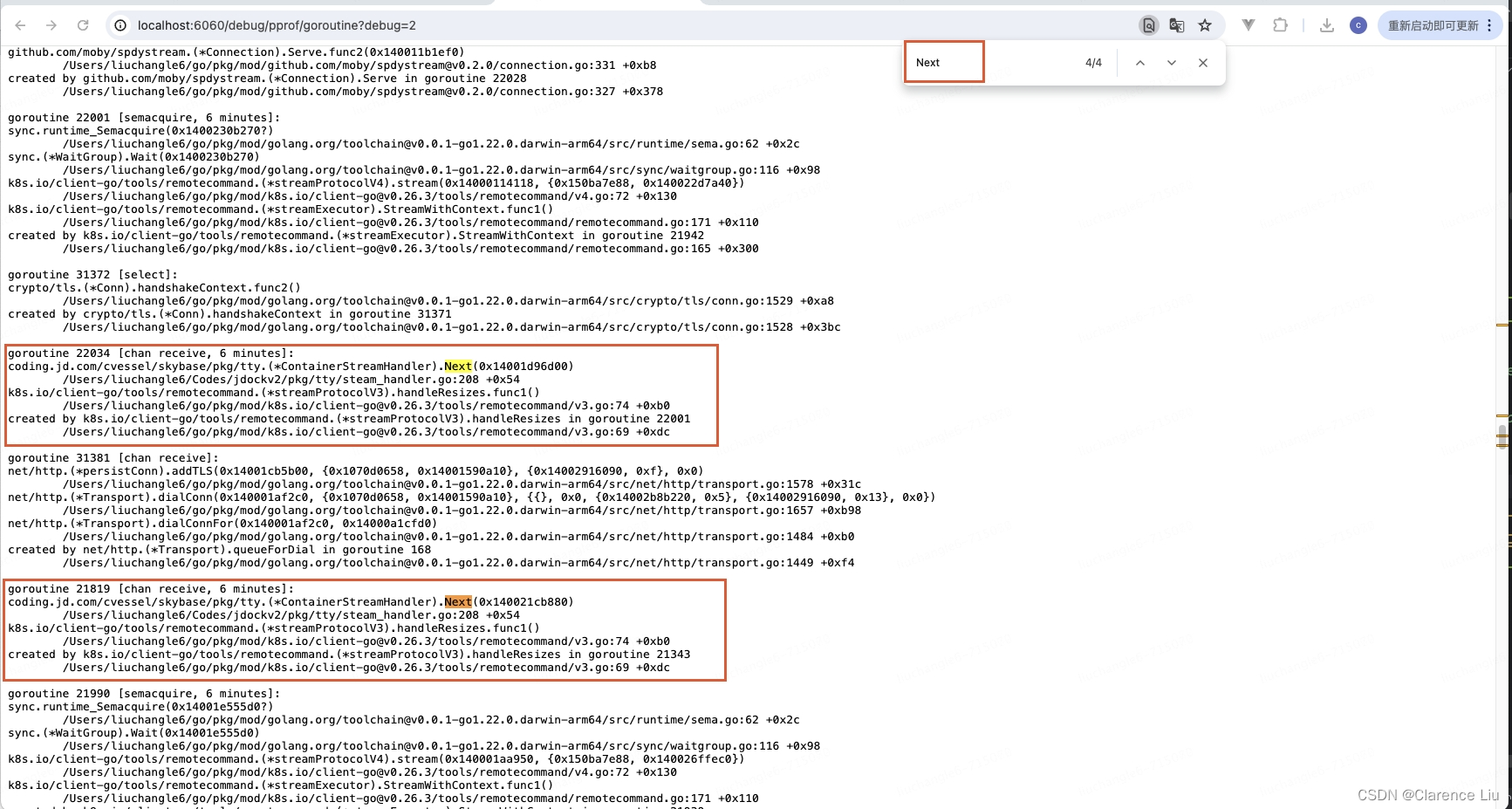

goroutine,出现在Next方法中,如下图

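- The cause is our `Next` implementation: when the connection is closed, it must actively return `nil`, otherwise a goroutine is left behind. The `handleResizes` method above runs a `for` loop and only leaves its goroutine once `Next` returns a `nil` size. My first version is shown below; with it, nothing delivers a `nil` resize event after the browser disconnects, so `Next` blocks on `ResizeEvent` forever and every closed session leaks one goroutine, together with everything it still references.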

```go
// Wrong: once the browser disconnects, this blocks on ResizeEvent forever
func (handler *ContainerStreamHandler) Next() (size *remotecommand.TerminalSize) {
	ret := <-handler.ResizeEvent
	size = &ret
	return
}
```

```go
// Correct
func (handler *ContainerStreamHandler) Next() (size *remotecommand.TerminalSize) {
	select {
	case ret := <-handler.ResizeEvent:
		size = &ret
	case <-handler.WsConn.CloseChan:
		return nil // when the close channel fires, actively return nil
	}
	return
}
```

Questions and discussion are welcome.
