相关链接:

神经网络不确定性综述(Part I)——A survey of uncertainty in deep neural networks-CSDN博客

神经网络不确定性综述(Part II)——Uncertainty estimation_Single deterministic methods-CSDN博客

神经网络不确定性综述(Part III)——Uncertainty estimation_Bayesian neural networks-CSDN博客

神经网络不确定性综述(Part IV)——Uncertainty estimation_Ensemble methods&Test-time augmentation-CSDN博客

神经网络不确定性综述(Part V)——Uncertainty measures and quality-CSDN博客

3. Uncertainty estimation

如前所述,uncertainty的来源有很多,因此我们很难完全排除神经网络中的不确定性,尤其是现实中我们收集的总是真实数据集的一个子集而不能全面覆盖整体,无法知晓数据的真实分布。更糟糕的是,DNN预测结果的uncertainty通常也没办法计算,因为不同的uncertainty没有被精准地建模或者人们根本没有意识到某些uncertainty的存在。这给uncertainty estimation带来了挑战,同时也意味着它的重要性。

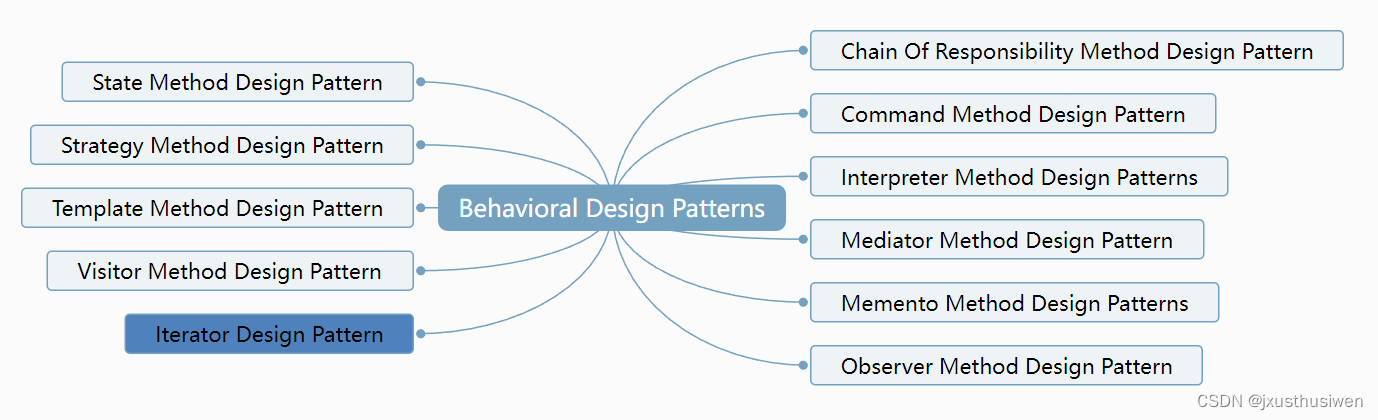

对于不同的uncertainty,data uncertainty通常体现在模型的prediction中,比如分类网络中softmax层的输出或回归网络中对标准差的显式预测。此外,也有一些方法将model/data uncertainty分别进行建模,试图将二者区分开。一般来说,估计不确定性的方法可以根据所使用的深度神经网络的数量(single or multipole)和性质(deterministic or stochastic)分为4种不同的类型。

- Single deterministic methods

Single deterministic methods give the prediction based on one single forward pass within a deterministic network. The uncertainty quantification is either derived by using additional (external) methods or is directly predicted by the network.

- Bayesian methods

Bayesian methods cover all kinds of stochastic DNNs, i.e. DNNs where two forward passes of the same sample generally lead to different results.

- Ensemble methods

Ensemble methods combine the predictions of several different deterministic networks at inference.

- Test-time augmentation methods

Test-time augmentation methods give the prediction based on one single deterministic network but augment the input data at test-time in order to generate several predictions that are used to evaluate the certainty of the prediction.

3.1 Single deterministic methods

对于deterministic neural networks而言,其参数是固定的,重复地向前传播每次得到的结果也将是一样的。量化single deterministic neural networks的方法可以被分为两类,一类是explicitly modeled and trained network另一类是use additional components to estimate uncertainty。第一种将不确定性量化/估计本身融入到了神经网络中,因此这种量化会影响整个训练过程;而第二种则是独立于模型的外部量化手段,不影响模型的训练。这两种方式分别可以被称为internal and external uncertainty quantification approaches.

3.1.1 Internal uncertainty quantification approaches

许多internal的方法都遵循同样一个思路,就是去predict分布的参数而不是直接做point-wise MAP estimation。通常情况下,这些方法的损失函数会考虑the expected divergence between the true distribution and the predicted distribution,例如Evidential Deep Learning损失函数中KL-divergence那一项。

在分类任务中,模型的输出通常代表类别概率(取值范围0~1)。对于多分类任务,一般使用soft-max function

其中 代表logits。

对于二分类,一般使用sigmoid function

在前面我们曾经介绍过,data uncertainty可以用模型output的概率分布来表示。而事实上,所得到的这些概率值本身也可以被解释为prediction of data uncertainty,例如,“猫”这一类别得到的输出值为0.8,则我们可以认为有0.8的置信度认为输入是一只猫。然而,soft-max output is often poorly calibrated,并且神经网络通常被认为过度自信over-confident,因此得到的不确定性估计往往并不精确。有研究发现,由ReLU和soft-max组合的神经网络对与训练数据分布偏差更大的那些样本的过度自信情况更严重。此外,soft-max无法与model uncertainty建立联系,导致其无法正确处理out-of-distribution的样本。一个直观的例子是,即使我们向“猫狗分类器”输入一张“鸟”的图片,模型仍然会从猫和狗二者之间进行判断,这显然是不合理的。下图是LeNet在MNIST数据集上过度自信的例子。

另一种方法同样考虑logit magnitude,借助Dirichlet distribution来估计不确定性。Dirichlet distribution is the conjugate prior of categorical distribution,因此可以视为distribution over categorical distributions即“分布的分布”。The density of Dirichlet distribution is defined by

where is the gamma function,

are called the concentration parameters, and the scalar

is the precision of the distribution. In practice, the concentrations

are derived by applying a strictly positive transformation, such as the exponential function, to the logit values.

A higher concentration value leads to a sharper Dirichlet distribution,如下图所示。

k-class categorical distribution对应于一个(k-1)-dimensional simplex。这个单纯形的每个顶点代表在某一个class具有full probability mass,而顶点的凸组合(convex combination)则对应于多个classes上的概率分布。

Malinin and Gales (2018) argued that a high model uncertainty should lead to a lower precision value and therefore to a flat distribution over the whole simplex since the network is not familiar with the data. In contrast to this, data uncertainty should be represented by a sharper but also centered distribution, since the network can handle the data, but cannot give a clear class preference. ——较高的model uncertainty应该对应于一个较低的precision,此时单纯形有一个较为平坦的分布,代表模型没见过输入数据;与此相反,data uncertainty应该有较高的precision,但是不集中在某个顶点而是集中在单纯形的中央,意味着模型虽然可以处理输入数据但不能给出明确的类别偏好。(NOTE: OOD数据造成的不确定性属于model uncertainty)

近年来,Dirichlet distribution被用在Dirichlet Prior Networks以及Evidential Neural Networks中。与常见的网络不同,这些网络的直接输出并不是probabilities,而是Dirichlet distribution的参数,而categorical distribution可以根据这些参数被进一步推导得到。

- Prior networks

由于对Prior networks尚缺乏调研,此处直接将原文附于下方:

Prior networks are trained in a multi-task way with the goal of (1) minimizing the expected Kullback–Leibler (KL) divergence between the predictions of in-distribution data and a sharp Dirichlet distribution and between (2) a flat Dirichlet distribution and the predictions of out-of-distribution data (Malinin and Gales 2018). Besides the main motivation of a better separation between in-distribution and OOD samples, these approaches also improve the separation between the confidence of correct and incorrect predictions, as was shown by Tsiligkaridis (2021a). As a follow-up, (Malinin and Gales 2019) discussed that for the case that the data uncertainty is high, the forward definition of the KL-divergence can lead to an undesirable multi-model target distribution. In order to avoid this, they reformulated the loss using the reverse KL divergence. The experiments showed improved results in the uncertainty estimation as well as for the adversarial robustness. Tsiligkaridis (2021b) extended the Dirichlet network approach by a new loss function that aims at minimizing an upper bound on the expected error based on the -norm, i.e. optimizing an expected worst-case upper bound. Wu et al. (2019) argued that using a mixture of Dirichlet distributions gives much more flexibility in approximating the posterior distribution. Therefore, an approach where the network predicts the parameters for a mixture of

Dirichlet distributions was suggested. For this, the network logits represent the parameters for

Dirichlet distributions and additionally

weights

with the constraint

are optimized. Nandy et al. (2020) analytically showed that for in-domain samples with high data uncertainty, the Dirichlet distribution predicted for a false prediction is often flatter than for a correct prediction. They argued that this makes it harder to differentiate between in- and out-of-distribution predictions and suggested a regularization term for maximizing the gap between in- and out-of-distribution samples.

- Evidential neural networks

Evidential neural networks同样把视角放在了一个single Dirichlet network的参数优化上,它使用Dempster-Shafer证据理论,借助主观逻辑subjective logic将网络输出的logits视为multinomial opinions or beliefs从而得到网络的loss function。在这个情况下,通过将belief赋予给整个框架,使网络具备了表述“I don’t know”的能力。

此外,Zhao et al. (2019)进一步区分了vacuity以及dissonance的情形以更好地区分in-distribution与out-of-distribution的样本;

Amini et al.(2020) transferred the idea of evidential neural networks from classification tasks to regression tasks by learning the parameters of an evidential Normal Inverse Gamma distribution over an underlying Normal distribution. Charpentier et al (2020) avoided the need of OOD data for the training process by using normalizing flows to learn a distribution over a latent space for each class. A new input sample is projected onto this latent space and a Dirichlet distribution is parameterized based on the class-wise densities of the received latent point.

类似的工作还有很多,并且除分类任务外,还有研究关注于回归任务,请读者自行阅读相关文献。

3.1.2 External uncertainty quantification approaches

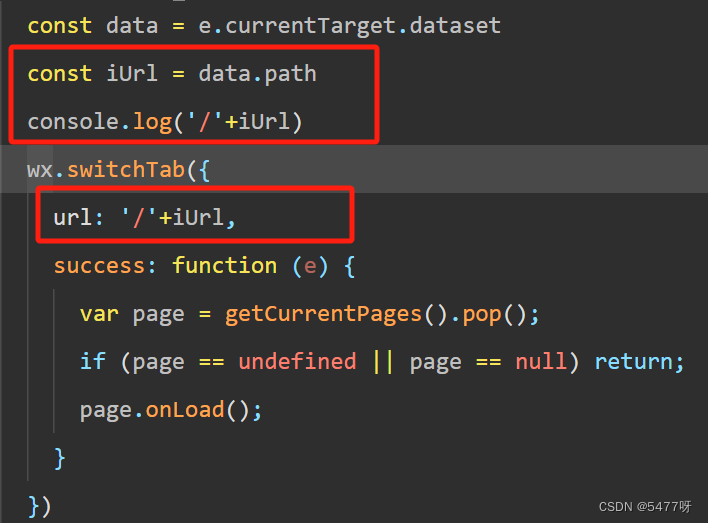

与internal approaches相对应,由于external approaches对不确定性的评估是与原始预测任务是分离的,因此它不会影响模型本身的预测。Raghu et al. (2019) argued that when both tasks, the prediction, and the uncertainty quantification, are done by one single method, the uncertainty estimation is biased by the actual prediction task.——当一个方法既做预测又做不确定性估计的时候,后者将被前者影响而产生估计偏差。因此他们建议使用两个网络分别完成prediction与uncertainty quantification,其中第二个网络的输入是第一个网络的输出(prediction)。

以下摘自原论文:

Similarly, Ramalho and Miranda (2020) introduced an additional neural network for uncertainty estimation. But in contrast to Raghu et al. (2019), the representation space of the training data is considered and the density around a given test sample is evaluated. The additional neural network uses this training data density in order to predict whether the main network’s estimate is expected to be correct or false. Hsu et al. (2020) detected out-of-distribution examples in classification tasks at test-time by predicting total probabilities for each class, in addition to the categorical distribution given by the softmax output. The class-wise total probability is predicted by applying the sigmoid function to the network’s logits. Based on these total probabilities, OOD examples can be identified as those with low class probabilities for all classes. In contrast to this, (Oberdiek et al. 2018) took the sensitivity of the model, i.e. the model’s slope, into account by using gradient metrics for the uncertainty quantification in classification tasks. Lee and AlRegib (2020) applied a similar idea but made use of back-propagated gradients. In their work, they presented state-of-the-art results on out-of-distribution and corrupted input detection.

3.1.3 Summing up single deterministic methods

相较于其它principle,single deterministic methods在训练时只需一个网络,训练过程更加sufficient。此外,single deterministic methods通常可以应用于pre-trained networks,只需完成一个或最多两个前向过程。这些网络虽然会包含更复杂的损失函数从而减慢训练过程,或者可能需要external components。但总的来说,single deterministic methods仍然比基于ensemble、Bayesian和test-time augmentation的方法更高效。但是这些方法依赖于单一的opinion,因此对underlying network architecture, training procedure, and training data非常敏感。