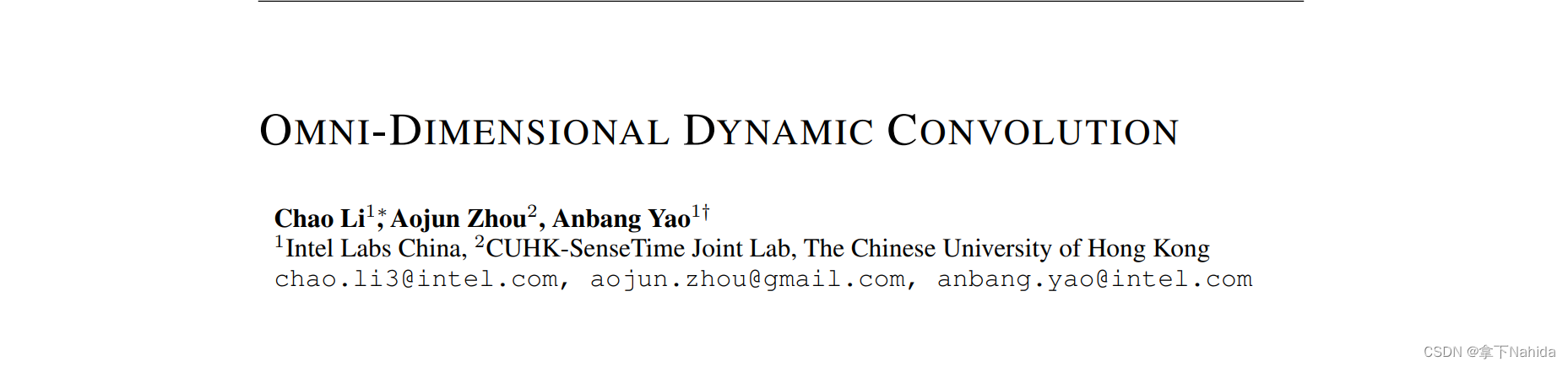

一、导言

提出了一种称为全维度动态卷积(ODConv)的新颖设计,旨在克服当前动态卷积方法的局限性并提升卷积神经网络(CNN)的性能。以下是该论文提出的全维度动态卷积设计的优点和存在的缺点分析:

优点:

-

增强特征学习能力: ODConv通过一个创新的多维度注意力机制,为卷积核空间的所有四个维度(即空间大小、输入通道数、输出通道数及卷积核数量)并行地学习互补的注意力权重。这使得每个卷积核能够根据输入特征的不同,动态调整其权重,从而更有效地捕捉上下文信息,显著增强了基本卷积操作的特征提取能力。

-

模型效率与准确性平衡: 即使仅使用单个卷积核,ODConv也能与或超越现有的多核动态卷积方法,在保持轻量级的同时实现更高的准确率。例如,在ImageNet数据集上,它为MobileNetV2带来了3.77%到5.71%的绝对top-1精度提升,对于ResNet系列则有1.86%到3.72%的提升。

-

广泛适用性: ODConv作为标准卷积的直接替代品,可以方便地集成到多种CNN架构中,不仅适用于轻量级网络,也包括大型网络,如ResNet101,并且在这些网络上显示出有前景的结果,如为ResNet101带来1.57%的top-1精度增益。

-

全面的注意力机制: 研究发现,ODConv学习到的四种注意力(空间注意力αsi、通道注意力αci、滤波器注意力αfi、权重注意力αwi)是互补的,它们共同作用能有效解决单个注意力机制可能忽视的问题,提高了模型的决策能力。

缺点:

-

计算成本增加: 引入四类卷积核注意力虽然提升了模型性能,但也导致了FLOPs的轻微增长和推理时间的额外延迟。与参考方法相比,相同模型尺寸下,ODConv的训练成本更高。

-

超参数优化挑战: 虽然提供了广泛的消融研究来分析不同超参数对ODConv的影响,但应用于所有骨干网络的超参数组合并非针对每个特定网络结构的最优设置。这意味着需要进一步探索,以找到适合不同应用场景的速度与精度需求的最佳超参数组合。

-

未充分探索深层大网络潜力: 论文主要集中在如ResNet101这样的相对较大的网络上的应用,而对于更深或更大的网络结构,由于计算资源限制,其潜力尚未被充分探索。

综上所述,全维度动态卷积(ODConv)提供了一种更通用且优雅的动态卷积设计,显著提升了模型的性能,尤其是在准确性和效率之间找到了良好的平衡。然而,它也面临着计算成本增加和超参数优化的挑战,未来工作可聚焦于减少计算负担并探索更广泛网络架构下的应用潜力。

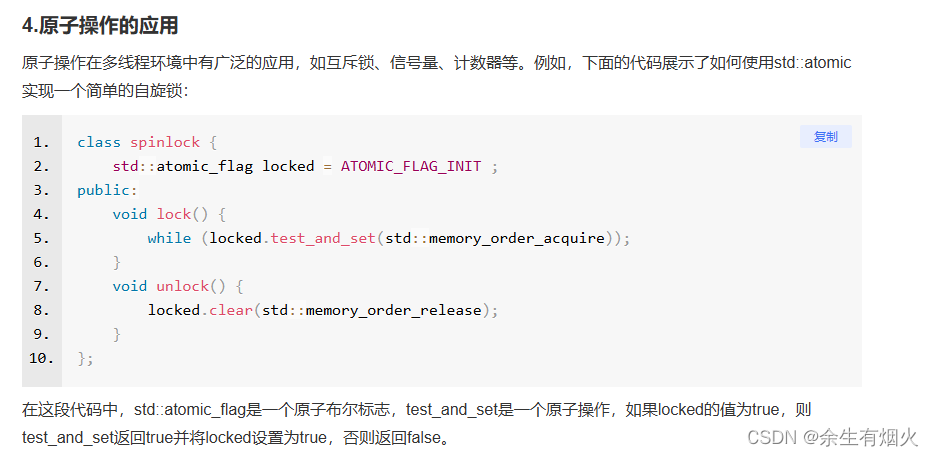

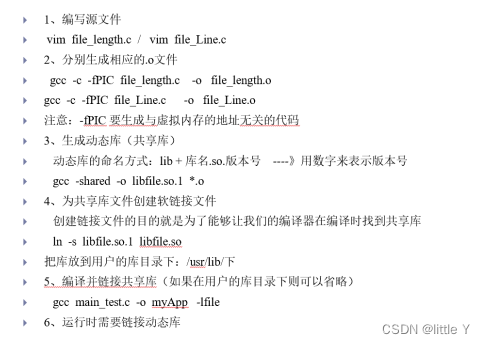

二、准备工作

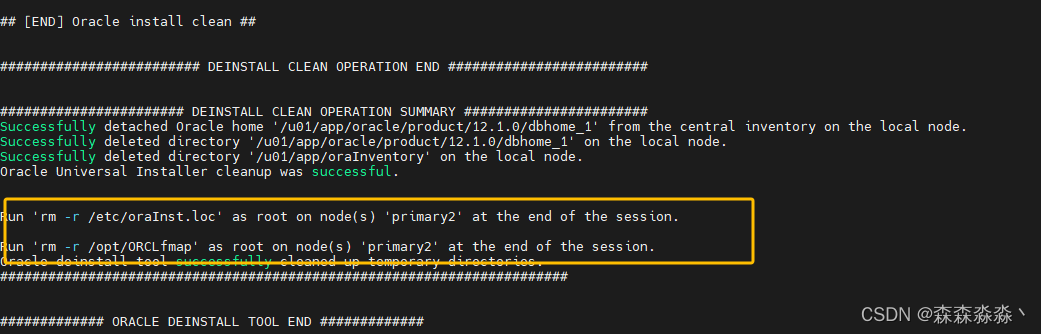

首先在YOLOv5/v7的models文件夹下新建文件odconv.py,导入如下代码

from models.common import *class ODConv(nn.Sequential):def __init__(self, in_planes, out_planes, kernel_size=3, stride=1, groups=1, norm_layer=nn.BatchNorm2d,reduction=0.0625, kernel_num=1):padding = (kernel_size - 1) // 2super(ODConv, self).__init__(ODConv2d(in_planes, out_planes, kernel_size, stride, padding, groups=groups,reduction=reduction, kernel_num=kernel_num),norm_layer(out_planes),nn.SiLU())class Attention(nn.Module):def __init__(self, in_planes, out_planes, kernel_size,groups=1,reduction=0.0625,kernel_num=4,min_channel=16):super(Attention, self).__init__()attention_channel = max(int(in_planes * reduction), min_channel)self.kernel_size = kernel_sizeself.kernel_num = kernel_numself.temperature = 1.0self.avgpool = nn.AdaptiveAvgPool2d(1)self.fc = nn.Conv2d(in_planes, attention_channel, 1, bias=False)self.bn = nn.BatchNorm2d(attention_channel)self.relu = nn.ReLU(inplace=True)self.channel_fc = nn.Conv2d(attention_channel, in_planes, 1, bias=True)self.func_channel = self.get_channel_attentionif in_planes == groups and in_planes == out_planes: # depth-wise convolutionself.func_filter = self.skipelse:self.filter_fc = nn.Conv2d(attention_channel, out_planes, 1, bias=True)self.func_filter = self.get_filter_attentionif kernel_size == 1: # point-wise convolutionself.func_spatial = self.skipelse:self.spatial_fc = nn.Conv2d(attention_channel, kernel_size * kernel_size, 1, bias=True)self.func_spatial = self.get_spatial_attentionif kernel_num == 1:self.func_kernel = self.skipelse:self.kernel_fc = nn.Conv2d(attention_channel, kernel_num, 1, bias=True)self.func_kernel = self.get_kernel_attentionself.bn_1 = nn.LayerNorm([attention_channel, 1, 1])self._initialize_weights()def _initialize_weights(self):for m in self.modules():if isinstance(m, nn.Conv2d):nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')if m.bias is not None:nn.init.constant_(m.bias, 0)if isinstance(m, nn.BatchNorm2d):nn.init.constant_(m.weight, 1)nn.init.constant_(m.bias, 0)def update_temperature(self, temperature):self.temperature = temperature@staticmethoddef skip(_):return 1.0def get_channel_attention(self, x):channel_attention = torch.sigmoid(self.channel_fc(x).view(x.size(0), -1, 1, 1) / self.temperature)return channel_attentiondef get_filter_attention(self, x):filter_attention = torch.sigmoid(self.filter_fc(x).view(x.size(0), -1, 1, 1) / self.temperature)return filter_attentiondef get_spatial_attention(self, x):spatial_attention = self.spatial_fc(x).view(x.size(0), 1, 1, 1, self.kernel_size, self.kernel_size)spatial_attention = torch.sigmoid(spatial_attention / self.temperature)return spatial_attentiondef get_kernel_attention(self, x):kernel_attention = self.kernel_fc(x).view(x.size(0), -1, 1, 1, 1, 1)kernel_attention = F.softmax(kernel_attention / self.temperature, dim=1)return kernel_attentiondef forward(self, x):x = self.avgpool(x)x = self.fc(x)x = self.bn_1(x)x = self.relu(x)return self.func_channel(x), self.func_filter(x), self.func_spatial(x), self.func_kernel(x)class ODConv2d(nn.Module):def __init__(self,in_planes,out_planes,kernel_size=3,stride=1,padding=0,dilation=1,groups=1,reduction=0.0625,kernel_num=1):super(ODConv2d, self).__init__()self.in_planes = in_planesself.out_planes = out_planesself.kernel_size = kernel_sizeself.stride = strideself.padding = paddingself.dilation = dilationself.groups = groupsself.kernel_num = kernel_numself.attention = Attention(in_planes, out_planes, kernel_size, groups=groups,reduction=reduction, kernel_num=kernel_num)self.weight = nn.Parameter(torch.randn(kernel_num, out_planes, in_planes // groups, kernel_size, kernel_size),requires_grad=True)self._initialize_weights()if self.kernel_size == 1 and self.kernel_num == 1:self._forward_impl = self._forward_impl_pw1xelse:self._forward_impl = self._forward_impl_commondef _initialize_weights(self):for i in range(self.kernel_num):nn.init.kaiming_normal_(self.weight[i], mode='fan_out', nonlinearity='relu')def update_temperature(self, temperature):self.attention.update_temperature(temperature)def _forward_impl_common(self, x):channel_attention, filter_attention, spatial_attention, kernel_attention = self.attention(x)batch_size, in_planes, height, width = x.size()x = x * channel_attentionx = x.reshape(1, -1, height, width)aggregate_weight = spatial_attention * kernel_attention * self.weight.unsqueeze(dim=0)aggregate_weight = torch.sum(aggregate_weight, dim=1).view([-1, self.in_planes // self.groups, self.kernel_size, self.kernel_size])output = F.conv2d(x, weight=aggregate_weight, bias=None, stride=self.stride, padding=self.padding,dilation=self.dilation, groups=self.groups * batch_size)output = output.view(batch_size, self.out_planes, output.size(-2), output.size(-1))output = output * filter_attentionreturn outputdef _forward_impl_pw1x(self, x):channel_attention, filter_attention, spatial_attention, kernel_attention = self.attention(x)x = x * channel_attentionoutput = F.conv2d(x, weight=self.weight.squeeze(dim=0), bias=None, stride=self.stride, padding=self.padding,dilation=self.dilation, groups=self.groups)output = output * filter_attentionreturn outputdef forward(self, x):return self._forward_impl(x)

其次在在YOLOv5/v7项目文件下的models/yolo.py中在文件首部添加代码

from models.odconv import ODConv并搜索def parse_model(d, ch)

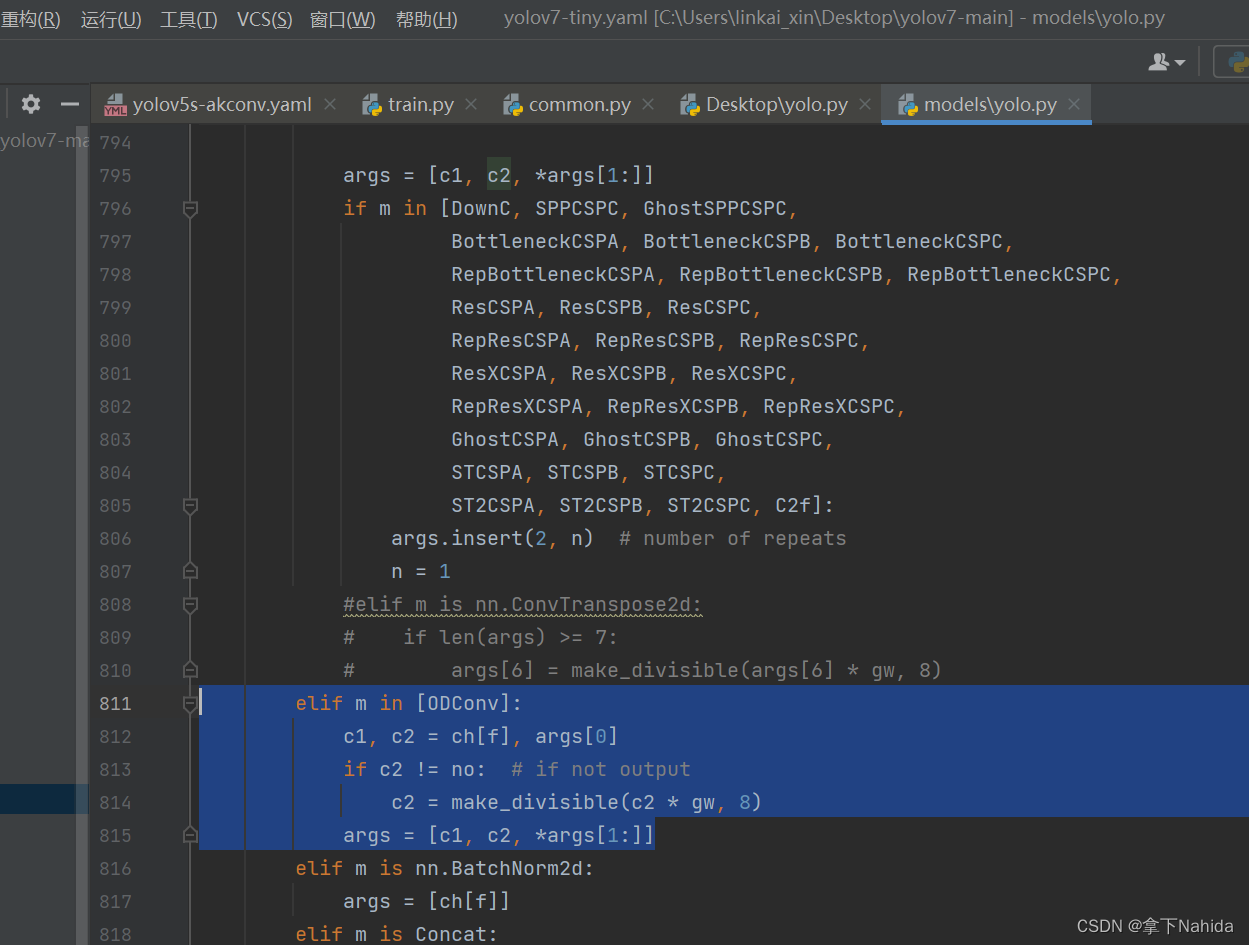

定位到如下行添加以下代码

elif m in [ODConv]:c1, c2 = ch[f], args[0]if c2 != no: # if not outputc2 = make_divisible(c2 * gw, 8)args = [c1, c2, *args[1:]]

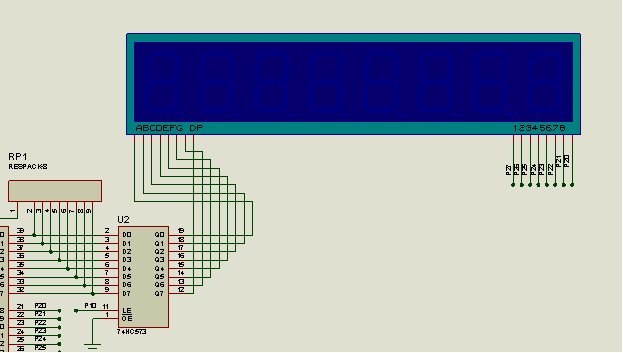

三、YOLOv7-tiny改进工作

完成二后,在YOLOv7项目文件下的models文件夹下创建新的文件yolov7-tiny-odconv.yaml,导入如下代码。

# parameters

nc: 80 # number of classes

depth_multiple: 1.0 # model depth multiple

width_multiple: 1.0 # layer channel multiple# anchors

anchors:- [10,13, 16,30, 33,23] # P3/8- [30,61, 62,45, 59,119] # P4/16- [116,90, 156,198, 373,326] # P5/32# yolov7-tiny backbone

backbone:# [from, number, module, args] c2, k=1, s=1, p=None, g=1, act=True[[-1, 1, Conv, [32, 3, 2, None, 1, nn.LeakyReLU(0.1)]], # 0-P1/2 [-1, 1, Conv, [64, 3, 2, None, 1, nn.LeakyReLU(0.1)]], # 1-P2/4 [-1, 1, Conv, [32, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-2, 1, Conv, [32, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [32, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [32, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[[-1, -2, -3, -4], 1, Concat, [1]],[-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 7[-1, 1, MP, []], # 8-P3/8[-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-2, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[[-1, -2, -3, -4], 1, Concat, [1]],[-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 14[-1, 1, MP, []], # 15-P4/16[-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-2, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[[-1, -2, -3, -4], 1, Concat, [1]],[-1, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 21[-1, 1, MP, []], # 22-P5/32[-1, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-2, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [256, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [256, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[[-1, -2, -3, -4], 1, Concat, [1]],[-1, 1, Conv, [512, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 28]# yolov7-tiny head

head:[[-1, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-2, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, SP, [5]],[-2, 1, SP, [9]],[-3, 1, SP, [13]],[[-1, -2, -3, -4], 1, Concat, [1]],[-1, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[[-1, -7], 1, Concat, [1]],[-1, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 37[-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[21, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # route backbone P4[[-1, -2], 1, Concat, [1]],[-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-2, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[[-1, -2, -3, -4], 1, Concat, [1]],[-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 47[-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[14, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # route backbone P3[[-1, -2], 1, Concat, [1]],[-1, 1, Conv, [32, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-2, 1, Conv, [32, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [32, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [32, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[[-1, -2, -3, -4], 1, Concat, [1]],[-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 57[-1, 1, Conv, [128, 3, 2, None, 1, nn.LeakyReLU(0.1)]],[[-1, 47], 1, Concat, [1]],[-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-2, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[[-1, -2, -3, -4], 1, Concat, [1]],[-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 65[-1, 1, Conv, [256, 3, 2, None, 1, nn.LeakyReLU(0.1)]],[[-1, 37], 1, Concat, [1]],[-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-2, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[-1, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[[-1, -2, -3, -4], 1, Concat, [1]],[-1, 1, ODConv, [256, 1, 1]], # 73[57, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[65, 1, Conv, [256, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[73, 1, Conv, [512, 3, 1, None, 1, nn.LeakyReLU(0.1)]],[[74,75,76], 1, IDetect, [nc, anchors]], # Detect(P3, P4, P5)]

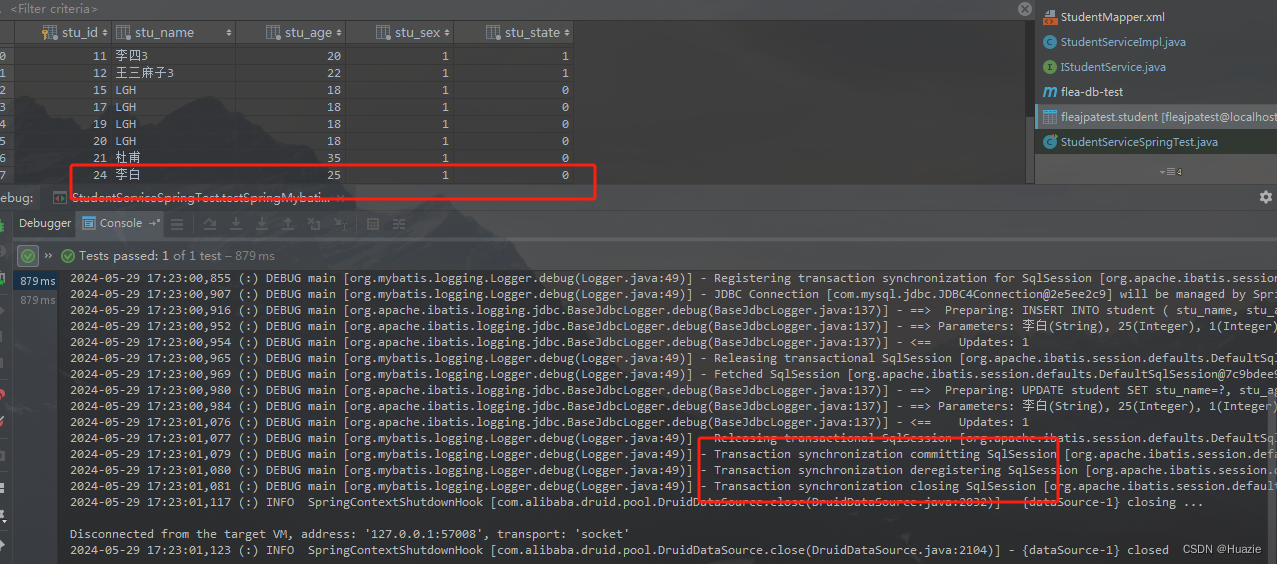

from n params module arguments 0 -1 1 928 models.common.Conv [3, 32, 3, 2, None, 1, LeakyReLU(negative_slope=0.1)]1 -1 1 18560 models.common.Conv [32, 64, 3, 2, None, 1, LeakyReLU(negative_slope=0.1)]2 -1 1 2112 models.common.Conv [64, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]3 -2 1 2112 models.common.Conv [64, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]4 -1 1 9280 models.common.Conv [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]5 -1 1 9280 models.common.Conv [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]6 [-1, -2, -3, -4] 1 0 models.common.Concat [1] 7 -1 1 8320 models.common.Conv [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]8 -1 1 0 models.common.MP [] 9 -1 1 4224 models.common.Conv [64, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]10 -2 1 4224 models.common.Conv [64, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]11 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]12 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]13 [-1, -2, -3, -4] 1 0 models.common.Concat [1] 14 -1 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]15 -1 1 0 models.common.MP [] 16 -1 1 16640 models.common.Conv [128, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]17 -2 1 16640 models.common.Conv [128, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]18 -1 1 147712 models.common.Conv [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]19 -1 1 147712 models.common.Conv [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]20 [-1, -2, -3, -4] 1 0 models.common.Concat [1] 21 -1 1 131584 models.common.Conv [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]22 -1 1 0 models.common.MP [] 23 -1 1 66048 models.common.Conv [256, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]24 -2 1 66048 models.common.Conv [256, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]25 -1 1 590336 models.common.Conv [256, 256, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]26 -1 1 590336 models.common.Conv [256, 256, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]27 [-1, -2, -3, -4] 1 0 models.common.Concat [1] 28 -1 1 525312 models.common.Conv [1024, 512, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]29 -1 1 131584 models.common.Conv [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]30 -2 1 131584 models.common.Conv [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]31 -1 1 0 models.common.SP [5] 32 -2 1 0 models.common.SP [9] 33 -3 1 0 models.common.SP [13] 34 [-1, -2, -3, -4] 1 0 models.common.Concat [1] 35 -1 1 262656 models.common.Conv [1024, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]36 [-1, -7] 1 0 models.common.Concat [1] 37 -1 1 131584 models.common.Conv [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]38 -1 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]39 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest'] 40 21 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]41 [-1, -2] 1 0 models.common.Concat [1] 42 -1 1 16512 models.common.Conv [256, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]43 -2 1 16512 models.common.Conv [256, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]44 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]45 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]46 [-1, -2, -3, -4] 1 0 models.common.Concat [1] 47 -1 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]48 -1 1 8320 models.common.Conv [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]49 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest'] 50 14 1 8320 models.common.Conv [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]51 [-1, -2] 1 0 models.common.Concat [1] 52 -1 1 4160 models.common.Conv [128, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]53 -2 1 4160 models.common.Conv [128, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]54 -1 1 9280 models.common.Conv [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]55 -1 1 9280 models.common.Conv [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]56 [-1, -2, -3, -4] 1 0 models.common.Concat [1] 57 -1 1 8320 models.common.Conv [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]58 -1 1 73984 models.common.Conv [64, 128, 3, 2, None, 1, LeakyReLU(negative_slope=0.1)]59 [-1, 47] 1 0 models.common.Concat [1] 60 -1 1 16512 models.common.Conv [256, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]61 -2 1 16512 models.common.Conv [256, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]62 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]63 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]64 [-1, -2, -3, -4] 1 0 models.common.Concat [1] 65 -1 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]66 -1 1 295424 models.common.Conv [128, 256, 3, 2, None, 1, LeakyReLU(negative_slope=0.1)]67 [-1, 37] 1 0 models.common.Concat [1] 68 -1 1 65792 models.common.Conv [512, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]69 -2 1 65792 models.common.Conv [512, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]70 -1 1 147712 models.common.Conv [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]71 -1 1 147712 models.common.Conv [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]72 [-1, -2, -3, -4] 1 0 models.common.Concat [1] 73 -1 1 173440 models.odconv.ODConv [512, 256, 1, 1] 74 57 1 73984 models.common.Conv [64, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]75 65 1 295424 models.common.Conv [128, 256, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]76 73 1 1180672 models.common.Conv [256, 512, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]77 [74, 75, 76] 1 17132 models.yolo.IDetect [1, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [128, 256, 512]]Model Summary: 271 layers, 6056844 parameters, 6056844 gradients, 13.1 GFLOPS运行后若打印出如上文本代表改进成功。

四、YOLOv5s改进工作

完成二后,在YOLOv5项目文件下的models文件夹下创建新的文件yolov5s-odconv.yaml,导入如下代码。

# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:- [10,13, 16,30, 33,23] # P3/8- [30,61, 62,45, 59,119] # P4/16- [116,90, 156,198, 373,326] # P5/32# YOLOv5 v6.0 backbone

backbone:# [from, number, module, args][[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2[-1, 1, Conv, [128, 3, 2]], # 1-P2/4[-1, 3, C3, [128]],[-1, 1, Conv, [256, 3, 2]], # 3-P3/8[-1, 6, C3, [256]],[-1, 1, Conv, [512, 3, 2]], # 5-P4/16[-1, 9, C3, [512]],[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32[-1, 3, C3, [1024]],[-1, 1, SPPF, [1024, 5]], # 9]# YOLOv5 v6.0 head

head:[[-1, 1, Conv, [512, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 6], 1, Concat, [1]], # cat backbone P4[-1, 3, C3, [512, False]], # 13[-1, 1, Conv, [256, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 4], 1, Concat, [1]], # cat backbone P3[-1, 3, C3, [256, False]], # 17 (P3/8-small)[-1, 1, Conv, [256, 3, 2]],[[-1, 14], 1, Concat, [1]], # cat head P4[-1, 3, C3, [512, False]], # 20 (P4/16-medium)[-1, 1, ODConv, [512, 3, 2]],[[-1, 10], 1, Concat, [1]], # cat head P5[-1, 3, C3, [1024, False]], # 23 (P5/32-large)[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)]

from n params module arguments 0 -1 1 3520 models.common.Conv [3, 32, 6, 2, 2] 1 -1 1 18560 models.common.Conv [32, 64, 3, 2] 2 -1 1 18816 models.common.C3 [64, 64, 1] 3 -1 1 73984 models.common.Conv [64, 128, 3, 2] 4 -1 2 115712 models.common.C3 [128, 128, 2] 5 -1 1 295424 models.common.Conv [128, 256, 3, 2] 6 -1 3 625152 models.common.C3 [256, 256, 3] 7 -1 1 1180672 models.common.Conv [256, 512, 3, 2] 8 -1 1 1182720 models.common.C3 [512, 512, 1] 9 -1 1 656896 models.common.SPPF [512, 512, 5] 10 -1 1 131584 models.common.Conv [512, 256, 1, 1] 11 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest'] 12 [-1, 6] 1 0 models.common.Concat [1] 13 -1 1 361984 models.common.C3 [512, 256, 1, False] 14 -1 1 33024 models.common.Conv [256, 128, 1, 1] 15 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest'] 16 [-1, 4] 1 0 models.common.Concat [1] 17 -1 1 90880 models.common.C3 [256, 128, 1, False] 18 -1 1 147712 models.common.Conv [128, 128, 3, 2] 19 [-1, 14] 1 0 models.common.Concat [1] 20 -1 1 296448 models.common.C3 [256, 256, 1, False] 21 -1 1 603353 models.odconv.ODConv [256, 256, 3, 2] 22 [-1, 10] 1 0 models.common.Concat [1] 23 -1 1 1182720 models.common.C3 [512, 512, 1, False] 24 [17, 20, 23] 1 16182 models.yolo.Detect [1, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [128, 256, 512]]Model Summary: 279 layers, 7035343 parameters, 7035343 gradients, 15.5 GFLOPs运行后若打印出如上文本代表改进成功。

五、YOLOv5n改进工作

完成二后,在YOLOv5项目文件下的models文件夹下创建新的文件yolov5n-odconv.yaml,导入如下代码。

# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.25 # layer channel multiple

anchors:- [10,13, 16,30, 33,23] # P3/8- [30,61, 62,45, 59,119] # P4/16- [116,90, 156,198, 373,326] # P5/32# YOLOv5 v6.0 backbone

backbone:# [from, number, module, args][[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2[-1, 1, Conv, [128, 3, 2]], # 1-P2/4[-1, 3, C3, [128]],[-1, 1, Conv, [256, 3, 2]], # 3-P3/8[-1, 6, C3, [256]],[-1, 1, Conv, [512, 3, 2]], # 5-P4/16[-1, 9, C3, [512]],[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32[-1, 3, C3, [1024]],[-1, 1, SPPF, [1024, 5]], # 9]# YOLOv5 v6.0 head

head:[[-1, 1, Conv, [512, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 6], 1, Concat, [1]], # cat backbone P4[-1, 3, C3, [512, False]], # 13[-1, 1, Conv, [256, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 4], 1, Concat, [1]], # cat backbone P3[-1, 3, C3, [256, False]], # 17 (P3/8-small)[-1, 1, Conv, [256, 3, 2]],[[-1, 14], 1, Concat, [1]], # cat head P4[-1, 3, C3, [512, False]], # 20 (P4/16-medium)[-1, 1, ODConv, [512, 3, 2]],[[-1, 10], 1, Concat, [1]], # cat head P5[-1, 3, C3, [1024, False]], # 23 (P5/32-large)[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)]

from n params module arguments 0 -1 1 1760 models.common.Conv [3, 16, 6, 2, 2] 1 -1 1 4672 models.common.Conv [16, 32, 3, 2] 2 -1 1 4800 models.common.C3 [32, 32, 1] 3 -1 1 18560 models.common.Conv [32, 64, 3, 2] 4 -1 2 29184 models.common.C3 [64, 64, 2] 5 -1 1 73984 models.common.Conv [64, 128, 3, 2] 6 -1 3 156928 models.common.C3 [128, 128, 3] 7 -1 1 295424 models.common.Conv [128, 256, 3, 2] 8 -1 1 296448 models.common.C3 [256, 256, 1] 9 -1 1 164608 models.common.SPPF [256, 256, 5] 10 -1 1 33024 models.common.Conv [256, 128, 1, 1] 11 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest'] 12 [-1, 6] 1 0 models.common.Concat [1] 13 -1 1 90880 models.common.C3 [256, 128, 1, False] 14 -1 1 8320 models.common.Conv [128, 64, 1, 1] 15 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest'] 16 [-1, 4] 1 0 models.common.Concat [1] 17 -1 1 22912 models.common.C3 [128, 64, 1, False] 18 -1 1 36992 models.common.Conv [64, 64, 3, 2] 19 [-1, 14] 1 0 models.common.Concat [1] 20 -1 1 74496 models.common.C3 [128, 128, 1, False] 21 -1 1 154329 models.odconv.ODConv [128, 128, 3, 2] 22 [-1, 10] 1 0 models.common.Concat [1] 23 -1 1 296448 models.common.C3 [256, 256, 1, False] 24 [17, 20, 23] 1 8118 models.yolo.Detect [1, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [64, 128, 256]]Model Summary: 279 layers, 1771887 parameters, 1771887 gradients, 4.1 GFLOPs运行后打印如上代码说明改进成功。

更多文章产出中,主打简洁和准确,欢迎关注我,共同探讨!