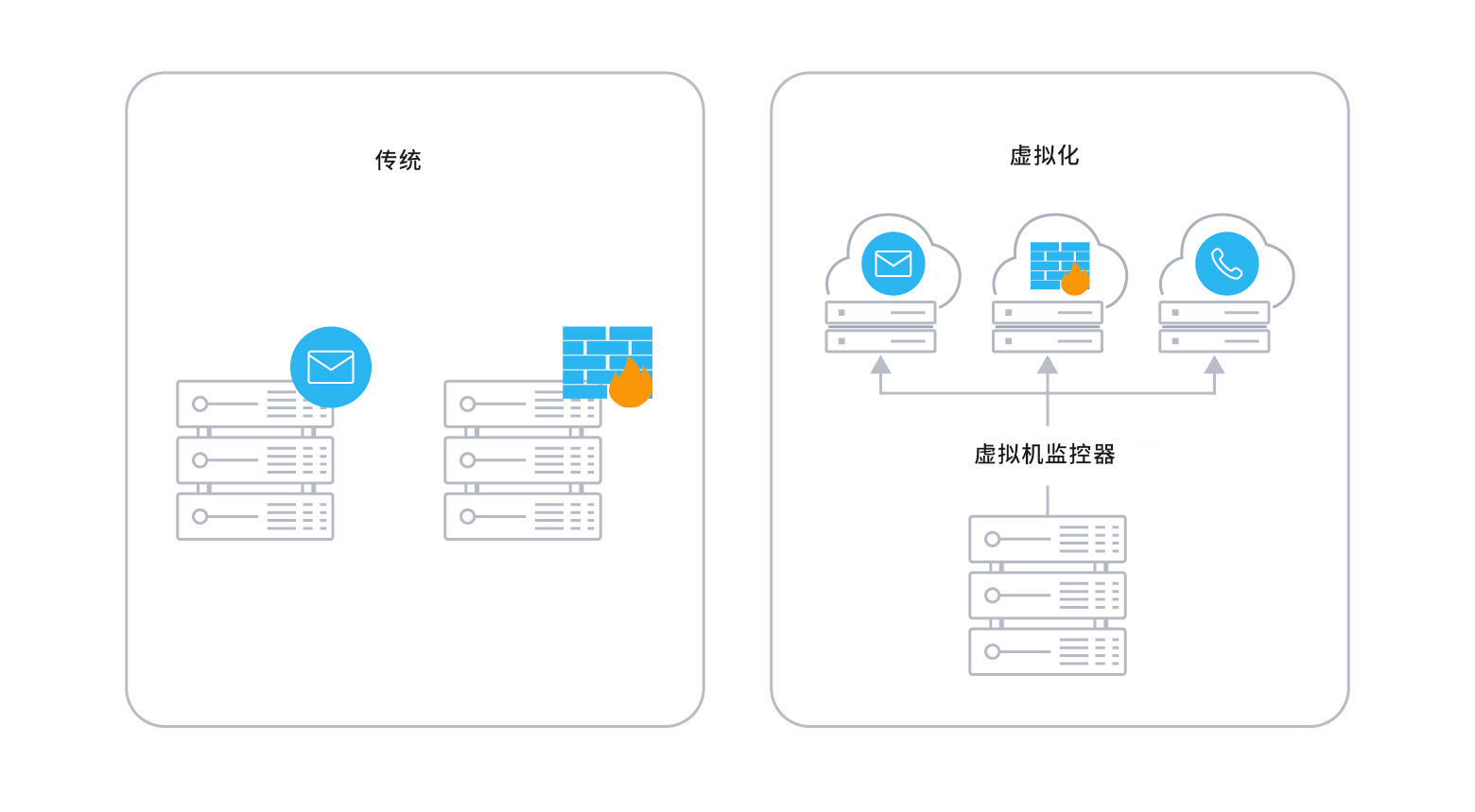

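Compute resource isolation

- Makes high-concurrency architectures easier to maintain and upgrade

- Makes architecture management more flexible, because it is no longer tied to the physical resources of a single node

Technologies:

- Hardware-assisted virtualization

- Container technology

In enterprise deployments, virtualization and containers are rarely run on a single node; they usually run as a cluster.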

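Advantages of containers over hardware-assisted virtualization:

- Lightweight (they use less memory and disk space at runtime)

- Portable (container images follow a standardized image format, so a packaged image can run in any compliant container runtime)

- Fast (a container does not initialize from the kernel up when it starts, and it only needs to launch a small number of processes to run)

Disadvantages:

- Containers by themselves are not reliable (without an orchestrator, nothing reschedules a failed container onto another node)

- No dedicated storage planning

- Limited options for container-to-container communication

Kubernetes is an open-source container orchestration engine for automating the deployment, scaling, and management of containerized applications.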

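Container storage management and network infrastructure management:

Using Docker as an example:

Storage:

bind-mount

volume

Neither storage type handles storage for containerized applications that are deployed across multiple nodes.

Networking:

host: the container uses the host's network stack directly

bridge: the bridge network driver; containers on different bridge networks need extra network configuration to communicate

ipvlan / macvlan: attach containers directly to the host's physical network through a parent interface (macvlan gives each container its own MAC address, ipvlan shares the parent's), so containers on different hosts can reach each other

overlay: supports container communication across hosts

The Docker networking options above either work only within a single node or, for multi-node communication, put considerably more load on the host's network interface. The cross-node options also need additional routing rules to be configured before they work.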
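
The two storage types and the single-host network drivers above map onto a handful of Docker CLI calls. The following is a minimal sketch rather than a recommended setup: the volume, container, and network names (`appdata`, `web1` to `web4`, `appnet`) and the host path `/srv/site` are hypothetical, and the script only prints each command unless `DOCKER=docker` is set.

```shell
# Dry run by default: each command is printed, not executed.
# Set DOCKER=docker to run them against a real Docker daemon.
DOCKER="${DOCKER:-echo docker}"

# Storage: a named volume managed by Docker, then a bind mount of a host directory.
$DOCKER volume create appdata
$DOCKER run -d --name web1 -v appdata:/usr/share/nginx/html nginx
$DOCKER run -d --name web2 -v /srv/site:/usr/share/nginx/html:ro nginx

# Networking: a user-defined bridge network, then the host's own network stack.
$DOCKER network create --driver bridge appnet
$DOCKER run -d --name web3 --network appnet nginx
$DOCKER run -d --name web4 --network host nginx
```

Both the named volume and the bind mount live on the local host's filesystem, which is why neither helps once containers are spread across several nodes.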

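K8S deployment process notes:

Requirements for a Kubernetes cluster:

(1) Availability: a single-machine Kubernetes learning environment has a single point of failure. Creating a highly available cluster means considering:

- Separating the control plane (the nodes that run the core K8S components) from the worker nodes (the nodes that run application containers)

- Replicating the control plane components on multiple nodes

- Load balancing traffic to the cluster's API server

- Having enough worker nodes available, or able to become available quickly, as changing workloads warrant

(2) Scale: if you expect your production Kubernetes environment to receive a stable amount of demand, you may be able to size it for the capacity you need once and be done. If you expect demand to grow over time, or to change sharply because of things like season or special events, you need to plan how to relieve the added pressure on the control plane and worker nodes when requests rise, or how to scale the cluster down to reduce unused resources.

(3) Security and access management: on your own Kubernetes learning cluster you have full administrator privileges. A shared cluster that runs important workloads and has more than one or two user accounts needs a finer-grained approach to deciding who and what can access cluster resources.

- You can use role-based access control (RBAC) and other security mechanisms to make sure that users and workloads can reach the resources they need, while keeping the workloads and the cluster itself secure.

- You can set limits on the resources that users and workloads can access by managing policies and container resources.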
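
As a rough illustration of the last two points, the commands below grant one user read-only access to pods in a single namespace and cap what that namespace may consume. The namespace `dev`, the user `jane`, and the object names are hypothetical, and the quota values are arbitrary; the script prints the `kubectl` commands rather than running them unless `KUBECTL=kubectl` is set.

```shell
# Dry run by default: each command is printed, not executed.
# Set KUBECTL=kubectl to run them against a real cluster.
KUBECTL="${KUBECTL:-echo kubectl}"

# RBAC: a namespaced Role that can only read pods, bound to one user.
$KUBECTL create namespace dev
$KUBECTL create role pod-reader --verb=get,list,watch --resource=pods --namespace=dev
$KUBECTL create rolebinding read-pods --role=pod-reader --user=jane --namespace=dev

# Ask the API server whether the binding has the intended effect.
$KUBECTL auth can-i list pods --as=jane --namespace=dev

# Resource limits: a ResourceQuota that caps the namespace as a whole.
$KUBECTL create quota dev-quota --hard=cpu=4,memory=8Gi,pods=20 --namespace=dev
```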

Role of each node in a K8S cluster:

- Control plane node: manages the K8S cluster and schedules application services

- Worker node (Node): runs pods

Set the hostname and IP address

node1:

[root@bogon ~]# hostnamectl set-hostname node1

[root@bogon ~]# nmcli connection modify ens160 ipv4.method manual ipv4.addresses 192.168.110.11/24 ipv4.gateway 192.168.110.2 ipv4.dns 8.8.8.8 autoconnect yes

[root@bogon ~]# nmcli connection up ens160

Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/3)

[root@bogon ~]# ip a s

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

    inet 127.0.0.1/8 scope host lo

       valid_lft forever preferred_lft forever

    inet6 ::1/128 scope host

       valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

    link/ether 00:0c:29:00:8b:54 brd ff:ff:ff:ff:ff:ff

    altname enp3s0

    inet 192.168.110.11/24 brd 192.168.110.255 scope global noprefixroute ens160

       valid_lft forever preferred_lft forever

    inet6 fe80::20c:29ff:fe00:8b54/64 scope link tentative noprefixroute

       valid_lft forever preferred_lft forever

[root@bogon ~]# su

[root@node1 ~]#

[root@node1 ~]# vim /etc/ssh/sshd_config // allow root login

Add the following line:

PermitRootLogin yes

[root@node1 ~]# systemctl restart sshd

node2:

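Use the terminal software to run the initial environment configuration and software installation on all nodes at once.

Note: the following commands must be run on all three nodes.

Step 1: Enable IPv4 packet forwarding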
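
The transcript below edits the drop-in file interactively with `vim`. A non-interactive sketch of the same step follows, assuming the file holds the single setting that the `sysctl --system` output attributes to this step (`net.ipv4.ip_forward = 1`). The `SYSCTL_DIR` variable is hypothetical: it defaults to a scratch directory so the snippet can be tried without root, whereas on a real node it would be `/etc/sysctl.d`.

```shell
# Hypothetical target directory; on a real node this would be /etc/sysctl.d (needs root).
SYSCTL_DIR="${SYSCTL_DIR:-$(mktemp -d)}"

# Write the drop-in that turns on IPv4 forwarding.
cat > "$SYSCTL_DIR/k8s.conf" <<'EOF'
net.ipv4.ip_forward = 1
EOF

# Show what was written.
cat "$SYSCTL_DIR/k8s.conf"

# On a real node, load the setting and verify it (not run here):
#   sysctl --system
#   sysctl net.ipv4.ip_forward
```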

[root@node1 ~]# sysctl net.ipv4.ip_forward

net.ipv4.ip_forward = 0

[root@node1 ~]# vim /etc/sysctl.d/k8s.conf

net.ipv

[root@node1 ~]# sysctl --system

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

* Applying /usr/lib/sysctl.d/50-coredump.conf ...

* Applying /usr/lib/sysctl.d/50-default.conf ...

* Applying /usr/lib/sysctl.d/50-libkcapi-optmem_max.conf ...

* Applying /usr/lib/sysctl.d/50-pid-max.conf ...

* Applying /usr/lib/sysctl.d/50-redhat.conf ...

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

* Applying /etc/sysctl.conf ...

kernel.yama.ptrace_scope = 0

kernel.core_pattern = |/usr/lib/systemd/systemd-coredump %P %u %g %s %t %c %h

kernel.core_pipe_limit = 16

fs.suid_dumpable = 2

kernel.sysrq = 16

kernel.core_uses_pid = 1

net.ipv4.conf.default.rp_filter = 2

net.ipv4.conf.ens160.rp_filter = 2

net.ipv4.conf.lo.rp_filter = 2

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.ens160.accept_source_route = 0

net.ipv4.conf.lo.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.ens160.promote_secondaries = 1

net.ipv4.conf.lo.promote_secondaries = 1

net.ipv4.ping_group_range = 0 2147483647

net.core.default_qdisc = fq_codel

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

fs.protected_regular = 1

fs.protected_fifos = 1

net.core.optmem_max = 81920

kernel.pid_max = 4194304

kernel.kptr_restrict = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.ens160.rp_filter = 1

net.ipv4.conf.lo.rp_filter = 1

net.ipv4.ip_forward = 1

[root@node1 ~]# sysctl net.ipv4.ip_forward

net.ipv4.ip_forward = 1

Step 2: Confirm that the IP address, MAC address, product_uuid, and hostname differ across the three nodes
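
One way to make this check mechanical, assuming the four values have already been collected from each node into a small inventory with one line per node. Only the `node1` row uses values from these notes; the other two rows (`nodeA`, `nodeB`) are made-up placeholders, and `find_duplicates` is a hypothetical helper.

```shell
# Hypothetical inventory: hostname, IP, MAC, product_uuid, one node per line.
# On each node the values can be read with `hostname`, `ip addr show`,
# and `cat /sys/class/dmi/id/product_uuid`.
inventory=$(cat <<'EOF'
node1 192.168.110.11 00:0c:29:00:8b:54 9b3c4d56-0bb8-1c6d-3ab7-e216b7008b54
nodeA 192.0.2.10 00:00:5e:00:53:01 00000000-0000-0000-0000-000000000001
nodeB 192.0.2.22 00:00:5e:00:53:02 00000000-0000-0000-0000-000000000002
EOF
)

# Print every value that occurs more than once within the same column.
# Each reported key is "<column number> <value>".
find_duplicates() {
  awk '{ for (i = 1; i <= NF; i++) seen[i FS $i]++ }
       END { for (k in seen) if (seen[k] > 1) print "duplicate: " k }'
}

dups=$(printf '%s\n' "$inventory" | find_duplicates)
if [ -z "$dups" ]; then
  echo "all identifiers are unique"
else
  echo "$dups"
fi
```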

[root@node1 ~]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

    inet 127.0.0.1/8 scope host lo

       valid_lft forever preferred_lft forever

    inet6 ::1/128 scope host

       valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

    link/ether 00:0c:29:00:8b:54 brd ff:ff:ff:ff:ff:ff

    altname enp3s0

    inet 192.168.110.11/24 brd 192.168.110.255 scope global noprefixroute ens160

       valid_lft forever preferred_lft forever

    inet6 fe80::20c:29ff:fe00:8b54/64 scope link noprefixroute

       valid_lft forever preferred_lft forever

[root@node1 ~]# cat /sys/class/dmi/id/product_uuid

9b3c4d56-0bb8-1c6d-3ab7-e216b7008b54

Step 3: Confirm that port 6443 is not in use
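
The transcript below probes the port with `nc`. Where `nc` is not installed, a rough equivalent can be built on bash's `/dev/tcp` redirection. This is a sketch that assumes the script is run with bash; `port_state` is a hypothetical helper name.

```shell
# Report whether something accepts TCP connections on host:port.
# Relies on bash's /dev/tcp pseudo-device, so run this with bash.
port_state() {
  host=$1
  port=$2
  # The subshell exits non-zero when the connection is refused.
  if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
    echo "in use"
  else
    echo "free"
  fi
}

# 6443 is the port the Kubernetes API server listens on by default.
port_state 127.0.0.1 6443
```

Before `kubeadm init` has run, the expected answer on every node is `free`.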

[root@node1 ~]# nc 127.0.0.1 6443 -v

Ncat: Version 7.92 ( https://nmap.org/ncat )

Ncat: Connection refused.

Step 4: Disable swap
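
The transcript below turns swap off with `swapoff -a` and then comments out the swap entry in `/etc/fstab` by hand so that it stays off after a reboot. The file edit can be sketched non-interactively as follows. The snippet deliberately works on a scratch copy holding two of the entries from these notes, and it assumes GNU `sed`.

```shell
# Scratch copy for illustration; the real file is /etc/fstab (back it up before editing).
FSTAB=$(mktemp)
cat > "$FSTAB" <<'EOF'
/dev/mapper/cs_bogon-root / xfs defaults 0 0
/dev/mapper/cs_bogon-swap none swap defaults 0 0
EOF

# Comment out every uncommented line whose filesystem type field is "swap".
sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$FSTAB"
cat "$FSTAB"

# On a real node, also disable swap for the running system (not run here):
#   swapoff -a
```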

[root@node1 ~]# swapon -s

Filename Type Size Used Priority

/dev/dm-1 partition 4108284 0 -2

[root@node1 ~]# swapoff -a

[root@node1 ~]# swapon -s

[root@node1 ~]# vim /etc/fstab

[root@node1 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Fri Aug 23 00:45:24 2024

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

/dev/mapper/cs_bogon-root / xfs defaults 0 0

UUID=d910d323-150c-40df-bd32-bdfb1f5c93b9 /boot xfs defaults 0 0

UUID=BA00-CA1F /boot/efi vfat umask=0077,shortname=winnt 0 2

#/dev/mapper/cs_bogon-swap none swap defaults 0 0

Step 5: Install the CRI container runtime

[root@node1 ~]# dnf remove -y podman container* runc*

[root@node1 ~]# dnf -y install dnf-utils

[root@node1 ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

Adding repo from: https://download.docker.com/linux/centos/docker-ce.repo

[root@node1 ~]# dnf -y install docker-ce docker-ce-cli containerd.io // wait for the installation to finish

[root@node1 ~]# systemctl enable --now containerd.service

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /usr/lib/systemd/system/containerd.service.

[root@node1 ~]# ll /var/run/containerd/containerd.sock // Kubernetes calls the container runtime through this socket; make sure the file exists

srw-rw----. 1 root root 0 Sep 19 13:57 /var/run/containerd/containerd.sock

Configure the containerd service to accept CRI calls

[root@node1 ~]# vim /etc/containerd/config.toml

[root@node1 ~]# sed -i "s/cri//" /etc/containerd/config.toml

[root@node1 ~]# grep plugin /etc/containerd/config.toml

disabled_plugins = [""]

[root@node1 ~]# systemctl restart containerd.service
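
The `sed` call above deletes the first `cri` on every line of the file, so it would also alter any other line that happens to contain that string. A narrower sketch is shown here on a scratch copy. It assumes the packaged file contains the line `disabled_plugins = ["cri"]`, which is inferred from the `grep` output above rather than shown in these notes, and the second sample line is made up to demonstrate that it stays untouched.

```shell
# Scratch copy for illustration; the real file is /etc/containerd/config.toml.
CONF=$(mktemp)
cat > "$CONF" <<'EOF'
disabled_plugins = ["cri"]
# sample line mentioning cri that should stay untouched
EOF

# Restrict the substitution to the disabled_plugins line and drop the "cri" entry.
sed -i '/^disabled_plugins/ s/"cri"//' "$CONF"
cat "$CONF"

# On a real node, restart the service afterwards (not run here):
#   systemctl restart containerd.service
```

The result is an empty list, `disabled_plugins = []`, instead of a list holding one empty string.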

Step 6: Install the Kubernetes management tools

Run on all three nodes together.

[root@node1 ~]# setenforce 0

[root@node1 ~]# sed -i 's/^SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config

[root@node1 ~]# grep SELINUX /etc/selinux/config

# SELINUX= can take one of these three values:

# NOTE: Up to RHEL 8 release included, SELINUX=disabled would also

SELINUX=permissive

# SELINUXTYPE= can take one of these three values:

SELINUXTYPE=targeted
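
The `s` command in `sed` needs all three delimiters (`s/pattern/replacement/`); leaving off the final `/` produces the error "unterminated `s' command". The substitution can be tried safely on a scratch copy first. The sketch below assumes GNU `sed` and uses a two-line stand-in for `/etc/selinux/config`.

```shell
# Scratch stand-in for /etc/selinux/config.
SELINUX_CONF=$(mktemp)
cat > "$SELINUX_CONF" <<'EOF'
SELINUX=enforcing
SELINUXTYPE=targeted
EOF

# Switch the persistent mode to permissive; anchoring with ^ and $ leaves SELINUXTYPE alone.
sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' "$SELINUX_CONF"
grep '^SELINUX=' "$SELINUX_CONF"
```

`setenforce 0` changes the mode only until the next reboot, which is why the configuration file is edited as well.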

[root@node1 ~]# vim /etc/yum.repos.d/k8s.repo

[root@node1 ~]# cat /etc/yum.repos.d/k8s.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.31/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.31/rpm/repodata/repomd.xml.key

exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
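
The same file can be generated without an editor, which helps when it has to be identical on several nodes. This is a sketch: `REPO_DIR` and `K8S_MINOR` are hypothetical variables, with `REPO_DIR` defaulting to a scratch directory so that nothing under `/etc/yum.repos.d` is touched.

```shell
# Hypothetical variables; on a real node REPO_DIR would be /etc/yum.repos.d (needs root).
REPO_DIR="${REPO_DIR:-$(mktemp -d)}"
K8S_MINOR="v1.31"   # the minor version used in these notes

# Unquoted EOF so that ${K8S_MINOR} is expanded inside the file.
cat > "$REPO_DIR/k8s.repo" <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/${K8S_MINOR}/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/${K8S_MINOR}/rpm/repodata/repomd.xml.key
exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
EOF

cat "$REPO_DIR/k8s.repo"
```

The `exclude=` line keeps a routine system update from upgrading the Kubernetes packages by accident; the install command later in these notes overrides it with `--disableexcludes=kubernetes`.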

Configure this on node1 only, then copy it to the other nodes with scp.

[root@node1 ~]# scp /etc/yum.repos.d/k8s.repo root@192.168.110.10:/etc/yum.repos.d/

The authenticity of host '192.168.110.10 (192.168.110.10)' can't be established.

ED25519 key fingerprint is SHA256:84EopGSflyn0EP7RLvmnvaWPJCTe8G99eX4dF6XQzFk.

This key is not known by any other names

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.110.10' (ED25519) to the list of known hosts.

root@192.168.110.10's password:

k8s.repo 100% 235 104.8KB/s 00:00

[root@node1 ~]# scp /etc/yum.repos.d/k8s.repo root@192.168.110.22:/etc/yum.repos.d/

The authenticity of host '192.168.110.22 (192.168.110.22)' can't be established.

ED25519 key fingerprint is SHA256:84EopGSflyn0EP7RLvmnvaWPJCTe8G99eX4dF6XQzFk.

This host key is known by the following other names/addresses:

    ~/.ssh/known_hosts:1: 192.168.110.10

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.110.22' (ED25519) to the list of known hosts.

root@192.168.110.22's password:

k8s.repo 100% 235 120.0KB/s 00:00

Install on all three nodes at the same time.

[root@node1 ~]# yum -y install kubeadm kubectl kubelet --disableexcludes=kubernetes

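[root@control ~]# systemctl start kubelet.service

Download the images needed to initialize the platform. Instead of the official registry.k8s.io repository, use the Aliyun mirror.

Download on all three nodes at the same time.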

[root@control ~]# cat kube.memo

registry.cn-hangzhou.aliyuncs.com/google_containers

[root@control ~]# kubeadm config print init-defaults > init.yml

[root@control ~]# kubeadm config print join-defaults > join.yml

Edit init.yml

Use a mirror registry in China and set the API server address
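
These notes do not show the edited file, so the following is only a sketch of the two changes. It assumes the generated defaults contain an `advertiseAddress: 1.2.3.4` line and an `imageRepository: registry.k8s.io` line, and it works on an abridged stand-in rather than the real `init.yml`. `CONTROL_IP` is a placeholder for the control node's actual address, and the mirror path is the one recorded in `kube.memo`.

```shell
# Abridged stand-in for the output of `kubeadm config print init-defaults` (assumed fields).
INIT=$(mktemp)
cat > "$INIT" <<'EOF'
localAPIEndpoint:
  advertiseAddress: 1.2.3.4
  bindPort: 6443
imageRepository: registry.k8s.io
EOF

CONTROL_IP="192.0.2.10"   # placeholder; use the control node's real address
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"   # from kube.memo

# Use | as the sed delimiter because the mirror path contains slashes.
sed -i \
  -e "s|^\( *advertiseAddress:\).*|\1 ${CONTROL_IP}|" \
  -e "s|^imageRepository:.*|imageRepository: ${MIRROR}|" \
  "$INIT"
cat "$INIT"
```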

Pull the images:

[root@control ~]# kubeadm config images pull --config init.yml

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.31.0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.31.0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.31.0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.31.0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.11.3

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.10

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.15-0

Initialize the cluster:

[root@control ~]# kubeadm init --config init.yml

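// Watch the output for any error messages

Approaches that were tested and allow Docker images to be pulled:

1. Set the DNS servers

2. Configure Docker registry mirrors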

[root@control ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://docker.registry.cyou","https://docker-cf.registry.cyou","https://dockercf.jsdelivr.fyi","https://docker.jsdelivr.fyi","https://dockertest.jsdelivr.fyi","https://mirror.aliyuncs.com","https://dockerproxy.com","https://mirror.baidubce.com","https://docker.m.daocloud.io","https://docker.nju.edu.cn","https://docker.mirrors.sjtug.sjtu.edu.cn","https://docker.mirrors.ustc.edu.cn","https://mirror.iscas.ac.cn","https://docker.rainbond.cc"]

}

[root@control ~]# cat /etc/resolv.conf

# Generated by NetworkManager

nameserver 223.5.5.5

nameserver 8.8.8.8

[root@control ~]# systemctl restart docker

docker pull flannel/flannel:latest

docker pull flannel/flannel-cni-plugin:latest