基于 LangChain 的 LLM 应用开发

1. 介绍

现在,使用 Prompt 可以快速开发一个应用程序,但是一个应用程序可能需要多次写Prompt,并对 LLM 的输出结果进行解析。因此,需要编写很多胶水代码。

Harrison Chase 创建的 LangChain 框架可以简化开发流程。其包含两个包,Python和JavaScript。LangChain 提取了很多公共的抽象,注重组合和模块化,包含了很多可以单独使用或者可以与其他组件结合使用的独立组件。此外,还包括很多不同的用例,并且非常容易上手。这使得 LangChain 方便开发基于 LLM 的复杂应用。

本课程将要介绍的内容大纲:

2. Models, Prompts and Parsers

Models(模型):基础语言模型。

Prompts(提示):模型的输入,用来给模型传递用户意图。

Parsers(解析器):将模型的输出解析成更结构化的输出。

import os

import openaifrom dotenv import load_dotenv, find_dotenv

_ = load_dotenv(find_dotenv()) # read local .env file

openai.api_key = os.environ['OPENAI_API_KEY']

def get_completion(prompt, model="gpt-3.5-turbo"):messages = [{"role": "user", "content": prompt}]response = openai.ChatCompletion.create(model=model,messages=messages,temperature=0, )return response.choices[0].message["content"]

海盗风格的英语,让ChatGPT翻译成正式的英语:

customer_email = """

Arrr, I be fuming that me blender lid \

flew off and splattered me kitchen walls \

with smoothie! And to make matters worse,\

the warranty don't cover the cost of \

cleaning up me kitchen. I need yer help \

right now, matey!

"""style = """American English \

in a calm and respectful tone

"""prompt = f"""Translate the text \

that is delimited by triple backticks

into a style that is {style}.

text: ```{customer_email}```

"""response = get_completion(prompt)

输出:

基于LangChain的实现:

#!pip install --upgrade langchain

from langchain.chat_models import ChatOpenAI# To control the randomness and creativity of the generated

# text by an LLM, use temperature = 0.0

chat = ChatOpenAI(temperature=0.0)

chat

PromptTemplate

创建提示模板:

from langchain.prompts import ChatPromptTemplatetemplate_string = """Translate the text \

that is delimited by triple backticks \

into a style that is {style}. \

text: ```{text}```

"""prompt_template = ChatPromptTemplate.from_template(template_string)prompt_template.messages[0].prompt

# 打印 prompt 中的参数,输出:['style', 'text']

prompt_template.messages[0].prompt.input_variables

customer_style = """American English \

in a calm and respectful tone

"""customer_email = """

Arrr, I be fuming that me blender lid \

flew off and splattered me kitchen walls \

with smoothie! And to make matters worse, \

the warranty don't cover the cost of \

cleaning up me kitchen. I need yer help \

right now, matey!

"""customer_messages = prompt_template.format_messages(style=customer_style,text=customer_email)

print(type(customer_messages))

print(type(customer_messages[0]))

print(customer_messages[0])

# Call the LLM to translate to the style of the customer message

customer_response = chat(customer_messages)

print(customer_response.content)

为什么要使用Prompt模板?因为当构建复杂的应用程序时,Prompt可能会非常长并且具体。因此,好的Prompt模板是一种有用的抽象,可以更好地重用Prompt(提示)。LangChain提供了很多常用操作的提示,例如文本摘要,问答,链接数据库或者连接不同的API。

下面是一个用于在线学习应用程序为程序打分的非常长的提示模板示例:

当使用LLM构建APP时,通常会指示LLM以某种格式生成输出,比如使用特定的关键词。下面的例子展示了使用ReAct框架让LLM执行思维链推理(Chain of Thought Reasoning)的操作。

ReAct 思维链推理

其中,

- Thought:LLM思考的内容,因为给LLM流出思考的空间,它往往能得出更准确的结论。

- Action:执行特定的动作。

- Observation:展示LLM从这个动作中观察到了什么等。

可以使用上面这三种Prompt与一个解析器结合,LangChain会自动提取出用这些特定关键词标记的文本。这样就可以很好地抽象地指定LLM的输入,然后让解析器正确地解释LLM给出的输出。

LangChain 解析器

下面是一个使用LangChain解析器解析JSON格式输出的例子。

{"gift": False,"delivery_days": 5,"price_value": "pretty affordable!"

}customer_review = """\

This leaf blower is pretty amazing. It has four settings:\

candle blower, gentle breeze, windy city, and tornado. \

It arrived in two days, just in time for my wife's \

anniversary present. \

I think my wife liked it so much she was speechless. \

So far I've been the only one using it, and I've been \

using it every other morning to clear the leaves on our lawn. \

It's slightly more expensive than the other leaf blowers \

out there, but I think it's worth it for the extra features.

"""review_template = """\

For the following text, extract the following information:gift: Was the item purchased as a gift for someone else? \

Answer True if yes, False if not or unknown.delivery_days: How many days did it take for the product \

to arrive? If this information is not found, output -1.price_value: Extract any sentences about the value or price,\

and output them as a comma separated Python list.Format the output as JSON with the following keys:

gift

delivery_days

price_valuetext: {text}

"""

from langchain.prompts import ChatPromptTemplateprompt_template = ChatPromptTemplate.from_template(review_template)

print(prompt_template)

messages = prompt_template.format_messages(text=customer_review)

chat = ChatOpenAI(temperature=0.0)

# 此时的返回是str类型的

response = chat(messages)

进行类型转换:

from langchain.output_parsers import ResponseSchema

from langchain.output_parsers import StructuredOutputParser

gift_schema = ResponseSchema(name="gift",description="Was the item purchased\as a gift for someone else? \Answer True if yes,\False if not or unknown.")delivery_days_schema = ResponseSchema(name="delivery_days",description="How many days\did it take for the product\to arrive? If this \information is not found,\output -1.")price_value_schema = ResponseSchema(name="price_value",description="Extract any\sentences about the value or \price, and output them as a \comma separated Python list.")response_schemas = [gift_schema, delivery_days_schema,price_value_schema]

output_parser = StructuredOutputParser.from_response_schemas(response_schemas)

format_instructions = output_parser.get_format_instructions()

print(format_instructions)

review_template_2 = """\

For the following text, extract the following information:gift: Was the item purchased as a gift for someone else? \

Answer True if yes, False if not or unknown.delivery_days: How many days did it take for the product\

to arrive? If this information is not found, output -1.price_value: Extract any sentences about the value or price,\

and output them as a comma separated Python list.text: {text}{format_instructions}

"""prompt = ChatPromptTemplate.from_template(template=review_template_2)messages = prompt.format_messages(text=customer_review, format_instructions=format_instructions)

response = chat(messages)

print(response.content)

# 转成 dict,以便后续处理

output_dict = output_parser.parse(response.content)

3. Memory

当用户与LLM模型互动时,正常情况下,它们没办法记住历史对话信息。这对构建像聊天机器人这样的应用来说是个问题。本节内容将介绍如何通过LangChain实现让模型”记住“历史对话内容,并能够将其输入到LLM中。

LangChain针对复杂的记忆存储管理提供了多种选项,下面是一些例子。

import osfrom dotenv import load_dotenv, find_dotenv

_ = load_dotenv(find_dotenv()) # read local .env fileimport warnings

warnings.filterwarnings('ignore')from langchain.chat_models import ChatOpenAI

from langchain.chains import ConversationChain

from langchain.memory import ConversationBufferMemoryllm = ChatOpenAI(temperature=0.0)

memory = ConversationBufferMemory()

conversation = ConversationChain(llm=llm, memory = memory,verbose=True

)

可以看到,通过LangChain,模型具备了“记住”历史对话信息的能力。如果把verbose参数设置为True,那运行之后,会输出LangChain这个API背后是如何工作的,可以看出它把历史会话信息都存储了起来,然后每次都喂给模型,这使得模型可以从上下文感知这些历史对话信息,因此可以根据这些信息实现记忆的能力。

ConversationBufferMemory

LangChain的ConversationBufferMemory方法可以临时存储对话记忆。使用该法也很简单,按照dict的格式往里边添加数据就可以了。

memory = ConversationBufferMemory()

memory.save_context({"input": "Hi"}, {"output": "What's up"})

当使用LLM进行对话时,LLM本身是无状态的,即LLM本身不会记住和你对话的历史消息。聊天机器人,之所以具备了记忆能力,是因为将缓存中保存的历史对话消息当做上下文一起喂给模型了。但是这样随着对话轮数的增加,需要存储的上下文也越来越长,那么调用ChatGPT API时消耗的token就越来越多,并且可能超过token的限制,这对大型的聊天机器人应用来说是不可承受的。基于此,LangChain提供了集中便捷的记忆存储方案来保存对话消息和累计对话内容。

ConversationBufferWindowMemory

窗口记忆,即仅保留最后若干轮对话消息。通过参数 k 控制,表示窗口大小。例如,k = 1,表示只记住最后一轮对话。

from langchain.memory import ConversationBufferWindowMemorymemory = ConversationBufferWindowMemory(k=1)

llm = ChatOpenAI(temperature=0.0)

memory = ConversationBufferWindowMemory(k=1)

conversation = ConversationChain(llm=llm, memory = memory,verbose=False

)

ConversationalTokenBufferMemory

Token缓存记忆,限制保存在记忆中的token数量。

from langchain.memory import ConversationTokenBufferMemory

from langchain.llms import OpenAI

llm = ChatOpenAI(temperature=0.0)memory = ConversationTokenBufferMemory(llm=llm, max_token_limit=30)

memory.save_context({"input": "AI is what?!"},{"output": "Amazing!"})

memory.save_context({"input": "Backpropagation is what?"},{"output": "Beautiful!"})

memory.save_context({"input": "Chatbots are what?"}, {"output": "Charming!"})

memory.load_memory_variables({})

ConversationSummaryBufferMemory

这个API背后的动机是:预期将记忆的存储容量限制在最近若干次对话上或者限制token的数量上,不如让LLM将历史消息生成摘要,在记忆中存储这些摘要即可。

from langchain.memory import ConversationSummaryBufferMemory# create a long string

schedule = "There is a meeting at 8am with your product team. \

You will need your powerpoint presentation prepared. \

9am-12pm have time to work on your LangChain \

project which will go quickly because Langchain is such a powerful tool. \

At Noon, lunch at the italian resturant with a customer who is driving \

from over an hour away to meet you to understand the latest in AI. \

Be sure to bring your laptop to show the latest LLM demo."memory = ConversationSummaryBufferMemory(llm=llm, max_token_limit=100)

memory.save_context({"input": "Hello"}, {"output": "What's up"})

memory.save_context({"input": "Not much, just hanging"},{"output": "Cool"})

memory.save_context({"input": "What is on the schedule today?"}, {"output": f"{schedule}"})

其他类型的记忆

4. Chain

下面用到的数据包括两列,产品名称列表及其对应的评论。

import pandas as pd

df = pd.read_csv('Data.csv')

LLMChain

from langchain.chat_models import ChatOpenAI

from langchain.prompts import ChatPromptTemplate

from langchain.chains import LLMChainllm = ChatOpenAI(temperature=0.9)

prompt = ChatPromptTemplate.from_template("What is the best name to describe \a company that makes {product}?"

)

chain = LLMChain(llm=llm, prompt=prompt)

product = "Queen Size Sheet Set"

chain.run(product)

SimpleSequentialChain

顺序链是多个链一个接一个地运行。有两种类型,分别适用于不同场景:

- SimpleSequentialChain:所有的子链都只有一个输入和一个输出。

- SequentialChain:多个输入,多个输出。

下面是一个例子,有两条链,第一条链输出公司名称,然后传递给第二条链作为输入。

from langchain.chains import SimpleSequentialChainllm = ChatOpenAI(temperature=0.9)# prompt template 1

first_prompt = ChatPromptTemplate.from_template("What is the best name to describe \a company that makes {product}?"

)# Chain 1

chain_one = LLMChain(llm=llm, prompt=first_prompt)# prompt template 2

second_prompt = ChatPromptTemplate.from_template("Write a 20 words description for the following \company:{company_name}"

)

# chain 2

chain_two = LLMChain(llm=llm, prompt=second_prompt)overall_simple_chain = SimpleSequentialChain(chains=[chain_one, chain_two],verbose=True)

overall_simple_chain.run(product)

SequentialChain

下面的例子包含四条链。其中,第一条链将评论翻译成英语;第二条链一句话总结这篇评论;第三条链将会检测原始的评论是用什么语言写的;第四条链将接收多个输入,一个是第二条链得到的摘要,一个是第三条链得到的语言,要求后续对摘要进行回复时,使用指定的语言。

注意下面例子中 output_key 参数和prompt中的变量名称是有对应关系的。

from langchain.chains import SequentialChainllm = ChatOpenAI(temperature=0.9)# prompt template 1: translate to english

first_prompt = ChatPromptTemplate.from_template("Translate the following review to english:""\n\n{Review}"

)

# chain 1: input= Review and output= English_Review

chain_one = LLMChain(llm=llm, prompt=first_prompt, output_key="English_Review")second_prompt = ChatPromptTemplate.from_template("Can you summarize the following review in 1 sentence:""\n\n{English_Review}"

)

# chain 2: input= English_Review and output= summary

chain_two = LLMChain(llm=llm, prompt=second_prompt, output_key="summary")

# prompt template 3: translate to english

third_prompt = ChatPromptTemplate.from_template("What language is the following review:\n\n{Review}"

)

# chain 3: input= Review and output= language

chain_three = LLMChain(llm=llm, prompt=third_prompt,output_key="language")# prompt template 4: follow up message

fourth_prompt = ChatPromptTemplate.from_template("Write a follow up response to the following ""summary in the specified language:""\n\nSummary: {summary}\n\nLanguage: {language}"

)

# chain 4: input= summary, language and output= followup_message

chain_four = LLMChain(llm=llm, prompt=fourth_prompt,output_key="followup_message")

# overall_chain: input= Review

# and output= English_Review,summary, followup_message

overall_chain = SequentialChain(chains=[chain_one, chain_two, chain_three, chain_four],input_variables=["Review"],output_variables=["English_Review", "summary", "followup_message"],verbose=True

)

review = df.Review[5]

overall_chain(review)

RouterChain

根据输入内容路由到某条链来处理输入。设想一下,假设你有多条子链,每条子链专门负责处理某种特定类型的输入,这种情况下可以使用路由链(Router Chain)。

下面是个例子,它首先判断该使用哪条子链,然后将输入传递到响应的子链。

MultiPromptChain可以用来在多个不同的prompt模板之间路由。

LLMRouterChain借助LLM的帮助在不同的子链之间路由。需要在prompt模板中提供名称和描述。

RouterOutputParser解析器将LLM输出解析成一个dict,方便下游确定使用哪条链,以及该链的输入是什么。

除了目标链之外,还需要一个默认链,用于在路由找不到合适的子链调用时,用来备用的一条链。它会直接将用户的问题当做Prompt用来问LLM,而不再去基于预定义的模板去生成Prompt再去问LLM。

physics_template = """You are a very smart physics professor. \

You are great at answering questions about physics in a concise\

and easy to understand manner. \

When you don't know the answer to a question you admit\

that you don't know.Here is a question:

{input}"""math_template = """You are a very good mathematician. \

You are great at answering math questions. \

You are so good because you are able to break down \

hard problems into their component parts,

answer the component parts, and then put them together\

to answer the broader question.Here is a question:

{input}"""history_template = """You are a very good historian. \

You have an excellent knowledge of and understanding of people,\

events and contexts from a range of historical periods. \

You have the ability to think, reflect, debate, discuss and \

evaluate the past. You have a respect for historical evidence\

and the ability to make use of it to support your explanations \

and judgements.Here is a question:

{input}"""computerscience_template = """ You are a successful computer scientist.\

You have a passion for creativity, collaboration,\

forward-thinking, confidence, strong problem-solving capabilities,\

understanding of theories and algorithms, and excellent communication \

skills. You are great at answering coding questions. \

You are so good because you know how to solve a problem by \

describing the solution in imperative steps \

that a machine can easily interpret and you know how to \

choose a solution that has a good balance between \

time complexity and space complexity. Here is a question:

{input}"""

prompt_infos = [{"name": "physics", "description": "Good for answering questions about physics", "prompt_template": physics_template},{"name": "math", "description": "Good for answering math questions", "prompt_template": math_template},{"name": "History", "description": "Good for answering history questions", "prompt_template": history_template},{"name": "computer science", "description": "Good for answering computer science questions", "prompt_template": computerscience_template}

]

from langchain.chains.router import MultiPromptChain

from langchain.chains.router.llm_router import LLMRouterChain,RouterOutputParser

from langchain.prompts import PromptTemplatellm = ChatOpenAI(temperature=0)destination_chains = {}

for p_info in prompt_infos:name = p_info["name"]prompt_template = p_info["prompt_template"]prompt = ChatPromptTemplate.from_template(template=prompt_template)chain = LLMChain(llm=llm, prompt=prompt)destination_chains[name] = chain destinations = [f"{p['name']}: {p['description']}" for p in prompt_infos]

destinations_str = "\n".join(destinations)default_prompt = ChatPromptTemplate.from_template("{input}")

default_chain = LLMChain(llm=llm, prompt=default_prompt)

MULTI_PROMPT_ROUTER_TEMPLATE = """Given a raw text input to a \

language model select the model prompt best suited for the input. \

You will be given the names of the available prompts and a \

description of what the prompt is best suited for. \

You may also revise the original input if you think that revising\

it will ultimately lead to a better response from the language model.<< FORMATTING >>

Return a markdown code snippet with a JSON object formatted to look like:

```json

{{{{"destination": string \ name of the prompt to use or "DEFAULT""next_inputs": string \ a potentially modified version of the original input

}}}}

```REMEMBER: "destination" MUST be one of the candidate prompt \

names specified below OR it can be "DEFAULT" if the input is not\

well suited for any of the candidate prompts.

REMEMBER: "next_inputs" can just be the original input \

if you don't think any modifications are needed.<< CANDIDATE PROMPTS >>

{destinations}<< INPUT >>

{{input}}<< OUTPUT (remember to include the ```json)>>"""

router_template = MULTI_PROMPT_ROUTER_TEMPLATE.format(destinations=destinations_str

)

router_prompt = PromptTemplate(template=router_template,input_variables=["input"],output_parser=RouterOutputParser(),

)router_chain = LLMRouterChain.from_llm(llm, router_prompt)

chain = MultiPromptChain(router_chain=router_chain, destination_chains=destination_chains, default_chain=default_chain, verbose=True)

5. Question And Answer

文档问答系统是一种常见的LLM构建的复杂应用程序。Embedding和向量存储是两种强大的前沿技术。

Document Q&A Example

下面是一个文档问答的例子:

from langchain.chains import RetrievalQA

from langchain.chat_models import ChatOpenAI

from langchain.document_loaders import CSVLoader

from langchain.vectorstores import DocArrayInMemorySearch

from IPython.display import display, Markdownfile = 'OutdoorClothingCatalog_1000.csv'

loader = CSVLoader(file_path=file)

#pip install docarray

from langchain.indexes import VectorstoreIndexCreatorindex = VectorstoreIndexCreator(vectorstore_cls=DocArrayInMemorySearch

).from_loaders([loader])query ="Please list all your shirts with sun protection \

in a table in markdown and summarize each one."response = index.query(query)

Embeddings

如果想要使用语言模型与大量文档相结合,存在一个关键问题:语言模型一次只能接收几千个单词,如果一个很大的文档,如何让语言模型对文档所有内容进行问答呢?答案是借助Embedding和向量存储。

Embedding将一段文本转换成数字,用一个高维的向量(一组数字)表示这段文本。内容相似的文本会有相似的向量值。

Vector Database

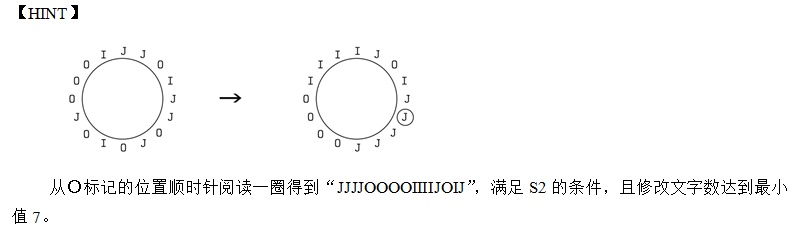

所以需要把文档拆分成小块,这样每次就只用把最相关的几块传递给语言模型。每个文本块生成一个Embedding,并将其存储在向量数据库中。索引创建后,用户在使用应用时,首先将用户输入的内容转换成Embedding,然后就可以从数据库中查询到与其最相似的几个文本片段,之后将这几个片段和原始的用户输入内容一起输入到语言模型进行回答即可。整个实现过程如下图所示:

基于LangChain的示例:

from langchain.embeddings import OpenAIEmbeddingsloader = CSVLoader(file_path=file)

docs = loader.load()embeddings = OpenAIEmbeddings()db = DocArrayInMemorySearch.from_documents(docs, embeddings

)

embedding长这样:是一个1536维的向量,当然OpenAI的Embedding还支持其他维数的。

检索器(Retriver)是一个通用接口,其定义了一个接收查询内容并返回相似文档的方法。之后手动将这些文档中的内容拼接成字符串保存,然后将这部分内容和用户的问题一起喂给语言模型,让其生成输出。

query = "Please suggest a shirt with sunblocking"docs = db.similarity_search(query)retriever = db.as_retriever()llm = ChatOpenAI(temperature = 0.0)qdocs = "".join([docs[i].page_content for i in range(len(docs))])response = llm.call_as_llm(f"{qdocs} Question: Please list all your \

shirts with sun protection in a table in markdown and summarize each one.")

上述分步骤的实现过程可以使用LangChain将其进行封装到一个方法中,下面是一个例子。其中,chain_type参数的stuff表示在调用语言模型时,将所有文档内容都一起放到上下文中。

qa_stuff = RetrievalQA.from_chain_type(llm=llm, chain_type="stuff", retriever=retriever, verbose=True

)

query = "Please list all your shirts with sun protection in a table \

in markdown and summarize each one."response = qa_stuff.run(query)

display(Markdown(response))

Document Stuff Method

因为语言模型有token的限制,所以 Stuff 方法在文档内容较长时不适用。因此,LangChain还提供了其他的三种方法。

第一种是 Map_reduce,把每篇文档都进行分块,然后将每一块的内容和用户问题一起输入到语言模型,得到一个独立的返回结果。然后,将每一块得到的结果都合并在一起,再使用语言模型来对这些结果进行总结,最终得到答案。如此便可以处理任意数量任意长度的文档了。最后,它还支持并行处理,可以极大提高响应速度。

第二种是Refine,基于迭代运行,后一个输入基于前一个文档的问答的输出。这种方法适用于需要整合信息,以及随着时间推移构建答案的场景。这种方法与Map_reduce调用次数一样多,但无法并行,处理速度要慢一些。

第三种 Map_rerank,这种方法对于每个文档只需要调用一次语言模型,让语言模型返回对文档每个分块的评分,最终选择分数最高的输出。这种方式要求模型具备打分能力,并且需要高质量的Prompt。支持并行处理,速度较快。

上述这些方法也可以应用到不同的链中,比如Map_reduce可以用在文本摘要中,这在长篇文档中非常高效。

6. Evaluation

当使用LLM构建应用程序时,一个绕不开的问题是:如何评估应用程序的表现?它是否达到了某种验收标准?并且当更换LLM,更换向量数据库或者更改系统参数,抑或更换检索数据方式时,如何确定系统是否比以前更好了或者更差了。

这些应用程序实际上是许多不同步骤的链和序列。我们首先需要了解每个步骤的输入和输出到底是什么,在这个过程中借助一些可视化工具或者调试工具是非常有必要的。此外,还需要使用大量的不同的数据集来测试模型,这有助于我们全面了解模型的表现。

借助 QAGenerateChain API可以读取文档并从每个文档中生成一组问题和答案,避免了手动生成。从而可以更高效地对搭建的应用进行评估。

from langchain.chains import RetrievalQA

from langchain.chat_models import ChatOpenAI

from langchain.document_loaders import CSVLoader

from langchain.indexes import VectorstoreIndexCreator

from langchain.vectorstores import DocArrayInMemorySearchfile = 'OutdoorClothingCatalog_1000.csv'

loader = CSVLoader(file_path=file)

data = loader.load()index = VectorstoreIndexCreator(vectorstore_cls=DocArrayInMemorySearch

).from_loaders([loader])llm = ChatOpenAI(temperature = 0.0)

qa = RetrievalQA.from_chain_type(llm=llm, chain_type="stuff", retriever=index.vectorstore.as_retriever(), verbose=True,chain_type_kwargs = {"document_separator": "<<<<>>>>>"}

)

# 硬编码问答样例

examples = [{"query": "Do the Cozy Comfort Pullover Set\have side pockets?","answer": "Yes"},{"query": "What collection is the Ultra-Lofty \850 Stretch Down Hooded Jacket from?","answer": "The DownTek collection"}

]

QAGenerateChain 问答生成链

from langchain.evaluation.qa import QAGenerateChainexample_gen_chain = QAGenerateChain.from_llm(ChatOpenAI())new_examples = example_gen_chain.apply_and_parse([{"doc": t} for t in data[:5]]

)

为了更好的观察答案生成过程中的每个子链,可以使用 langchain.debug 工具,这样就可以看到 prompt和中间输出,方便排查问题。

import langchain

langchain.debug = True

qa.run(examples[0]["query"])

[chain/start] [1:chain:RetrievalQA] Entering Chain run with input:

{"query": "Do the Cozy Comfort Pullover Set have side pockets?"

}

[chain/start] [1:chain:RetrievalQA > 2:chain:StuffDocumentsChain] Entering Chain run with input:

[inputs]

[chain/start] [1:chain:RetrievalQA > 2:chain:StuffDocumentsChain > 3:chain:LLMChain] Entering Chain run with input:

{"question": "Do the Cozy Comfort Pullover Set have side pockets?","context": ": 10\nname: Cozy Comfort Pullover Set, Stripe\ndescription: Perfect for lounging, this striped knit set lives up to its name. We used ultrasoft fabric and an easy design that's as comfortable at bedtime as it is when we have to make a quick run out.\n\nSize & Fit\n- Pants are Favorite Fit: Sits lower on the waist.\n- Relaxed Fit: Our most generous fit sits farthest from the body.\n\nFabric & Care\n- In the softest blend of 63% polyester, 35% rayon and 2% spandex.\n\nAdditional Features\n- Relaxed fit top with raglan sleeves and rounded hem.\n- Pull-on pants have a wide elastic waistband and drawstring, side pockets and a modern slim leg.\n\nImported.<<<<>>>>>: 73\nname: Cozy Cuddles Knit Pullover Set\ndescription: Perfect for lounging, this knit set lives up to its name. We used ultrasoft fabric and an easy design that's as comfortable at bedtime as it is when we have to make a quick run out. \n\nSize & Fit \nPants are Favorite Fit: Sits lower on the waist. \nRelaxed Fit: Our most generous fit sits farthest from the body. \n\nFabric & Care \nIn the softest blend of 63% polyester, 35% rayon and 2% spandex.\n\nAdditional Features \nRelaxed fit top with raglan sleeves and rounded hem. \nPull-on pants have a wide elastic waistband and drawstring, side pockets and a modern slim leg. \nImported.<<<<>>>>>: 151\nname: Cozy Quilted Sweatshirt\ndescription: Our sweatshirt is an instant classic with its great quilted texture and versatile weight that easily transitions between seasons. With a traditional fit that is relaxed through the chest, sleeve, and waist, this pullover is lightweight enough to be worn most months of the year. The cotton blend fabric is super soft and comfortable, making it the perfect casual layer. To make dressing easy, this sweatshirt also features a snap placket and a heritage-inspired Mt. Katahdin logo patch. For care, machine wash and dry. Imported.<<<<>>>>>: 265\nname: Cozy Workout Vest\ndescription: For serious warmth that won't weigh you down, reach for this fleece-lined vest, which provides you with layering options whether you're inside or outdoors.\nSize & Fit\nRelaxed Fit. Falls at hip.\nFabric & Care\nSoft, textured fleece lining. Nylon shell. Machine wash and dry. \nAdditional Features \nTwo handwarmer pockets. Knit side panels stretch for a more flattering fit. Shell fabric is treated to resist water and stains. Imported."

}

[llm/start] [1:chain:RetrievalQA > 2:chain:StuffDocumentsChain > 3:chain:LLMChain > 4:llm:ChatOpenAI] Entering LLM run with input:

{"prompts": ["System: Use the following pieces of context to answer the users question. \nIf you don't know the answer, just say that you don't know, don't try to make up an answer.\n----------------\n: 10\nname: Cozy Comfort Pullover Set, Stripe\ndescription: Perfect for lounging, this striped knit set lives up to its name. We used ultrasoft fabric and an easy design that's as comfortable at bedtime as it is when we have to make a quick run out.\n\nSize & Fit\n- Pants are Favorite Fit: Sits lower on the waist.\n- Relaxed Fit: Our most generous fit sits farthest from the body.\n\nFabric & Care\n- In the softest blend of 63% polyester, 35% rayon and 2% spandex.\n\nAdditional Features\n- Relaxed fit top with raglan sleeves and rounded hem.\n- Pull-on pants have a wide elastic waistband and drawstring, side pockets and a modern slim leg.\n\nImported.<<<<>>>>>: 73\nname: Cozy Cuddles Knit Pullover Set\ndescription: Perfect for lounging, this knit set lives up to its name. We used ultrasoft fabric and an easy design that's as comfortable at bedtime as it is when we have to make a quick run out. \n\nSize & Fit \nPants are Favorite Fit: Sits lower on the waist. \nRelaxed Fit: Our most generous fit sits farthest from the body. \n\nFabric & Care \nIn the softest blend of 63% polyester, 35% rayon and 2% spandex.\n\nAdditional Features \nRelaxed fit top with raglan sleeves and rounded hem. \nPull-on pants have a wide elastic waistband and drawstring, side pockets and a modern slim leg. \nImported.<<<<>>>>>: 151\nname: Cozy Quilted Sweatshirt\ndescription: Our sweatshirt is an instant classic with its great quilted texture and versatile weight that easily transitions between seasons. With a traditional fit that is relaxed through the chest, sleeve, and waist, this pullover is lightweight enough to be worn most months of the year. The cotton blend fabric is super soft and comfortable, making it the perfect casual layer. To make dressing easy, this sweatshirt also features a snap placket and a heritage-inspired Mt. Katahdin logo patch. For care, machine wash and dry. Imported.<<<<>>>>>: 265\nname: Cozy Workout Vest\ndescription: For serious warmth that won't weigh you down, reach for this fleece-lined vest, which provides you with layering options whether you're inside or outdoors.\nSize & Fit\nRelaxed Fit. Falls at hip.\nFabric & Care\nSoft, textured fleece lining. Nylon shell. Machine wash and dry. \nAdditional Features \nTwo handwarmer pockets. Knit side panels stretch for a more flattering fit. Shell fabric is treated to resist water and stains. Imported.\nHuman: Do the Cozy Comfort Pullover Set have side pockets?"]

}

[llm/end] [1:chain:RetrievalQA > 2:chain:StuffDocumentsChain > 3:chain:LLMChain > 4:llm:ChatOpenAI] [1.73s] Exiting LLM run with output:

{"generations": [[{"text": "The Cozy Comfort Pullover Set, Stripe does have side pockets.","generation_info": null,"message": {"content": "The Cozy Comfort Pullover Set, Stripe does have side pockets.","additional_kwargs": {},"example": false}}]],"llm_output": {"token_usage": {"prompt_tokens": 628,"completion_tokens": 14,"total_tokens": 642},"model_name": "gpt-3.5-turbo"}

}

[chain/end] [1:chain:RetrievalQA > 2:chain:StuffDocumentsChain > 3:chain:LLMChain] [1.73s] Exiting Chain run with output:

{"text": "The Cozy Comfort Pullover Set, Stripe does have side pockets."

}

[chain/end] [1:chain:RetrievalQA > 2:chain:StuffDocumentsChain] [1.73s] Exiting Chain run with output:

{"output_text": "The Cozy Comfort Pullover Set, Stripe does have side pockets."

}

[chain/end] [1:chain:RetrievalQA] [1.97s] Exiting Chain run with output:

{"result": "The Cozy Comfort Pullover Set, Stripe does have side pockets."

}

'The Cozy Comfort Pullover Set, Stripe does have side pockets.'

在返回给用户错误的答案时,往往可能不是LLM生成的问题,而可能是检索的时候的问题。

上面的例子中,借助LLM生成了问答数据集,那么如何进行评估呢?

首先,需要使用 qa.apply(examples) 函数借助LLM生成正确的答案。

QAEvalChain 问答评估链

from langchain.evaluation.qa import QAEvalChainllm = ChatOpenAI(temperature=0)

eval_chain = QAEvalChain.from_llm(llm)graded_outputs = eval_chain.evaluate(examples, predictions)

for i, eg in enumerate(examples):print(f"Example {i}:")print("Question: " + predictions[i]['query'])print("Real Answer: " + predictions[i]['answer'])print("Predicted Answer: " + predictions[i]['result'])print("Predicted Grade: " + graded_outputs[i]['text'])print()

Example 0:

Question: Do the Cozy Comfort Pullover Set have side pockets?

Real Answer: Yes

Predicted Answer: The Cozy Comfort Pullover Set, Stripe does have side pockets.

Predicted Grade: CORRECTExample 1:

Question: What collection is the Ultra-Lofty 850 Stretch Down Hooded Jacket from?

Real Answer: The DownTek collection

Predicted Answer: The Ultra-Lofty 850 Stretch Down Hooded Jacket is from the DownTek collection.

Predicted Grade: CORRECTExample 2:

Question: What is the weight of each pair of Women's Campside Oxfords?

Real Answer: The approximate weight of each pair of Women's Campside Oxfords is 1 lb. 1 oz.

Predicted Answer: The weight of each pair of Women's Campside Oxfords is approximately 1 lb. 1 oz.

Predicted Grade: CORRECTExample 3:

Question: What are the dimensions of the small and medium Recycled Waterhog Dog Mat?

Real Answer: The dimensions of the small Recycled Waterhog Dog Mat are 18" x 28" and the dimensions of the medium Recycled Waterhog Dog Mat are 22.5" x 34.5".

Predicted Answer: The small Recycled Waterhog Dog Mat has dimensions of 18" x 28" and the medium size has dimensions of 22.5" x 34.5".

Predicted Grade: CORRECTExample 4:

Question: What are some features of the Infant and Toddler Girls' Coastal Chill Swimsuit?

Real Answer: The swimsuit features bright colors, ruffles, and exclusive whimsical prints. It is made of four-way-stretch and chlorine-resistant fabric, ensuring that it keeps its shape and resists snags. The swimsuit is also UPF 50+ rated, providing the highest rated sun protection possible by blocking 98% of the sun's harmful rays. The crossover no-slip straps and fully lined bottom ensure a secure fit and maximum coverage. Finally, it can be machine washed and line dried for best results.

Predicted Answer: The Infant and Toddler Girls' Coastal Chill Swimsuit is a two-piece swimsuit with bright colors, ruffles, and exclusive whimsical prints. It is made of four-way-stretch and chlorine-resistant fabric that keeps its shape and resists snags. The swimsuit has UPF 50+ rated fabric that provides the highest rated sun protection possible, blocking 98% of the sun's harmful rays. The crossover no-slip straps and fully lined bottom ensure a secure fit and maximum coverage. It is machine washable and should be line dried for best results.

Predicted Grade: CORRECTExample 5:

Question: What is the fabric composition of the Refresh Swimwear V-Neck Tankini Contrasts?

Real Answer: The body of the Refresh Swimwear V-Neck Tankini Contrasts is made of 82% recycled nylon and 18% Lycra® spandex, while the lining is made of 90% recycled nylon and 10% Lycra® spandex.

Predicted Answer: The Refresh Swimwear V-Neck Tankini Contrasts is made of 82% recycled nylon with 18% Lycra® spandex for the body and 90% recycled nylon with 10% Lycra® spandex for the lining.

Predicted Grade: CORRECTExample 6:

Question: What is the fabric composition of the EcoFlex 3L Storm Pants?

Real Answer: The EcoFlex 3L Storm Pants are made of 100% nylon, exclusive of trim.

Predicted Answer: The fabric composition of the EcoFlex 3L Storm Pants is 100% nylon, exclusive of trim.

Predicted Grade: CORRECT

LangChainPlus

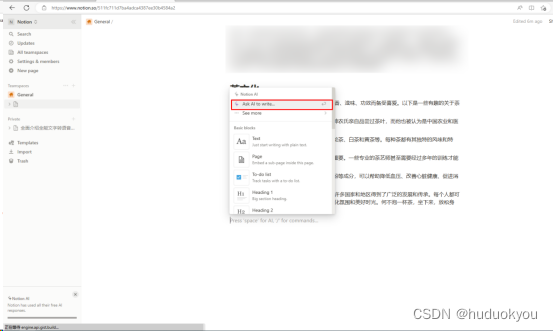

创建数据集,后台程序自动进行评估,并且可以可视化各个节点的输入输出信息。

7. Agents

有时候,人们会把LLM看做是知识库,就好像它学会并记忆了大量的从互联网上获取的信息。基于此,可以轻松实现高质量的问答。但是一种更有用的方式是把LLM看做是一个推理引擎,用户可以向其提供一些文本或者其他信息,然后LLM可能会使用它从互联网上学习这些背景知识,也可以利用用户提供的信息来帮助用户回答问题或者推理内容,甚至决定下一步的动作。目前LangChain的Agents框架已经能够实现上述的功能。

initialize_agent

下面是一个例子

from langchain.agents.agent_toolkits import create_python_agent

from langchain.agents import load_tools, initialize_agent

from langchain.agents import AgentType

from langchain.tools.python.tool import PythonREPLTool

from langchain.python import PythonREPL

from langchain.chat_models import ChatOpenAIllm = ChatOpenAI(temperature=0)

tools = load_tools(["llm-math","wikipedia"], llm=llm)

agent= initialize_agent(tools, llm, agent=AgentType.CHAT_ZERO_SHOT_REACT_DESCRIPTION,handle_parsing_errors=True,verbose = True)

agent("What is the 25% of 300?")

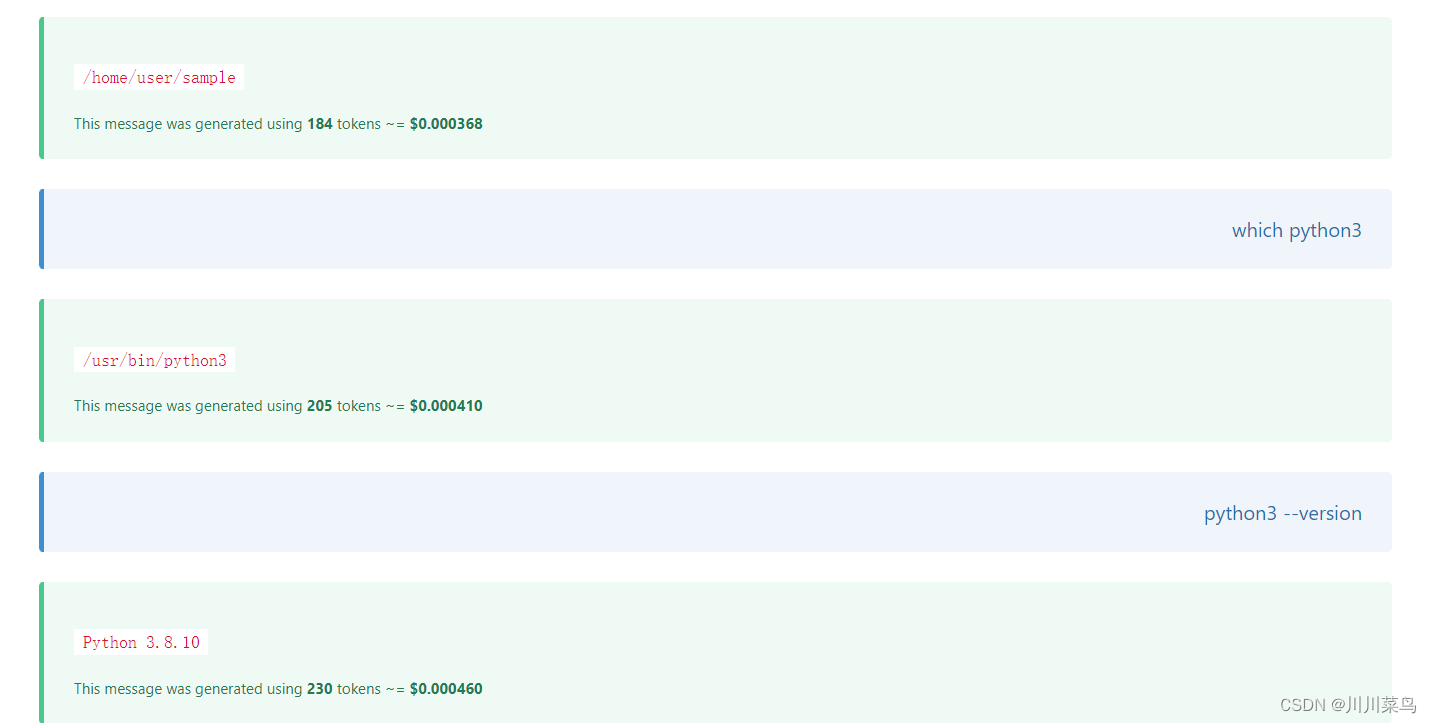

这是开启了debug之后的输出。其中Thought是输入到模型的prompt,Action是需要执行的动作,action指明了需要调用的工具,action_input指明了这个工具的输入。Observation是调用工具后的输出结果,最后输入到模型让其做最后的输出,得到最终的答案。

其中,使用的代理类型是 ,其含义如下:

- CHAT:表明它是一个专为Chat模型一起工作而优化的代理;

- ZERO_SHOT:零样本学习能力;

- REACT:一种组织Prompt的技术,可以最大化LLM的推理能力。

handle_parsing_errors = True:是在解析器出错时进行处理的逻辑,实际上就是遇到内容无法正常解析时,将格式错误的内容传回语言模型,并要求它自行纠正。

create_python_agent

像Copilot,代码解释器插件之类的应用,它们所做的就是用语言模型写代码,然后执行生成的代码。基于LangChain也可以实现这样的应用。

REPL是一种与代码进行交互的工具,可以简单地将其当做一个 Jupyter Notebook。

agent = create_python_agent(llm,tool=PythonREPLTool(),verbose=True

)customer_list = [["Harrison", "Chase"], ["Lang", "Chain"],["Dolly", "Too"],["Elle", "Elem"], ["Geoff","Fusion"], ["Trance","Former"],["Jen","Ayai"]]agent.run(f"""Sort these customers by \

last name and then first name \

and print the output: {customer_list}""")

import langchain

langchain.debug=True

agent.run(f"""Sort these customers by \

last name and then first name \

and print the output: {customer_list}""")

langchain.debug=False

输出:

[chain/start] [1:chain:AgentExecutor] Entering Chain run with input:

{"input": "Sort these customers by last name and then first name and print the output: [['Harrison', 'Chase'], ['Lang', 'Chain'], ['Dolly', 'Too'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Jen', 'Ayai']]"

}

[chain/start] [1:chain:AgentExecutor > 2:chain:LLMChain] Entering Chain run with input:

{"input": "Sort these customers by last name and then first name and print the output: [['Harrison', 'Chase'], ['Lang', 'Chain'], ['Dolly', 'Too'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Jen', 'Ayai']]","agent_scratchpad": "","stop": ["\nObservation:","\n\tObservation:"]

}

[llm/start] [1:chain:AgentExecutor > 2:chain:LLMChain > 3:llm:ChatOpenAI] Entering LLM run with input:

{"prompts": ["Human: You are an agent designed to write and execute python code to answer questions.\nYou have access to a python REPL, which you can use to execute python code.\nIf you get an error, debug your code and try again.\nOnly use the output of your code to answer the question. \nYou might know the answer without running any code, but you should still run the code to get the answer.\nIf it does not seem like you can write code to answer the question, just return \"I don't know\" as the answer.\n\n\nPython REPL: A Python shell. Use this to execute python commands. Input should be a valid python command. If you want to see the output of a value, you should print it out with `print(...)`.\n\nUse the following format:\n\nQuestion: the input question you must answer\nThought: you should always think about what to do\nAction: the action to take, should be one of [Python REPL]\nAction Input: the input to the action\nObservation: the result of the action\n... (this Thought/Action/Action Input/Observation can repeat N times)\nThought: I now know the final answer\nFinal Answer: the final answer to the original input question\n\nBegin!\n\nQuestion: Sort these customers by last name and then first name and print the output: [['Harrison', 'Chase'], ['Lang', 'Chain'], ['Dolly', 'Too'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Jen', 'Ayai']]\nThought:"]

}

[llm/end] [1:chain:AgentExecutor > 2:chain:LLMChain > 3:llm:ChatOpenAI] [4.96s] Exiting LLM run with output:

{"generations": [[{"text": "I can use the `sorted()` function to sort the list of customers. I will need to provide a key function that specifies the sorting order based on last name and then first name.\nAction: Python REPL\nAction Input: \n```python\ncustomers = [['Harrison', 'Chase'], ['Lang', 'Chain'], ['Dolly', 'Too'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Jen', 'Ayai']]\nsorted_customers = sorted(customers, key=lambda x: (x[1], x[0]))\nsorted_customers\n```","generation_info": null,"message": {"content": "I can use the `sorted()` function to sort the list of customers. I will need to provide a key function that specifies the sorting order based on last name and then first name.\nAction: Python REPL\nAction Input: \n```python\ncustomers = [['Harrison', 'Chase'], ['Lang', 'Chain'], ['Dolly', 'Too'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Jen', 'Ayai']]\nsorted_customers = sorted(customers, key=lambda x: (x[1], x[0]))\nsorted_customers\n```","additional_kwargs": {},"example": false}}]],"llm_output": {"token_usage": {"prompt_tokens": 326,"completion_tokens": 129,"total_tokens": 455},"model_name": "gpt-3.5-turbo"}

}

[chain/end] [1:chain:AgentExecutor > 2:chain:LLMChain] [4.96s] Exiting Chain run with output:

{"text": "I can use the `sorted()` function to sort the list of customers. I will need to provide a key function that specifies the sorting order based on last name and then first name.\nAction: Python REPL\nAction Input: \n```python\ncustomers = [['Harrison', 'Chase'], ['Lang', 'Chain'], ['Dolly', 'Too'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Jen', 'Ayai']]\nsorted_customers = sorted(customers, key=lambda x: (x[1], x[0]))\nsorted_customers\n```"

}

[tool/start] [1:chain:AgentExecutor > 4:tool:Python REPL] Entering Tool run with input:

"```python

customers = [['Harrison', 'Chase'], ['Lang', 'Chain'], ['Dolly', 'Too'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Jen', 'Ayai']]

sorted_customers = sorted(customers, key=lambda x: (x[1], x[0]))

sorted_customers

```"

[tool/end] [1:chain:AgentExecutor > 4:tool:Python REPL] [0.383ms] Exiting Tool run with output:

""

[chain/start] [1:chain:AgentExecutor > 5:chain:LLMChain] Entering Chain run with input:

{"input": "Sort these customers by last name and then first name and print the output: [['Harrison', 'Chase'], ['Lang', 'Chain'], ['Dolly', 'Too'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Jen', 'Ayai']]","agent_scratchpad": "I can use the `sorted()` function to sort the list of customers. I will need to provide a key function that specifies the sorting order based on last name and then first name.\nAction: Python REPL\nAction Input: \n```python\ncustomers = [['Harrison', 'Chase'], ['Lang', 'Chain'], ['Dolly', 'Too'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Jen', 'Ayai']]\nsorted_customers = sorted(customers, key=lambda x: (x[1], x[0]))\nsorted_customers\n```\nObservation: \nThought:","stop": ["\nObservation:","\n\tObservation:"]

}

[llm/start] [1:chain:AgentExecutor > 5:chain:LLMChain > 6:llm:ChatOpenAI] Entering LLM run with input:

{"prompts": ["Human: You are an agent designed to write and execute python code to answer questions.\nYou have access to a python REPL, which you can use to execute python code.\nIf you get an error, debug your code and try again.\nOnly use the output of your code to answer the question. \nYou might know the answer without running any code, but you should still run the code to get the answer.\nIf it does not seem like you can write code to answer the question, just return \"I don't know\" as the answer.\n\n\nPython REPL: A Python shell. Use this to execute python commands. Input should be a valid python command. If you want to see the output of a value, you should print it out with `print(...)`.\n\nUse the following format:\n\nQuestion: the input question you must answer\nThought: you should always think about what to do\nAction: the action to take, should be one of [Python REPL]\nAction Input: the input to the action\nObservation: the result of the action\n... (this Thought/Action/Action Input/Observation can repeat N times)\nThought: I now know the final answer\nFinal Answer: the final answer to the original input question\n\nBegin!\n\nQuestion: Sort these customers by last name and then first name and print the output: [['Harrison', 'Chase'], ['Lang', 'Chain'], ['Dolly', 'Too'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Jen', 'Ayai']]\nThought:I can use the `sorted()` function to sort the list of customers. I will need to provide a key function that specifies the sorting order based on last name and then first name.\nAction: Python REPL\nAction Input: \n```python\ncustomers = [['Harrison', 'Chase'], ['Lang', 'Chain'], ['Dolly', 'Too'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Jen', 'Ayai']]\nsorted_customers = sorted(customers, key=lambda x: (x[1], x[0]))\nsorted_customers\n```\nObservation: \nThought:"]

}

[llm/end] [1:chain:AgentExecutor > 5:chain:LLMChain > 6:llm:ChatOpenAI] [2.63s] Exiting LLM run with output:

{"generations": [[{"text": "The customers have been sorted by last name and then first name.\nFinal Answer: [['Jen', 'Ayai'], ['Harrison', 'Chase'], ['Lang', 'Chain'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Dolly', 'Too']]","generation_info": null,"message": {"content": "The customers have been sorted by last name and then first name.\nFinal Answer: [['Jen', 'Ayai'], ['Harrison', 'Chase'], ['Lang', 'Chain'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Dolly', 'Too']]","additional_kwargs": {},"example": false}}]],"llm_output": {"token_usage": {"prompt_tokens": 460,"completion_tokens": 67,"total_tokens": 527},"model_name": "gpt-3.5-turbo"}

}

[chain/end] [1:chain:AgentExecutor > 5:chain:LLMChain] [2.63s] Exiting Chain run with output:

{"text": "The customers have been sorted by last name and then first name.\nFinal Answer: [['Jen', 'Ayai'], ['Harrison', 'Chase'], ['Lang', 'Chain'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Dolly', 'Too']]"

}

[chain/end] [1:chain:AgentExecutor] [7.59s] Exiting Chain run with output:

{"output": "[['Jen', 'Ayai'], ['Harrison', 'Chase'], ['Lang', 'Chain'], ['Elle', 'Elem'], ['Geoff', 'Fusion'], ['Trance', 'Former'], ['Dolly', 'Too']]"

}

agent_scratchpad 参数的值是之前的输出和工具输出的组合。把这部分信息喂给模型,是让模型了解之前发生了什么,并利用这部分信息推理出下一步的动作。

创建自定义代理

代理最大的优势是可以将其链接到自己的信息来源,自己的API和自己的数据等。

from langchain.agents import tool

from datetime import date@tool

def time(text: str) -> str:"""Returns todays date, use this for any \questions related to knowing todays date. \The input should always be an empty string, \and this function will always return todays \date - any date mathmatics should occur \outside this function."""return str(date.today())agent= initialize_agent(tools + [time], llm, agent=AgentType.CHAT_ZERO_SHOT_REACT_DESCRIPTION,handle_parsing_errors=True,verbose = True)

添加 @tool 装饰器将自定义的方法转换为langchain可以使用的工具。

try:result = agent("whats the date today?")

except: print("exception on external access")

输出:

> Entering new AgentExecutor chain...

Question: What's the date today?

Thought: I can use the `time` tool to get the current date.

Action:

```

{"action": "time","action_input": ""

}

```

Observation: 2023-07-02

Thought:I now know the final answer.

Final Answer: The date today is 2023-07-02.> Finished chain.

8. Summary

本课程介绍了各种应用实例,包括处理客户评论,构建一个基于文档问答的应用,使用LLM决定何时调用外部工具,来回答复杂的问题等。

提示词模板,解析器,模型记忆,思维推理链,模型评估,模型代理。

合。把这部分信息喂给模型,是让模型了解之前发生了什么,并利用这部分信息推理出下一步的动作。

创建自定义代理

代理最大的优势是可以将其链接到自己的信息来源,自己的API和自己的数据等。

from langchain.agents import tool

from datetime import date@tool

def time(text: str) -> str:"""Returns todays date, use this for any \questions related to knowing todays date. \The input should always be an empty string, \and this function will always return todays \date - any date mathmatics should occur \outside this function."""return str(date.today())agent= initialize_agent(tools + [time], llm, agent=AgentType.CHAT_ZERO_SHOT_REACT_DESCRIPTION,handle_parsing_errors=True,verbose = True)

添加 @tool 装饰器将自定义的方法转换为langchain可以使用的工具。

try:result = agent("whats the date today?")

except: print("exception on external access")

输出:

> Entering new AgentExecutor chain...

Question: What's the date today?

Thought: I can use the `time` tool to get the current date.

Action:

```

{"action": "time","action_input": ""

}

```

Observation: 2023-07-02

Thought:I now know the final answer.

Final Answer: The date today is 2023-07-02.> Finished chain.

8. Summary

本课程介绍了各种应用实例,包括处理客户评论,构建一个基于文档问答的应用,使用LLM决定何时调用外部工具,来回答复杂的问题等。