目录

功能描述

效果展示

代码实现

前端代码

后端代码

routers =》users.js

routers =》 index.js

app.js

功能描述

此案例是在上一个案例【FFmpeg的简单使用【Windows】--- 视频混剪+添加背景音乐-CSDN博客】的基础上的进一步完善,可以先去看上一个案例然后再看这一个,这些案例都是每次在上一个的基础上加一点功能。

在背景音乐区域先点击【选择文件】按钮上传生成视频的背景音乐素材;

然后在视频区域点击【选择文件】按钮选择要混剪的视频素材,最多可选择10个;

然后可以在文本框中输入你想要生成多长时间的视频,此处我给的默认值是 10s 即你要是不修改的话就是默认生成 10s 的视频;

最后点击【开始处理】按钮,此时会先将选择的视频素材上传到服务器,然后将视频按照指定的时间进行混剪并融合背景音乐。

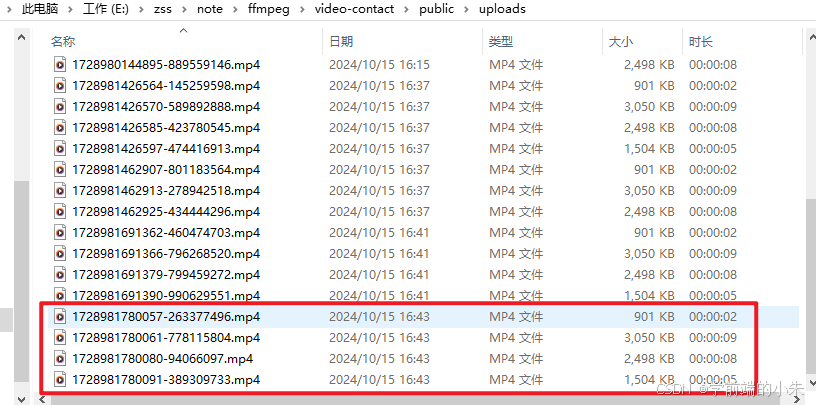

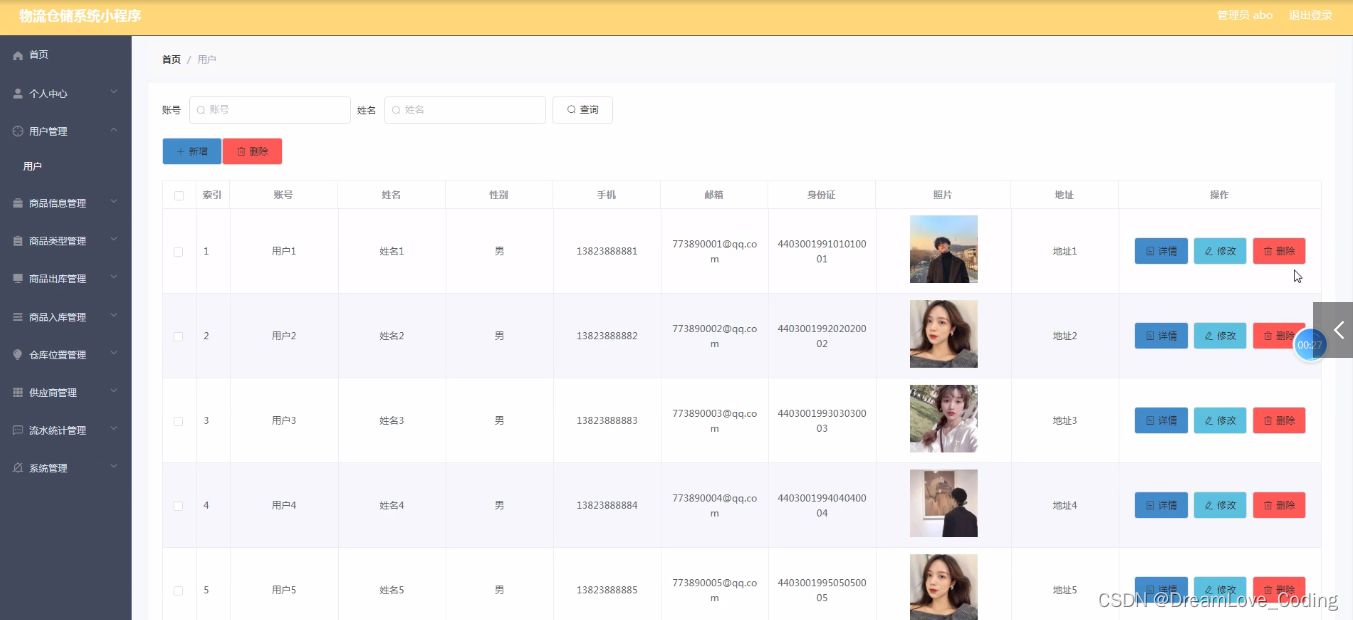

效果展示

代码实现

说明:

前端代码是使用vue编写的。

后端接口的代码是使用nodejs进行编写的。

前端代码

<template><div id="app"><!-- 显示上传的音频 --><div><h2>上传的背景音乐</h2><audiov-for="audio in uploadedaudios":key="audio.src":src="audio.src"controlsstyle="width: 300px"></audio></div><!-- 上传视频音频 --><input type="file" @change="uploadaudio" accept="audio/*" /><hr /><!-- 显示上传的视频 --><div><h2>将要处理的视频</h2><videov-for="video in uploadedVideos":key="video.src":src="video.src"controlsstyle="width: 120px"></video></div><!-- 上传视频按钮 --><input type="file" @change="uploadVideo" multiple accept="video/*" /><hr /><h1>设置输出视频长度</h1><input type="number" v-model="timer" class="inputStyle" /><hr /><!-- 显示处理后的视频 --><div><h2>已处理后的视频</h2><videov-for="video in processedVideos":key="video.src":src="video.src"controlsstyle="width: 120px"></video></div><button @click="processVideos">开始处理</button></div>

</template><script setup>

import axios from "axios";

import { ref } from "vue";const uploadedaudios = ref([]);

const processedAudios = ref([]);

let audioIndex = 0;

const uploadaudio = async (e) => {const files = e.target.files;for (let i = 0; i < files.length; i++) {const file = files[i];const audioSrc = URL.createObjectURL(file);uploadedaudios.value = [{ id: audioIndex++, src: audioSrc, file }];}await processAudio();

};

// 上传音频

const processAudio = async () => {const formData = new FormData();for (const audio of uploadedaudios.value) {formData.append("audio", audio.file); // 使用实际的文件对象}try {const response = await axios.post("http://localhost:3000/user/single/audio",formData,{headers: {"Content-Type": "multipart/form-data",},});const processedVideoSrc = response.data.path;processedAudios.value = [{id: audioIndex++,src: processedVideoSrc,},];} catch (error) {console.error("音频上传失败:", error);}

};const uploadedVideos = ref([]);

const processedVideos = ref([]);

let videoIndex = 0;const uploadVideo = async (e) => {const files = e.target.files;for (let i = 0; i < files.length; i++) {const file = files[i];const videoSrc = URL.createObjectURL(file);uploadedVideos.value.push({ id: videoIndex++, src: videoSrc, file });}

};

const timer = ref(10);const processVideos = async () => {const formData = new FormData();formData.append("audioPath", processedAudios.value[0].src);formData.append("timer", timer.value);for (const video of uploadedVideos.value) {formData.append("videos", video.file); // 使用实际的文件对象}try {const response = await axios.post("http://localhost:3000/user/process",formData,{headers: {"Content-Type": "multipart/form-data",},});const processedVideoSrc = response.data.path;processedVideos.value.push({id: videoIndex++,src: "http://localhost:3000/" + processedVideoSrc,});} catch (error) {console.error("视频处理失败:", error);}

};

</script>

<style lang="scss" scoped>

.inputStyle {padding-left: 20px;font-size: 20px;line-height: 2;border-radius: 20px;border: 1px solid #ccc;

}

</style>后端代码

说明:

此案例的核心就是针对于视频的输出长度的问题。

我在接口中书写的视频混剪的逻辑是每个视频中抽取的素材都是等长的,这就涉及到一个问题,将时间平均(segmentLength)到每个素材上的时候,有可能素材视频的长度(length)要小于avaTime,这样的话就会导致从这样的素材中随机抽取视频片段的时候有问题。我的解决方案是这样的:

首先对视频片段进行初始化的抽取,如果segmentLength>length的时候,就将整个视频作为抽取的片段传入,如果segmentLength<length的时候再进行从该素材中随机抽取指定的视频片段。

当初始化完毕之后发现初始化分配之后的视频长度(totalLength)<设置的输出视频长度(timer),则通过不断从剩余的视频素材中随机选择片段来填补剩余的时间,直到总长度达到目标长度为止。每次循环都会计算剩余需要填补的时间,并从随机选择的视频素材中截取一段合适的长度。

routers =》users.js

var express = require('express');

var router = express.Router();

const multer = require('multer');

const ffmpeg = require('fluent-ffmpeg');

const path = require('path');

const { spawn } = require('child_process')

// 视频

const upload = multer({dest: 'public/uploads/',storage: multer.diskStorage({destination: function (req, file, cb) {cb(null, 'public/uploads'); // 文件保存的目录},filename: function (req, file, cb) {// 提取原始文件的扩展名const ext = path.extname(file.originalname).toLowerCase(); // 获取文件扩展名,并转换为小写// 生成唯一文件名,并加上扩展名const uniqueSuffix = Date.now() + '-' + Math.round(Math.random() * 1E9);const fileName = uniqueSuffix + ext; // 新文件名cb(null, fileName); // 文件名}})

});

// 音频

const uploadVoice = multer({dest: 'public/uploadVoice/',storage: multer.diskStorage({destination: function (req, file, cb) {cb(null, 'public/uploadVoice'); // 文件保存的目录},filename: function (req, file, cb) {// 提取原始文件的扩展名const ext = path.extname(file.originalname).toLowerCase(); // 获取文件扩展名,并转换为小写// 生成唯一文件名,并加上扩展名const uniqueSuffix = Date.now() + '-' + Math.round(Math.random() * 1E9);const fileName = uniqueSuffix + ext; // 新文件名cb(null, fileName); // 文件名}})

});const fs = require('fs');// 处理文件上传

router.post('/upload', upload.single('video'), (req, res) => {const videoPath = req.file.path;const originalName = req.file.originalname;const filePath = path.join('uploads', originalName);fs.rename(videoPath, filePath, (err) => {if (err) {console.error(err);return res.status(500).send("Failed to move file.");}res.json({ message: 'File uploaded successfully.', path: filePath });});

});// 处理单个视频文件

router.post('/single/process', upload.single('video'), (req, res) => {console.log(req.file)const videoPath = req.file.path;// 使用filename进行拼接是为了防止视频被覆盖const outputPath = `public/processed/reversed_${req.file.filename}`;ffmpeg().input(videoPath).outputOptions(['-vf reverse'// 反转视频帧顺序]).output(outputPath).on('end', () => {res.json({ message: 'Video processed successfully.', path: outputPath.replace('public', '') });}).on('error', (err) => {console.log(err)res.status(500).json({ error: 'An error occurred while processing the video.' });}).run();

});// 处理多个视频文件上传

router.post('/process', upload.array('videos', 10), (req, res) => {// 要添加的背景音频const audioPath = path.join(path.dirname(__filename).replace('routes', 'public'), req.body.audioPath)//要生成多长时间的视频const { timer } = req.body// 格式化上传的音频文件的路径const videoPaths = req.files.map(file => path.join(path.dirname(__filename).replace('routes', 'public/uploads'), file.filename));// 输出文件路径const outputPath = path.join('public/processed', 'merged_video.mp4');// 要合并的视频片段文件const concatFilePath = path.resolve('public', 'concat.txt').replace(/\\/g, '/');//绝对路径// 创建 processed 目录(如果不存在)if (!fs.existsSync("public/processed")) {fs.mkdirSync("public/processed");}// 计算每个视频的长度const videoLengths = videoPaths.map(videoPath => {return new Promise((resolve, reject) => {ffmpeg.ffprobe(videoPath, (err, metadata) => {if (err) {reject(err);} else {resolve(parseFloat(metadata.format.duration));}});});});// 等待所有视频长度计算完成Promise.all(videoLengths).then(lengths => {console.log('lengths', lengths)// 构建 concat.txt 文件内容let concatFileContent = '';// 定义一个函数来随机选择视频片段function getRandomSegment(videoPath, length, segmentLength) {// 如果该素材的长度小于截取的长度,则直接返回整个视频素材if (segmentLength >= length) {return {videoPath,startTime: 0,endTime: length};}const startTime = Math.floor(Math.random() * (length - segmentLength));return {videoPath,startTime,endTime: startTime + segmentLength};}// 随机选择视频片段const segments = [];let totalLength = 0;// 初始分配for (let i = 0; i < lengths.length; i++) {const videoPath = videoPaths[i];const length = lengths[i];const segmentLength = Math.min(timer / lengths.length, length);const segment = getRandomSegment(videoPath, length, segmentLength);segments.push(segment);totalLength += (segment.endTime - segment.startTime);}console.log("初始化分配之后的视频长度", totalLength)/* 这段代码的主要作用是在初始分配后,如果总长度 totalLength 小于目标长度 targetLength,则通过不断从剩余的视频素材中随机选择片段来填补剩余的时间,直到总长度达到目标长度为止。每次循环都会计算剩余需要填补的时间,并从随机选择的视频素材中截取一段合适的长度。*/// 如果总长度小于目标长度,则从剩余素材中继续选取随机片段while (totalLength < timer) {// 计算还需要多少时间才能达到目标长度const remainingTime = timer - totalLength;// 从素材路径数组中随机选择一个视频素材的索引const videoIndex = Math.floor(Math.random() * videoPaths.length);// 根据随机选择的索引,获取对应的视频路径和长度const videoPath = videoPaths[videoIndex];const length = lengths[videoIndex];// 确定本次需要截取的长度// 这个长度不能超过剩余需要填补的时间,也不能超过素材本身的长度,因此选取两者之中的最小值const segmentLength = Math.min(remainingTime, length);// 生成新的视频片段const segment = getRandomSegment(videoPath, length, segmentLength);// 将新生成的视频片段对象添加到片段数组中segments.push(segment);// 更新总长度totalLength += (segment.endTime - segment.startTime);}// 打乱视频片段的顺序function shuffleArray(array) {for (let i = array.length - 1; i > 0; i--) {const j = Math.floor(Math.random() * (i + 1));[array[i], array[j]] = [array[j], array[i]];}return array;}shuffleArray(segments);// 构建 concat.txt 文件内容segments.forEach(segment => {concatFileContent += `file '${segment.videoPath.replace(/\\/g, '/')}'\n`;concatFileContent += `inpoint ${segment.startTime}\n`;concatFileContent += `outpoint ${segment.endTime}\n`;});fs.writeFileSync(concatFilePath, concatFileContent, 'utf8');// 获取视频总时长const totalVideoDuration = segments.reduce((acc, segment) => acc + (segment.endTime - segment.startTime), 0);console.log("最终要输出的视频总长度为", totalVideoDuration)// 获取音频文件的长度const getAudioDuration = (filePath) => {return new Promise((resolve, reject) => {const ffprobe = spawn('ffprobe', ['-v', 'error','-show_entries', 'format=duration','-of', 'default=noprint_wrappers=1:nokey=1',filePath]);let duration = '';ffprobe.stdout.on('data', (data) => {duration += data.toString();});ffprobe.stderr.on('data', (data) => {console.error(`ffprobe stderr: ${data}`);reject(new Error(`Failed to get audio duration`));});ffprobe.on('close', (code) => {if (code !== 0) {reject(new Error(`FFprobe process exited with code ${code}`));} else {resolve(parseFloat(duration.trim()));}});});};getAudioDuration(audioPath).then(audioDuration => {// 计算音频循环次数const loopCount = Math.floor(totalVideoDuration / audioDuration);// 使用 ffmpeg 合并多个视频ffmpeg().input(audioPath) // 添加音频文件作为输入.inputOptions([`-stream_loop ${loopCount}`, // 设置音频循环次数]).input(concatFilePath).inputOptions(['-f concat','-safe 0']).output(outputPath).outputOptions(['-y', // 覆盖已存在的输出文件'-c:v libx264', // 视频编码器'-preset veryfast', // 编码速度'-crf 23', // 视频质量控制'-map 0:a', // 选择第一个输入(即音频文件)的音频流'-map 1:v', // 选择所有输入文件的视频流(如果有)'-c:a aac', // 音频编码器'-b:a 128k', // 音频比特率'-t', totalVideoDuration.toFixed(2), // 设置输出文件的总时长为视频的时长]).on('end', () => {const processedVideoSrc = `/processed/merged_video.mp4`;console.log(`Processed video saved at: ${outputPath}`);res.json({ message: 'Videos processed and merged successfully.', path: processedVideoSrc });}).on('error', (err) => {console.error(`Error processing videos: ${err}`);console.error('FFmpeg stderr:', err.stderr);res.status(500).json({ error: 'An error occurred while processing the videos.' });}).run();}).catch(err => {console.error(`Error getting audio duration: ${err}`);res.status(500).json({ error: 'An error occurred while processing the videos.' });});}).catch(err => {console.error(`Error calculating video lengths: ${err}`);res.status(500).json({ error: 'An error occurred while processing the videos.' });});// 写入 concat.txt 文件const concatFileContent = videoPaths.map(p => `file '${p.replace(/\\/g, '/')}'`).join('\n');fs.writeFileSync(concatFilePath, concatFileContent, 'utf8');

});// 处理单个音频文件

router.post('/single/audio', uploadVoice.single('audio'), (req, res) => {const audioPath = req.file.path;console.log(req.file)res.send({msg: 'ok',path: audioPath.replace('public', '').replace(/\\/g, '/')})

})

module.exports = router;

routers =》 index.js

var express = require('express');

var router = express.Router();router.use('/user', require('./users'));module.exports = router;

app.js

var createError = require('http-errors');

var express = require('express');

var path = require('path');

var cookieParser = require('cookie-parser');

var logger = require('morgan');var indexRouter = require('./routes/index');var app = express();// view engine setup

app.set('views', path.join(__dirname, 'views'));

app.set('view engine', 'jade');app.use(logger('dev'));

app.use(express.json());

app.use(express.urlencoded({ extended: false }));

app.use(cookieParser());

app.use(express.static(path.join(__dirname, 'public')));// 使用cors解决跨域问题

app.use(require('cors')());app.use('/', indexRouter);// catch 404 and forward to error handler

app.use(function (req, res, next) {next(createError(404));

});// error handler

app.use(function (err, req, res, next) {// set locals, only providing error in developmentres.locals.message = err.message;res.locals.error = req.app.get('env') === 'development' ? err : {};// render the error pageres.status(err.status || 500);res.render('error');

});module.exports = app;

![[PHP]__callStatic](https://i-blog.csdnimg.cn/direct/30853d7b8b904d12855a4ec749cb0208.png)