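ELK Deployment

- Prerequisites

- 1. Elasticsearch

- 2. Kibana

- 3. Logstash

Prerequisites

1. Install Docker and docker-compose beforehand.

2. Replace the Docker registry address with mirror sources hosted in mainland China.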

cd /etc/docker

vi daemon.json

# Replace the contents with:

{
  "registry-mirrors": [
    "https://docker.1panel.dev",
    "https://docker.fxxk.dedyn.io",
    "https://docker.xn--6oq72ry9d5zx.cn",
    "https://docker.m.daocloud.io",
    "https://a.ussh.net",
    "https://docker.zhai.cm"
  ]
}

Reference: https://blog.csdn.net/llc580231/article/details/139979603

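The new mirrors take effect once the Docker daemon reloads its configuration; restarting Docker is the simplest way to do that. The following is a minimal sketch, assuming a systemd-based host with sudo access. The function names `render_daemon_json` and `apply_registry_mirrors` are made up for illustration and are not part of Docker.

```shell
#!/bin/sh
# Print a daemon.json body listing the given mirror URLs (one argument per URL).
render_daemon_json() {
  first=1
  printf '{\n  "registry-mirrors": ['
  for url in "$@"; do
    if [ "$first" -eq 1 ]; then first=0; else printf ','; fi
    printf '\n    "%s"' "$url"
  done
  printf '\n  ]\n}\n'
}

# Write the file, restart Docker, and show which mirrors the daemon picked up.
# Assumption: systemd and sudo are available.
# Note: this overwrites any existing /etc/docker/daemon.json.
apply_registry_mirrors() {
  render_daemon_json "$@" | sudo tee /etc/docker/daemon.json >/dev/null || return 1
  sudo systemctl daemon-reload
  sudo systemctl restart docker || return 1
  docker info 2>/dev/null | grep -A 6 'Registry Mirrors'
}
```

For example, `apply_registry_mirrors https://docker.m.daocloud.io https://docker.1panel.dev` would write both mirrors and print the "Registry Mirrors" section of `docker info` so the result can be checked by eye.
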
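3. Write a docker-compose.yml that defines a new network, so that containers can reach each other by container name.

version: '2.1'

services:
  # Service definitions are added in the sections below.

# Network configuration
networks:
  elastic_net:
    driver: bridge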

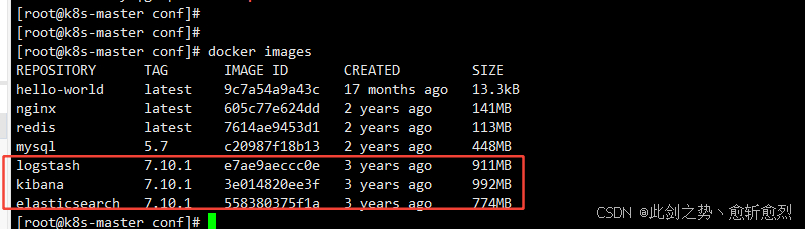

4. Pull the required images in advance.

docker pull elasticsearch:7.10.1

docker pull kibana:7.10.1

docker pull logstash:7.10.1

docker images

1. Elasticsearch

# (requires the root account)

vim /etc/sysctl.conf

# (append this line at the end of the file)

vm.max_map_count=262144

# Apply the change

sysctl -p
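Before starting the container it can help to confirm that the kernel setting really changed. A small sketch of such a check follows; the helper names `max_map_count_ok` and `check_max_map_count` are hypothetical, and the threshold is the value used above.

```shell
#!/bin/sh
# Minimum vm.max_map_count that this guide configures for Elasticsearch.
REQUIRED_MAX_MAP_COUNT=262144

# Succeeds when the given value meets the minimum.
max_map_count_ok() {
  [ "$1" -ge "$REQUIRED_MAX_MAP_COUNT" ]
}

# Read the live kernel value and report whether it is high enough.
check_max_map_count() {
  current="$(cat /proc/sys/vm/max_map_count)"
  if max_map_count_ok "$current"; then
    echo "vm.max_map_count=$current (ok)"
  else
    echo "vm.max_map_count=$current (too low, need $REQUIRED_MAX_MAP_COUNT)" >&2
    return 1
  fi
}
```

Calling `check_max_map_count` after `sysctl -p` prints the current value and returns a non-zero status if it is still below the minimum.
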

Add the es service to docker-compose.yml:

  es:
    image: elasticsearch:7.10.1
    container_name: es
    environment:
      - "discovery.type=single-node"
      - "TZ=Asia/Shanghai"
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /home/es:/usr/share/elasticsearch/data
    ports:
      - 9200:9200
    networks:
      - elastic_net

Run the command to create the container:

./docker-compose -f conf/docker-compose.yml up --build -d es

Accessing port 9200 then succeeds.
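Elasticsearch usually needs some time after the container starts before it answers on port 9200. The check can be scripted roughly as follows, assuming `curl` is installed, the port is published on localhost, and no authentication is enabled. The helper names `health_status` and `wait_for_es` are illustrative only.

```shell
#!/bin/sh
# Extract the "status" field (green, yellow, or red) from a
# _cluster/health JSON response read on stdin.
health_status() {
  sed -n 's/.*"status" *: *"\([a-z]*\)".*/\1/p'
}

# Poll Elasticsearch until it reports green or yellow, or give up.
# $1: base URL (default http://localhost:9200), $2: max attempts (default 30)
wait_for_es() {
  url="${1:-http://localhost:9200}"
  tries="${2:-30}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    status="$(curl -s "$url/_cluster/health" | health_status)"
    case "$status" in
      green|yellow)
        echo "Elasticsearch is up (status: $status)"
        return 0
        ;;
    esac
    i=$((i + 1))
    sleep 2
  done
  echo "Elasticsearch did not become ready at $url" >&2
  return 1
}
```

A yellow status is accepted here because a single-node cluster cannot allocate replica shards, so it commonly reports yellow once indices exist.
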

2. Kibana

Add the kibana service to docker-compose.yml:

  kibana:
    container_name: kibana
    image: kibana:7.10.1
    restart: unless-stopped
    environment:
      - "TZ=Asia/Shanghai"
      - "I18N_LOCALE=zh-CN"
      - "ELASTICSEARCH_HOSTS=http://es:9200"
    ports:
      - 5601:5601
    volumes:
      - /home/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml
    networks:
      - elastic_net

Create the file /home/kibana/config/kibana.yml:

server.name: kibana

# Kibana host address; 0.0.0.0 listens on all IPs

server.host: "0.0.0.0"

# URL Kibana uses to reach es; es is the container name

elasticsearch.hosts: [ "http://es:9200" ]

# The credentials set when a password was configured in Elasticsearch; omit them if no password is set

elasticsearch.username: "elastic"

elasticsearch.password: "\"123456\""

# Show container-based (cgroup) CPU statistics for Elasticsearch in the monitoring UI

xpack.monitoring.ui.container.elasticsearch.enabled: true

# Set the UI language to Chinese

i18n.locale: "zh-CN"

Run the command to create the container:

./docker-compose -f conf/docker-compose.yml up --build -d kibana

Accessing port 5601 then succeeds.

3. Logstash

Add the logstash service to docker-compose.yml:

  logstash:
    container_name: logstash
    image: logstash:7.10.1
    ports:
      - 5044:5044
    volumes:
      - /home/logstash/pipeline:/usr/share/logstash/pipeline
      - /home/logstash/config:/usr/share/logstash/config
      - /home/nginx/log:/home/nginx/log
    networks:
      - elastic_net

Create the directories /home/logstash/config and /home/logstash/pipeline.

In /home/logstash/config, create the configuration files pipelines.yml and logstash.yml.

# pipelines.yml

- pipeline.id: nginx
  path.config: "/usr/share/logstash/pipeline/nginx_log.conf"

# logstash.yml (es is the container name)

http.host: "0.0.0.0"

xpack.monitoring.elasticsearch.hosts: [ "http://es:9200" ]

In /home/logstash/pipeline, create the file nginx_log.conf.

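# Configuration

input {
  file {
    type => "messages_log"
    path => "/home/nginx/log/error.log"
  }
  file {
    type => "secure_log"
    path => "/home/nginx/log/access.log"
  }
}

output {
  if [type] == "messages_log" {
    elasticsearch {
      hosts => ["http://es:9200"]
      index => "messages_log_%{+YYYY-MM-dd}"
      user => "elastic"       # if authentication is required, make sure the username is correct
      password => "123456"    # if authentication is required, make sure the password is correct
    }
  }
  if [type] == "secure_log" {
    elasticsearch {
      hosts => ["http://es:9200"]
      index => "secure_log_%{+YYYY-MM-dd}"
      user => "elastic"       # if authentication is required, make sure the username is correct
      password => "123456"    # if authentication is required, make sure the password is correct
    }
  }
}

Run the command to create the container:

./docker-compose -f conf/docker-compose.yml up --build -d logstash

The corresponding indices in es can then be seen in Kibana.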
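The indices can also be checked from the command line. Keep in mind that the Logstash file input tails files by default, so an index appears only after new lines have been written to the corresponding log. The sketch below assumes `curl` is installed, port 9200 is published on localhost, and authentication is disabled; `index_for_today` and `list_log_indices` are hypothetical helper names.

```shell
#!/bin/sh
# Build today's index name for a given prefix, in the form <prefix>_YYYY-MM-DD.
# Logstash formats %{+YYYY-MM-dd} from the event's @timestamp, which is in UTC,
# so the date is computed in UTC here as well.
index_for_today() {
  printf '%s_%s\n' "$1" "$(date -u +%Y-%m-%d)"
}

# List the indices created by the nginx pipeline.
# $1: Elasticsearch base URL (default http://localhost:9200)
list_log_indices() {
  url="${1:-http://localhost:9200}"
  curl -s "$url/_cat/indices/messages_log_*,secure_log_*?v"
}
```

Running `list_log_indices` prints one row per matching index, and `index_for_today secure_log` gives the name to look for among them.
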

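Deployment itself is not very hard, but this setup does not yet put Logstash's real capabilities to use. The important part is the pipeline configuration files (*.conf) under /logstash/pipeline, which define the input, output, and filter operations: the processing logic has to be written well before messy logs can be turned into standardized ones. Writing these conf files feels difficult and requires learning the relevant syntax.

Official reference: https://www.elastic.co/guide/en/logstash/current/config-examples.html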
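As a starting point for the filter stage, here is a minimal sketch of what such processing logic could look like. It assumes nginx writes access.log in its default "combined" format, which the stock grok pattern COMBINEDAPACHELOG is expected to match. The function name `write_example_filter` and the output path are hypothetical; this is not the configuration used in the deployment above.

```shell
#!/bin/sh
# Write an example Logstash filter block to the path given as $1.
# It targets events of type "secure_log", i.e. the nginx access log above.
write_example_filter() {
  cat > "$1" <<'EOF'
filter {
  if [type] == "secure_log" {
    # Split the raw line into fields such as clientip, verb, request, response.
    grok {
      match => { "message" => "%{COMBINEDAPACHELOG}" }
    }
    # Use the time recorded in the log line as the event's @timestamp.
    date {
      match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]
    }
  }
}
EOF
}
```

To try it, the generated filter block would be placed between the input and output blocks of nginx_log.conf, followed by a restart of the logstash container. Lines that do not match the pattern are tagged `_grokparsefailure` by grok, which makes format mismatches easy to spot in Kibana.
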