Poly Kernel Inception Network for Remote Sensing Detection

Paper: https://arxiv.org/pdf/2403.06258


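Main problems:

- Large variation in object scale: objects in remote sensing images span a wide range of sizes, from large objects (e.g. soccer fields) to small ones (e.g. vehicles).
- Diverse contextual information: recognizing an object accurately depends not only on its appearance but also on its surroundings, i.e. its context.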

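Solution:

- Multi-scale convolution: PKINet places several depthwise convolution kernels of different sizes (without dilation) in parallel to extract texture features at multiple scales and capture local context.
- Context Anchor Attention (CAA): CAA applies local average pooling (7×7 with stride 1 in the code below) and a 1×1 convolution, followed by horizontal (1×k) and vertical (k×1) depthwise strip convolutions. This models relationships between distant pixels and enhances features in the central region, thereby capturing long-range context.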
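A minimal PyTorch sketch of the multi-scale convolution idea follows. It is a simplified illustration, not the authors' PKI module: the class name `MultiScaleDWConv`, the kernel sizes (3, 5, 7, 9, 11), and the choice to sum the parallel branch outputs and fuse them with a 1×1 convolution are assumptions made here. Every branch is a depthwise convolution (`groups=channels`) with padding `k // 2` and no dilation, so each branch preserves the spatial size and the outputs can be added directly.

```python
import torch
import torch.nn as nn


class MultiScaleDWConv(nn.Module):
    """Hypothetical sketch of parallel multi-scale depthwise convolution.

    Kernel sizes and the sum-then-1x1 fusion are assumptions for illustration,
    not the exact PKINet module.
    """

    def __init__(self, channels: int, kernel_sizes=(3, 5, 7, 9, 11)):
        super().__init__()
        # One depthwise conv per kernel size; padding k // 2 keeps H and W unchanged
        self.branches = nn.ModuleList([
            nn.Conv2d(channels, channels, k, padding=k // 2, groups=channels)
            for k in kernel_sizes
        ])
        # Pointwise 1x1 conv mixes information across channels after fusion
        self.pw = nn.Conv2d(channels, channels, 1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Sum the outputs of all parallel branches (no dilation in any branch)
        out = sum(branch(x) for branch in self.branches)
        return self.pw(out)


if __name__ == "__main__":
    x = torch.randn(1, 32, 64, 64)
    y = MultiScaleDWConv(32)(x)
    print(y.shape)  # torch.Size([1, 32, 64, 64])
```

Because each branch is depthwise, adding a larger kernel costs only C·k² extra weights (bias ignored) rather than C²·k² for a standard convolution, which is what keeps large kernels affordable.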

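Applicable tasks:

- Remote sensing object detection: PKINet can be combined with various oriented object detectors (e.g. Oriented R-CNN) to produce the final detection results on remote sensing images.
- General object detection: PKINet also performs well on the COCO 2017 dataset, indicating that it can serve as a general-purpose backbone beyond remote sensing.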

Main advantages:

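- Higher accuracy: PKINet achieves state-of-the-art performance on several remote sensing object detection benchmarks and is robust to variation in object scale.
- Lightweight: thanks to its deliberate use of depthwise and 1D strip convolutions, PKINet has fewer parameters and higher computational efficiency than comparable methods.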
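The parameter savings behind the "lightweight" claim can be checked with simple arithmetic. For C channels and kernel size k (bias ignored), a standard k×k convolution has C²k² weights, a depthwise k×k convolution has Ck², and a pair of depthwise 1×k and k×1 strip convolutions, as used in CAA, has only 2Ck while still covering a k×k window. The snippet below counts these with PyTorch for C = 32 and k = 11; the values are chosen for illustration and are not figures reported in the paper, and `num_params` is a helper defined here.

```python
import torch
import torch.nn as nn


def num_params(module: nn.Module) -> int:
    """Count trainable parameters of a module (helper defined for this example)."""
    return sum(p.numel() for p in module.parameters() if p.requires_grad)


C, k = 32, 11  # illustrative channels and kernel size (k = 11 matches CAA's default)

# Standard k x k convolution: every output channel sees every input channel
standard = nn.Conv2d(C, C, k, padding=k // 2, bias=False)
# Depthwise k x k convolution: one k x k filter per channel
depthwise = nn.Conv2d(C, C, k, padding=k // 2, groups=C, bias=False)
# Depthwise 1 x k followed by k x 1 strip convolutions (like CAA's h_conv / v_conv)
strips = nn.Sequential(
    nn.Conv2d(C, C, (1, k), padding=(0, k // 2), groups=C, bias=False),
    nn.Conv2d(C, C, (k, 1), padding=(k // 2, 0), groups=C, bias=False),
)

print(num_params(standard))   # 123904 = C * C * k * k
print(num_params(depthwise))  # 3872   = C * k * k
print(num_params(strips))     # 704    = 2 * C * k

# Receptive-field check: push a single impulse through the strip pair
with torch.no_grad():
    for conv in strips:
        conv.weight.fill_(1.0)
    impulse = torch.zeros(1, C, 2 * k + 1, 2 * k + 1)
    impulse[:, :, k, k] = 1.0
    response = strips(impulse)
print(int((response[0, 0] != 0).sum()))  # 121 = k * k, i.e. a full k x k window
```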

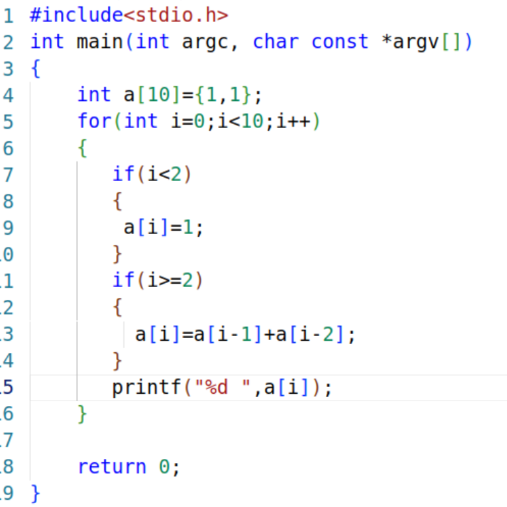

Plug-and-play code:

```python
from typing import Optional, Tuple, Union

import torch
import torch.nn as nn


class ConvModule(nn.Module):
    """Conv2d followed by an optional normalization layer and an optional activation."""

    def __init__(self,
                 in_channels: int,
                 out_channels: int,
                 kernel_size: Union[int, Tuple[int, int]],
                 stride: int = 1,
                 padding: Union[int, Tuple[int, int]] = 0,
                 groups: int = 1,
                 norm_cfg: Optional[dict] = None,
                 act_cfg: Optional[dict] = None):
        super().__init__()
        layers = []
        # Convolution layer (bias is redundant when a normalization layer follows)
        layers.append(nn.Conv2d(in_channels, out_channels, kernel_size, stride, padding,
                                groups=groups, bias=(norm_cfg is None)))
        # Normalization layer
        if norm_cfg:
            layers.append(self._get_norm_layer(out_channels, norm_cfg))
        # Activation layer
        if act_cfg:
            layers.append(self._get_act_layer(act_cfg))
        # Combine all layers
        self.block = nn.Sequential(*layers)

    def forward(self, x):
        return self.block(x)

    def _get_norm_layer(self, num_features, norm_cfg):
        if norm_cfg['type'] == 'BN':
            return nn.BatchNorm2d(num_features,
                                  momentum=norm_cfg.get('momentum', 0.1),
                                  eps=norm_cfg.get('eps', 1e-5))
        # Add more normalization types if needed
        raise NotImplementedError(f"Normalization layer '{norm_cfg['type']}' is not implemented.")

    def _get_act_layer(self, act_cfg):
        if act_cfg['type'] == 'ReLU':
            return nn.ReLU(inplace=True)
        if act_cfg['type'] == 'SiLU':
            return nn.SiLU(inplace=True)
        # Add more activation types if needed
        raise NotImplementedError(f"Activation layer '{act_cfg['type']}' is not implemented.")


class CAA(nn.Module):
    """Context Anchor Attention"""

    def __init__(self,
                 channels: int,
                 h_kernel_size: int = 11,
                 v_kernel_size: int = 11,
                 norm_cfg: Optional[dict] = dict(type='BN', momentum=0.03, eps=0.001),
                 act_cfg: Optional[dict] = dict(type='SiLU')):
        super().__init__()
        # 7x7 average pooling with stride 1 and padding 3 keeps the spatial size
        self.avg_pool = nn.AvgPool2d(7, 1, 3)
        self.conv1 = ConvModule(channels, channels, 1, 1, 0,
                                norm_cfg=norm_cfg, act_cfg=act_cfg)
        # Depthwise 1 x k horizontal strip convolution
        self.h_conv = ConvModule(channels, channels, (1, h_kernel_size), 1,
                                 (0, h_kernel_size // 2), groups=channels,
                                 norm_cfg=None, act_cfg=None)
        # Depthwise k x 1 vertical strip convolution
        self.v_conv = ConvModule(channels, channels, (v_kernel_size, 1), 1,
                                 (v_kernel_size // 2, 0), groups=channels,
                                 norm_cfg=None, act_cfg=None)
        self.conv2 = ConvModule(channels, channels, 1, 1, 0,
                                norm_cfg=norm_cfg, act_cfg=act_cfg)
        self.act = nn.Sigmoid()

    def forward(self, x):
        # Returns a sigmoid attention map with the same shape as x
        attn_factor = self.act(self.conv2(self.v_conv(self.h_conv(self.conv1(self.avg_pool(x))))))
        return attn_factor


# Example usage to print input and output shapes
if __name__ == "__main__":
    x = torch.randn(1, 32, 64, 64)  # input: B C H W
    block = CAA(32)
    output = block(x)
    print(x.size())
    print(output.size())
```
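Note that `CAA.forward` returns only the sigmoid attention map, not reweighted features, so the caller has to apply it. The sketch below shows one possible way: a hypothetical `CAAGated` wrapper built from a stripped-down copy of the CAA layers (BN and SiLU omitted for brevity) that multiplies the input by the map and adds a residual path. The wrapper name, the omission of normalization, and the residual form `x + x * a` are assumptions for illustration, not the authors' code.

```python
import torch
import torch.nn as nn


class CAAGated(nn.Module):
    """Hypothetical wrapper: CAA-style attention applied to its own input.

    The layers mirror CAA (pool, 1x1, 1xk, kx1, 1x1, sigmoid) but omit BN/SiLU.
    """

    def __init__(self, channels: int, k: int = 11):
        super().__init__()
        self.attn = nn.Sequential(
            nn.AvgPool2d(7, 1, 3),             # local 7x7 average, size-preserving
            nn.Conv2d(channels, channels, 1),  # 1x1 conv
            nn.Conv2d(channels, channels, (1, k), padding=(0, k // 2), groups=channels),  # horizontal strip
            nn.Conv2d(channels, channels, (k, 1), padding=(k // 2, 0), groups=channels),  # vertical strip
            nn.Conv2d(channels, channels, 1),  # 1x1 conv
            nn.Sigmoid(),                      # attention map with values in [0, 1]
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        a = self.attn(x)
        # Reweight features with the attention map and keep a residual path
        return x + x * a


if __name__ == "__main__":
    x = torch.randn(1, 32, 64, 64)
    out = CAAGated(32)(x)
    print(out.shape)  # torch.Size([1, 32, 64, 64])
```

The residual keeps the original signal even where the attention map is close to zero; dropping it (returning `x * a`) gives a pure gating variant instead.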