Project repository: GitHub@chunhuizhang/llms_tuning

Contents

- 01 formatting_func and DataCollatorForCompletion in TRL SFTTrainer

- 02 accelerate ddp and trl SFTTrainer

- 03 finetune_llama3_for_RAG

- 04 optimizer: Trainer optimization details (AdamW, grad clip, grad norm, etc.)

- 05 StackLlama, SFT+DPO (code organization, data processing, pipeline)

- Models and data

- Parameters

- Dataset

- Accelerating SFT

- zero3

- accelerate DPO

- DPODataCollatorWithPadding & training

- Tips

01 formatting_func and DataCollatorForCompletion in TRL SFTTrainer

Dataset:

dataset = load_dataset("lucasmccabe-lmi/CodeAlpaca-20k", split="train")

A sample looks like this:

{'instruction': 'Create a function that takes a specific input and produces a specific output using any mathematical operators. Write corresponding code in Python.','input': '','output': 'def f(x):\n """\n Takes a specific input and produces a specific output using any mathematical operators\n """\n return x**2 + 3*x'}

trainer = SFTTrainer(
    model,
    train_dataset=dataset,
    args=SFTConfig(output_dir="/tmp"),
    formatting_func=formatting_prompts_func,
    data_collator=collator,
)

trainer.train()

formatting_func: converts each dataset record into a question-answer prompt.

data_collator: in SFT (prompt-response pairs) the loss is computed only on the response part. Pre-training makes no distinction between prompt and response and computes the next-token loss on every token.

Specifically:

def formatting_prompts_func(example):
    output_texts = []
    for i in range(len(example['instruction'])):
        text = f"### Question: {example['instruction'][i]}\n ### Answer: {example['output'][i]}"
        output_texts.append(text)
    return output_texts

output_texts = formatting_prompts_func(dataset[:2])

output_texts[0]

The formatted samples (that is, after "promptizing"):

### Question: Create a function that takes a specific input and produces a specific output using any mathematical operators. Write corresponding code in Python.
 ### Answer: def f(x):
    """
    Takes a specific input and produces a specific output using any mathematical operators
    """
    return x**2 + 3*x

---

### Question: Generate a unique 8 character string that contains a lowercase letter, an uppercase letter, a numerical digit, and a special character. Write corresponding code in Python.
 ### Answer: import string
import random

def random_password_string():
    characters = string.ascii_letters + string.digits + string.punctuation
    password = ''.join(random.sample(characters, 8))
    return password

if __name__ == '__main__':
    print(random_password_string())

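Next, the data collator. The one commonly used is the built-in DataCollatorForCompletionOnlyLM.

It searches the labels (batch['labels']) for the tokens of response_template and takes the index of the last match as response_token_ids_start_idx. Every label from the start of the sequence through the last token of response_template is then set to -100, so those positions are excluded from the loss. (-100 is the built-in ignore index for the loss; BERT and T5 differ in the details, but it is essentially a mask.)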
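The masking rule described above can be sketched in plain Python. This is a minimal illustration and not TRL's source: the helper names `find_last_subsequence` and `mask_prompt_labels` and the toy token ids are hypothetical, and masking the whole sample when the template is missing is an assumption about the intended behavior.

```python
IGNORE_INDEX = -100  # positions with this label are skipped by the loss


def find_last_subsequence(sequence, pattern):
    """Return the start index of the last occurrence of pattern, or None."""
    last = None
    for start in range(len(sequence) - len(pattern) + 1):
        if sequence[start:start + len(pattern)] == pattern:
            last = start
    return last


def mask_prompt_labels(input_ids, response_template_ids):
    """Copy input_ids into labels and mask everything up to the end of the template."""
    labels = list(input_ids)
    start = find_last_subsequence(input_ids, response_template_ids)
    if start is None:
        # Assumption: without a template match the whole sample is ignored.
        return [IGNORE_INDEX] * len(labels)
    end = start + len(response_template_ids)
    labels[:end] = [IGNORE_INDEX] * end
    return labels


# Toy ids: prompt = [11, 12, 13], template = [7, 8], response = [21, 22, 23]
input_ids = [11, 12, 13, 7, 8, 21, 22, 23]
labels = mask_prompt_labels(input_ids, [7, 8])
print(labels)
```

Only the three response tokens keep their labels, so only they contribute to the loss.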

源码如下:

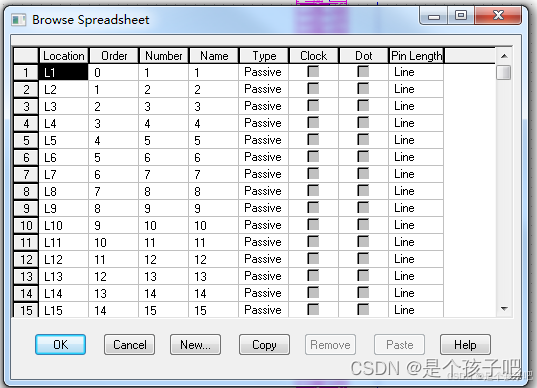

- 第一个参数是

response_template,第二个参数instruction_template(默认为 None)

model = AutoModelForCausalLM.from_pretrained("facebook/opt-350m")

tokenizer = AutoTokenizer.from_pretrained("facebook/opt-350m")response_template = " ### Answer:"

collator = DataCollatorForCompletionOnlyLM(response_template, tokenizer=tokenizer)

02 accelerate ddp and trl SFTTrainer

- accelerate: (accelerate config)

  - backend (many backends are available; ddp, i.e. data parallelism, is the default)

    - default ddp: increases data throughput

      self.accelerator.ddp_handler = DistributedDataParallelKwargs(**kwargs)

    - deepspeed, fsdp (the two popular ones): https://huggingface.co/blog/deepspeed-to-fsdp-and-back

    - megatron-lm

    - fsdp used to have some problems in its parallel handling and lagged behind deepspeed, but those bugs were later fixed and the two are now roughly on par

- accelerate ddp

  - general usage (relatively low-level), requires small code changes;

    - https://huggingface.co/docs/transformers/accelerate

  - with transformers (Trainer) or trl (SFTTrainer): essentially no code changes; run with accelerate launch (whether ddp, deepspeed, or fsdp is used is controlled through the config)

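Basic usage:

accelerate config: interactive command-line configuration (saved by default to default_config.yaml in the Hugging Face cache directory, typically ~/.cache/huggingface/accelerate/; arguments passed to accelerate launch override the values in default_config.yaml)

accelerate launch -h
accelerate launch --num_processes 2 --mixed_precision bf16 training_scripts.py

A simple training script: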

import os

os.environ['http_proxy'] = 'http://127.0.0.1:7890'
os.environ['https_proxy'] = 'http://127.0.0.1:7890'
# os.environ['NCCL_P2P_DISABLE'] = '1'
# os.environ['NCCL_IB_DISABLE'] = '1'
os.environ['WANDB_DISABLED'] = 'true'

from transformers import AutoModelForCausalLM, AutoTokenizer
from datasets import load_dataset
from trl import SFTConfig, SFTTrainer, DataCollatorForCompletionOnlyLM
# from accelerate import Accelerator

import torch
torch.manual_seed(42)

dataset = load_dataset("lucasmccabe-lmi/CodeAlpaca-20k", split="train")

model = AutoModelForCausalLM.from_pretrained(
    "facebook/opt-350m",
    # device_map={"": Accelerator().process_index}
    # device_map={"": 0}
)
tokenizer = AutoTokenizer.from_pretrained("facebook/opt-350m")

def formatting_prompts_func(example):
    output_texts = []
    for i in range(len(example['instruction'])):
        text = f"### Question: {example['instruction'][i]}\n ### Answer: {example['output'][i]}"
        output_texts.append(text)
    return output_texts

response_template = " ### Answer:"
collator = DataCollatorForCompletionOnlyLM(response_template, tokenizer=tokenizer)

args = SFTConfig(
    output_dir="/tmp",
    max_seq_length=512,
    num_train_epochs=2,
    per_device_train_batch_size=4,
    gradient_accumulation_steps=4,
    gradient_checkpointing=True,
)

trainer = SFTTrainer(
    model,
    train_dataset=dataset,
    args=args,
    formatting_func=formatting_prompts_func,
    data_collator=collator,
)

trainer.train()

About SFTConfig:

class SFTConfig(TrainingArguments):

- Inherits from the TrainingArguments class:

  - num_train_epochs: default 3

  - per_device_train_batch_size: default 8

  - per_device_eval_batch_size: default 8

  - gradient_accumulation_steps: default 1

  - dataloader_drop_last: default false

- dataset_text_field: must match a column name of the dataset

- max_seq_length

- output_dir='/tmp'

- packing=True

  - example packing, where multiple short examples are packed in the same input sequence to increase training efficiency.

  - allows multiple shorter sequences to be packed into a single training example, maximizing the use of the model's context window.
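To make the packing idea concrete, here is a minimal sketch, assuming the simplest possible strategy: concatenate tokenized examples with a separator token and cut the stream into fixed-length chunks. The function name `pack_examples` and the toy token ids are hypothetical, and the real implementation in TRL may differ in detail.

```python
def pack_examples(tokenized_examples, seq_length, eos_token_id):
    """Concatenate examples (each followed by EOS) and cut into full chunks."""
    buffer = []
    for ids in tokenized_examples:
        buffer.extend(ids + [eos_token_id])
    # Keep only complete chunks; a trailing partial chunk is dropped.
    return [
        buffer[i:i + seq_length]
        for i in range(0, len(buffer) - seq_length + 1, seq_length)
    ]


# Three short "tokenized" examples packed into sequences of length 4
examples = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]
packed = pack_examples(examples, seq_length=4, eos_token_id=0)
print(packed)
```

Every packed sequence is completely filled, so no compute is spent on padding tokens; the trade-off is that a single sequence can contain pieces of several examples.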

03 finetune_llama3_for_RAG

- finetune Llama3-8B instruct model pipeline

- peft LoRA & bitsandbytes quantization

- RAG financial (chat/QA/instruct) dataset

- Accelerate distributed

- training arguments

- evaluation

import os

os.environ['http_proxy'] = 'http://127.0.0.1:7890'

os.environ['https_proxy'] = 'http://127.0.0.1:7890'

from IPython.display import Image

from textwrap import dedent

Install the required packages:

# !pip install --upgrade trl

# !pip install --upgrade bitsandbytes

import random

from typing import Dict, List

from tqdm import tqdm

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from matplotlib.ticker import PercentFormatter

import seaborn as sns

from sklearn.model_selection import train_test_split

import torch

from torch.utils.data import DataLoader

from datasets import Dataset, load_dataset

from transformers import (
    AutoModelForCausalLM,
    AutoTokenizer,
    BitsAndBytesConfig,
    pipeline,
)
from peft import (
    LoraConfig,
    PeftModel,
    TaskType,
    get_peft_model,
    prepare_model_for_kbit_training,
)
from trl import DataCollatorForCompletionOnlyLM, SFTConfig, SFTTrainer

SEED = 42

def seed_everything(seed: int):
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)

seed_everything(SEED)

Constants:

pad_token = "<|pad|>"

model_id = "meta-llama/Meta-Llama-3-8B-Instruct"

new_model = "Llama-3-8B-Instruct-Finance-RAG"

Model and tokenizer:

quantization_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.bfloat16,
)

# tokenizer
tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=True)

# Not every model's tokenizer supports chat_template!
# When it is unsupported, the chat_template attribute is empty.
print(tokenizer.chat_template)
"""
{% set loop_messages = messages %}{% for message in loop_messages %}{% set content = '<|start_header_id|>' + message['role'] + '<|end_header_id|>'+ message['content'] | trim + '<|eot_id|>' %}{% if loop.index0 == 0 %}{% set content = bos_token + content %}{% endif %}{{ content }}{% endfor %}{% if add_generation_prompt %}{{ '<|start_header_id|>assistant<|end_header_id|>' }}{% endif %}
"""

# For example, the base model
AutoTokenizer.from_pretrained('meta-llama/Meta-Llama-3-8B', use_fast=True).chat_template

tokenizer.special_tokens_map, tokenizer.pad_token
# ({'bos_token': '<|begin_of_text|>', 'eos_token': '<|end_of_text|>'}, None)

tokenizer.add_special_tokens({"pad_token": pad_token})

# training
tokenizer.padding_side = "right"

(tokenizer.pad_token, tokenizer.pad_token_id, tokenizer.eos_token, tokenizer.eos_token_id)
# ('<|pad|>', 128256, '<|end_of_text|>', 128001)

model = AutoModelForCausalLM.from_pretrained(
    model_id,
    quantization_config=quantization_config,
    # attn_implementation="flash_attention_2",
    # attn_implementation="sdpa",
    device_map="auto",
)

len(tokenizer.added_tokens_decoder)  # 257

model.model.embed_tokens, tokenizer.vocab_size, len(tokenizer)
# (Embedding(128256, 4096), 128000, 128257)

model.resize_token_embeddings(len(tokenizer), pad_to_multiple_of=8)
# Embedding(128264, 4096)

128257/8, 128264/8  # (16032.125, 16033.0)
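The jump from 128257 tokens to an embedding with 128264 rows is just rounding up to the next multiple of 8. A small sketch of that arithmetic (the helper name `round_up_to_multiple` is hypothetical; the numbers come from the outputs above):

```python
def round_up_to_multiple(n: int, multiple: int) -> int:
    """Smallest multiple of `multiple` that is >= n."""
    return ((n + multiple - 1) // multiple) * multiple


vocab_with_pad = 128257  # len(tokenizer) after adding the <|pad|> token
padded_rows = round_up_to_multiple(vocab_with_pad, 8)
print(padded_rows)
```

The 7 extra rows are never produced by the tokenizer; they only keep the embedding matrix size aligned to a multiple of 8.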

Model configuration (model.config):

LlamaConfig {"_name_or_path": "meta-llama/Meta-Llama-3-8B-Instruct","architectures": ["LlamaForCausalLM"],"attention_bias": false,"attention_dropout": 0.0,"bos_token_id": 128000,"eos_token_id": 128001,"hidden_act": "silu","hidden_size": 4096,"initializer_range": 0.02,"intermediate_size": 14336,"max_position_embeddings": 8192,"mlp_bias": false,"model_type": "llama","num_attention_heads": 32,"num_hidden_layers": 32,"num_key_value_heads": 8,"pretraining_tp": 1,"quantization_config": {"_load_in_4bit": true,"_load_in_8bit": false,"bnb_4bit_compute_dtype": "bfloat16","bnb_4bit_quant_storage": "uint8","bnb_4bit_quant_type": "nf4","bnb_4bit_use_double_quant": false,"llm_int8_enable_fp32_cpu_offload": false,"llm_int8_has_fp16_weight": false,"llm_int8_skip_modules": null,"llm_int8_threshold": 6.0,"load_in_4bit": true,"load_in_8bit": false,"quant_method": "bitsandbytes"},"rms_norm_eps": 1e-05,"rope_scaling": null,"rope_theta": 500000.0,"tie_word_embeddings": false,"torch_dtype": "bfloat16","transformers_version": "4.43.3","use_cache": true,"vocab_size": 128264

}

print(tokenizer.bos_token, tokenizer.bos_token_id) # <|begin_of_text|> 128000

print(tokenizer.eos_token, tokenizer.eos_token_id) # <|end_of_text|> 128001

print(tokenizer.pad_token, tokenizer.pad_token_id) # <|pad|> 128256

Next, the financial-qa-10K dataset serves as the example task:

- RAG dataset with QA and context;

- Question + context => user query;

- Answer => assistant response;

Dataset schema and a sample:

dataset = load_dataset("virattt/financial-qa-10K")
"""
DatasetDict({
    train: Dataset({
        features: ['question', 'answer', 'context', 'ticker', 'filing'],
        num_rows: 7000
    })
})
"""

dataset["train"].column_names  # ['question', 'answer', 'context', 'ticker', 'filing']

dataset['train'][:1]

"""

{'question': ['What area did NVIDIA initially focus on before expanding to other computationally intensive fields?'],'answer': ['NVIDIA initially focused on PC graphics.'],'context': ['Since our original focus on PC graphics, we have expanded to several other large and important computationally intensive fields.'],'ticker': ['NVDA'],'filing': ['2023_10K']}

"""

load_dataset from the datasets library returns a DatasetDict, which can be batch-processed directly with its map method:

def process(row):
    return {
        "question": row["question"],
        "context": row["context"],
        "answer": row["answer"],
    }

new_dataset = dataset.map(process, num_proc=8, remove_columns=dataset["train"].column_names)
new_dataset
"""
DatasetDict({
    train: Dataset({
        features: ['question', 'answer', 'context'],
        num_rows: 7000
    })
})
"""

This leaves three columns (question, answer, context). Take a look:

df = new_dataset['train'].to_pandas()

df.head()

df.isnull().value_counts()  # this dataset has no missing values

Next comes the conversion to a chat dataset, which needs a custom formatter:

def format_example(row: dict):
    prompt = dedent(
        f"""
    {row["question"]}

    Information:

    ```
    {row["context"]}
    ```
    """
    )
    messages = [
        {
            "role": "system",
            "content": "Use only the information to answer the question",
        },
        {"role": "user", "content": prompt},
        {"role": "assistant", "content": row["answer"]},
    ]
    return tokenizer.apply_chat_template(messages, tokenize=False)

df["text"] = df.apply(format_example, axis=1)

print(df.iloc[0]['text'])

"""

<|begin_of_text|><|start_header_id|>system<|end_header_id|>Use only the information to answer the question<|eot_id|><|start_header_id|>user<|end_header_id|>What area did NVIDIA initially focus on before expanding to other computationally intensive fields?Information:·```

Since our original focus on PC graphics, we have expanded to several other large and important computationally intensive fields.

```<|eot_id|><|start_header_id|>assistant<|end_header_id|>NVIDIA initially focused on PC graphics.<|eot_id|>

"""

This is the prompt template. We can then count the tokens of each sample:

def count_tokens(row: Dict) -> int:
    return len(
        tokenizer(
            row["text"],
            add_special_tokens=True,
            return_attention_mask=False,
        )["input_ids"]
    )

df["token_count"] = df.apply(count_tokens, axis=1)

Look at the overall distribution of token counts:

# plt.hist(df.token_count, weights=np.ones(len(df.token_count)) / len(df.token_count))
# plt.gca().yaxis.set_major_formatter(PercentFormatter(1))
# plt.xlabel("Tokens")
# plt.ylabel("Percentage")
# plt.show()

sns.histplot(df.token_count, stat='probability', bins=30)

# Format the y axis as percentages
plt.gca().yaxis.set_major_formatter(PercentFormatter(1))

# Add labels
plt.xlabel("Tokens")
plt.ylabel("Percentage")

# Show the plot
plt.show()

len(df[df.token_count < 512]), len(df), len(df[df.token_count < 512]) / len(df)
# (6997, 7000, 0.9995714285714286)

Almost all samples have fewer than 512 tokens, so the maximum sequence length can be set to 512.

Split the dataset (train : validation : test = 20:4:1):

train, temp = train_test_split(df, test_size=0.2)
val, test = train_test_split(temp, test_size=0.2)

len(train), len(val), len(test)  # (5600, 1120, 280)

train.sample(n=5000).to_json("./data/train.json", orient="records", lines=True)
val.sample(n=1000).to_json("./data/val.json", orient="records", lines=True)
test.sample(n=250).to_json("./data/test.json", orient="records", lines=True)

dataset = load_dataset(
    "json",
    data_files={"train": "./data/train.json", "validation": "./data/val.json", "test": "./data/test.json"},
)
dataset
"""
DatasetDict({
    train: Dataset({
        features: ['question', 'answer', 'context', 'text', 'token_count'],
        num_rows: 5000
    })
    validation: Dataset({
        features: ['question', 'answer', 'context', 'text', 'token_count'],
        num_rows: 1000
    })
    test: Dataset({
        features: ['question', 'answer', 'context', 'text', 'token_count'],
        num_rows: 250
    })
})
"""

This gives the split dataset.
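The 20:4:1 ratio follows from applying test_size=0.2 twice. A short sketch of the arithmetic, assuming the test part of each split is rounded up (which is how I understand train_test_split to behave); the helper `split_sizes` is hypothetical:

```python
import math
from functools import reduce


def split_sizes(n_rows, first_test_size=0.2, second_test_size=0.2):
    """Sizes after splitting off `temp`, then splitting `temp` into val/test."""
    temp = math.ceil(n_rows * first_test_size)  # held-out part of the first split
    train = n_rows - temp
    test = math.ceil(temp * second_test_size)   # held-out part of the second split
    val = temp - test
    return train, val, test


sizes = split_sizes(7000)
divisor = reduce(math.gcd, sizes)
ratio = tuple(s // divisor for s in sizes)
print(sizes, ratio)
```

The subsequent sample(n=5000), sample(n=1000), and sample(n=250) calls keep the same 20:4:1 proportion at a smaller scale.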

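Next we can do SFT (supervised fine-tuning).

Here we use llama3-8b-instruct as the example, starting with a look at what the model outputs before any fine-tuning: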

pipe = pipeline(
    task="text-generation",
    model=model,
    tokenizer=tokenizer,
    max_new_tokens=128,
    return_full_text=False,
)

def create_test_prompt(data_row):
    prompt = dedent(
        f"""
    {data_row["question"]}

    Information:

    ```
    {data_row["context"]}
    ```
    """
    )
    messages = [
        {
            "role": "system",
            "content": "Use only the information to answer the question",
        },
        {"role": "user", "content": prompt},
    ]
    return tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

row = dataset["test"][0]

prompt = create_test_prompt(row)

print(prompt)

"""

<|begin_of_text|><|start_header_id|>system<|end_header_id|>Use only the information to answer the question<|eot_id|><|start_header_id|>user<|end_header_id|>How does Amazon fulfill customer orders?Information:·```

Amazon fulfills customer orders using its North America and International fulfillment networks, co-sourced and outsourced arrangements in certain countries, digital delivery, and physical stores.

```<|eot_id|><|start_header_id|>assistant<|end_header_id|>

"""

Model output:

outputs = pipe(prompt)

response = f"""

answer: {row["answer"]}

prediction: {outputs[0]["generated_text"]}

"""

print(response)

"""

answer: Amazon fulfills customer orders through a combination of North America and International fulfillment networks operated by the company, co-sourced and outsourced arrangements in some countries, digital delivery, and through its physical stores.

prediction: According to the information, Amazon fulfills customer orders using:1. North America and International fulfillment networks

2. Co-sourced and outsourced arrangements in certain countries

3. Digital delivery

4. Physical stores

"""

Another example:

row = dataset["test"][1]

prompt = create_test_prompt(row)

print(prompt)

"""

<|begin_of_text|><|start_header_id|>system<|end_header_id|>Use only the information to answer the question<|eot_id|><|start_header_id|>user<|end_header_id|>Who holds the patents for the active pharmaceutical ingredients of some of the company's products?Information:· ```

Patents covering certain of the active pharmaceutical ingredients ("API") of some of our products are held by third parties. We acquired exclusive rights to these patents in the agreements we have with these parties.

```<|eot_id|><|start_header_id|>assistant<|end_header_id|>

"""

Model output:

outputs = pipe(prompt)

response = f"""

answer: {row["answer"]}

prediction: {outputs[0]["generated_text"]}

"""

print(response)

"""

answer: The patents for the active pharmaceutical ingredients of some of the company's products are held by third parties, from whom the company has acquired exclusive rights through agreements.

prediction: Third parties hold the patents for the active pharmaceutical ingredients of some of the company's products.

"""

Model outputs can of course be generated in bulk:

rows = []
for row in tqdm(dataset["test"]):
    prompt = create_test_prompt(row)
    outputs = pipe(prompt)
    rows.append(
        {
            "question": row["question"],
            "context": row["context"],
            "prompt": prompt,
            "answer": row["answer"],
            "untrained_prediction": outputs[0]["generated_text"],
        }
    )

predictions_df = pd.DataFrame(rows)

The resulting predictions_df is not shown here.

Finally, train on completions:

- collate_fn

  - DataCollatorForCompletionOnlyLM

    - data collator used for completion tasks. It ensures that all the tokens of the labels are set to an 'ignore_index' when they do not come from the assistant. This ensures that the loss is only calculated on the completion made by the assistant.

  - the instantiated collator is passed as the data_collator argument of SFTTrainer;

examples = [dataset["train"][0]["text"]]

print(examples[0])

"""

<|begin_of_text|><|start_header_id|>system<|end_header_id|>Use only the information to answer the question<|eot_id|><|start_header_id|>user<|end_header_id|>Who is the Chief Financial Officer and since when?Information:·```

Richard A. Galanti | Executive Vice President and Chief Financial Officer. Mr. Galanti has been a director since January 1995.

```<|eot_id|><|start_header_id|>assistant<|end_header_id|>Richard A. Galanti is the Executive Vice President and Chief Financial Officer, and he has been in this role since 1993.<|eot_id|>

"""

In other words, the preprocessing happens in the DataLoader's collate_fn:

response_template = "<|end_header_id|>"
collator = DataCollatorForCompletionOnlyLM(response_template, tokenizer=tokenizer)
# collator

encodings = [tokenizer(e) for e in examples]
dataloader = DataLoader(encodings, collate_fn=collator, batch_size=1)

batch = next(iter(dataloader))
batch.keys()  # dict_keys(['input_ids', 'attention_mask', 'labels'])

The data can be inspected through batch["input_ids"] and batch["labels"], and also by decoding:

tokenizer.decode([271, 42315, 362, 13, 10845, 15719, 374, 279, 18362, 23270, 4900, 323, 14681, 17961, 20148, 11, 323, 568, 706, 1027, 304, 420, 3560, 2533, 220, 2550, 18, 13, 128009])

which decodes to: '\n\nRichard A. Galanti is the Executive Vice President and Chief Financial Officer, and he has been in this role since 1993.<|eot_id|>'

Next we configure LoRA for fine-tuning.

Overall model architecture:

LlamaForCausalLM((model): LlamaModel((embed_tokens): Embedding(128264, 4096)(layers): ModuleList((0-31): 32 x LlamaDecoderLayer((self_attn): LlamaSdpaAttention((q_proj): Linear4bit(in_features=4096, out_features=4096, bias=False)(k_proj): Linear4bit(in_features=4096, out_features=1024, bias=False)(v_proj): Linear4bit(in_features=4096, out_features=1024, bias=False)(o_proj): Linear4bit(in_features=4096, out_features=4096, bias=False)(rotary_emb): LlamaRotaryEmbedding())(mlp): LlamaMLP((gate_proj): Linear4bit(in_features=4096, out_features=14336, bias=False)(up_proj): Linear4bit(in_features=4096, out_features=14336, bias=False)(down_proj): Linear4bit(in_features=14336, out_features=4096, bias=False)(act_fn): SiLU())(input_layernorm): LlamaRMSNorm()(post_attention_layernorm): LlamaRMSNorm()))(norm): LlamaRMSNorm()(rotary_emb): LlamaRotaryEmbedding())(lm_head): Linear(in_features=4096, out_features=128264, bias=False)

)

LoRA configuration:

lora_config = LoraConfig(
    r=32,
    lora_alpha=16,
    target_modules=[
        "self_attn.q_proj",
        "self_attn.k_proj",
        "self_attn.v_proj",
        "self_attn.o_proj",
        "mlp.gate_proj",
        "mlp.up_proj",
        "mlp.down_proj",
    ],
    lora_dropout=0.05,
    bias="none",
    task_type=TaskType.CAUSAL_LM,
)

model = prepare_model_for_kbit_training(model)

model = get_peft_model(model, lora_config)

model.print_trainable_parameters() # trainable params: 83,886,080 || all params: 8,114,212,864 || trainable%: 1.0338166055782685
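The reported 83,886,080 trainable parameters can be checked by hand. For each targeted linear layer, LoRA adds two matrices of shapes (r, in_features) and (out_features, r), i.e. r * (in_features + out_features) parameters. The sketch below uses the layer shapes from the architecture printout above; the helper name `lora_param_count` is hypothetical.

```python
def lora_param_count(in_features: int, out_features: int, r: int) -> int:
    """Parameters of the low-rank pair A (r x in) and B (out x r)."""
    return r * (in_features + out_features)


R = 32
NUM_LAYERS = 32
# (in_features, out_features) per targeted module, from the model printout
target_modules = {
    "q_proj": (4096, 4096),
    "k_proj": (4096, 1024),
    "v_proj": (4096, 1024),
    "o_proj": (4096, 4096),
    "gate_proj": (4096, 14336),
    "up_proj": (4096, 14336),
    "down_proj": (14336, 4096),
}

per_layer = sum(lora_param_count(i, o, R) for i, o in target_modules.values())
trainable = NUM_LAYERS * per_layer
all_params = 8_114_212_864  # "all params" as printed above
trainable_pct = 100 * trainable / all_params
print(trainable, round(trainable_pct, 4))
```

The count matches the output of print_trainable_parameters, which confirms that only the LoRA matrices are trainable here.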

Now we can run SFT training:

output_dir = "experiments"

# # ssh -L 6006:localhost:6006 user@remote_server

# # localhost:6006

# %load_ext tensorboard

# %tensorboard --logdir "experiments/runs"

# dual 4090s
# accelerate config sets these two environment variables automatically
os.environ["NCCL_P2P_DISABLE"] = "1"
os.environ["NCCL_IB_DISABLE"] = "1"

sft_config = SFTConfig(
    output_dir=output_dir,
    dataset_text_field="text",
    max_seq_length=512,
    num_train_epochs=1,
    per_device_train_batch_size=8,
    per_device_eval_batch_size=8,
    gradient_accumulation_steps=4,
    gradient_checkpointing=True,
    gradient_checkpointing_kwargs={"use_reentrant": False},
    optim="paged_adamw_8bit",
    eval_strategy="steps",
    eval_steps=0.2,
    save_steps=0.2,
    save_total_limit=2,
    logging_steps=10,
    learning_rate=1e-4,
    bf16=True,  # or fp16=True,
    save_strategy="steps",
    warmup_ratio=0.1,
    lr_scheduler_type="constant",
    report_to="wandb",
    save_safetensors=True,
    dataset_kwargs={
        "add_special_tokens": False,  # We template with special tokens
        "append_concat_token": False,  # No need to add additional separator token
    },
    seed=SEED,
)

trainer = SFTTrainer(
    model=model,
    args=sft_config,
    train_dataset=dataset["train"],
    eval_dataset=dataset["validation"],
    tokenizer=tokenizer,
    data_collator=collator,
)

trainer.train()

trainer.save_model(new_model)

The trained adapter can then be loaded and merged into the base model:

tokenizer = AutoTokenizer.from_pretrained(new_model)

model = AutoModelForCausalLM.from_pretrained(
    model_id,
    torch_dtype=torch.bfloat16,
    device_map="auto",
)

model.resize_token_embeddings(len(tokenizer), pad_to_multiple_of=8)

model = PeftModel.from_pretrained(model, new_model)

model = model.merge_and_unload()

# model.push_to_hub(new_model, tokenizer=tokenizer, max_shard_size="5GB")

One configuration value is worth highlighting:

save_total_limit: only the most recent save_total_limit checkpoints are kept.

Finally, evaluation:

dataset = load_dataset(
    "json",
    data_files={"train": "./data/train.json", "validation": "./data/val.json", "test": "./data/test.json"},
)

quantization_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.bfloat16,
)

tokenizer = AutoTokenizer.from_pretrained('./Llama-3-8B-Instruct-Finance-RAG', use_fast=True)

model = AutoModelForCausalLM.from_pretrained(
    './Llama-3-8B-Instruct-Finance-RAG',
    quantization_config=quantization_config,
    device_map="auto",
)

pipe = pipeline(
    task="text-generation",
    model=model,
    tokenizer=tokenizer,
    max_new_tokens=128,
    return_full_text=False,
)

row = dataset["test"][0]

prompt = create_test_prompt(row)

print(prompt)

# ---

outputs = pipe(prompt)

response = f"""

answer: {row["answer"]}

prediction: {outputs[0]["generated_text"]}

"""

print(response)

# ---

predictions = []

for row in tqdm(dataset["test"]):
    outputs = pipe(create_test_prompt(row))
    predictions.append(outputs[0]["generated_text"])

04 optimizer: Trainer optimization details (AdamW, grad clip, grad norm, etc.)

Standard run (default):

accelerate launch --config-file examples/accelerate_configs/deepspeed_zero3.yaml examples/research_projects/stack_llama_2/scripts/sft_llama2.py \
    --output_dir="./sft" \
    --max_steps=500 \
    --logging_steps=10 \
    --save_steps=0.2 \
    --save_total_limit=2 \
    --per_device_train_batch_size=2 \
    --per_device_eval_batch_size=1 \
    --gradient_accumulation_steps=4 \
    --gradient_checkpointing=False \
    --group_by_length=False \
    --learning_rate=1e-4 \
    --lr_scheduler_type="cosine" \
    --warmup_steps=100 \
    --weight_decay=0.05 \
    --optim="paged_adamw_32bit" \
    --bf16=True \
    --remove_unused_columns=False \
    --run_name="sft_llama2" \
    --report_to="wandb"

Running it logs:

{'loss': 1.5964, 'grad_norm': 0.04747452916511348, 'learning_rate': 1e-05, 'epoch': 0.02}

{'loss': 1.5964, 'grad_norm': 0.056631829890668624, 'learning_rate': 2e-05, 'epoch': 0.04}

{'loss': 1.5692, 'grad_norm': 0.05837008611668212, 'learning_rate': 3e-05, 'epoch': 0.06}

8%|█████████▏ | 39/500 [01:49<20:44, 2.70s/it]

Everything is logged at the granularity of steps (optimization steps):

- loss

- grad_norm: monitors the gradient norm over all weights/parameters (computed after loss.backward)

  - used to catch exploding gradients

- learning_rate: scheduled

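Adam => AdamW

- Adam with decoupled weight decay (AdamW).

  - https://arxiv.org/pdf/1711.05101

- The main difference between AdamW and Adam is how weight decay (commonly implemented as L2 regularization) is handled:

  - Adam: in conventional Adam, weight decay (L2 regularization) is usually added to the loss function. The decay term therefore enters the gradient and in turn affects the momentum (first moment) and the adaptive learning rate (second moment).

  - AdamW: AdamW separates weight decay from the loss function and applies it directly in the parameter update step. This is called decoupled weight decay.

- In optimizers such as AdamW, this form of weight decay is considered more natural and effective, because the decay is applied directly at every weight update and no explicit regularization term has to be added to the loss.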
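The difference can be seen on a single scalar weight. The sketch below is a simplified, from-scratch version of one optimizer step, not the torch.optim implementation; the function names and hyperparameter values are chosen only for illustration. The loss gradient is set to zero so that only the effect of weight decay remains.

```python
import math


def adam_step(w, grad, m, v, t, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    """One bias-corrected Adam update for a scalar weight."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    return w - lr * m_hat / (math.sqrt(v_hat) + eps), m, v


def adam_with_l2(w, loss_grad, weight_decay, lr):
    # L2 regularization: the decay term is folded into the gradient,
    # so it passes through the moment estimates and gets rescaled.
    grad = loss_grad + weight_decay * w
    return adam_step(w, grad, m=0.0, v=0.0, t=1, lr=lr)[0]


def adamw(w, loss_grad, weight_decay, lr):
    # Decoupled decay: shrink the weight directly, then apply plain Adam
    # using only the loss gradient.
    w = w - lr * weight_decay * w
    return adam_step(w, loss_grad, m=0.0, v=0.0, t=1, lr=lr)[0]


w_l2 = adam_with_l2(w=1.0, loss_grad=0.0, weight_decay=0.1, lr=0.1)
w_decoupled = adamw(w=1.0, loss_grad=0.0, weight_decay=0.1, lr=0.1)
print(w_l2, w_decoupled)
```

With L2 regularization the decay term is normalized by the adaptive scaling, so the weight moves by roughly the full learning rate (to about 0.9). With decoupled decay the weight shrinks by exactly lr * weight_decay * w (to 0.99), independent of the gradient statistics.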

Grad Clip & Grad Norm

- gradient clipping, generally used to address the exploding-gradient problem

nn.utils.clip_grad_norm_(model.parameters(), args.max_grad_norm)

Clip the gradient norm of an iterable of parameters. The norm is computed over all gradients together, as if they were concatenated into a single vector. Gradients are modified in-place.

- grad norm

  - if $\|\mathbf{g}\| \geq c$, then $\mathbf{g} \leftarrow c\,\frac{\mathbf{g}}{\|\mathbf{g}\|}$

- TrainingArguments (transformers)

max_grad_norm: float = field(default=1.0, metadata={"help": "Max gradient norm."})

Gradient clipping in code:

# Define the maximum gradient norm threshold
max_grad_norm = 1.0

# Training loop
for epoch in range(num_epochs):
    for inputs, targets in dataloader:  # Replace dataloader with your own data loader
        optimizer.zero_grad()  # Zero the gradients

        # Forward pass
        outputs = model(inputs)
        loss = criterion(outputs, targets)

        # Backward pass
        loss.backward()

        # Apply gradient clipping to prevent exploding gradients
        nn.utils.clip_grad_norm_(model.parameters(), max_grad_norm)

        # Update weights
        optimizer.step()

    # Perform other training loop operations (e.g., validation)

w = torch.rand(5) * 1000

w_1 = w.clone()

w.requires_grad_(True)

w_1.requires_grad_(True)

loss = 1/2 * torch.sum(w_1 * w_1 + w * w)

# Here grads of loss w.r.t w and w_1 should be w and w_1 respectively

loss.backward()

# Clip grads of w_1

torch.nn.utils.clip_grad_norm_(w_1, max_norm=1.0, norm_type=2) # tensor(1683.7260)

torch.norm(w_1, p=2) # tensor(1683.7260, grad_fn=<LinalgVectorNormBackward0>)

w.grad # tensor([882.2693, 915.0040, 382.8638, 959.3057, 390.4482])

w_1.grad # tensor([0.5240, 0.5434, 0.2274, 0.5698, 0.2319])

torch.norm(w_1.grad, p=2) # tensor(1.)

w.grad / torch.norm(w.grad, p=2) # tensor([0.5240, 0.5434, 0.2274, 0.5698, 0.2319])
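The same rescaling can be written without PyTorch. This is a minimal sketch of clipping by global L2 norm on a flat list of gradient values; the function name `clip_grad_norm` and the small `eps` in the denominator are choices made for this illustration and are not guaranteed to match the library's internals.

```python
import math


def clip_grad_norm(grads, max_norm, eps=1e-6):
    """Scale grads so their global L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = math.sqrt(sum(g * g for g in grads))
    # The coefficient is capped at 1, so small gradients are left untouched.
    clip_coef = min(1.0, max_norm / (total_norm + eps))
    return [g * clip_coef for g in grads], total_norm


clipped, norm_before = clip_grad_norm([3.0, 4.0], max_norm=1.0)
untouched, _ = clip_grad_norm([0.3, 0.4], max_norm=1.0)
print(clipped, norm_before, untouched)
```

The direction of the gradient is preserved; only its length changes, which matches the observation above that the clipped w_1.grad equals w.grad divided by its norm.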

05 StackLlama, SFT+DPO (code organization, data processing, pipeline)

git clone git@github.com:huggingface/trl.git

cd trl

- run the scripts

huggingface-cli login

- TRL

  - three research projects (https://github.com/huggingface/trl/tree/main/examples/research_projects)

    - stack_llama_2/scripts

    - tools

    - toxicity

  - learn the code organization

    - how hyperparameters are organized

    - the default hyperparameter values;

  - learn the pipeline;

  - accelerate config

    - https://github.com/huggingface/trl/tree/main/examples/accelerate_configs

Many people lack compute resources. The three open-source projects above are good material for building fine-tuning experience: they show how to set hyperparameters and how to use the pipeline.

For example, offload_optimizer_device defaults to None and can be changed to cpu, so that CPU memory is used as temporary storage when GPU memory runs short.

Models and data

- stack exchange (post-training on a dataset at the 10M scale, hosted on Hugging Face as lvwerra/stack-exchange-paired; the rl subset is used for DPO tuning, and all of the data is preference data: question + better answer + worse answer):

  - data collection and annotation

    - score = log2 (1 + upvotes) rounded to the nearest integer, plus 1 if the questioner accepted the answer (we assign a score of −1 if the number of upvotes is negative).

    - this score rates how good an answer is, based on its upvotes

    - pairs (response_j, response_k) where j was rated better than k

- lvwerra/stack-exchange-paired: tens of millions of rows, 26.3 GB

  - split: 31,284,837

    - train: 26.8m rows

    - test: 4.48m rows

  - data_dir:

    - data/finetune: SFT

      - question + response_j

        f"Question: {example['question']}\n\nAnswer: {example['response_j']}"

    - data/rl: DPO

        def return_prompt_and_responses(samples) -> Dict[str, str]:
            return {
                "prompt": ["Question: " + question + "\n\nAnswer: " for question in samples["question"]],
                "chosen": samples["response_j"],
                "rejected": samples["response_k"],
            }

    - data/evaluation: evaluation of DPO

    - data/reward: the dataset used for reward modeling in the first version of stack-llama

    - this layout supports both SFT fine-tuning and DPO; for DPO, be sure to convert the samples into the standard format with return_prompt_and_responses
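The scoring rule and the DPO formatting step can be tried on toy data. In this sketch, `answer_score` is a hypothetical helper written from the description above; treating negative upvotes as a flat score of -1 with no acceptance bonus is an assumption, since the description leaves that case open. The batch contents are made up, and the return annotation is widened to reflect that the function operates on batched columns.

```python
import math
from typing import Dict, List


def answer_score(upvotes: int, accepted: bool) -> int:
    """score = round(log2(1 + upvotes)), +1 if accepted; -1 for negative upvotes."""
    if upvotes < 0:
        return -1  # assumption: no acceptance bonus in this case
    score = round(math.log2(1 + upvotes))
    return score + 1 if accepted else score


def return_prompt_and_responses(samples: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Convert a batch of paired samples into prompt / chosen / rejected columns."""
    return {
        "prompt": ["Question: " + question + "\n\nAnswer: " for question in samples["question"]],
        "chosen": samples["response_j"],
        "rejected": samples["response_k"],
    }


# Made-up batch with a single preference pair
batch = return_prompt_and_responses(
    {"question": ["Q1"], "response_j": ["better answer"], "response_k": ["worse answer"]}
)
print(answer_score(7, accepted=True), batch["prompt"][0])
```

In practice return_prompt_and_responses would be applied with dataset.map(..., batched=True), since it expects columns (lists) rather than single rows.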

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-2-7b-hf")

tokenizer.is_fast # True

Parameters

from transformers import HfArgumentParser
from trl import SFTConfig

# Wrap the dataclass types
# parser = HfArgumentParser((ScriptArguments, SFTConfig))
# Parse the command-line arguments into instances of those classes
# script_args, training_args = parser.parse_args_into_dataclasses()

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ScriptArguments:
    model_name: Optional[str] = field(default="meta-llama/Llama-2-7b-hf", metadata={"help": "the model name"})
    dataset_name: Optional[str] = field(default="lvwerra/stack-exchange-paired", metadata={"help": "the dataset name"})
    subset: Optional[str] = field(default="data/finetune", metadata={"help": "the subset to use"})
    split: Optional[str] = field(default="train", metadata={"help": "the split to use"})
    size_valid_set: Optional[int] = field(default=4000, metadata={"help": "the size of the validation set"})
    streaming: Optional[bool] = field(default=True, metadata={"help": "whether to stream the dataset"})
    shuffle_buffer: Optional[int] = field(default=5000, metadata={"help": "the shuffle buffer size"})
    seq_length: Optional[int] = field(default=1024, metadata={"help": "the sequence length"})
    num_workers: Optional[int] = field(default=4, metadata={"help": "the number of workers"})
    use_bnb: Optional[bool] = field(default=True, metadata={"help": "whether to use BitsAndBytes"})

    # LoraConfig
    lora_alpha: Optional[float] = field(default=16, metadata={"help": "the lora alpha parameter"})
    lora_dropout: Optional[float] = field(default=0.05, metadata={"help": "the lora dropout parameter"})
    lora_r: Optional[int] = field(default=8, metadata={"help": "the lora r parameter"})


script_args = ScriptArguments()
script_args.model_name  # 'meta-llama/Llama-2-7b-hf'

# if training_args.group_by_length and training_args.packing:
#     raise ValueError("Cannot use both packing and group by length")

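Dataset

- dataset.filter/dataset.map: make full use of the available CPU cores

  - num_proc: number of processes

- Note that dataset.map creates a large number of cache files under $HF_HOME/datasets

  - dataset.cleanup_cache_files(): releases the cache files; without it the disk can fill up quickly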

from datasets import load_dataset

# 887094/0 [00:44<00:00, 18883.94 examples/s]
# dataset = load_dataset(
#     script_args.dataset_name,
#     data_dir=script_args.subset,
#     split=script_args.split,
#     use_auth_token=True,
#     num_proc=script_args.num_workers if not script_args.streaming else None,
#     # streaming=script_args.streaming,
#     streaming=False
# )

# Setting num_proc from 24 to 20 for the train split as it only contains 20 shards.
# 7440923/0 [01:17<00:00, 117936.98 examples/s]
dataset = load_dataset(
    script_args.dataset_name,
    data_dir=script_args.subset,
    split=script_args.split,
    use_auth_token=True,
    num_proc=24,
    # streaming=script_args.streaming,
    streaming=False,
)
# Resolving data files: 0%| | 0/20 [00:00<?, ?it/s]
# Loading dataset shards: 0%| | 0/60 [00:00<?, ?it/s]

len(dataset)  # 7440923

dataset
"""
Dataset({
    features: ['qid', 'question', 'date', 'metadata', 'response_j', 'response_k'],
    num_rows: 7440923
})
"""

dataset = dataset.train_test_split(test_size=0.005, seed=42)
train_data = dataset["train"]
valid_data = dataset["test"]

len(train_data), len(valid_data), len(train_data) + len(valid_data)
# (7403718, 37205, 7440923)

train_data[0]

"""

{'qid': 20079813,'question': 'The scenario is simple, I need to log in from another server (different from the API server) to retrieve the access token.\n\nI installed `Microsoft.Owin.Cors` package on the API Server. In `Startup.Auth.cs` file, under `public void ConfigureAuth(IAppBuilder app)`, I added in \n\n```\napp.UseCors(Microsoft.Owin.Cors.CorsOptions.AllowAll);\n\n```\n\nIn `WebApiConfig.cs`, under `public static void Register(HttpConfiguration config)`, I added in these lines:\n\n```\n// Cors\nvar cors = new EnableCorsAttribute("*", "*", "GET, POST, OPTIONS");\nconfig.EnableCors(cors);\n\n```\n\nWhat else should I change?','date': '2013/11/19','metadata': ['https://Stackoverflow.com/questions/20079813','https://Stackoverflow.com','https://Stackoverflow.com/users/863637/'],'response_j': 'I had many trial-and-errors to setup it for AngularJS-based web client. \n\nFor me, below approaches works with ASP.NET WebApi 2.2 and OAuth-based service.\n\n1. Install `Microsoft.AspNet.WebApi.Cors` nuget package.\n2. Install `Microsoft.Owin.Cors` nuget package.\n3. Add `config.EnableCors(new EnableCorsAttribute("*", "*", "GET, POST, OPTIONS, PUT, DELETE"));` to the above of `WebApiConfig.Register(config);` line at **Startup.cs** file.\n4. Add `app.UseCors(Microsoft.Owin.Cors.CorsOptions.AllowAll);` to the **Startup.Auth.cs** file. This must be done prior to calling `IAppBuilder.UseWebApi`\n5. Remove any xml settings what Blaise did.\n\nI found many setup variations and combinations at here stackoverflow or [blog articles](http://msdn.microsoft.com/en-us/magazine/dn532203.aspx). So, Blaise\'s approach may or may not be wrong. It\'s just another settings I think.','response_k': 'I just want to share my experience. I spent half of the day banging my head and trying to make it work. I read numerous of articles and SO questions and in the end I figured out what was wrong. 
\n\nThe line \n\n```\napp.UseCors(Microsoft.Owin.Cors.CorsOptions.AllowAll);\n\n```\n\nwas not the first one in `Startup` class `Configuration` method. When I moved it to the top - everything started working magically.\n\nAnd no custom headers in `web.config` or `config.EnableCors(corsPolicy);` or anything else was necessary.\n\nHope this will help someone to save some time.'}

"""

Next, we build the sample text and its prompt template:

from tqdm import tqdm


def prepare_sample_text(example):
    """Prepare the text from a sample of the dataset."""
    text = f"Question: {example['question']}\n\nAnswer: {example['response_j']}"
    return text


def chars_token_ratio(dataset, tokenizer, nb_examples=400):
    """Estimate the average number of characters per token in the dataset."""
    total_characters, total_tokens = 0, 0
    # zip with range() stops after nb_examples samples
    for _, example in tqdm(zip(range(nb_examples), iter(dataset)), total=nb_examples):
        text = prepare_sample_text(example)
        total_characters += len(text)
        if tokenizer.is_fast:
            total_tokens += len(tokenizer(text).tokens())
        else:
            total_tokens += len(tokenizer.tokenize(text))
    return total_characters / total_tokens


chars_per_token = chars_token_ratio(train_data, tokenizer)

chars_per_token # 3.248387071938901

from trl.trainer import ConstantLengthDataset

train_dataset = ConstantLengthDataset(
    tokenizer,
    train_data,
    formatting_func=prepare_sample_text,
    infinite=True,
    seq_length=script_args.seq_length,
    chars_per_token=chars_per_token,
)
sample = next(iter(train_dataset))

sample['input_ids'].shape, sample['labels'].shape # (torch.Size([1024]), torch.Size([1024]))

print(tokenizer.decode(sample['input_ids']))

"""

`method="post"`, now I'm confused. When we click the button, what will actually happen? The jQuery.ajax will send a request in the js function but what will the `method="post"` do? Or `method="post"` can be ignored? If it does nothing, can we make the attribute `method` empty: `<form class="form" action="" method="">`?**Additional**Now I realise that the type of the buttion in this example is `button`, instead of `submit`. If I change the type into `submit` and click it, what will happen? Will it send two http requests, a post request comes from the form and a put request comes from the jQuery.ajax?Answer: This example is badly designed and confusing.The `formSubmit` function will only be called when the button is clicked. When the button is clicked, that function will run, and *nothing else* will happen; since the input is not a submit button, the form will not attempt to submit. All network activity will result from the `jQuery.ajax` inside the function. In this case, the `<form>` and the `method="post"` are ignored completely, since the form doesn't get submitted - the method used will be the one in the `.ajax` function, which is PUT.But the form is still submittable, if the user presses enter while focused inside the `<input type="text"`. If they do that, then the `formSubmit` function will not be called (since the button wasn't clicked), and the user's browser will send the `name` and the `message` as form data to the server, on the current page - and yes, as a POST. (Is the current page this code is on `submit.php`? If not, that may be a mistake. Perhaps the person who wrote the code completely overlooked the possibility of the form being submitable without using the button.)To make the example less confusing, I'd change the button to a *submit* button, and add a submit handler to the form instead of a click listener to the button. 
Then have the submit handler `return false` or call `e.preventDefault` so that all network activity goes through the `.ajax` call.</s><s> Question: I tend to get very stressed out about how things play out around me in the workplace. A lot of the stress and anxiety comes from high level decisions and changes made by management and executives that are not necessarily detrimental to me but definitely change the workplace environment and expectations causing me to be consumed with inconsolable worry and anxiety.As I get older I have been sufferring more and more anxiety attacks. We know the symptoms, feeling like I can't get a breath, heart pounding, cold sweats. Certainly my excessive worrying has helped me avoid bad situations in the past but more often than not my irrational worry and anxiety turns out to be unfounded, with little basis in logical fact (not that rational and logical thought really help; the fear is not rational).>

> Fear is the mind-killer

>

>

> I feel like this hinders me severely in my career. My coworkers and boss have taken notice and this stresses me out even more because now I am afraid they think me weak.My career aspirations are to make it into management someday because I am successful as a lead in turning around failing software projects and turning them into a success. I love working with people and clients and love to coach and guide people. I feel I would be a postive manager.My anxiety attacks are a manifestation of my stress, however will management think me too weak to take charge and be an effective leader?Am I too weak to be a good manager someday? Perhaps I need to come to terms with my own shortcomings as it seems my other peers are much more easy going with chaos going on around them?Answer: Anxiety attacks occur when you think about uncomfortable situations / things, you realize that you're freaking out, and then you freak out about it even further, in other words, you are freaking out about the fact that you're freaked out. Try:* **Just accept the fact that you're worried.** Admit that you're facing a bad situation, and stop thinking further about how much you're worried about it. The less you think, the less you freak out.

* **Change your physical state.** I find that sometimes mental states are easier to be switched out of if you're

"""

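The decoded result is long: it is simply all of the input text, with several formatted samples packed back to back (separated by </s><s>) until the 1024-token sequence is full.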

lengths = []
for i, sample in enumerate(train_dataset):
    lengths.append(len(sample['input_ids']))
    if i >= 10:  # stop after collecting 11 samples (i = 0..10)
        break

lengths # [1024, 1024, 1024, 1024, 1024, 1024, 1024, 1024, 1024, 1024, 1024]
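Every sample has exactly 1024 tokens because the dataset packs samples instead of padding them. The idea can be sketched in a few lines of plain Python. This is a simplified illustration, not TRL's implementation: the function name pack_constant_length and the toy token ids are hypothetical, and it assumes that samples are joined with a separator token and that an incomplete final chunk is dropped.

```python
from typing import Iterable, Iterator, List


def pack_constant_length(
    tokenized_samples: Iterable[List[int]],
    seq_length: int,
    eos_token_id: int,
) -> Iterator[List[int]]:
    """Concatenate token lists (EOS-separated) and yield fixed-size chunks."""
    buffer: List[int] = []
    for ids in tokenized_samples:
        # Append one sample followed by the separator token.
        buffer.extend(ids + [eos_token_id])
        # Emit every full chunk the buffer currently holds.
        while len(buffer) >= seq_length:
            yield buffer[:seq_length]
            buffer = buffer[seq_length:]
    # Leftover tokens shorter than seq_length are dropped in this sketch.


samples = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]
chunks = list(pack_constant_length(samples, seq_length=4, eos_token_id=0))
print(chunks)
```

Note that a chunk can start or end in the middle of a sample, which matches the decoded text above beginning mid-question.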

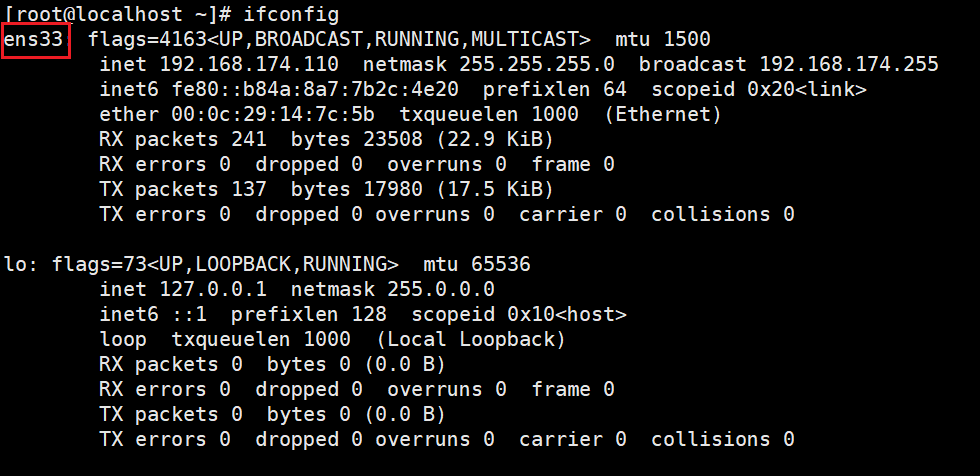

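Accelerating SFT

Rules of thumb:

- Try a single-GPU run first to see how one card performs:
  - if the job runs on a single machine with a single GPU, just run it that way; otherwise model parallelism is required. The single-GPU launch is:
    accelerate launch --config-file ./single_gpu.yaml xx.py
  - compare against a multi-GPU run to see the impact of communication.
- accelerate config yaml
  - https://github.com/huggingface/trl/tree/main/examples/accelerate_configs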

from accelerate import Accelerator

...
base_model = AutoModelForCausalLM.from_pretrained(
    ...
    # place the whole model on the GPU that belongs to this process
    device_map={"": Accelerator().local_process_index},
    ...
)

zero3

For ZeRO-3, just switch to deepspeed_zero3.yaml; everything else stays the same.

- "meta-llama/Llama-2-7b-hf": https://wandb.ai/loveresearch/huggingface/runs/jsn4syqr

accelerate launch --config-file examples/accelerate_configs/deepspeed_zero3.yaml examples/research_projects/stack_llama_2/scripts/sft_llama2.py \
    --output_dir="./sft" \
    --max_steps=1000 \
    --logging_steps=10 \
    --save_steps=0.2 \
    --save_total_limit=2 \
    --eval_strategy="steps" \
    --eval_steps=0.2 \
    --per_device_train_batch_size=2 \
    --per_device_eval_batch_size=1 \
    --gradient_accumulation_steps=4 \
    --gradient_checkpointing=False \
    --group_by_length=False \
    --learning_rate=1e-4 \
    --lr_scheduler_type="cosine" \
    --warmup_steps=100 \
    --weight_decay=0.05 \
    --optim="paged_adamw_32bit" \
    --bf16=True \
    --remove_unused_columns=False \
    --run_name="sft_llama2" \
    --report_to="wandb"

- "meta-llama/Meta-Llama-3-8B":

accelerate launch --config-file examples/accelerate_configs/deepspeed_zero3.yaml examples/research_projects/stack_llama_2/scripts/sft_llama2.py \
    --model_name="meta-llama/Meta-Llama-3-8B" \
    --output_dir="./sft" \
    --max_steps=1000 \
    --logging_steps=10 \
    --save_steps=0.2 \
    --save_total_limit=2 \
    --eval_strategy="steps" \
    --eval_steps=0.2 \
    --per_device_train_batch_size=2 \
    --per_device_eval_batch_size=1 \
    --gradient_accumulation_steps=4 \
    --gradient_checkpointing=False \
    --group_by_length=False \
    --learning_rate=1e-4 \
    --lr_scheduler_type="cosine" \
    --warmup_steps=100 \
    --weight_decay=0.05 \
    --optim="paged_adamw_32bit" \
    --bf16=True \
    --remove_unused_columns=False \
    --run_name="sft_llama3" \
    --report_to="wandb"
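As a rough reading of the flags above, the sketch below works out the effective batch size and how the fractional save_steps and eval_steps values translate into step counts. It assumes that values below 1 are interpreted as a ratio of the total training steps (rounded up), and it uses a hypothetical 2-process launch; the real process count comes from the accelerate config. The helper names are illustrative only.

```python
import math


def effective_batch_size(per_device_batch_size: int,
                         grad_accum_steps: int,
                         num_processes: int) -> int:
    """Number of sequences consumed per optimizer step."""
    return per_device_batch_size * grad_accum_steps * num_processes


def resolve_steps(value: float, max_steps: int) -> int:
    """Turn a ratio such as 0.2 into an absolute step interval."""
    if 0 < value < 1:
        # Assumption: fractional values are a ratio of max_steps.
        return math.ceil(max_steps * value)
    return int(value)


NUM_PROCESSES = 2  # hypothetical; depends on the accelerate config

batch = effective_batch_size(2, 4, NUM_PROCESSES)
save_every = resolve_steps(0.2, max_steps=1000)
print(batch, save_every)
```

With these numbers a checkpoint and an evaluation would happen every 200 optimizer steps, and save_total_limit=2 keeps only the two most recent checkpoints.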

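SFTTrainer in trl inherits from the Trainer in Hugging Face transformers, so the arguments below are standard Trainer arguments.

- max_steps=500
  - A small max_steps is handy for a quick bug check.
  - Steps here are optimization steps: how many times optimization is performed, i.e. backpropagation, gradient computation, and the weight update.
- logging_steps=10
  - How often (in steps) the monitored metrics are printed: loss, learning_rate, grad_norm.
- learning_rate=1e-4 && lr_scheduler_type="cosine" && warmup_steps=100
  - The learning rate reaches learning_rate=1e-4 once the 100 warmup steps have elapsed.
  - During warmup it rises linearly from 0 to 1e-4 over 100 steps; with logging_steps=10 the first logged value is therefore about 1e-5.
  - The specified learning_rate is actually $\eta_{\text{max}}$: once warmup is over, it decays gradually along the cosine schedule.
- grad_norm

$$\text{grad\_norm} = \sqrt{\sum_{i=1}^n \left(\frac{\partial L}{\partial w_i}\right)^2}$$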
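The two logged quantities above can be reproduced with a small sketch. It assumes linear warmup from 0 followed by a half-cosine decay to 0 at max_steps, and it treats grad_norm as the L2 norm over all gradient entries flattened into one vector. The function names are illustrative and are not the library's API.

```python
import math
from typing import Sequence


def cosine_lr_with_warmup(step: int, max_lr: float,
                          warmup_steps: int, max_steps: int) -> float:
    """Learning rate at a given optimization step."""
    if step < warmup_steps:
        # Linear warmup: 0 -> max_lr over warmup_steps steps.
        return max_lr * step / max(1, warmup_steps)
    # Cosine decay: max_lr -> 0 over the remaining steps.
    progress = (step - warmup_steps) / max(1, max_steps - warmup_steps)
    return max_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


def grad_norm(grads: Sequence[float]) -> float:
    """L2 norm of all gradient entries, as in the formula above."""
    return math.sqrt(sum(g * g for g in grads))


for step in (0, 10, 100, 550, 1000):
    lr = cosine_lr_with_warmup(step, max_lr=1e-4, warmup_steps=100, max_steps=1000)
    print(step, lr)

print(grad_norm([3.0, 4.0]))
```

The peak value is reached exactly at step 100, which is why the configured learning_rate acts as $\eta_{\text{max}}$.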

accelerate DPO

accelerate launch --config-file examples/accelerate_configs/deepspeed_zero3.yaml examples/research_projects/stack_llama_2/scripts/dpo_llama2.py \
    --model_name_or_path="sft/final_checkpoint" \
    --output_dir="dpo"

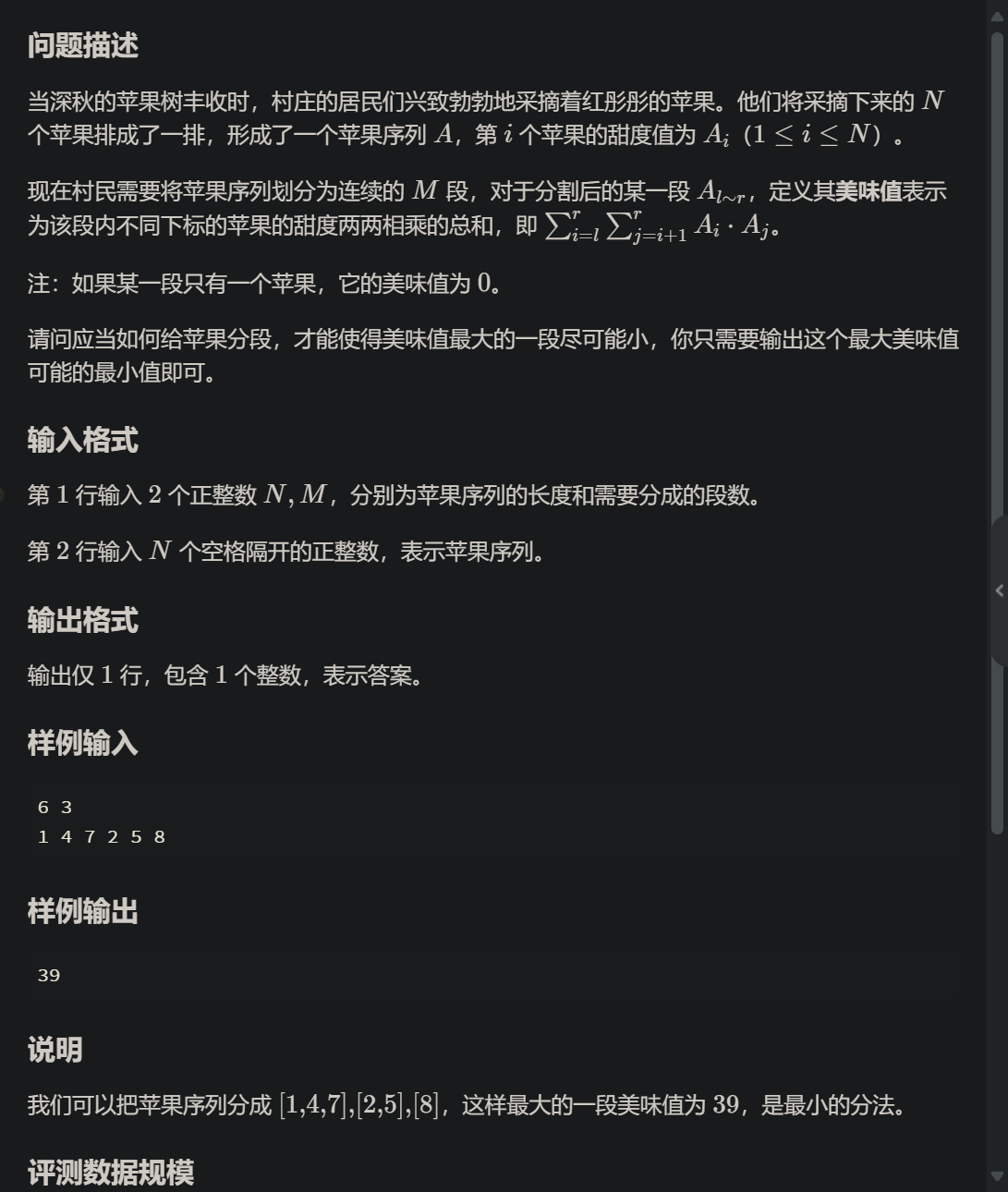

DPO recap (how the loss is computed)

- Inputs:
  - $\pi_\theta$: the policy model
  - $\pi_{ref}$: the reference model
  - $D = \{(x_i, y_i^+, y_i^-)\}$: the training data, where $x_i$ is the input prompt, $y_i^+$ is the preferred answer, and $y_i^-$ is the dispreferred answer

- Log-probabilities, for each sample $(x_i, y_i^+, y_i^-)$ (here $\pi(y | x)$ is shorthand for the log-probability of the answer given the prompt):
  - $\pi_\theta(y_i^+ | x_i) = \log P_\theta(y_i^+ | x_i)$
  - $\pi_\theta(y_i^- | x_i) = \log P_\theta(y_i^- | x_i)$
  - $\pi_{ref}(y_i^+ | x_i) = \log P_{ref}(y_i^+ | x_i)$
  - $\pi_{ref}(y_i^- | x_i) = \log P_{ref}(y_i^- | x_i)$

- Log-probability ratios:
  - $r_i^+ = \pi_\theta(y_i^+ | x_i) - \pi_{ref}(y_i^+ | x_i)$
  - $r_i^- = \pi_\theta(y_i^- | x_i) - \pi_{ref}(y_i^- | x_i)$

- DPO loss (using the sigmoid loss as the example):

$$L_i = -\log(\sigma(\beta \cdot (r_i^+ - r_i^-))) \cdot (1 - \lambda) - \log(1 - \sigma(\beta \cdot (r_i^+ - r_i^-))) \cdot \lambda$$

  where:
  - $\sigma$ is the sigmoid function
  - $\beta$ is the temperature parameter
  - $\lambda$ is the label-smoothing parameter

- Overall loss:

$$L = \frac{1}{N} \sum_{i=1}^N L_i$$

  where $N$ is the batch size.

- Optimization objective: $\theta^* = \arg\min_\theta L$

- Rewards (used for evaluation):
  - $\text{chosen\_reward}_i = \beta \cdot (\pi_\theta(y_i^+ | x_i) - \pi_{ref}(y_i^+ | x_i))$
  - $\text{rejected\_reward}_i = \beta \cdot (\pi_\theta(y_i^- | x_i) - \pi_{ref}(y_i^- | x_i))$

- Evaluation metrics:
  - Mean chosen reward: $\frac{1}{N} \sum_{i=1}^N \text{chosen\_reward}_i$
  - Mean rejected reward: $\frac{1}{N} \sum_{i=1}^N \text{rejected\_reward}_i$
  - Reward accuracy: $\frac{1}{N} \sum_{i=1}^N \mathbb{1}[\text{chosen\_reward}_i > \text{rejected\_reward}_i]$
  - Reward margin: $\frac{1}{N} \sum_{i=1}^N (\text{chosen\_reward}_i - \text{rejected\_reward}_i)$
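The recap above maps almost line by line onto code. The following is a minimal sketch in plain Python on scalar log-probabilities; it is meant to illustrate the formulas, not to mirror the DPOTrainer source. The function names, the default beta=0.1, and the toy log-probability values are assumptions made for the example.

```python
import math
from typing import Tuple


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_sigmoid_loss(pi_chosen: float, pi_rejected: float,
                     ref_chosen: float, ref_rejected: float,
                     beta: float = 0.1,
                     label_smoothing: float = 0.0) -> Tuple[float, float, float]:
    """Return (loss, chosen_reward, rejected_reward) for one sample.

    All four inputs are sequence log-probabilities log P(y | x).
    """
    r_pos = pi_chosen - ref_chosen      # r_i^+
    r_neg = pi_rejected - ref_rejected  # r_i^-
    z = beta * (r_pos - r_neg)
    # log(1 - sigmoid(z)) equals log_sigmoid(-z).
    loss = (-log_sigmoid(z) * (1 - label_smoothing)
            - log_sigmoid(-z) * label_smoothing)
    return loss, beta * r_pos, beta * r_neg


# Toy values: the policy raised the chosen answer and lowered the rejected one.
loss, chosen_reward, rejected_reward = dpo_sigmoid_loss(
    pi_chosen=-10.0, pi_rejected=-15.0, ref_chosen=-12.0, ref_rejected=-13.0
)
print(loss, chosen_reward, rejected_reward)
print("margin:", chosen_reward - rejected_reward)
print("correct:", chosen_reward > rejected_reward)
```

When the policy equals the reference model, both ratios are zero and the loss is $\log 2$, which is the value the loss curve starts from at the beginning of DPO training.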

DPODataCollatorWithPadding & training

data_collator = DPODataCollatorWithPadding(
    pad_token_id=self.tokenizer.pad_token_id,  # 2
    label_pad_token_id=args.label_pad_token_id,  # -100
    is_encoder_decoder=self.is_encoder_decoder,  # False
)

- DPO DataCollator class that pads the tokenized inputs to the maximum length of the batch.

- prompt_input_ids, chosen_input_ids, rejected_input_ids

- chosen_labels, rejected_labels

- prompt_attention_mask, chosen_attention_mask, rejected_attention_mask

- concatenated_input_ids, concatenated_attention_mask

- input_ids: (prompt + chosen), labels: chosen

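- input_ids: (prompt + rejected), labels: rejected

- One triple (question + good answer + bad answer) is therefore split into two records: one is prompt + chosen, the other is prompt + rejected.

- The loss is defined only on the answer tokens; no loss is computed on the prompt.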
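The way one triple becomes two padded rows can be sketched as follows. This is a simplified illustration with plain lists and right padding, assuming pad_token_id=2 and label_pad_token_id=-100 as in the collator call above. The helper names and toy token ids are hypothetical, and the real collator handles more fields.

```python
from typing import Dict, List

LABEL_PAD = -100  # positions carrying this label are ignored by the loss


def build_dpo_rows(prompt: List[int], chosen: List[int],
                   rejected: List[int]) -> List[Dict[str, List[int]]]:
    """Split one (prompt, chosen, rejected) triple into two records."""
    rows = []
    for completion in (chosen, rejected):
        rows.append({
            "input_ids": prompt + completion,
            # Mask the prompt so that only answer tokens contribute.
            "labels": [LABEL_PAD] * len(prompt) + completion,
        })
    return rows


def pad_rows(rows: List[Dict[str, List[int]]],
             pad_token_id: int) -> List[Dict[str, List[int]]]:
    """Right-pad every row to the longest row in the batch."""
    max_len = max(len(r["input_ids"]) for r in rows)
    padded = []
    for r in rows:
        n_pad = max_len - len(r["input_ids"])
        padded.append({
            "input_ids": r["input_ids"] + [pad_token_id] * n_pad,
            "labels": r["labels"] + [LABEL_PAD] * n_pad,
            "attention_mask": [1] * len(r["input_ids"]) + [0] * n_pad,
        })
    return padded


batch = pad_rows(build_dpo_rows([5, 6], [7, 8, 9], [10]), pad_token_id=2)
for row in batch:
    print(row)
```

Stacking the chosen rows first and the rejected rows second is what allows a single forward pass whose outputs are later split at len_chosen.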

outputs = model(
    concatenated_batch["concatenated_input_ids"],
    attention_mask=concatenated_batch["concatenated_attention_mask"],
    use_cache=False,
    **model_kwargs,
)
all_logits = outputs.logits
...
all_logps, size_completion = self.get_batch_logps(
    all_logits,
    concatenated_batch["concatenated_labels"],
    # average_log_prob=self.loss_type == "ipo",
    is_encoder_decoder=self.is_encoder_decoder,
    label_pad_token_id=self.label_pad_token_id,
)
...
labels = concatenated_batch["concatenated_labels"].clone()
nll_loss = cross_entropy_loss(all_logits[:len_chosen], labels[:len_chosen])

if self.loss_type == "ipo":
    all_logps = all_logps / size_completion

# the first len_chosen rows are prompt + chosen, the rest are prompt + rejected
chosen_logps = all_logps[:len_chosen]
rejected_logps = all_logps[len_chosen:]

chosen_logits = all_logits[:len_chosen]
rejected_logits = all_logits[len_chosen:]
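What a per-sequence log-probability such as all_logps amounts to can be sketched without any tensor library. The version below is an illustration under stated assumptions, not the get_batch_logps source: it handles one decoder-only sequence, shifts labels by one position so that position t predicts token t+1, skips labels equal to -100, and returns both the summed log-probability and the number of completion tokens. The function names and toy inputs are hypothetical.

```python
import math
from typing import List, Tuple


def log_softmax(logits: List[float]) -> List[float]:
    """Log-probabilities over the vocabulary for one position."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def sequence_logp(logits: List[List[float]], labels: List[int],
                  label_pad_token_id: int = -100) -> Tuple[float, int]:
    """Sum log P(token) over unmasked labels; also return their count.

    logits has shape [seq_len][vocab_size], labels has shape [seq_len].
    """
    total, count = 0.0, 0
    # Shift: the logits at position t score the label at position t + 1.
    for step_logits, target in zip(logits[:-1], labels[1:]):
        if target == label_pad_token_id:
            continue  # prompt or padding position, no contribution
        total += log_softmax(step_logits)[target]
        count += 1
    return total, count


# Uniform logits over a 4-token vocabulary, two prompt tokens masked out.
toy_logits = [[0.0, 0.0, 0.0, 0.0] for _ in range(4)]
toy_labels = [-100, -100, 1, 3]
logp, size_completion = sequence_logp(toy_logits, toy_labels)
print(logp, size_completion)
```

Dividing logp by size_completion gives the length-normalized value used in the "ipo" branch above.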

Small tricks

- Use a small subset of the dataset for quick testing and debugging:

if sanity_check:
    dataset = dataset.select(range(min(len(dataset), 1000)))