Contents
1. Competition background
2. Baseline
   2.1 Folder structure
   2.2 Demo
       1. 01_train_test_split.py
       2. 02_tf2_mobilev2_classes.py
       3. 03_predict.py
3. Problems and improvements
4. Change log

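Competition link · Training set · Test set · Validation set

1. [Problem description] Behavioral norms are restrictions that particular settings place on what people may do: no smoking at gas stations, no phone calls while driving, no photography in museums, and so on. With advances in artificial intelligence, such prohibited or uncivil behaviors can be monitored and detected by behavior detection algorithms running on video surveillance, with a warning issued when appropriate. Based on such data, this task asks participants to design computer vision algorithms that recognize people who are smoking or making phone calls. The difficulty is that the people in the dataset are of limited sharpness and vary in pixel size. Participants are encouraged to try different image algorithms and techniques to improve recognition accuracy for both behaviors.

2. [Task]
Preliminary round: participants can download part of the dataset, design an algorithm, and train a model. The output must label each person in an image with one of three behaviors: smoking, calling, or normal (a pedestrian who is neither smoking nor calling).
Semi-final round: participants can download the full dataset, which includes the preliminary data, and use the additional data to optimize their preliminary algorithm and improve accuracy. Unlike the preliminary round, the semi-final dataset contains a small number of images of people who are smoking and calling at the same time, so the output must label one of four behaviors: smoking, calling, smoking & calling, or normal.
Final: teams defend their work on site, and the final list of winners is confirmed.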

1. Initial file structure

2. Final folder structure

"""

https://blog.csdn.net/u010420283/article/details/90142480

Split a training set that contains three class folders into a training set plus a validation set. The ratio is configurable (20% here).

"""

import os
import random
import shutil


def moveFile(fileDir, tarDir, ratio):
    # Move a random `ratio` fraction of the files in fileDir into tarDir.
    pathDir = os.listdir(fileDir)
    filenumber = len(pathDir)
    picknumber = int(filenumber * ratio)
    sample = random.sample(pathDir, picknumber)
    for name in sample:
        shutil.move(os.path.join(fileDir, name), os.path.join(tarDir, name))


if __name__ == '__main__':
    ori_path = r'C:\Users\hjz\python-project\project\03_smoking_calling\data\train'
    split_Dir = r'C:\Users\hjz\python-project\project\03_smoking_calling\data\val'
    ratio = 0.2
    # Each sub-folder of the training set is one class.
    for firstPath in os.listdir(ori_path):
        fileDir = os.path.join(ori_path, firstPath)
        tarDir = os.path.join(split_Dir, firstPath)
        os.makedirs(tarDir, exist_ok=True)
        moveFile(fileDir, tarDir, ratio)
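The split above draws a different random sample on every run. A minimal sketch of a reproducible variant follows, assuming a fixed seed is acceptable. The helper `pick_validation_files` and the seed value are hypothetical names introduced here for illustration and are not part of the original script. It only selects filenames; moving the files would still be done with `shutil.move` as above.

```python
import random


def pick_validation_files(filenames, ratio=0.2, seed=42):
    """Return (train, val) filename lists; val holds int(len * ratio) items."""
    # Sort first so the result does not depend on os.listdir() ordering.
    names = sorted(filenames)
    rng = random.Random(seed)  # local RNG: leaves the global random state alone
    val = set(rng.sample(names, int(len(names) * ratio)))
    train = [n for n in names if n not in val]
    return train, sorted(val)


train_files, val_files = pick_validation_files([f"img_{i}.jpg" for i in range(10)])
print(len(train_files), len(val_files))  # 8 2
```

Calling the function twice with the same seed returns the same validation files, which makes later experiments comparable.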

import matplotlib.pyplot as plt
import numpy as np
import os
import tensorflow as tf
from tensorflow.keras.preprocessing import image_dataset_from_directory

train_normal = r"C:\Users\hjz\python-project\project\03_smoking_calling\data\train\normal"
train_phone = r"C:\Users\hjz\python-project\project\03_smoking_calling\data\train\phone"
train_smoke = r"C:\Users\hjz\python-project\project\03_smoking_calling\data\train\smoke"

train_dir = r"C:\Users\hjz\python-project\project\03_smoking_calling\data\train"
val_dir = r"C:\Users\hjz\python-project\project\03_smoking_calling\data\val"

val_normal = r"C:\Users\hjz\python-project\project\03_smoking_calling\data\val\normal"
val_phone = r"C:\Users\hjz\python-project\project\03_smoking_calling\data\val\phone"
val_smoke = r"C:\Users\hjz\python-project\project\03_smoking_calling\data\val\smoke"

test_dir = r"C:\Users\hjz\python-project\project\03_smoking_calling\data\test"

# Count the images per class in each split.
train_normal_num = len(os.listdir(train_normal))
train_phone_num = len(os.listdir(train_phone))
train_smoke_num = len(os.listdir(train_smoke))

val_normal_num = len(os.listdir(val_normal))
val_phone_num = len(os.listdir(val_phone))
val_smoke_num = len(os.listdir(val_smoke))

train_all = train_normal_num + train_phone_num + train_smoke_num
val_all = val_normal_num + val_phone_num + val_smoke_num
test_num = len(os.listdir(test_dir))

print("train normal number: ", train_normal_num)
print("train phone_num: ", train_phone_num)
print("train_smoke_num: ", train_smoke_num)
print("all train images: ", train_all)

print("val normal number: ", val_normal_num)
print("val phone_num: ", val_phone_num)
print("val_smoke_num: ", val_smoke_num)
print("all val images: ", val_all)

print("all test images: ", test_num)

batch_size = 32
epochs = 500
height = 150
width = 150

# Training generator: rescale pixels to [0, 1] and apply random augmentation.
image_gen_train = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1. / 255,
    rotation_range=45,
    width_shift_range=.15,
    height_shift_range=.15,
    horizontal_flip=True,
    zoom_range=0.5,
)
train_data_gen = image_gen_train.flow_from_directory(
    batch_size=batch_size,
    directory=train_dir,
    shuffle=True,
    target_size=(height, width),
    class_mode="categorical",
)

# Validation generator: rescale only, no augmentation.
image_gen_val = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1. / 255)
val_data_gen = image_gen_val.flow_from_directory(
    batch_size=batch_size,
    directory=val_dir,
    target_size=(height, width),
    class_mode="categorical",
)

# A small CNN: three Conv2D/MaxPooling2D stages and a dense classifier head.
model_new = tf.keras.Sequential([
    tf.keras.layers.Conv2D(16, 3, padding="same", activation="relu",
                           input_shape=(height, width, 3)),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Conv2D(32, 3, padding="same", activation="relu"),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(64, 3, padding="same", activation="relu"),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(512, activation="relu"),
    tf.keras.layers.Dense(3, activation="softmax"),
])

model_new.compile(
    optimizer="adam",
    loss=tf.keras.losses.CategoricalCrossentropy(from_logits=False),
    metrics=["accuracy"],
)
model_new.summary()

# Stop when val_accuracy has not improved by at least 0.0001 for 5 epochs.
callback = tf.keras.callbacks.EarlyStopping(
    monitor='val_accuracy',
    min_delta=0.0001,
    patience=5,
)

checkpoint_path = "saved_model/best.ckpt"
checkpoint_dir = os.path.dirname(checkpoint_path)

# Keep only the best model seen so far.
cp_callback = tf.keras.callbacks.ModelCheckpoint(
    filepath=checkpoint_path,
    verbose=1,
    save_weights_only=False,
    save_best_only=True,
)

logdir = "./model"
# No trailing comma here: it would turn tb_callback into a tuple.
tb_callback = tf.keras.callbacks.TensorBoard(log_dir=logdir)

log_callback = tf.keras.callbacks.CSVLogger(
    "training_log",
    separator=',',
    append=False,
)

# Model.fit accepts generators directly; fit_generator is deprecated since TF 2.1.
history_new = model_new.fit(
    train_data_gen,
    steps_per_epoch=train_all // batch_size,
    epochs=epochs,
    validation_data=val_data_gen,
    validation_steps=val_all // batch_size,
    callbacks=[callback, cp_callback, tb_callback, log_callback],
)

model_new.save('saved_model/model_2')
print("New model saved successfully!")

train normal number: 1915

train phone_num: 1768

train_smoke_num: 1238

all train images: 4921

val normal number: 478

val phone_num: 442

val_smoke_num: 309

all val images: 1229

all test images: 1500

Found 4921 images belonging to 3 classes.

Found 1229 images belonging to 3 classes.

Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d (Conv2D)              (None, 150, 150, 16)      448
_________________________________________________________________
max_pooling2d (MaxPooling2D) (None, 75, 75, 16)        0
_________________________________________________________________
dropout (Dropout)            (None, 75, 75, 16)        0
_________________________________________________________________
conv2d_1 (Conv2D)            (None, 75, 75, 32)        4640
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 37, 37, 32)        0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 37, 37, 64)        18496
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 18, 18, 64)        0
_________________________________________________________________
dropout_1 (Dropout)          (None, 18, 18, 64)        0
_________________________________________________________________
flatten (Flatten)            (None, 20736)             0
_________________________________________________________________
dense (Dense)                (None, 512)               10617344
_________________________________________________________________
dense_1 (Dense)              (None, 3)                 1539
=================================================================
Total params: 10,642,467
Trainable params: 10,642,467
Non-trainable params: 0
_________________________________________________________________
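The parameter counts in the summary above can be checked by hand. A Conv2D layer with a k×k kernel has (k·k·c_in + 1)·c_out parameters, and a Dense layer has (n_in + 1)·n_out. The helper names below are introduced here for illustration only.

```python
def conv2d_params(kernel, c_in, c_out):
    # kernel*kernel*c_in weights per filter, plus one bias per filter
    return (kernel * kernel * c_in + 1) * c_out


def dense_params(n_in, n_out):
    # one weight per input-output pair, plus one bias per output unit
    return (n_in + 1) * n_out


layers = [
    conv2d_params(3, 3, 16),          # conv2d
    conv2d_params(3, 16, 32),         # conv2d_1
    conv2d_params(3, 32, 64),         # conv2d_2
    dense_params(18 * 18 * 64, 512),  # dense, fed by the 20736-wide Flatten
    dense_params(512, 3),             # dense_1
]
print(layers)       # [448, 4640, 18496, 10617344, 1539]
print(sum(layers))  # 10642467
```

Almost all of the parameters sit in the first Dense layer, because it is connected to every one of the 20736 flattened features.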

Epoch 1/10
  19/2460 [..............................] - ETA: 6:38 - loss: 1.6014 - accuracy: 0.3947

Epoch 5/500
2460/2460 [==============================] - ETA: 0s - loss: 0.9556 - accuracy: 0.5471

Epoch 00005: saving model to saved_model\cp-0005.ckpt ........

Process finished with exit code -1
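With `steps_per_epoch=train_all // batch_size`, the number of steps per epoch follows directly from the image counts printed at the top of the log. The quick check below uses only numbers from that output. The remark about batch size 2 is an inference from the arithmetic, not something the source states.

```python
train_all, val_all = 4921, 1229
batch_size = 32

print(train_all // batch_size)  # 153 training steps per epoch
print(val_all // batch_size)    # 38 validation steps per epoch

# The progress bar in the log shows 2460 steps per epoch, which would
# correspond to a batch size of 2 rather than 32.
print(train_all // 2)           # 2460
```

Integer division drops the last partial batch, so with a batch size of 32 the final 25 training images are not counted in the step total for an epoch.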

""""

Load the model, run predictions, and write the results as JSON.

"""

import cv2
import tensorflow as tf
import numpy as np
from tensorflow import keras
import os
import json

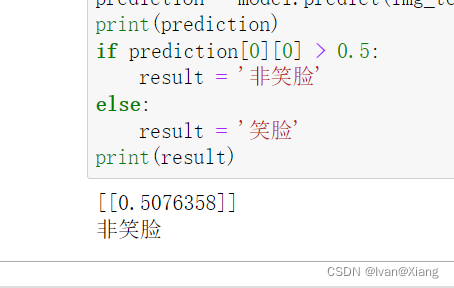

from tensorflow.keras.preprocessing import image
from tqdm import tqdm

model = tf.keras.models.load_model('saved_model/model_2')

height = 150
width = 150

train_path = r"C:\Users\hjz\python-project\project\03_smoking_calling\02_tf2_somking_calling\data\train"
# Read the class names (the sorted folder names) from the training directory.
train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    train_path,
    image_size=(height, width),
)
class_names = train_ds.class_names
print(class_names)

submission = []
test_base = './data/test'
test_data = []
for img in os.listdir(test_base):
    test_data.append(os.path.join(test_base, img))
print('test_num:', len(test_data))

labels = sorted(os.listdir('./data/train'))
print(labels)

for im_pt in tqdm(test_data):
    value = {"image_name": None, "category": None, "score": None}
    img = keras.preprocessing.image.load_img(im_pt, target_size=(height, width))
    img_array = keras.preprocessing.image.img_to_array(img)
    # The training generator rescaled pixels by 1/255, so do the same here.
    img_array = img_array / 255.0
    img_array = tf.expand_dims(img_array, 0)  # add the batch dimension
    predictions = model.predict(img_array)
    score = predictions[0]
    value['category'] = class_names[np.argmax(score)]
    value['score'] = str(np.max(score))
    # basename works with both '/' and '\\' path separators.
    value['image_name'] = os.path.basename(im_pt)
    submission.append(value)

with open('result.json', 'w') as f:
    json.dump(submission, f)
print('done')
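Each entry written to result.json has the three keys used above: `image_name`, `category`, and `score`. The sketch below shows the same mapping from a score vector to one entry in plain Python, without TensorFlow. The helper `make_entry`, the file name, and the scores are made up for illustration. The class names are the training folder names from this post, which may differ from the label names the competition requires.

```python
import json
import os


def make_entry(image_path, scores, class_names):
    """Build one submission record from a per-class score vector."""
    best = max(range(len(scores)), key=lambda i: scores[i])  # argmax
    return {
        "image_name": os.path.basename(image_path),
        "category": class_names[best],
        "score": str(scores[best]),
    }


class_names = ["normal", "phone", "smoke"]  # sorted training folder names
entry = make_entry("./data/test/0001.jpg", [0.1, 0.7, 0.2], class_names)
print(json.dumps(entry))
# {"image_name": "0001.jpg", "category": "phone", "score": "0.7"}
```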

1. The model is too simple; it should be replaced with a network such as ResNet or EfficientNet.

2. The hyperparameters have not been tuned.
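One common way to move to a stronger network is transfer learning from a pretrained backbone. The sketch below is not the author's method. It assumes MobileNetV2 from `tf.keras.applications` as the backbone, chosen here only because the training script's filename mentions it; `ResNet50` or `EfficientNetB0` from the same module could be swapped in, each with its own `preprocess_input`. The function name `build_transfer_model` and the dropout rate are illustrative choices.

```python
import tensorflow as tf


def build_transfer_model(height=150, width=150, num_classes=3, weights="imagenet"):
    """Frozen MobileNetV2 backbone with a small softmax classification head."""
    base = tf.keras.applications.MobileNetV2(
        input_shape=(height, width, 3),
        include_top=False,  # drop the 1000-class ImageNet head
        weights=weights,    # pass None to skip the pretrained-weight download
    )
    base.trainable = False  # train only the new head at first

    inputs = tf.keras.Input(shape=(height, width, 3))
    # MobileNetV2 expects inputs in [-1, 1]; this maps raw 0-255 pixels there,
    # so the data generator should not also apply rescale=1/255 for this model.
    x = tf.keras.applications.mobilenet_v2.preprocess_input(inputs)
    x = base(x, training=False)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dropout(0.2)(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

With this design only the final Dense layer is trained, which keeps training fast on a dataset of a few thousand images. Once the head has converged, the top layers of the backbone can be unfrozen and fine-tuned with a lower learning rate.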

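1. Renamed the labels to meet the submission requirements.

2. Switched from TF 2.1, for which material was hard to find, to TF 2.3 (documented on the official site).

3. Most prediction scores were 1, so the metric passed at compile time was changed: model_new.compile(optimizer="adam", loss=tf.keras.losses.CategoricalCrossentropy(from_logits=False), metrics=["CategoricalAccuracy"])

4. The online score is about 85%.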