Multi-GPU training

I have recently been running the RT-DETR model from the yolov10 codebase for object detection.

Single-GPU training command (runs fine):

python main.py

Multi-GPU training command:

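Multi-GPU training has to be started through the PyTorch distributed launcher (torch.distributed.run, which replaces the older torch.distributed.launch), usually on a single node. CUDA_VISIBLE_DEVICES selects which GPU IDs to use; it is optional, since you can also specify the device directly in main.py. --nproc_per_node sets the number of processes per node, normally one per GPU.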

python -m torch.distributed.run --nproc_per_node=3 main.py

CUDA_VISIBLE_DEVICES=0,6,7 python -m torch.distributed.run --nproc_per_node=3 main.py
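How the GPU mask and the per-process rank fit together can be sketched as follows. This is only an illustration: `masked_device_id` is a hypothetical helper, and it assumes CUDA_VISIBLE_DEVICES holds a comma-separated list of integer IDs. The launcher sets LOCAL_RANK for each process, and inside a process the visible GPUs are renumbered from 0.

```python
import os


def masked_device_id(local_rank, env=None):
    """Return the GPU ID from CUDA_VISIBLE_DEVICES that a given LOCAL_RANK ends up on.

    Hypothetical helper; assumes the variable is a comma-separated list of integer IDs.
    """
    env = os.environ if env is None else env
    visible = env.get("CUDA_VISIBLE_DEVICES", "").strip()
    if not visible:
        return local_rank  # no mask set: logical index and listed ID coincide
    ids = [int(tok) for tok in visible.split(",") if tok.strip()]
    return ids[local_rank]


# With CUDA_VISIBLE_DEVICES=0,6,7 and --nproc_per_node=3, three processes are
# started with LOCAL_RANK 0, 1, 2; each sees the GPUs as cuda:0, cuda:1, cuda:2.
env = {"CUDA_VISIBLE_DEVICES": "0,6,7"}
for local_rank in range(3):
    gpu = masked_device_id(local_rank, env)
    print(f"LOCAL_RANK={local_rank} -> cuda:{local_rank} -> listed GPU {gpu}")
```

This is why the number of IDs in CUDA_VISIBLE_DEVICES should be at least the value given to --nproc_per_node.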

However, the multi-GPU run fails with an error, and sometimes the training processes simply hang. The error output is as follows:

[rank0]: Traceback (most recent call last):

[rank0]: File "/home/zyy23/yolov10/run_detr.py", line 5, in <module>

[rank0]: model.train(pretrained=True,

[rank0]: File "/home/zyy23/yolov10/ultralytics/engine/model.py", line 657, in train

[rank0]: self.trainer.train()

[rank0]: File "/home/zyy23/yolov10/ultralytics/engine/trainer.py", line 213, in train

[rank0]: self._do_train(world_size)

[rank0]: File "/home/zyy23/yolov10/ultralytics/engine/trainer.py", line 381, in _do_train

[rank0]: self.loss, self.loss_items = self.model(batch)

[rank0]: File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1553, in _wrapped_call_impl

[rank0]: return self._call_impl(*args, **kwargs)

[rank0]: File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1562, in _call_impl

[rank0]: return forward_call(*args, **kwargs)

[rank0]: File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/nn/parallel/distributed.py", line 1632, in forward

[rank0]: inputs, kwargs = self._pre_forward(*inputs, **kwargs)

[rank0]: File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/nn/parallel/distributed.py", line 1523, in _pre_forward

[rank0]: if torch.is_grad_enabled() and self.reducer._rebuild_buckets():

[rank0]: RuntimeError: Expected to have finished reduction in the prior iteration before starting a new one. This error indicates that your module has parameters that were not used in producing loss. You can enable unused parameter detection by passing the keyword argument `find_unused_parameters=True` to `torch.nn.parallel.DistributedDataParallel`, and by

[rank0]: making sure all `forward` function outputs participate in calculating loss.

[rank0]: If you already have done the above, then the distributed data parallel module wasn't able to locate the output tensors in the return value of your module's `forward` function. Please include the loss function and the structure of the return value of `forward` of your module when reporting this issue (e.g. list, dict, iterable).

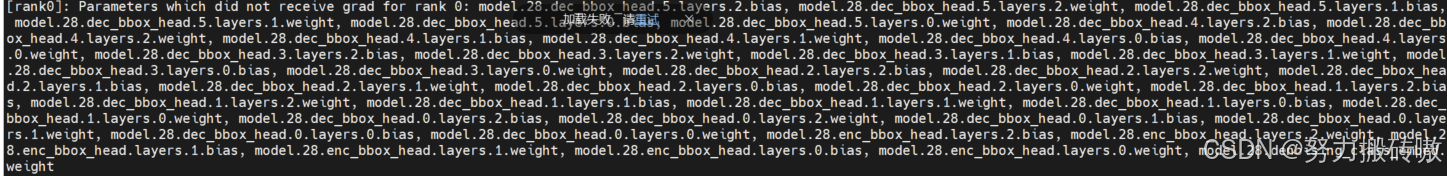

[rank0]: Parameters which did not receive grad for rank 0: model.28.dec_bbox_head.5.layers.2.bias, model.28.dec_bbox_head.5.layers.2.weight, model.28.dec_bbox_head.5.layers.1.bias, model.28.dec_bbox_head.5.layers.1.weight, model.28.dec_bbox_head.5.layers.0.bias, model.28.dec_bbox_head.5.layers.0.weight, model.28.dec_bbox_head.4.layers.2.bias, model.28.dec_bbox_head.4.layers.2.weight, model.28.dec_bbox_head.4.layers.1.bias, model.28.dec_bbox_head.4.layers.1.weight, model.28.dec_bbox_head.4.layers.0.bias, model.28.dec_bbox_head.4.layers.0.weight, model.28.dec_bbox_head.3.layers.2.bias, model.28.dec_bbox_head.3.layers.2.weight, model.28.dec_bbox_head.3.layers.1.bias, model.28.dec_bbox_head.3.layers.1.weight, model.28.dec_bbox_head.3.layers.0.bias, model.28.dec_bbox_head.3.layers.0.weight, model.28.dec_bbox_head.2.layers.2.bias, model.28.dec_bbox_head.2.layers.2.weight, model.28.dec_bbox_head.2.layers.1.bias, model.28.dec_bbox_head.2.layers.1.weight, model.28.dec_bbox_head.2.layers.0.bias, model.28.dec_bbox_head.2.layers.0.weight, model.28.dec_bbox_head.1.layers.2.bias, model.28.dec_bbox_head.1.layers.2.weight, model.28.dec_bbox_head.1.layers.1.bias, model.28.dec_bbox_head.1.layers.1.weight, model.28.dec_bbox_head.1.layers.0.bias, model.28.dec_bbox_head.1.layers.0.weight, model.28.dec_bbox_head.0.layers.2.bias, model.28.dec_bbox_head.0.layers.2.weight, model.28.dec_bbox_head.0.layers.1.bias, model.28.dec_bbox_head.0.layers.1.weight, model.28.dec_bbox_head.0.layers.0.bias, model.28.dec_bbox_head.0.layers.0.weight, model.28.enc_bbox_head.layers.2.bias, model.28.enc_bbox_head.layers.2.weight, model.28.enc_bbox_head.layers.1.bias, model.28.enc_bbox_head.layers.1.weight, model.28.enc_bbox_head.layers.0.bias, model.28.enc_bbox_head.layers.0.weight, model.28.denoising_class_embed.weight

[rank0]: Parameter indices which did not receive grad for rank 0: 510 521 522 523 524 525 526 539 540 541 542 543 544 545 546 547 548 549 550 551 552 553 554 555 556 557 558 559 560 561 562 563 564 565 566 567 568 569 570 571 572 573 574

[rank1]:[E1122 21:12:02.018431947 ProcessGroupGloo.cpp:143] Rank 1 successfully reached monitoredBarrier, but received errors while waiting for send/recv from rank 0. Please check rank 0 logs for faulty rank.

[rank2]:[E1122 21:12:02.018445283 ProcessGroupGloo.cpp:143] Rank 2 successfully reached monitoredBarrier, but received errors while waiting for send/recv from rank 0. Please check rank 0 logs for faulty rank.

[rank1]: Traceback (most recent call last):

[rank1]: File "/home/zyy23/yolov10/run_detr.py", line 5, in <module>

[rank1]: model.train(pretrained=True,

[rank1]: File "/home/zyy23/yolov10/ultralytics/engine/model.py", line 657, in train

[rank1]: self.trainer.train()

[rank1]: File "/home/zyy23/yolov10/ultralytics/engine/trainer.py", line 213, in train

[rank1]: self._do_train(world_size)

[rank1]: File "/home/zyy23/yolov10/ultralytics/engine/trainer.py", line 389, in _do_train

[rank1]: self.scaler.scale(self.loss).backward()

[rank1]: File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/_tensor.py", line 521, in backward

[rank1]: torch.autograd.backward(

[rank1]: File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/autograd/__init__.py", line 289, in backward

[rank1]: _engine_run_backward(

[rank1]: File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/autograd/graph.py", line 768, in _engine_run_backward

[rank1]: return Variable._execution_engine.run_backward( # Calls into the C++ engine to run the backward pass

[rank1]: RuntimeError: Rank 1 successfully reached monitoredBarrier, but received errors while waiting for send/recv from rank 0. Please check rank 0 logs for faulty rank.

[rank1]: Original exception:

[rank1]: [../third_party/gloo/gloo/transport/tcp/pair.cc:534] Connection closed by peer [127.0.1.1]:27022

[rank2]: Traceback (most recent call last):

[rank2]: File "/home/zyy23/yolov10/run_detr.py", line 5, in <module>

[rank2]: model.train(pretrained=True,

[rank2]: File "/home/zyy23/yolov10/ultralytics/engine/model.py", line 657, in train

[rank2]: self.trainer.train()

[rank2]: File "/home/zyy23/yolov10/ultralytics/engine/trainer.py", line 213, in train

[rank2]: self._do_train(world_size)

[rank2]: File "/home/zyy23/yolov10/ultralytics/engine/trainer.py", line 389, in _do_train

[rank2]: self.scaler.scale(self.loss).backward()

[rank2]: File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/_tensor.py", line 521, in backward

[rank2]: torch.autograd.backward(

[rank2]: File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/autograd/__init__.py", line 289, in backward

[rank2]: _engine_run_backward(

[rank2]: File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/autograd/graph.py", line 768, in _engine_run_backward

[rank2]: return Variable._execution_engine.run_backward( # Calls into the C++ engine to run the backward pass

[rank2]: RuntimeError: Rank 2 successfully reached monitoredBarrier, but received errors while waiting for send/recv from rank 0. Please check rank 0 logs for faulty rank.

[rank2]: Original exception:

[rank2]: [../third_party/gloo/gloo/transport/tcp/pair.cc:534] Connection closed by peer [127.0.1.1]:27022

W1122 21:12:02.606069 139664836297920 torch/distributed/elastic/multiprocessing/api.py:858] Sending process 1281666 closing signal SIGTERM

W1122 21:12:02.608416 139664836297920 torch/distributed/elastic/multiprocessing/api.py:858] Sending process 1281667 closing signal SIGTERM

E1122 21:12:02.987694 139664836297920 torch/distributed/elastic/multiprocessing/api.py:833] failed (exitcode: 1) local_rank: 0 (pid: 1281665) of binary: /home/zyy23/anaconda3/envs/mypytorch_3.9/bin/python

Traceback (most recent call last):

File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/runpy.py", line 197, in _run_module_as_main

return _run_code(code, main_globals, None,

File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/runpy.py", line 87, in _run_code

exec(code, run_globals)

File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/distributed/run.py", line 905, in <module>

main()

File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/distributed/elastic/multiprocessing/errors/__init__.py", line 348, in wrapper

return f(*args, **kwargs)

File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/distributed/run.py", line 901, in main

run(args)

File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/distributed/run.py", line 892, in run

elastic_launch(

File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/distributed/launcher/api.py", line 133, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "/home/zyy23/anaconda3/envs/mypytorch_3.9/lib/python3.9/site-packages/torch/distributed/launcher/api.py", line 264, in launch_agent

raise ChildFailedError(

torch.distributed.elastic.multiprocessing.errors.ChildFailedError:

============================================================

run_detr.py FAILED

------------------------------------------------------------

Failures:

<NO_OTHER_FAILURES>

------------------------------------------------------------

Root Cause (first observed failure):

[0]:

time : 2024-11-22_21:12:02

host : lab10

rank : 0 (local_rank: 0)

exitcode : 1 (pid: 1281665)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

The root cause is a RuntimeError:

RuntimeError: Expected to have finished reduction in the prior iteration before starting a new one. This error indicates that your module has parameters that were not used in producing loss. You can enable unused parameter detection by passing the keyword argument `find_unused_parameters=True` to `torch.nn.parallel.DistributedDataParallel`, and by making sure all `forward` function outputs participate in calculating loss. If you already have done the above, then the distributed data parallel module wasn't able to locate the output tensors in the return value of your module's `forward` function. Please include the loss function and the structure of the return value of `forward` of your module when reporting this issue (e.g. list, dict, iterable).

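In plain terms: DDP expected the gradient reduction of the previous iteration to have finished before a new iteration started. The error means that the module has parameters that were not used to produce the loss. You can (1) pass find_unused_parameters=True to torch.nn.parallel.DistributedDataParallel to enable unused-parameter detection, and (2) make sure every output of forward takes part in computing the loss. If both are already done, DDP was unable to locate the output tensors in the return value of forward; when reporting the issue, include the loss function and the structure of forward's return value (e.g. list, dict, iterable).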

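Causes of the error:

- A layer is defined in the network but never used in forward().

- Values returned by forward() are not used in the gradient computation.

- Parameters that never receive gradients are handed to the optimizer.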

There are two ways to deal with it.

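The first is to pass find_unused_parameters=True when constructing torch.nn.parallel.DistributedDataParallel. find_unused_parameters is a DDP constructor argument that tells DDP to look for parameters that will not receive a gradient in the current iteration, so that gradient synchronization does not wait for them.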

self.model = nn.parallel.DistributedDataParallel(self.model, device_ids=[RANK], find_unused_parameters=True)

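What find_unused_parameters does:

Detects unused parameters: when set to True, after each forward pass DDP traverses the autograd graph from the outputs of forward and marks the parameters that did not take part in it. This is useful for models in which some parts may not be triggered for particular inputs.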

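Avoids waiting on gradients that never arrive: parameters marked as unused are treated as ready for reduction immediately, so gradient synchronization can complete even though backward never produces a gradient for them. This is a correctness feature rather than an optimization; it does not reduce memory use or speed up training.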

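Suits dynamic computation graphs: for model architectures whose executed branches change between iterations, find_unused_parameters=True ensures that all parameters are handled correctly.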

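However, it adds extra computation, because DDP has to work out in every iteration which parameters are unused and do not contribute to the loss. It is therefore best to enable it only when needed, that is, when the model really does contain unused parameters.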

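Because yolov10 is so heavily wrapped, I could not find where this statement lives for a long time. I also tried adding the argument at model initialization and on the command line, without success. I eventually found it in trainer.py under ultralytics/engine, inside the _setup_train function.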

def _setup_train(self, world_size):
    """Builds dataloaders and optimizer on correct rank process."""

    # Model
    self.run_callbacks("on_pretrain_routine_start")
    ckpt = self.setup_model()
    self.model = self.model.to(self.device)
    self.set_model_attributes()

    # Freeze layers
    freeze_list = (
        self.args.freeze
        if isinstance(self.args.freeze, list)
        else range(self.args.freeze)
        if isinstance(self.args.freeze, int)
        else []
    )
    always_freeze_names = [".dfl"]  # always freeze these layers
    freeze_layer_names = [f"model.{x}." for x in freeze_list] + always_freeze_names
    for k, v in self.model.named_parameters():
        # v.register_hook(lambda x: torch.nan_to_num(x))  # NaN to 0 (commented for erratic training results)
        if any(x in k for x in freeze_layer_names):
            LOGGER.info(f"Freezing layer '{k}'")
            v.requires_grad = False
        elif not v.requires_grad and v.dtype.is_floating_point:  # only floating point Tensor can require gradients
            LOGGER.info(
                f"WARNING ⚠️ setting 'requires_grad=True' for frozen layer '{k}'. "
                "See ultralytics.engine.trainer for customization of frozen layers."
            )
            v.requires_grad = True

    # Check AMP
    self.amp = torch.tensor(self.args.amp).to(self.device)  # True or False
    if self.amp and RANK in (-1, 0):  # Single-GPU and DDP
        callbacks_backup = callbacks.default_callbacks.copy()  # backup callbacks as check_amp() resets them
        self.amp = torch.tensor(check_amp(self.model), device=self.device)
        callbacks.default_callbacks = callbacks_backup  # restore callbacks
    if RANK > -1 and world_size > 1:  # DDP
        dist.broadcast(self.amp, src=0)  # broadcast the tensor from rank 0 to all other ranks (returns None)
    self.amp = bool(self.amp)  # as boolean
    self.scaler = torch.cuda.amp.GradScaler(enabled=self.amp)
    if world_size > 1:
        self.model = nn.parallel.DistributedDataParallel(self.model, device_ids=[RANK], find_unused_parameters=True)

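The second is to set the environment variable TORCH_DISTRIBUTED_DEBUG to INFO or DETAIL, which makes the error report which specific parameters did not receive a gradient. The error output above does not include the hint suggesting this, because the variable was already set for that run; with it set, you can see which parameters received no gradient.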

TORCH_DISTRIBUTED_DEBUG=DETAIL python -m torch.distributed.run --nproc_per_node=3 main.py
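The resulting report is one very long line, so it can help to group the names by submodule. The following is a minimal sketch that assumes the log line has the same shape as the "Parameters which did not receive grad for rank" line shown earlier; `unused_params_by_module` and its `depth` argument are hypothetical names introduced here, not part of PyTorch.

```python
from collections import defaultdict

MARKER = "Parameters which did not receive grad for rank"


def unused_params_by_module(log_text, depth=3):
    """Group names from DDP's unused-parameter report by their first `depth` name parts."""
    groups = defaultdict(list)
    for line in log_text.splitlines():
        if MARKER not in line:
            continue
        # The text after the marker looks like " 0: name_a, name_b, ..."
        _, _, names = line.split(MARKER, 1)[1].partition(":")
        for name in names.split(","):
            name = name.strip()
            if name:
                key = ".".join(name.split(".")[:depth])
                groups[key].append(name)
    return dict(groups)


# Shortened sample in the same format as the log above.
sample = (
    "[rank0]: Parameters which did not receive grad for rank 0: "
    "model.28.dec_bbox_head.5.layers.2.bias, model.28.dec_bbox_head.5.layers.2.weight, "
    "model.28.enc_bbox_head.layers.0.weight, model.28.denoising_class_embed.weight"
)
for module, names in unused_params_by_module(sample).items():
    print(module, len(names))
```

Applied to the full log, this reduces the list to three groups under model.28: dec_bbox_head, enc_bbox_head and denoising_class_embed.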

Alternatively, the following loop, run after backward(), also shows which parameters received no gradient:

for name, param in self.model.named_parameters():
    if param.grad is None:
        print("The None grad model is:")
        print(name)

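When writing the __init__ method of an nn.Module, if a layer is defined as self.xx but its output is never used to compute the loss, or the layer is never called at all, DDP raises this error. Check the model's __init__ and forward carefully: any layer assigned to self in __init__ that is not used in forward should be commented out. (Either use it or delete it.)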
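To make the failure mode concrete, here is a minimal single-process sketch. It is not taken from the yolov10 code: `TinyNet` and `params_without_grad` are hypothetical names, and no DDP wrapper is involved. It only shows that a layer defined in __init__ but skipped in forward ends up with grad set to None after backward(), which is exactly the kind of parameter DDP complains about.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Toy model with one layer that never contributes to the loss."""

    def __init__(self):
        super().__init__()
        self.used = nn.Linear(4, 2)
        self.unused = nn.Linear(4, 2)  # defined here, never called in forward()

    def forward(self, x):
        return self.used(x)


def params_without_grad(model):
    """Names of trainable parameters whose .grad is still None after backward()."""
    return [n for n, p in model.named_parameters() if p.requires_grad and p.grad is None]


model = TinyNet()
loss = model(torch.randn(8, 4)).sum()
loss.backward()

missing = params_without_grad(model)
print(missing)  # the two parameters of self.unused
```

Deleting self.unused, or actually using its output in the loss, makes the list empty.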
