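Background

This code uses the Scrapy framework to crawl Sogou's regular web search results for a given keyword and saves them to a CSV file in the same directory. It does not crawl WeChat official account articles, but the principle for getting around the CAPTCHA is the same. If you spot any mistakes, corrections are welcome.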

URL structure

https://www.sogou.com/web?query={keyword}&page={n}
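As a small illustration of the URL pattern above, here is a minimal sketch that builds the search URL for any keyword and page. The helper name `build_search_url` is my own and not part of the original project; `urlencode` takes care of percent-encoding non-ASCII keywords.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.sogou.com/web"


def build_search_url(keyword: str, page: int = 1) -> str:
    """Return the Sogou web-search URL for a keyword and a 1-based page number."""
    # urlencode percent-encodes the keyword (as UTF-8) and joins the pairs with "&"
    return f"{BASE_URL}?{urlencode({'query': keyword, 'page': page})}"
```

For example, `build_search_url("python", 2)` gives the page-2 URL for the keyword used throughout this post.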

Start crawling

I'll skip the routine Scrapy setup and go straight to the code.

    import scrapy


    class SougouSearchSpider(scrapy.Spider):
        name = 'sogou_search'
        allowed_domains = ['www.sogou.com']
        start_urls = ['https://www.sogou.com/web?query=python&page=1']

        def start_requests(self):
            # Request every start URL and hand the response to parse()
            for url in self.start_urls:
                yield scrapy.Request(url=url, callback=self.parse)

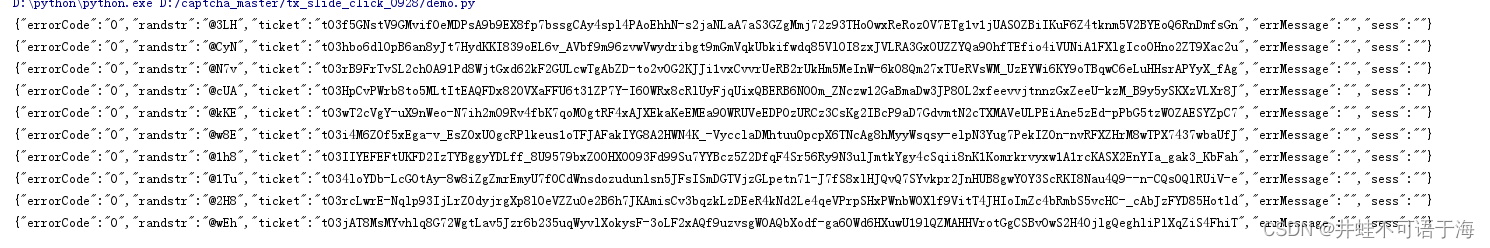

After running it, the crawl stopped after only 3 pages. The log showed:

2020-06-11 16:05:15 [scrapy.downloadermiddlewares.redirect] DEBUG: Redirecting (302) to <GET http://www.sogou.com/ant

The request for page 4 was 302-redirected to the antispider CAPTCHA page.
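To make the symptom easy to spot in code, here is a minimal sketch of a check for this redirect. It assumes the redirect target contains the word "antispider", as described above; the function name `is_antispider_redirect` is hypothetical.

```python
from typing import Optional


def is_antispider_redirect(status: int, location: Optional[str]) -> bool:
    """Return True when a response looks like Sogou's anti-crawler redirect."""
    # Only a 302 that carries a Location header can be the redirect we care about
    if status != 302 or not location:
        return False
    # Assumption: the CAPTCHA page URL contains "antispider"
    return "antispider" in location
```

In a Scrapy callback this could be fed with `response.status` and the decoded `Location` header, provided redirects are disabled and 302 responses are allowed through to the spider.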

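At this point, the usual countermeasures are:

1. Rotate IPs dynamically
2. Spoof the request headers
3. Crack the CAPTCHA head-on

Let's take them one at a time, starting with IP rotation. I used ProxyPool, a free proxy IP pool backed by Redis (available on GitHub). It works nicely and the code is very concise.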

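    import requests

    proxypool_url = 'http://127.0.0.1:5555/random'


    def get_random_proxy():
        """Return one proxy address from the local ProxyPool service, or None on failure."""
        try:
            # The request must sit inside the try block, otherwise a connection failure is never caught
            response = requests.get(proxypool_url)
            if response.status_code == 200:
                return response.text.strip()
        except requests.exceptions.ConnectionError:
            return None
        return None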

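Unfortunately, rotating IPs alone isn't enough: requests still get 302-redirected to the antispider page. Next, let's spoof the headers. First, look at the real request headers by running a search manually and inspecting it with F12 (the browser's developer tools).

The User-Agent can be generated randomly with fake_useragent (available on GitHub). The hard part is the cookies.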

ssuid=6821261448; IPLOC=CN3301; SUID=6E4A782A1810990A000000005D1B0530; SUV=001E174F2A784A5C5D356F6A8DE34468; wuid=AAGgaAPZKAAAAAqLFD1NdAgAGwY=; CXID=76D40644BC123D9C02A653E240879D21; ABTEST=1|1590639095|v17; browerV=3; osV=1; pgv_pvi=4077461504; ad=TWMV9lllll2WYZuMlllllVEYsfUlllllKgo1fZllll9lllllxllll5@@@@@@@@@@; pgv_si=s7053197312; clientId=1B608ECCC1D96A9828CC801DC0116B61; SNUID=8BAB9BC9E2E6452717FC02C7E3A7749E; ld=VZllllllll2W88XflllllVEPcwylllll3XPwWlllllUllllljZlll5@@@@@@@@@@; sst0=711; LSTMV=190%2C728; LCLKINT=2808800; sct=36

Visit a few more pages and watch how the values change. It turns out that only two of them matter:

SUV=001E174F2A784A5C5D356F6A8DE34468; SNUID=8BAB9BC9E2E6452717FC02C7E3A7749E;

So I sent requests carrying only these two cookies. I got 2 more pages than before, but soon ran into antispider again.
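For reference, here is a minimal sketch of how the two important cookies could be picked out of a raw Cookie header string like the one copied from the browser. The helper name `pick_key_cookies` is my own; the cookie names SUV and SNUID come from the observation above.

```python
KEY_COOKIES = ("SUV", "SNUID")


def pick_key_cookies(cookie_header: str, keep=KEY_COOKIES) -> dict:
    """Parse a raw Cookie header string and keep only the named cookies."""
    picked = {}
    for pair in cookie_header.split(";"):
        # Split on the first "=" only, because cookie values may contain "=" themselves
        name, sep, value = pair.strip().partition("=")
        if sep and name in keep:
            picked[name] = value
    return picked
```

The resulting dict can be passed directly as the `cookies` argument of `requests.get` or `scrapy.Request`.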

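Then I wondered: what if I switch to a fresh set of cookies for every page I visit?

The clever trick

It suddenly occurred to me that Sogou is a big family of sites. If I visit another Sogou page, such as Sogou Video, I should be able to get cookies there too.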

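    def get_new_cookies():
        # Sogou Video URL
        url = 'https://v.sogou.com/v?ie=utf8&query=&p=40030600'
        # Note: only the "http" scheme is mapped here, so requests does not route this https:// URL through the proxy
        proxies = {"http": "http://" + get_random_proxy()}
        # ua is a random User-Agent string generated with fake_useragent
        headers = {'User-Agent': ua}
        # Redirects are not followed; the cookies are read from the first response
        rst = requests.get(url=url, headers=headers, allow_redirects=False, proxies=proxies)
        cookies = rst.cookies.get_dict()
        return cookies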
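One detail worth knowing: requests picks a proxy by URL scheme, so a mapping with only an "http" key is not applied to an https:// URL. Below is a minimal sketch of a helper that maps both schemes and also copes with the pool returning nothing. The name `build_proxies` is hypothetical, and whether a given free proxy can actually tunnel HTTPS traffic is something you would have to test.

```python
from typing import Optional


def build_proxies(proxy_address: Optional[str]) -> Optional[dict]:
    """Turn a 'host:port' string into a requests-style proxies mapping."""
    if not proxy_address:
        # No proxy available: passing None to requests means a direct connection
        return None
    proxy_url = "http://" + proxy_address
    # Map both schemes so that https:// URLs are also sent through the proxy
    return {"http": proxy_url, "https": proxy_url}
```

It would be used as `requests.get(url, proxies=build_proxies(get_random_proxy()))`.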

Sure enough, I got the cookies I wanted:

    {'IPLOC': 'CN3301',
     'SNUID': '13320250797FDDB3968937127A18F658',
     'SUV': '00390FEA2A7848695EE1EE6630A8C887',
     'JSESSIONID': 'aaakft_AlKG3p7g_ZIIkx'}

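I printed the result several times, and the cookie values were different each time. That settles it; time to put this into Scrapy.

Mission accomplished

I tried crawling one page every 3 seconds and ran it for two hours without triggering antispider!

That met my needs, so I never went on to crack the CAPTCHA. Hooking up a third-party CAPTCHA-solving service is easy enough, so I won't go into it here. If this is too slow for you, you can shorten the interval.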
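A note on the interval: the full spider pauses with `time.sleep()` inside `parse()`, which blocks Scrapy's event loop while it waits. As an alternative, here is a sketch of the same pacing expressed through Scrapy's built-in settings. The value 3 mirrors the "one page every 3 seconds" experiment above; treat the numbers as a starting point, not a tested configuration.

```python
# settings.py (sketch): let Scrapy pace the requests instead of time.sleep()
DOWNLOAD_DELAY = 3                   # base delay in seconds between requests to the same site
RANDOMIZE_DOWNLOAD_DELAY = True      # actual wait is a random 0.5x to 1.5x of DOWNLOAD_DELAY
CONCURRENT_REQUESTS_PER_DOMAIN = 1   # fetch one page at a time
```

With these in place, the `time.sleep(...)` call in the spider could be dropped.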

The full code is as follows

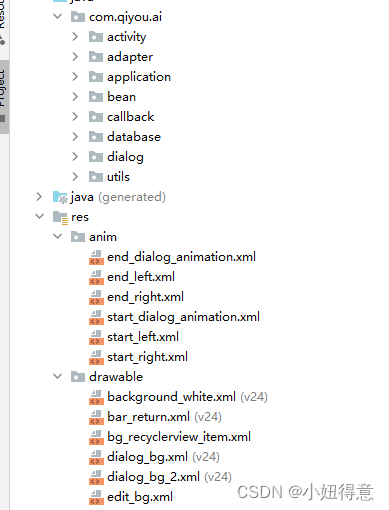

sougou_search.py

    # coding=utf-8
    import random
    import time

    import scrapy

    from IP.free_ip import get_random_proxy
    from IP.get_cookies import get_new_cookies, get_new_headers
    from sougou_search_spider.items import SougouSearchSpiderItem


    class SougouSearchSpider(scrapy.Spider):
        name = 'sogou_search'
        allowed_domains = ['www.sogou.com']
        start_urls = ['https://www.sogou.com/web?query=python']

        def start_requests(self):
            headers = get_new_headers()
            for url in self.start_urls:
                # Get a proxy IP
                proxy = 'http://' + str(get_random_proxy())
                # Scrapy's HttpProxyMiddleware reads the proxy from meta['proxy'] (not 'http_proxy')
                yield scrapy.Request(url=url, callback=self.parse, headers=headers, meta={'proxy': proxy})

        def parse(self, response):
            headers_new = get_new_headers()
            cookies_new = get_new_cookies()
            # Get the current page number
            current_page = int(response.xpath('//div[@id="pagebar_container"]/span/text()').extract_first())
            # Parse the current page
            for i, a in enumerate(response.xpath('//div[contains(@class,"vrwrap")]/h3[@class="vrTitle"]/a')):
                # Get the title, removing spaces and newlines
                title = ''.join(a.xpath('./em/text() | ./text()').extract()).replace(' ', '').replace('\n', '')
                if title:
                    item = SougouSearchSpiderItem()
                    # Get the link (either a direct link or a /link redirect), page number, rank, and title
                    href = a.xpath('@href').extract_first()
                    if href.startswith('/link'):
                        # Prefix the scheme and host so the redirect link is a complete URL
                        item['visit_url'] = 'https://www.sogou.com' + href
                    else:
                        item['visit_url'] = href
                    item['page'] = current_page
                    item['rank'] = i + 1
                    item['title'] = title
                    yield item
            # Throttle the crawl
            time.sleep(random.randint(8, 10))
            # Get the "next page" link
            p = response.xpath('//div[@id="pagebar_container"]/a[@id="sogou_next"]')
            if p:
                p_url = 'https://www.sogou.com/web' + str(p.xpath('@href').extract_first())
                proxy = 'http://' + str(get_random_proxy())
                yield scrapy.Request(url=p_url, callback=self.parse, headers=headers_new,
                                     cookies=cookies_new, meta={'proxy': proxy})

get_cookies.py

    # coding=utf-8
    import requests
    from fake_useragent import UserAgent

    from IP.free_ip import get_random_proxy

    # One random User-Agent string, chosen once when the module is imported
    ua = UserAgent().random


    def get_new_headers():
        headers = {
            "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",
            "Accept-Encoding": "gzip, deflate, br",
            "Accept-Language": "zh-CN,zh;q=0.9,en;q=0.8",
            "User-Agent": ua}
        return headers


    def get_new_cookies():
        # Sogou Video URL
        url = 'https://v.sogou.com/v?ie=utf8&query=&p=40030600'
        proxies = {"http": "http://" + get_random_proxy()}
        headers = {'User-Agent': ua}
        rst = requests.get(url=url, headers=headers, allow_redirects=False, proxies=proxies)
        cookies = rst.cookies.get_dict()
        return cookies


    if __name__ == '__main__':
        print(get_new_cookies())

free_ip.py

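    # coding=utf-8
    import requests

    proxypool_url = 'http://127.0.0.1:5555/random'


    def get_random_proxy():
        """Return one proxy address from the local ProxyPool service, or None on failure."""
        try:
            # The request must sit inside the try block, otherwise a connection failure is never caught
            response = requests.get(proxypool_url)
            if response.status_code == 200:
                return response.text.strip()
        except requests.exceptions.ConnectionError:
            return None
        return None


    if __name__ == '__main__':
        print(get_random_proxy())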

settings.py

    BOT_NAME = 'sougou_search_spider'

    SPIDER_MODULES = ['sougou_search_spider.spiders']
    NEWSPIDER_MODULE = 'sougou_search_spider.spiders'

    REDIRECT_ENABLED = False
    HTTPERROR_ALLOWED_CODES = [302]

    ROBOTSTXT_OBEY = False
    COOKIES_ENABLED = True

    ITEM_PIPELINES = {
        'sougou_search_spider.pipelines.CsvSougouSearchSpiderPipeline': 300,
    }
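The settings reference a `CsvSougouSearchSpiderPipeline`, but the pipeline itself is not listed in this post. Here is a minimal sketch of what such a pipeline might look like, assuming it simply writes the four fields set by the spider (`page`, `rank`, `title`, `visit_url`) to a CSV file. The output file name and the `utf-8-sig` encoding are my assumptions, not the original implementation.

```python
import csv


class CsvSougouSearchSpiderPipeline:
    """Write each scraped item as one row of a CSV file (illustrative sketch)."""

    # Column names match the keys the spider sets on each item
    fields = ["page", "rank", "title", "visit_url"]

    def __init__(self, path="sogou_search.csv"):  # file name is an assumption
        self.path = path

    def open_spider(self, spider):
        # utf-8-sig adds a BOM so spreadsheet tools detect the encoding of Chinese titles
        self.file = open(self.path, "w", newline="", encoding="utf-8-sig")
        self.writer = csv.DictWriter(self.file, fieldnames=self.fields)
        self.writer.writeheader()

    def process_item(self, item, spider):
        # Missing fields are written as empty strings
        self.writer.writerow({name: item.get(name, "") for name in self.fields})
        return item

    def close_spider(self, spider):
        self.file.close()
```

Scrapy calls `open_spider` and `close_spider` once per crawl and `process_item` once per yielded item, so the file stays open for the whole run.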
