Preface

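This article shows how to scrape player information for the NBA's Los Angeles Lakers from Hupu (nba.hupu.com) and save it to an Excel file.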

I. Steps

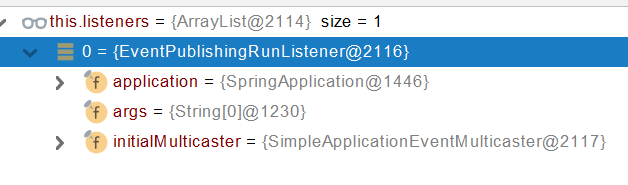

1.1 Import the libraries

```python
import xlwt                    # writes legacy Excel .xls files
import requests                # downloads the web page
from bs4 import BeautifulSoup  # parses the HTML
```

1.2 The code

```python
def main():
    url = 'https://nba.hupu.com/players/lakers'
    # Fetch and parse the data
    datalist = get_data(url)
    # Save the rows to an Excel file
    saveData(datalist)
```

```python
def get_data(url):
    datalist = []
    headers = {
        'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56'
    }
    # Pass headers by keyword: the second positional argument of requests.get() is params
    html = requests.get(url, headers=headers).text
    bs = BeautifulSoup(html, 'html.parser')
    # One list per table row, holding the text of each <td> cell
    for item in bs.find_all('tr'):
        data = []
        for items in item.find_all('td'):
            data.append(items.string)
        datalist.append(data)
    return datalist
```
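Passing `headers` by keyword matters because the second positional parameter of `requests.get()` is `params`, so `requests.get(url, headers)` encodes the dictionary into the query string instead of sending it as HTTP headers. The sketch below shows the difference offline by preparing two requests without sending them; the shortened user-agent string is only an example.

```python
import requests

URL = "https://nba.hupu.com/players/lakers"
# Shortened example user-agent, for illustration only
HEADERS = {"user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"}

# What requests.get(URL, HEADERS) does: the dict is treated as query parameters
bad = requests.Request("GET", URL, params=HEADERS).prepare()
# What requests.get(URL, headers=HEADERS) does: the dict becomes real HTTP headers
good = requests.Request("GET", URL, headers=HEADERS).prepare()

print(bad.url)                       # the user-agent ends up after "?"
print("User-Agent" in bad.headers)   # False
print(good.headers["User-Agent"])    # the example user-agent string
```

With the positional form, the custom user-agent never reaches the server as a header, so the site sees requests' default user-agent instead.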

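```python
def saveData(datalist):
    workbook = xlwt.Workbook(encoding='utf-8')
    worksheet = workbook.add_sheet('sheet1')
    # Row 0: titles from the first table row, skipping its first two cells and its last cell
    for i in range(0, len(datalist[0]) - 3):
        worksheet.write(0, i, datalist[0][i + 2])
    # Rows 1..n: five columns per player, again starting from the third cell
    for k in range(0, len(datalist) - 1):
        for j in range(0, 5):
            worksheet.write(k + 1, j, datalist[k + 1][j + 2])
    # xlwt writes the .xls format, so the file name must end in .xls
    workbook.save('球星数据.xls')
```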
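The index offsets in `saveData()` are easy to misread. The hypothetical helper `to_cells` below is not part of the original code and does not use xlwt; it only lists the `(row, col, value)` writes that `saveData()` would make, so the arithmetic can be checked on made-up data. Like the original, it assumes every player row has at least seven cells.

```python
def to_cells(datalist, width=5):
    """Hypothetical helper: list the (row, col, value) writes saveData() performs."""
    cells = []
    header = datalist[0]
    # Row 0: header cells 2 .. len-2 (skips the first two cells and the last one)
    for i in range(len(header) - 3):
        cells.append((0, i, header[i + 2]))
    # Rows 1..n: `width` data cells per player, starting at the third cell
    for k in range(len(datalist) - 1):
        for j in range(width):
            cells.append((k + 1, j, datalist[k + 1][j + 2]))
    return cells


rows = [[f"h{i}" for i in range(8)], [f"a{i}" for i in range(8)]]
for cell in to_cells(rows):
    print(cell)
```

For two eight-cell rows, both the header row and the player row contribute their cells 2 through 6, giving ten writes in total.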

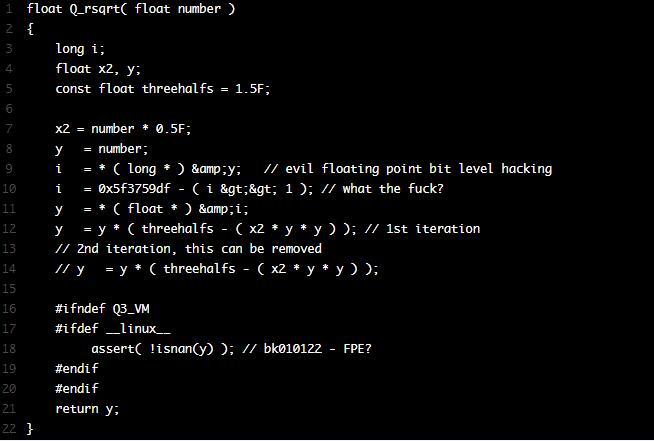

1.3 Complete code

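```python
import xlwt                    # writes legacy Excel .xls files
import requests                # downloads the web page
from bs4 import BeautifulSoup  # parses the HTML


def main():
    url = 'https://nba.hupu.com/players/lakers'
    # Fetch and parse the data, then save it to Excel
    datalist = get_data(url)
    saveData(datalist)


def get_data(url):
    datalist = []
    headers = {
        'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56'
    }
    # Pass headers by keyword: the second positional argument of requests.get() is params
    html = requests.get(url, headers=headers).text
    bs = BeautifulSoup(html, 'html.parser')
    # One list per table row, holding the text of each <td> cell
    for item in bs.find_all('tr'):
        data = []
        for items in item.find_all('td'):
            data.append(items.string)
        datalist.append(data)
    return datalist


def saveData(datalist):
    workbook = xlwt.Workbook(encoding='utf-8')
    worksheet = workbook.add_sheet('sheet1')
    # Row 0: titles from the first table row, skipping its first two cells and its last cell
    for i in range(0, len(datalist[0]) - 3):
        worksheet.write(0, i, datalist[0][i + 2])
    # Rows 1..n: five columns per player, again starting from the third cell
    for k in range(0, len(datalist) - 1):
        for j in range(0, 5):
            worksheet.write(k + 1, j, datalist[k + 1][j + 2])
    # xlwt writes the .xls format, so the file name must end in .xls
    workbook.save('球星数据.xls')


if __name__ == '__main__':
    main()
```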

1.4 Summary

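1. First, import the libraries and define the main function `main()`.

2. Next, fetch the web page and parse it; both happen in the `get_data()` function.

3. Then use BeautifulSoup's `find_all()` method to find the `<tr>` rows and the `<td>` cells inside them.

4. Finally, save the data. The file name passed to `save()` must end in `.xls`, because xlwt writes the legacy Excel `.xls` format, not `.xlsx`.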
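Step 3 relies on `find_all()` and the `.string` attribute. The offline sketch below repeats that walk on a tiny made-up table (not the real Hupu markup); `parse_rows` and `parse_rows_text` are hypothetical names. It shows one caveat: `.string` returns `None` when a cell contains several child nodes, such as text mixed with a tag, while `get_text()` joins all the text inside the cell.

```python
from bs4 import BeautifulSoup

# Made-up stand-in for a roster table, for illustration only
SAMPLE_HTML = """
<table>
  <tr><td>Name</td><td>No.</td></tr>
  <tr><td><a href="#">Player A</a></td><td>1</td></tr>
  <tr><td><b>Player</b> B</td><td>2</td></tr>
</table>
"""


def parse_rows(html):
    """Same walk as get_data(): the .string of every <td> in every <tr>."""
    soup = BeautifulSoup(html, "html.parser")
    return [[td.string for td in tr.find_all("td")] for tr in soup.find_all("tr")]


def parse_rows_text(html):
    """Same walk, but get_text() also handles cells with several child nodes."""
    soup = BeautifulSoup(html, "html.parser")
    return [[td.get_text(" ", strip=True) for td in tr.find_all("td")]
            for tr in soup.find_all("tr")]


print(parse_rows(SAMPLE_HTML))       # third row's first cell is None
print(parse_rows_text(SAMPLE_HTML))  # third row's first cell is "Player B"
```

A cell whose only child is a single tag, like the `<a>` link above, still has a usable `.string`; xlwt writes a `None` value as an empty cell, so such gaps show up as blanks in the spreadsheet.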