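Small clusters: Ring; drawback: once there are many GPUs, latency becomes too high, because the number of steps grows linearly with N;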

Large clusters: Double-Tree; advantage: the hop count is log(N), so latency is lower;
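To make the N vs. log(N) contrast concrete, here is a simple hop-count model. It counts only sequential communication steps and ignores per-step latency, bandwidth and pipelining, so it is an illustration rather than NCCL's real cost model. It assumes the ring does (N-1) reduce-scatter plus (N-1) all-gather steps, and the tree does one reduce pass up and one broadcast pass down a balanced binary tree of depth floor(log2 N). NCCL's Double-Tree uses two such trees, each carrying half of the data, which keeps the depth logarithmic. Both function names are hypothetical.

```python
def ring_allreduce_hops(n: int) -> int:
    """Sequential steps of a ring all-reduce over n GPUs:
    (n - 1) reduce-scatter steps + (n - 1) all-gather steps."""
    return 2 * (n - 1)


def tree_allreduce_hops(n: int) -> int:
    """Levels traversed by a reduce-up / broadcast-down pass on a balanced
    binary tree of n GPUs: twice the depth, where depth = floor(log2 n)."""
    depth = n.bit_length() - 1
    return 2 * depth


if __name__ == "__main__":
    for n in (8, 64, 512, 4096):
        print(f"N={n:5d}  ring={ring_allreduce_hops(n):5d}  tree={tree_allreduce_hops(n):3d}")
```

For N = 512 this model gives 1022 ring steps versus 18 tree steps.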

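Even larger clusters: two levels; first run an AllReduce within each node, then AllReduce the results across machines; advantage: less data has to travel over the slower inter-machine links;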

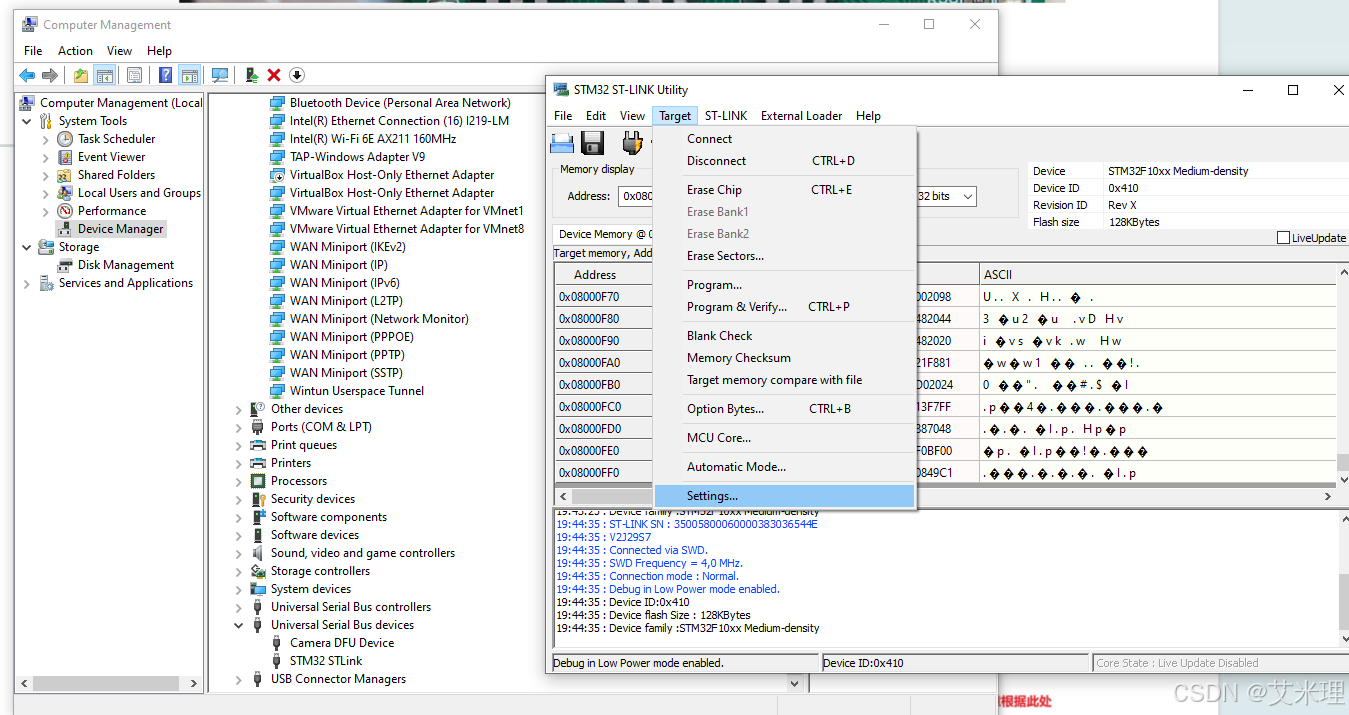

Original text:

Ring Algorithm: This is NCCL's default communication pattern for small to medium-sized clusters. In this scheme, each GPU sends data to its neighbor and receives data from another neighbor, forming a ring-like structure. CUDA facilitates this by handling the GPU-to-GPU data transfers within the ring, using high-bandwidth connections like NVLink within a node or GPUDirect RDMA across nodes. This algorithm is bandwidth-efficient because each GPU participates in both sending and receiving, distributing the workload across the ring. However, as the number of GPUs in the ring increases, the communication latency becomes a limiting factor, necessitating more complex algorithms for larger clusters.
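A minimal Python sketch of the ring all-reduce described above, simulated on plain lists instead of real GPUs. It assumes the standard two-phase formulation (reduce-scatter, then all-gather) and a buffer length divisible by the number of GPUs; `ring_allreduce` is a hypothetical name, not an NCCL API. Each of the 2(N-1) steps moves one chunk of size S/N per GPU, which is why the ring uses bandwidth well while its step count still grows with N.

```python
from typing import List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate ring all-reduce: buffers[r] is GPU r's input; returns every GPU's output."""
    n = len(buffers)
    size = len(buffers[0])
    if any(len(b) != size for b in buffers):
        raise ValueError("all buffers must have the same length")
    if size % n != 0:
        raise ValueError("buffer length must be divisible by the number of GPUs")
    c = size // n
    data = [list(b) for b in buffers]

    def span(k: int) -> slice:
        return slice(k * c, (k + 1) * c)  # chunk k of every buffer

    # Phase 1, reduce-scatter: at each step GPU r sends chunk (r - step) to GPU r+1,
    # which adds it to its own copy. After n-1 steps GPU r owns the full sum of chunk r+1.
    for step in range(n - 1):
        outgoing = [data[r][span((r - step) % n)] for r in range(n)]  # all sends happen at once
        for r in range(n):
            dst, s = (r + 1) % n, span((r - step) % n)
            data[dst][s] = [a + b for a, b in zip(data[dst][s], outgoing[r])]

    # Phase 2, all-gather: each fully reduced chunk is forwarded around the ring,
    # overwriting stale copies, until every GPU holds every reduced chunk.
    for step in range(n - 1):
        outgoing = [data[r][span((r + 1 - step) % n)] for r in range(n)]
        for r in range(n):
            data[(r + 1) % n][span((r + 1 - step) % n)] = outgoing[r]
    return data


if __name__ == "__main__":
    print(ring_allreduce([[1, 2, 3, 4], [10, 20, 30, 40]]))
```

With two GPUs the call above prints `[[11, 22, 33, 44], [11, 22, 33, 44]]`.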

Tree and Hierarchical Algorithms: For larger clusters, NCCL employs tree-based algorithms to reduce the number of communication steps, thus minimizing latency. Tree algorithms allow the reduction and aggregation of data in a multi-level structure, where each GPU communicates with fewer nodes in each step, drastically reducing the total communication time compared to a ring.
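A simplified sketch of tree-based all-reduce on a single binary tree stored in heap order (GPU i's parent is (i - 1) // 2), with one scalar per GPU for brevity. Partial sums flow up one level per step to the root, and the total then flows back down, so the number of sequential steps is about twice the tree depth instead of proportional to N. This is an illustration only, not NCCL's implementation: NCCL's double binary tree splits the data across two trees and pipelines chunks through them. `tree_allreduce` is a hypothetical name.

```python
from typing import List


def tree_allreduce(values: List[float]) -> List[float]:
    """Simulate reduce-then-broadcast over a heap-ordered binary tree.

    GPU 0 is the root; each pass over one tree level stands for one communication step.
    """
    n = len(values)
    if n == 0:
        return []
    depth = n.bit_length() - 1  # number of levels below the root
    acc = list(values)

    # Reduce phase: deepest level first, each node adds its partial sum into its parent.
    for level in range(depth, 0, -1):
        for i in range(2 ** level - 1, min(2 ** (level + 1) - 1, n)):
            acc[(i - 1) // 2] += acc[i]

    # Broadcast phase: the root's total is copied back down one level per step.
    result = [0.0] * n
    result[0] = acc[0]
    for level in range(1, depth + 1):
        for i in range(2 ** level - 1, min(2 ** (level + 1) - 1, n)):
            result[i] = result[(i - 1) // 2]
    return result
```

With 8 GPUs the tree has depth 3, so the reduce and broadcast phases take 3 steps each, compared with 7 + 7 steps for a ring of the same size.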

Hierarchical All-Reduce: For even greater scalability, NCCL introduces a hierarchical approach, which is a two-step process that leverages CUDA's intra- and inter-node communication capabilities. First, NCCL performs intra-node reduction using NVLink or NVSwitch, facilitated by CUDA's ability to rapidly transfer data between GPUs within a single node. During this step, CUDA manages the memory pooling, synchronization, and data transfer across the GPUs, effectively aggregating the data locally.
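A minimal sketch of the two-level scheme, simulated on nested lists. The quoted excerpt only describes the intra-node reduction; the inter-node step follows the summary at the top of this note, and the final intra-node broadcast plus the choice of GPU 0 as each node's leader are assumptions of this sketch (one common variant, not necessarily what NCCL does internally). A plain element-wise sum stands in for whatever ring or tree all-reduce runs between machines. `hierarchical_allreduce` and the `nodes[m][g]` layout are hypothetical.

```python
from typing import List

Vector = List[float]


def hierarchical_allreduce(nodes: List[List[Vector]]) -> List[List[Vector]]:
    """nodes[m][g] is the buffer of GPU g on node m; returns every GPU's result."""

    def add(a: Vector, b: Vector) -> Vector:
        return [x + y for x, y in zip(a, b)]

    # Step 1 (intra-node, NVLink/NVSwitch in a real system):
    # reduce every local GPU's buffer onto the node's leader, assumed to be GPU 0.
    leader_sums: List[Vector] = []
    for gpus in nodes:
        acc = list(gpus[0])
        for buf in gpus[1:]:
            acc = add(acc, buf)
        leader_sums.append(acc)

    # Step 2 (inter-node, slower network): only one buffer per node crosses machines.
    # A plain sum stands in for a ring or tree all-reduce among the leaders.
    global_sum = leader_sums[0]
    for s in leader_sums[1:]:
        global_sum = add(global_sum, s)

    # Step 3 (intra-node): each leader broadcasts the global result to its local GPUs.
    return [[list(global_sum) for _ in gpus] for gpus in nodes]
```

The point of the design is visible in step 2: however many GPUs a node has, it contributes a single buffer to cross-machine traffic.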

12. AI Supercluster: Multi-Node Computing (Advanced CUDA and NCCL)
