| docker-01 | 10.0.0.51 |

| docker-02 | 10.0.0.52 |

| docker-03 | 10.0.0.53 |

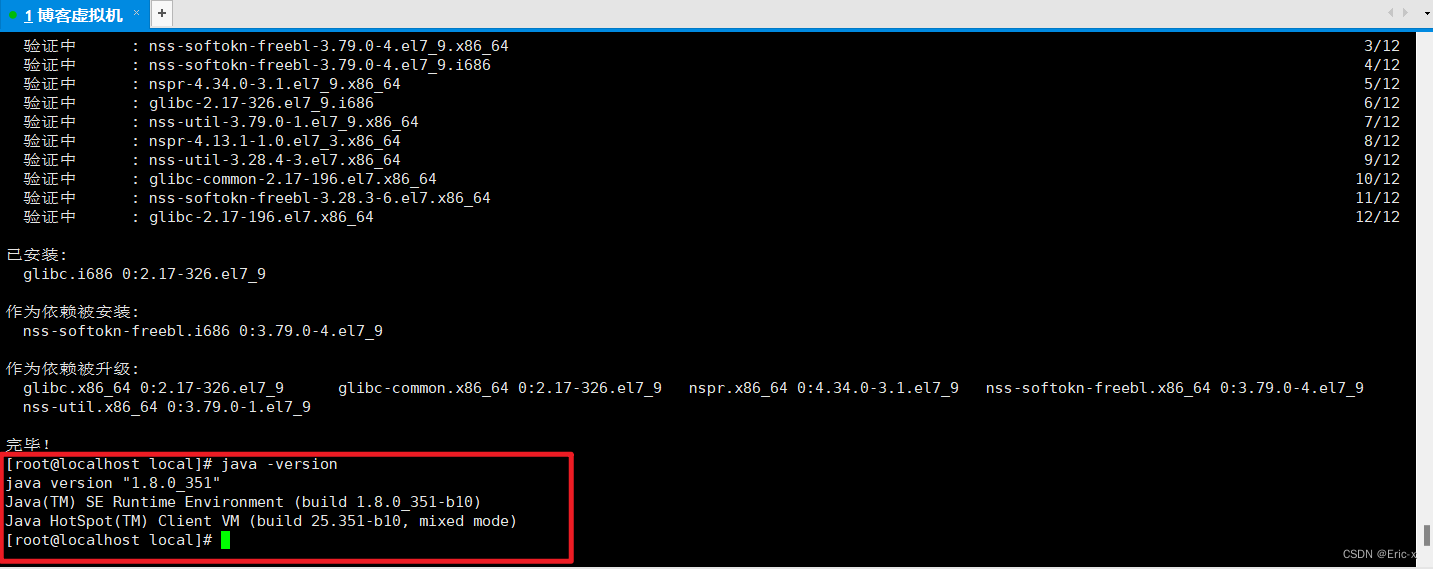

【1】Docker installation

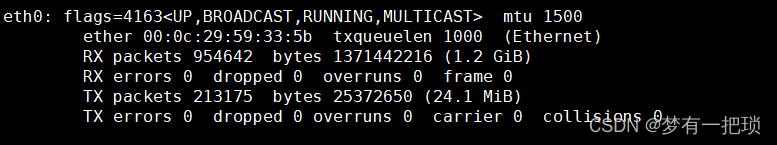

docker-01

[root@docker-01 ~]# vim /etc/yum.conf

[main]

cachedir=/var/cache/yum/$basearch/$releasever

keepcache=1

debuglevel=2

logfile=/var/log/yum.log

exactarch=1

obsoletes=1

gpgcheck=1

plugins=1

installonly_limit=5

bugtracker_url=http://bugs.centos.org/set_project.php?project_id=23&ref=http://bugs.centos.org/bug_report_page.php?category=yum

distroverpkg=centos-release

[root@docker-01 ~]# wget -O /etc/yum.repos.d/docker-ce.repo http://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/centos/docker-ce.repo

[root@docker-01 ~]# sed -i 's+download.docker.com+mirrors.tuna.tsinghua.edu.cn/docker-ce+' /etc/yum.repos.d/docker-ce.repo

[root@docker-01 ~]# yum -y install docker-ce

## Where the downloaded packages are stored (kept because keepcache=1)

[root@docker-01 ~]# mkdir docker-ce

[root@docker-01 ~]# find /var/cache/yum/x86_64/7/ -type f -name "*.rpm" | xargs mv -t docker-ce/

[root@docker-01 ~]# docker version

Client: Docker Engine - Community

Version: 24.0.4

API version: 1.43

Go version: go1.20.5

Git commit: 3713ee1

Built: Fri Jul 7 14:54:21 2023

OS/Arch: linux/amd64

Context: default

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

[root@docker-01 ~]# systemctl start docker

[root@docker-01 ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@docker-01 ~]# tar -zcvf docker-ce.tar.gz docker-ce/

[root@docker-01 ~]# scp -rp docker-ce.tar.gz root@10.0.0.52:/root/

root@10.0.0.52's password:

docker-ce.tar.gz

docker-02, docker-03

[root@docker-02 ~]# tar xf docker-ce.tar.gz

[root@docker-02 ~]# cd docker-ce/

[root@docker-02 docker-ce]# yum localinstall -y *.rpm

[root@docker-02 docker-ce]# systemctl start docker

[root@docker-02 docker-ce]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@docker-02 docker-ce]# docker version

Client: Docker Engine - Community

Version: 24.0.4

API version: 1.43

Go version: go1.20.5

Git commit: 3713ee1

Built: Fri Jul 7 14:54:21 2023

OS/Arch: linux/amd64

Context: default

【2】Volumes and storage

Where container data lives on the local host

[root@docker-01 ~]# docker run -dit --name test-01 alpine:latest

5d65a22c730e215414da92c6c79836cf4dd1402bc4b56e701f38aac84e8ab2bb

[root@docker-01 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5d65a22c730e alpine:latest "/bin/sh" 8 seconds ago Up 7 seconds test-01

c6b42128a328 registry:latest "/entrypoint.sh /etc…" 16 minutes ago Up 11 minutes 0.0.0.0:5000->5000/tcp, :::5000->5000/tcp registry

[root@docker-01 ~]# docker exec -it test-01 /bin/sh

/ # touch test

/ # exit

[root@docker-01 ~]# cd /var/lib/docker/

[root@docker-01 docker]# find ./ -type f -name "test"

./overlay2/84bae6ec2e284fa10c371fcb97e993c1f1b6d342344e8a6c839c6b0e5157d383/diff/test

./overlay2/84bae6ec2e284fa10c371fcb97e993c1f1b6d342344e8a6c839c6b0e5157d383/merged/test

Mounting a local directory into a container

[root@docker-01 ~]# mkdir test

[root@docker-01 ~]# docker run -dit --name test-01 -v ./test:/opt/test/ alpine:latest

fd79df1b5127cfcd0902c4193317a8191c79f2a87a1197400ae3005ce63d7495

[root@docker-01 ~]# echo "111" > test/file

[root@docker-01 ~]# docker exec -it test-01 cat opt/test/file

111

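Using volumes for data persistence

- A volume outlives its container: it is kept until the volume itself is deleted

- When an empty volume is mounted, the contents of the container directory are copied into it; if the volume already holds data, that data overrides the container directory

- Volumes also allow data sharing: several containers can mount the same volume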
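The persistence point above can be checked with a short sequence. This is only a minimal sketch, assuming a running Docker daemon and a pullable alpine:latest image; the volume name demo-vol and the function name volume_persistence_demo are made up for illustration.

```shell
#!/bin/sh
# Hypothetical demo: data written to a named volume survives the container.
# Requires a running Docker daemon; nothing runs until the function is called.
volume_persistence_demo() {
    vol=demo-vol    # hypothetical volume name
    docker volume create "$vol" >/dev/null || return 1
    # The first container writes a file into the volume, then is removed (--rm).
    docker run --rm -v "$vol":/data alpine:latest sh -c 'echo hello > /data/file'
    # A second, brand-new container mounting the same volume still sees the file.
    docker run --rm -v "$vol":/data alpine:latest cat /data/file
    # The data goes away only when the volume itself is removed.
    docker volume rm "$vol" >/dev/null
}

# Usage: volume_persistence_demo
```

If Docker is available, calling the function should print the file content written by the first container, even though that container no longer exists.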

[root@docker-01 ~]# docker volume ls

DRIVER VOLUME NAME

[root@docker-01 ~]# docker volume create test

test

[root@docker-01 ~]# docker volume ls

DRIVER VOLUME NAME

local test

[root@docker-01 ~]# docker run -dit --name test-01 -v test:/opt alpine:latest

1e53144cdc60a7b26f673bd9191ce9c84fac5ff3ea22c7cc938884cb7463d3fb

[root@docker-01 ~]# cd /var/lib/docker/volumes/test/_data/

[root@docker-01 _data]# mkdir ff

[root@docker-01 _data]# docker exec -it test-01 ls opt/

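ff

【3】Building images

dockerfile: building images automatically

FROM # base image to build on

RUN # commands run at build time (e.g. installing services)

CMD # default start command of the container; easily replaced by arguments to docker run

ENTRYPOINT # start command of the container; not replaced by arguments to docker run (only --entrypoint overrides it); when used together with CMD, CMD becomes its arguments

ADD # copy, with automatic extraction of archives

COPY # copy, without extraction

WORKDIR # default working directory

EXPOSE # exposed ports

VOLUME # persistent volume

ENV # environment variables (e.g. SSH password, database password)

LABEL # metadata labels for the image

MAINTAINER # maintainer identity

Building a single-service image: nginx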

[root@docker-01 nginx]# vim dockerfile

FROM alpine:latest

RUN apk add nginx

RUN mv /etc/nginx/http.d/default.conf /etc/nginx/http.d/default.conf_bak

COPY test.conf /etc/nginx/http.d

RUN mkdir /usr/share/nginx/html

RUN echo "TSET" >> /usr/share/nginx/html/index.html

WORKDIR /root

EXPOSE 80

VOLUME /var/log/nginx

CMD ["nginx","-g","daemon off;"]

[root@docker-01 nginx]# docker build -t nginx:v1 ./

[+] Building 1.0s (12/12) FINISHED docker:default

=> [internal] load build definition from dockerfile 0.0s

=> => transferring dockerfile: 345B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/alpine:latest 0.0s

=> [1/7] FROM docker.io/library/alpine:latest 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 31B 0.0s

=> CACHED [2/7] RUN apk add nginx 0.0s

=> CACHED [3/7] RUN mv /etc/nginx/http.d/default.conf /etc/nginx/http.d/default.conf_bak 0.0s

=> CACHED [4/7] COPY test.conf /etc/nginx/http.d 0.0s

=> CACHED [5/7] RUN mkdir /usr/share/nginx/html 0.0s

=> CACHED [6/7] RUN echo "TSET" >> /usr/share/nginx/html/index.html 0.0s

=> [7/7] WORKDIR /root 0.0s

=> exporting to image 1.0s

=> => exporting layers 1.0s

=> => writing image sha256:5245200f87f20a09cc398ccb99149915d04c148942fe8367fe41ff3dcba8c321 0.0s

=> => naming to docker.io/library/nginx:v1

[root@docker-01 nginx]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx v1 5245200f87f2 32 seconds ago 10.7MB

[root@docker-01 nginx]# docker run -dit -p 80:80 --name nginx nginx:v1

079dbb6b8ae89070c42da5526be09430ec68b956b00173562953a07dee0ed820

[root@docker-01 nginx]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

079dbb6b8ae8 nginx:v1 "nginx -g 'daemon of…" 4 seconds ago Up 3 seconds 0.0.0.0:80->80/tcp, :::80->80/tcp nginx

[root@docker-01 nginx]# docker volume ls

DRIVER VOLUME NAME

local 2e2c3c34b376dc4b59b0415d68a1478aee43d0c8c86f05ffab621cf6b4694ba0

[root@docker-01 nginx]# ls /var/lib/docker/volumes/2e2c3c34b376dc4b59b0415d68a1478aee43d0c8c86f05ffab621cf6b4694ba0/_data/

access.log error.log

Building a base image: centos:7

[root@docker-01 centos7]# vim dockerfile

FROM scratch

ADD centos_rootfs.tar.xz /

CMD ["/bin/sh"]

[root@docker-01 centos7]# docker build -t centos:7 ./

[+] Building 16.6s (5/5) FINISHED docker:default

=> [internal] load build definition from dockerfile 0.0s

=> => transferring dockerfile: 93B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load build context 0.7s

=> => transferring context: 73.57MB 0.7s

=> [1/1] ADD centos_rootfs.tar.xz / 14.0s

=> exporting to image 1.9s

=> => exporting layers 1.9s

=> => writing image sha256:88149b5f20e7ac45d64059685021e08274c2459404a0fe815aa3aea66885fe89 0.0s

=> => naming to docker.io/library/centos:7 0.0s

[root@docker-01 centos7]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

centos 7 88149b5f20e7 10 seconds ago 402MB

Viewing the image's build history

[root@docker-01 centos7]# docker history centos:7

IMAGE CREATED CREATED BY SIZE COMMENT

88149b5f20e7 About a minute ago CMD ["/bin/sh"] 0B buildkit.dockerfile.v0

<missing> About a minute ago ADD centos_rootfs.tar.xz / # buildkit 402MB buildkit.dockerfile.v0

Building an nginx + ssh image

[root@docker-01 nginx-ssh]# vim dockerfile

FROM centos7:v1

RUN yum -y install epel-release

RUN yum clean all

RUN yum -y install nginx

RUN yum -y install openssh-server

RUN yum -y install initscripts

RUN /usr/sbin/sshd-keygen

RUN /usr/sbin/sshd

RUN echo '111' | passwd --stdin root

ADD init.sh /init.sh

EXPOSE 80 22

WORKDIR /root

CMD ["/bin/bash","/init.sh"]

[root@docker-01 nginx-ssh]# vim init.sh

#!/bin/bash

nginx

/usr/sbin/sshd -D

## Build

[root@docker-01 nginx-ssh]# docker build -t nginx_ssh:v1 ./

[+] Building 40.6s (16/16) FINISHED docker:default

=> [internal] load build definition from dockerfile 0.0s

=> => transferring dockerfile: 354B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/centos7:v1 0.0s

=> CACHED [ 1/11] FROM docker.io/library/centos7:v1 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 28B 0.0s

=> [ 2/11] RUN yum -y install epel-release 1.2s

=> [ 3/11] RUN yum clean all 0.3s

=> [ 4/11] RUN yum -y install nginx 24.3s

=> [ 5/11] RUN yum -y install openssh-server 4.3s

=> [ 6/11] RUN yum -y install initscripts 4.3s

=> [ 7/11] RUN /usr/sbin/sshd-keygen 0.2s

=> [ 8/11] RUN /usr/sbin/sshd 0.1s

=> [ 9/11] RUN echo '111' | passwd --stdin root 0.1s

=> [10/11] ADD init.sh /init.sh 0.0s

=> [11/11] WORKDIR /root 0.0s

=> exporting to image 5.7s

=> => exporting layers 5.7s

=> => writing image sha256:c002c1a89ce1980238236701efa0f496f25c8f925603fbfa666a4b1beee41228 0.0s

=> => naming to docker.io/library/nginx_ssh:v1

[root@docker-01 nginx-ssh]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx_ssh v1 c002c1a89ce1 55 seconds ago 1.09GB

## Start a container to test and verify

[root@docker-01 nginx-ssh]# docker run -dit -P nginx_ssh:v1

72eb999e39d4227419934fa2adbd3bb8b3be874f01350647015db149aad2d41e

[root@docker-01 nginx-ssh]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

72eb999e39d4 nginx_ssh:v1 "/bin/bash /init.sh" 12 seconds ago Up 11 seconds 0.0.0.0:32769->22/tcp, :::32769->22/tcp, 0.0.0.0:32768->80/tcp, :::32768->80/tcp quirky_swartz

[root@docker-01 nginx-ssh]# curl -I 10.0.0.51:32768

HTTP/1.1 200 OK

Server: nginx/1.20.1

Date: Thu, 03 Aug 2023 11:13:45 GMT

Content-Type: text/html

Content-Length: 4833

Last-Modified: Fri, 16 May 2014 15:12:48 GMT

Connection: keep-alive

ETag: "53762af0-12e1"

Accept-Ranges: bytes

[root@docker-01 nginx-ssh]# ssh root@10.0.0.51:32769

ssh: Could not resolve hostname 10.0.0.51:32769: Name or service not known

[root@docker-01 nginx-ssh]# ssh root@10.0.0.51 -p 32769

The authenticity of host '[10.0.0.51]:32769 ([10.0.0.51]:32769)' can't be established.

ECDSA key fingerprint is af:86:56:c1:7a:91:b4:49:73:7f:93:b4:de:69:b0:a5.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '[10.0.0.51]:32769' (ECDSA) to the list of known hosts.

root@10.0.0.51's password:

[root@72eb999e39d4 ~]# ls

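[root@72eb999e39d4 ~]#

ENTRYPOINT

- With CMD, a command given when starting the container replaces the image's start command

- Dockerfile: CMD ["/bin/bash"]

- docker run -dit -P test:v1 sleep 10

- The container's start command is then: sleep 10

- With ENTRYPOINT, a command given when starting the container is appended to the ENTRYPOINT as its arguments. Dockerfile: ENTRYPOINT ["/bin/bash"]

- docker run -dit -P test:v1 sleep 10

- The container's start command is then: /bin/bash sleep 10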
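The two cases above follow one rule, which can be modeled in a few lines of shell. This is a simplified sketch, not Docker code: effective_command is a hypothetical helper, and it treats each of ENTRYPOINT, CMD, and the docker run arguments as a single plain string.

```shell
#!/bin/sh
# Hypothetical helper (not part of Docker): models how ENTRYPOINT, CMD, and
# the arguments given to `docker run` combine into the container's start command.
#   $1 = ENTRYPOINT (may be empty)
#   $2 = CMD        (may be empty)
#   $3 = arguments placed after the image name in `docker run` (may be empty)
effective_command() {
    entrypoint=$1
    cmd=$2
    run_args=$3
    # Arguments passed to `docker run` replace CMD entirely.
    if [ -n "$run_args" ]; then
        cmd=$run_args
    fi
    # ENTRYPOINT, when set, is kept; CMD (or its replacement) follows as arguments.
    if [ -n "$entrypoint" ] && [ -n "$cmd" ]; then
        echo "$entrypoint $cmd"
    else
        echo "$entrypoint$cmd"
    fi
}

effective_command ""          "/bin/bash" ""          # prints: /bin/bash
effective_command ""          "/bin/bash" "sleep 10"  # prints: sleep 10
effective_command "/bin/bash" ""          "sleep 10"  # prints: /bin/bash sleep 10
```

The third call reproduces the ENTRYPOINT case from the notes: sleep 10 does not replace /bin/bash, it becomes its argument.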

【4】--link

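Without --link, a container cannot ping another container by hostname.

With --link, the linked container's hostname and IP are recorded in the hosts file.

Containers can therefore be reached by hostname instead of IP address: the hostname stays fixed, while a container's IP address can change after a restart.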
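To make the hosts-file mechanism concrete, the sketch below resolves a name from a hosts-format file the way a lookup through /etc/hosts would. The helper lookup_in_hosts is hypothetical, and the sample line (IP, alias, short container ID, container name) is an assumption about what an entry written by --link test-01:nginx looks like, not captured output.

```shell
#!/bin/sh
# Hypothetical helper: print the IP that a hosts-format file maps a name to.
#   $1 = path to a hosts-format file, $2 = name or alias to look up
lookup_in_hosts() {
    awk -v name="$2" '
        /^[ \t]*#/ { next }    # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
    ' "$1"
}

# Sample content modeled on a --link entry (values are illustrative assumptions).
hosts_file=$(mktemp)
printf '127.0.0.1\tlocalhost\n172.17.0.3\tnginx d2ab4cfdb933 test-01\n' > "$hosts_file"

lookup_in_hosts "$hosts_file" test-01   # container name
lookup_in_hosts "$hosts_file" nginx     # alias from --link test-01:nginx
rm -f "$hosts_file"
```

On a real host, the actual entries can be inspected with docker exec test-02 cat /etc/hosts.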

[root@docker-01 nginx-ssh]# docker run -dit --name test-01 alpine:latest

d2ab4cfdb93362b2a3f5bfe05e7a20d007201309792f233cc6c450349c8be747

[root@docker-01 nginx-ssh]# docker run -dit --name test-02 --link test-01:nginx alpine:latest

9c5cc9918eb770772e94ded90e49dfa0857014eac2022790f60da9fde78da337

[root@docker-01 nginx-ssh]# docker exec -it test-02 ping test-01

PING test-01 (172.17.0.3): 56 data bytes

64 bytes from 172.17.0.3: seq=0 ttl=64 time=0.104 ms

64 bytes from 172.17.0.3: seq=1 ttl=64 time=0.049 ms

^C

--- test-01 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.049/0.076/0.104 ms

【5】docker-registry (lightweight private registry)

Starting the registry

[root@docker-01 ~]# docker pull registry

Using default tag: latest

latest: Pulling from library/registry

31e352740f53: Already exists

7f9bcf943fa5: Pull complete

3c98a1678a82: Pull complete

51f7a5bb21d4: Pull complete

3f044f23c427: Pull complete

Digest: sha256:9977826e0d1d0eccc7af97017ae41f2dbe13f2c61e4c886ec28f0fdd8c4078aa

Status: Downloaded newer image for registry:latest

docker.io/library/registry:latest

[root@docker-01 ~]# docker run -dit -p 5000:5000 --name registry --restart=always -v /opt/myregistry:/var/lib/registry registry:latest

ba4e887ad630f68d761266fc568c38a363b7a16a5ed732da0d097b0367c344c7

[root@docker-01 ~]# docker ps -a -l

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

ba4e887ad630 registry:latest "/entrypoint.sh /etc…" 19 seconds ago Up 17 seconds 0.0.0.0:5000->5000/tcp, :::5000->5000/tcp registry

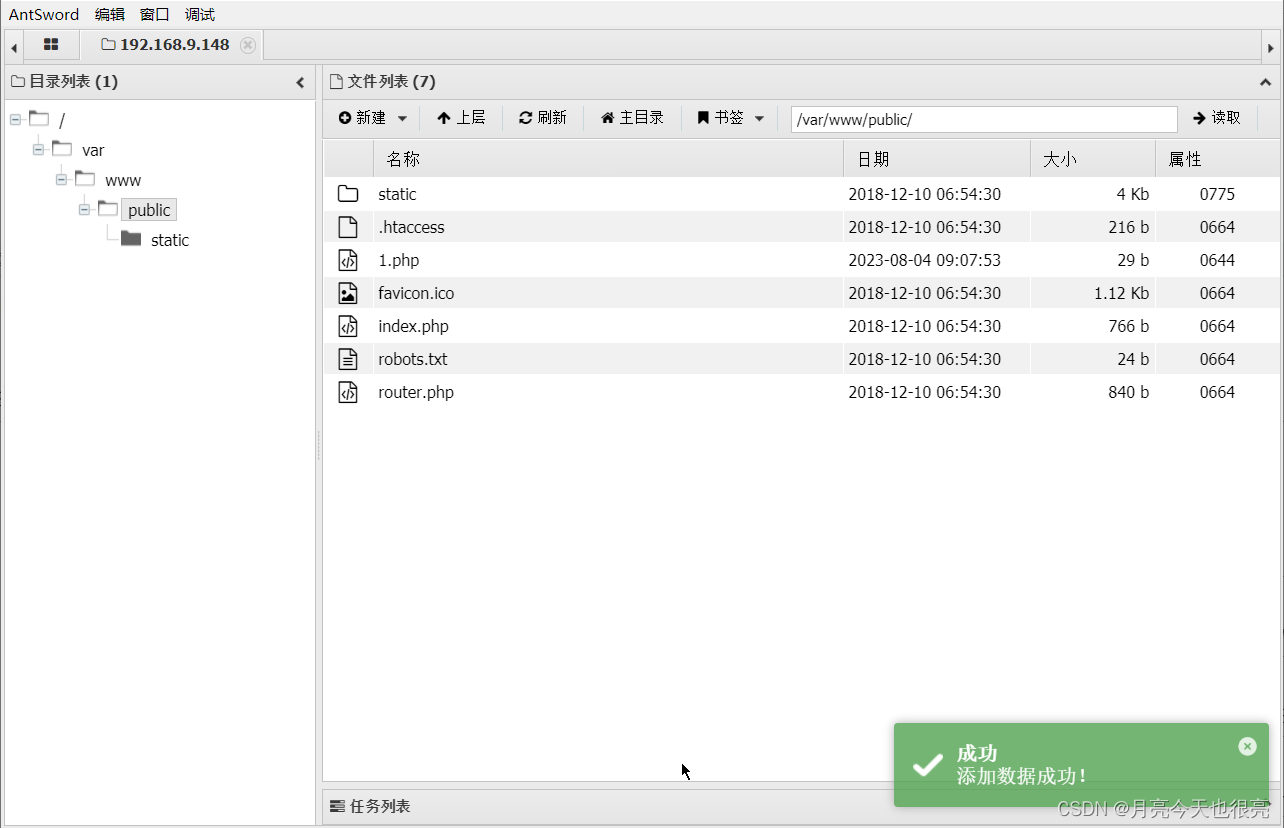

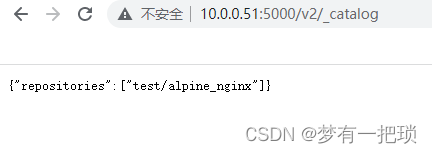

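Pushing an image

- The first push fails: the registry container serves plain HTTP, while the docker client talks HTTPS to registries by default. The fix is to add the registry address to the trusted (insecure-registries) list in the daemon configuration file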
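As a rough illustration of that fix, the helper below prints a minimal daemon.json that trusts the given registry addresses. render_daemon_json is a hypothetical function; it only previews the JSON and deliberately leaves out other keys such as registry-mirrors, so merge its output into an existing file by hand rather than overwriting it.

```shell
#!/bin/sh
# Hypothetical helper: prints a daemon.json body that marks each given
# registry address as insecure (plain HTTP allowed).
render_daemon_json() {
    printf '{\n  "insecure-registries": ['
    sep=''
    for registry in "$@"; do
        printf '%s"%s"' "$sep" "$registry"
        sep=', '
    done
    printf ']\n}\n'
}

# Preview only; the real file is /etc/docker/daemon.json, and docker must be
# restarted after editing it.
render_daemon_json 10.0.0.51:5000
```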

[root@docker-01 ~]# docker tag alpine_nginx:v1 10.0.0.51:5000/test/alpine_nginx:v1

[root@docker-01 ~]# docker push 10.0.0.51:5000/test/alpine_nginx:v1

The push refers to repository [10.0.0.51:5000/test/alpine_nginx]

Get "https://10.0.0.51:5000/v2/": http: server gave HTTP response to HTTPS client

## Add the registry as a trusted address

[root@docker-01 ~]# vim /etc/docker/daemon.json

{

"registry-mirrors": ["http://hub-mirror.c.163.com", "https://docker.mirrors.ustc.edu.cn", "https://registry.docker-cn.com"],

"insecure-registries": ["10.0.0.51:5000"]

}

[root@docker-01 ~]# systemctl daemon-reload

[root@docker-01 ~]# systemctl restart docker.service

[root@docker-01 ~]# systemctl status docker.service

## Push the image again

[root@docker-01 ~]# docker push 10.0.0.51:5000/test/alpine_nginx:v1

The push refers to repository [10.0.0.51:5000/test/alpine_nginx]

ea6e7cf61351: Pushed

22802b4d26c0: Pushed

v1: digest: sha256:0b806a3059535ed9ee2d882b35bc790cabe850036eb6f32073579c059885c513 size: 740

## Registry directory: check which tags of the image exist in the registry

[root@docker-01 ~]# ls /opt/myregistry/docker/registry/v2/repositories/test/alpine_nginx/_manifests/tags/

v1
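Besides listing the directory, the tags can also be read through the Registry HTTP API v2 (tags/list endpoint). The sketch below only builds the URL; registry_tags_url is a hypothetical helper, and plain http is assumed because this registry was started without TLS.

```shell
#!/bin/sh
# Hypothetical helper: builds the Registry HTTP API v2 URL that lists the
# tags of one repository.
registry_tags_url() {
    registry=$1   # e.g. 10.0.0.51:5000
    repo=$2       # e.g. test/alpine_nginx
    echo "http://${registry}/v2/${repo}/tags/list"
}

registry_tags_url 10.0.0.51:5000 test/alpine_nginx
# To query a running registry, pass the URL to curl:
#   curl -s "$(registry_tags_url 10.0.0.51:5000 test/alpine_nginx)"
```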

Pulling an image

[root@docker-01 ~]# docker pull 10.0.0.51:5000/test/alpine_nginx:v1

v1: Pulling from test/alpine_nginx

Digest: sha256:0b806a3059535ed9ee2d882b35bc790cabe850036eb6f32073579c059885c513

Status: Downloaded newer image for 10.0.0.51:5000/test/alpine_nginx:v1

10.0.0.51:5000/test/alpine_nginx:v1

Deleting an image from the registry

[root@docker-01 ~]# docker exec -it registry /bin/sh

## Delete the repo

/ # rm -rf /var/lib/registry/docker/registry/v2/repositories/test/alpine_nginx

## Garbage-collect the blobs to free the space they occupy

/ # registry garbage-collect /etc/docker/registry/config.yml

0 blobs marked, 4 blobs and 0 manifests eligible for deletion

blob eligible for deletion: sha256:0b806a3059535ed9ee2d882b35bc790cabe850036eb6f32073579c059885c513

INFO[0000] Deleting blob: /docker/registry/v2/blobs/sha256/0b/0b806a3059535ed9ee2d882b35bc790cabe850036eb6f32073579c059885c513 go.version=go1.19.9 instance.id=6293eedf-a5eb-4961-a272-cf87d6d96821 service=registry

blob eligible for deletion: sha256:1ce4f7c3383cd71be7eab3bdbf6d981c1bc28ad8cb13bfb0d038ee56b0337279

INFO[0000] Deleting blob: /docker/registry/v2/blobs/sha256/1c/1ce4f7c3383cd71be7eab3bdbf6d981c1bc28ad8cb13bfb0d038ee56b0337279 go.version=go1.19.9 instance.id=6293eedf-a5eb-4961-a272-cf87d6d96821 service=registry

blob eligible for deletion: sha256:53c99a3b3b9e83fffdc609a144ec3dc2b7a2fe73cfc3fca4153061cfda745ff8

INFO[0000] Deleting blob: /docker/registry/v2/blobs/sha256/53/53c99a3b3b9e83fffdc609a144ec3dc2b7a2fe73cfc3fca4153061cfda745ff8 go.version=go1.19.9 instance.id=6293eedf-a5eb-4961-a272-cf87d6d96821 service=registry

blob eligible for deletion: sha256:b8c1726d143fc87168c5a6602f314b687af9e1518fdfb6465cce9791cb6ec91a

INFO[0000] Deleting blob: /docker/registry/v2/blobs/sha256/b8/b8c1726d143fc87168c5a6602f314b687af9e1518fdfb6465cce9791cb6ec91a go.version=go1.19.9 instance.id=6293eedf-a5eb-4961-a272-cf87d6d96821 service=registry

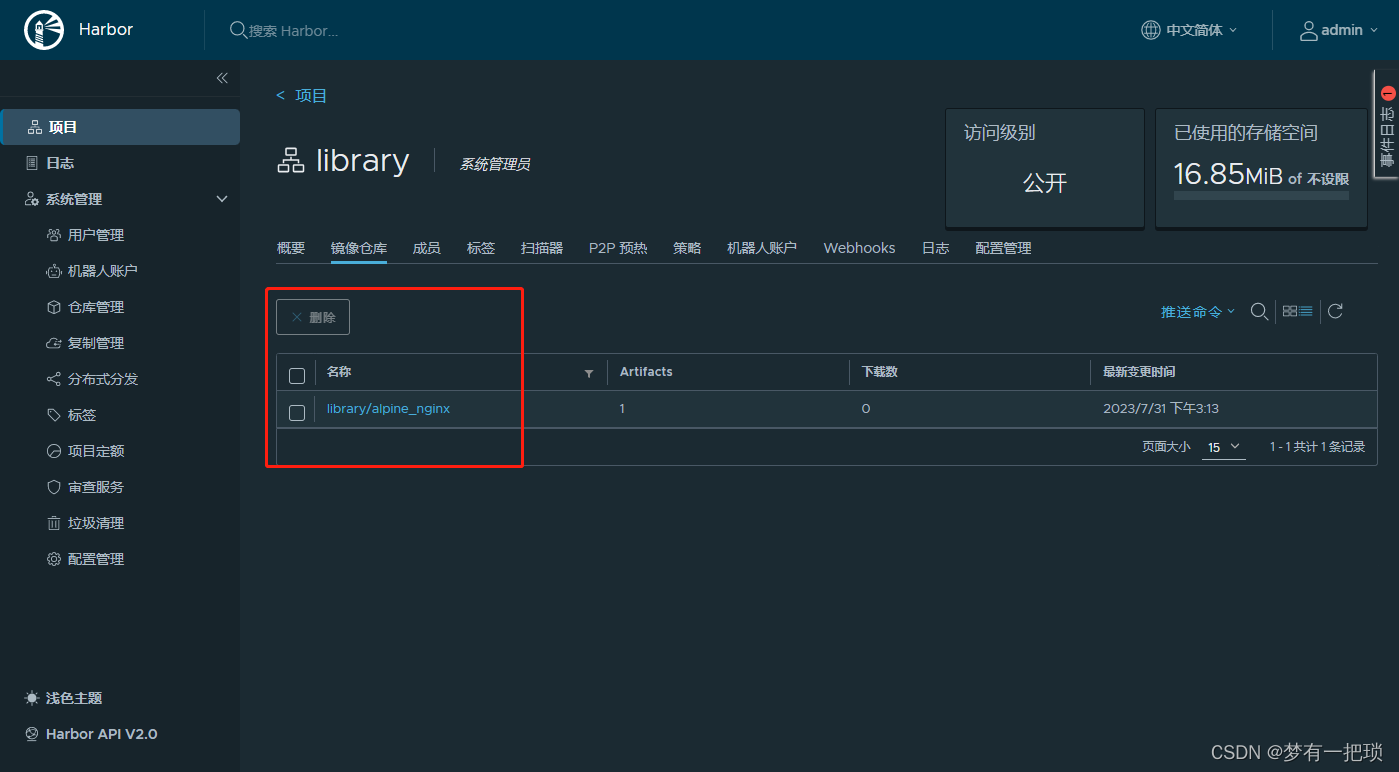

【6】docker-harbor (enterprise-grade private registry)

docker-01

## Install docker-compose

[root@docker-01 ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

[root@docker-01 ~]# yum -y install docker-compose

[root@docker-01 ~]# mv harbor-offline-installer-v2.5.0.tgz /usr/src/

[root@docker-01 ~]# cd /usr/src/

[root@docker-01 src]# tar xf harbor-offline-installer-v2.5.0.tgz

[root@docker-01 src]# cd harbor/

[root@docker-01 harbor]# cp harbor.yml.tmpl harbor.yml

[root@docker-01 harbor]# vim harbor.yml

hostname: 10.0.0.51

# http related config

http:

  # port for http, default is 80. If https enabled, this port will redirect to https port

  port: 80

Comment out the https section, since http is already configured above

# https related config

#https:

  # https port for harbor, default is 443

  # port: 443

  # The path of cert and key files for nginx

  # certificate: /your/certificate/path

  # private_key: /your/private/key/path

.....

harbor_admin_password: admin

## Install Harbor

[root@docker-01 harbor]# ./install.sh

[Step 0]: checking if docker is installed ...

Note: docker version: 24.0.5

[Step 1]: checking docker-compose is installed ...

Note: docker-compose version: 1.18.0

[Step 2]: loading Harbor images ...

[root@docker-01 harbor]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

90b46e74919f goharbor/nginx-photon:v2.5.0 "nginx -g 'daemon of…" 12 seconds ago Up 11 seconds (health: starting) 0.0.0.0:80->8080/tcp, :::80->8080/tcp nginx

0f9d3bf2bd1b goharbor/harbor-jobservice:v2.5.0 "/harbor/entrypoint.…" 12 seconds ago Up 11 seconds (health: starting) harbor-jobservice

89dfacb33a8d goharbor/harbor-core:v2.5.0 "/harbor/entrypoint.…" 12 seconds ago Up 11 seconds (health: starting) harbor-core

dfe33ae77b76 goharbor/harbor-db:v2.5.0 "/docker-entrypoint.…" 13 seconds ago Up 11 seconds (health: starting) harbor-db

a29a64e88a60 goharbor/harbor-portal:v2.5.0 "nginx -g 'daemon of…" 13 seconds ago Up 11 seconds (health: starting) harbor-portal

fc937964ac33 goharbor/redis-photon:v2.5.0 "redis-server /etc/r…" 13 seconds ago Up 11 seconds (health: starting) redis

3ec1b5c820c5 goharbor/registry-photon:v2.5.0 "/home/harbor/entryp…" 13 seconds ago Up 11 seconds (health: starting) registry

e3ecd2b0c3d3 goharbor/harbor-registryctl:v2.5.0 "/home/harbor/start.…" 13 seconds ago Up 12 seconds (health: starting) registryctl

d696cd56240c goharbor/harbor-log:v2.5.0 "/bin/sh -c /usr/loc…" 13 seconds ago Up 12 seconds (health: starting) 127.0.0.1:1514->10514/tcp harbor-log

Managing Harbor with systemctl

[root@docker-01 harbor]# vim /usr/lib/systemd/system/harbor.service

[Unit]

Description=Harbor

After=docker.service systemd-networkd.service systemd-resolved.service

Requires=docker.service

Documentation=http://github.com/vmware/harbor

[Service]

Type=simple

Restart=on-failure

RestartSec=5

ExecStart=/usr/bin/docker-compose -f /usr/src/harbor/docker-compose.yml up

ExecStop=/usr/bin/docker-compose -f /usr/src/harbor/docker-compose.yml down

[Install]

WantedBy=multi-user.target

[root@docker-01 harbor]# systemctl daemon-reload

[root@docker-01 harbor]# systemctl restart harbor.service

docker-02: pushing an image to the Harbor registry

[root@docker-02 ~]# vim /etc/docker/daemon.json

{

"registry-mirrors": ["http://hub-mirror.c.163.com", "https://docker.mirrors.ustc.edu.cn", "https://registry.docker-cn.com"],

"insecure-registries": ["10.0.0.51:5000", "10.0.0.51"]

}

[root@docker-02 ~]# systemctl daemon-reload

[root@docker-02 ~]# systemctl restart docker.service

[root@docker-02 ~]# docker tag alpine:latest 10.0.0.51/library/alpine:latest

[root@docker-02 ~]# docker login 10.0.0.51

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@docker-02 ~]# docker push 10.0.0.51/library/alpine:latest

The push refers to repository [10.0.0.51/library/alpine]

78a822fe2a2d: Pushed

latest: digest: sha256:25fad2a32ad1f6f510e528448ae1ec69a28ef81916a004d3629874104f8a7f70 size: 528

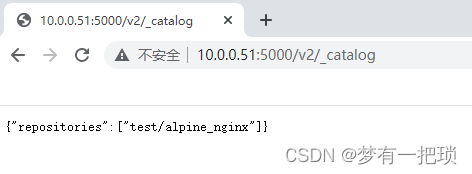

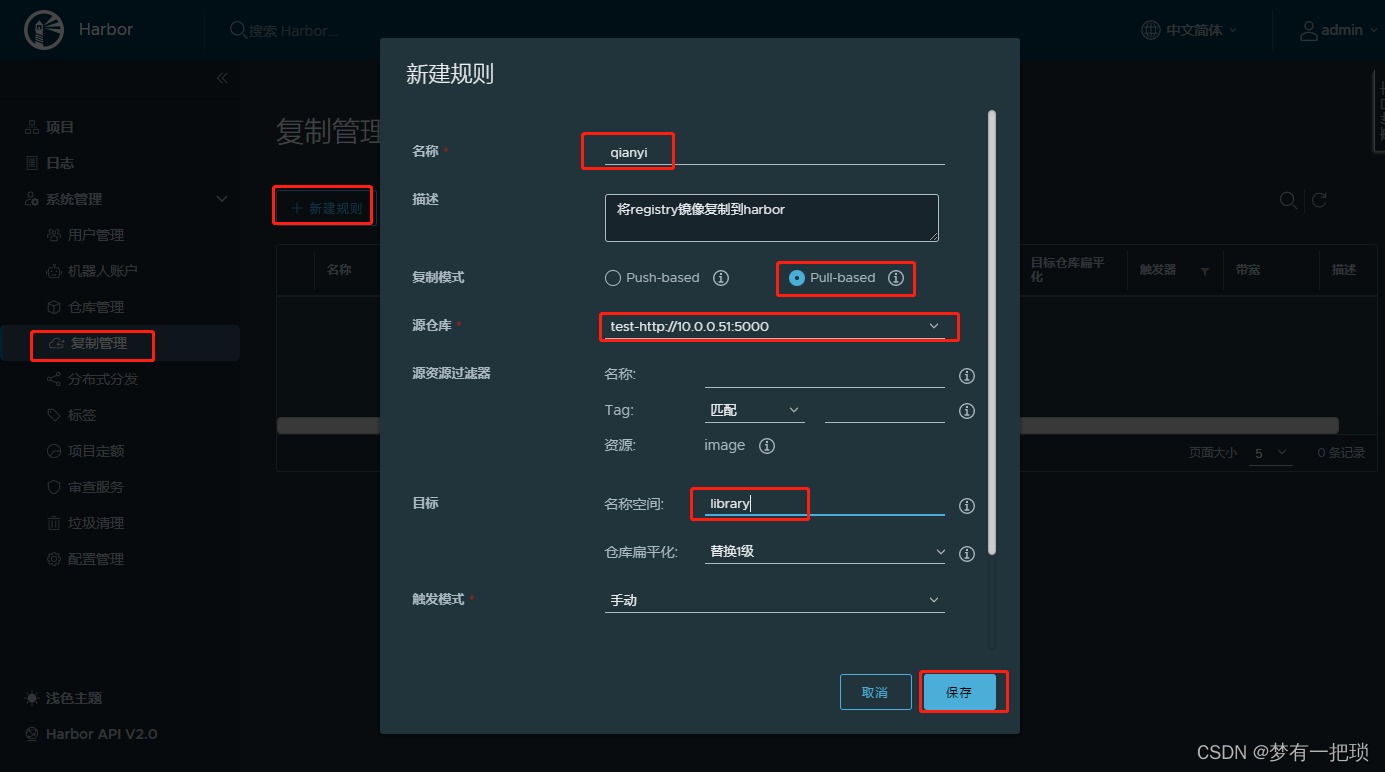

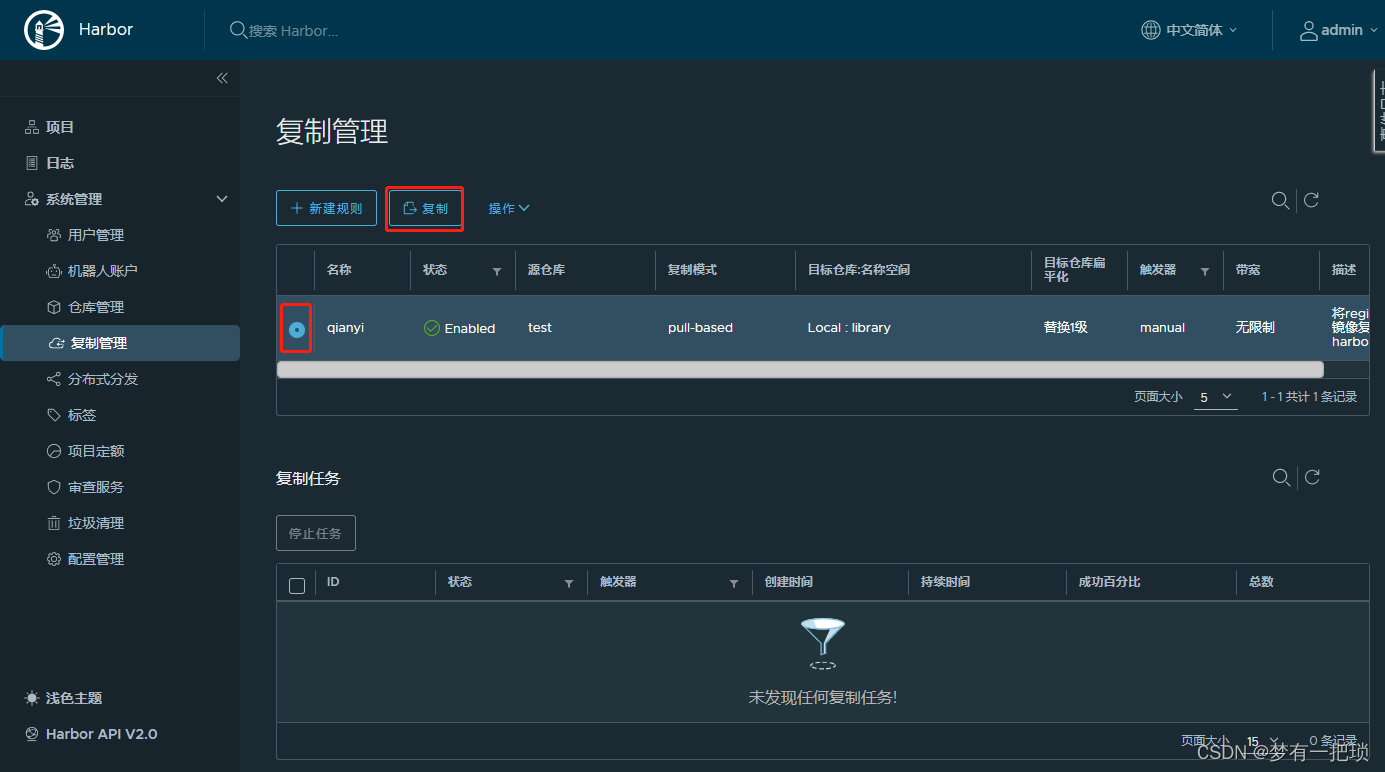

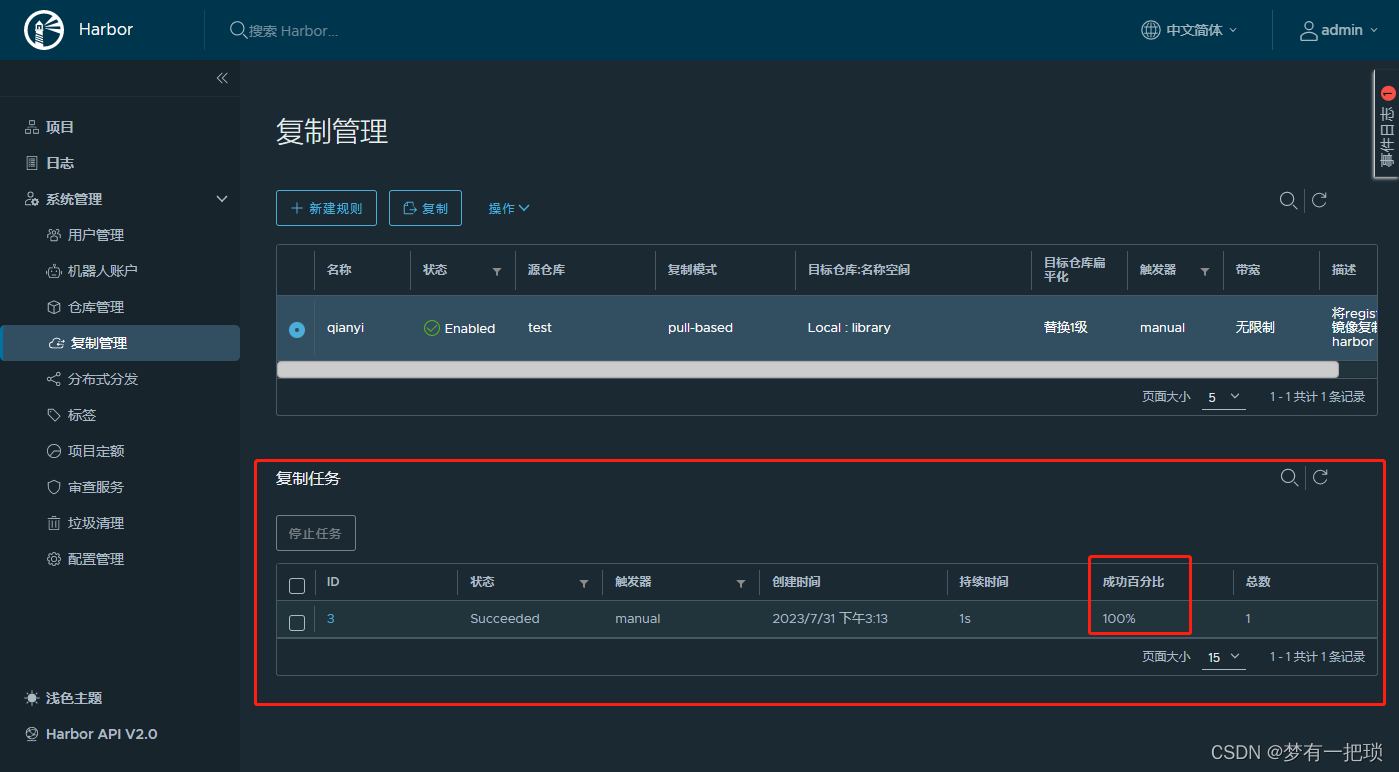

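【7】Migrating images from the registry to Harbor

【8】Docker network configuration and usage

01 - Built-in networks and custom networks

bridge # default network, NAT mode; a container on a bridge network needs port mapping to be reachable from outside

host # uses the host's network; highest performance

container # shares the network of another container; used in K8S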

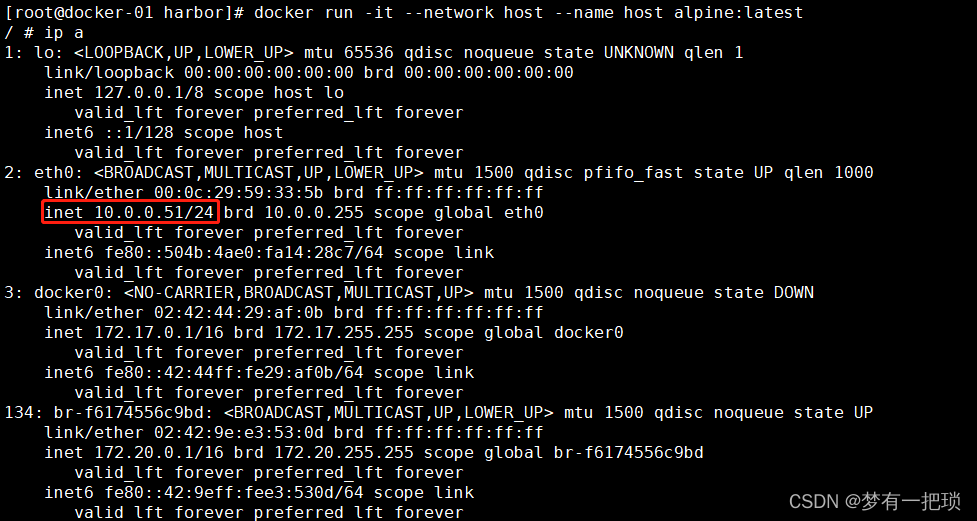

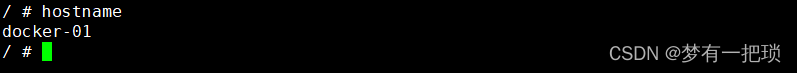

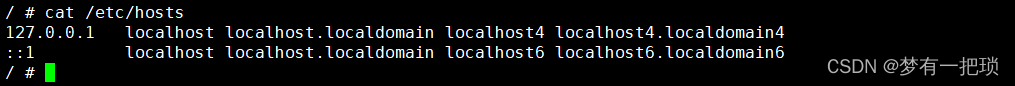

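none # no network; to be configured manually

02 - host network type: uses the host's network, including the host's IP address, hostname, and hosts resolution

- Drawback of sharing the host's network: a port already occupied on the host cannot be used by the container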

[root@docker-01 harbor]# docker run -it --network host --name host alpine:latest

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

    inet 127.0.0.1/8 scope host lo

       valid_lft forever preferred_lft forever

    inet6 ::1/128 scope host

       valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

    link/ether 00:0c:29:59:33:5b brd ff:ff:ff:ff:ff:ff

    inet 10.0.0.51/24 brd 10.0.0.255 scope global eth0

       valid_lft forever preferred_lft forever

    inet6 fe80::504b:4ae0:fa14:28c7/64 scope link

       valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

    link/ether 02:42:44:29:af:0b brd ff:ff:ff:ff:ff:ff

    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

       valid_lft forever preferred_lft forever

    inet6 fe80::42:44ff:fe29:af0b/64 scope link

       valid_lft forever preferred_lft forever

03 - container network type: shares another container's network, hostname, and hosts resolution

[root@docker-01 harbor]# docker run -dit --name test alpine:latest

5aaf372430af13710d4bae966def3d25f67c62f28445fad1b19d1959de1f93cc

[root@docker-01 harbor]# docker run -dit --network container:test --name test-02 alpine:latest

ebd43f2f636bec2f32e7d1ca3e20c36e4f52b0dcb3bb7df86ed1c68c8e6caddf

[root@docker-01 harbor]# docker inspect test | grep -i ipaddr

"SecondaryIPAddresses": null,

"IPAddress": "172.17.0.2",

"IPAddress": "172.17.0.2",

[root@docker-01 harbor]# docker inspect test-02 | grep -i ipaddr

"SecondaryIPAddresses": null,

"IPAddress": "",

[root@docker-01 harbor]# docker inspect test-02 | grep -i hostname

"HostnamePath": "/var/lib/docker/containers/5aaf372430af13710d4bae966def3d25f67c62f28445fad1b19d1959de1f93cc/hostname",

"Hostname": "5aaf372430af",

[root@docker-01 harbor]# docker inspect test | grep -i hostname

"HostnamePath": "/var/lib/docker/containers/5aaf372430af13710d4bae966def3d25f67c62f28445fad1b19d1959de1f93cc/hostname",

"Hostname": "5aaf372430af",

04 - none network type: no network at all

Creating a custom network

[root@docker-01 harbor]# docker network ls

NETWORK ID NAME DRIVER SCOPE

85f75ec95148 bridge bridge local

f6174556c9bd harbor_harbor bridge local

e5afa29cdc9d host host local

6dee573ffc75 none null local

[root@docker-01 harbor]# docker network create -d bridge --subnet 172.18.0.0/16 --gateway 172.18.0.1 test

11c946eafd311ec4370bd5a6112fa234413b44875dd28a4ef147420f34c654c3

[root@docker-01 harbor]# docker network ls

NETWORK ID NAME DRIVER SCOPE

85f75ec95148 bridge bridge local

f6174556c9bd harbor_harbor bridge local

e5afa29cdc9d host host local

6dee573ffc75 none null local

11c946eafd31 test bridge local

[root@docker-01 harbor]# docker run -dit --network test --name test-01 alpine:latest

2afc379d4b918947ebe7307a78eb2ea98d489aa4ca6dbe4902c4804b4b5c1a30

[root@docker-01 harbor]# docker run -dit --network test --name test-02 alpine:latest

413153cdecc6e87aaff2e902a6af57d48e9ad70f2cb135ef4dced2e7e02b70d8

[root@docker-01 harbor]# docker inspect test-01 | grep -i ipaddr

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAddress": "172.18.0.2",

[root@docker-01 harbor]# docker inspect test-02 | grep -i ipaddr

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAddress": "172.18.0.3",

[root@docker-01 harbor]# docker exec -it test-01 ping test-02

PING test-02 (172.18.0.3): 56 data bytes

64 bytes from 172.18.0.3: seq=0 ttl=64 time=0.049 ms

^C

--- test-02 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.049/0.049/0.049 ms

[root@docker-01 harbor]# docker exec -it test-02 ping test-01

PING test-01 (172.18.0.2): 56 data bytes

64 bytes from 172.18.0.2: seq=0 ttl=64 time=0.034 ms

^C

--- test-01 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.034/0.034/0.034 ms

【9】docker-none: configuring a bridged network

(Resources in the container can then be reached directly via the container IP)

Drawback: the configuration is lost when docker restarts

01 - Start a container on the none network

[root@docker-01 ~]# docker run -dit --network none nginx:latest

c65f03b113bde2f957bcc971f0705292151a9a84009a390963a12adee210e8d9

[root@docker-01 ~]# docker exec -it festive_yalow hostname -I

[root@docker-01 ~]#

- Copy the host's eth0 configuration to br0

- Edit the br0 file

- Restart network

- Assign the container IP with the pipework tool. Download: GitHub - jpetazzo/pipework: Software-Defined Networking tools for LXC (LinuX Containers)

[root@docker-01 ~]# cd /etc/sysconfig/network-scripts/

[root@docker-01 network-scripts]# cp ifcfg-eth0 ifcfg-br0

[root@docker-01 network-scripts]# vim ifcfg-br0

TYPE=BRIDGE

BOOTPROTO=none

DEFROUTE=yes

PEERDNS=yes

PEERROUTES=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_PEERDNS=yes

IPV6_PEERROUTES=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=br0

DEVICE=br0

ONBOOT=yes

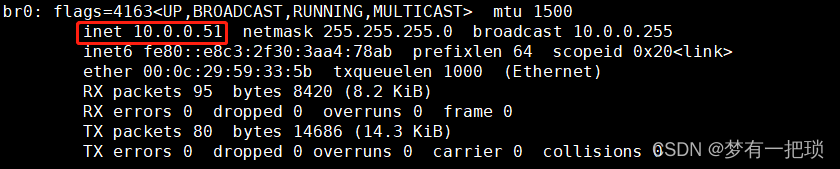

IPADDR=10.0.0.51

NETMASK=255.255.255.0

GATEWAY=10.0.0.254

DNS1=10.0.0.254

[root@docker-01 network-scripts]# systemctl restart network

## Edit the eth0 interface configuration

## Add BRIDGE=br0 so that traffic for this IP is received by the br0 interface

[root@docker-01 network-scripts]# systemctl restart network

[root@docker-01 ~]# ip a| grep br0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master br0 state UP qlen 1000

162: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP qlen 1000

inet 10.0.0.51/24 brd 10.0.0.255 scope global br0

[root@docker-01 ~]# ip a| grep eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master br0 state UP qlen 1000

[root@docker-01 ~]# yum -y install unzip

[root@docker-01 ~]# unzip pipework-master.zip

Archive: pipework-master.zip

fb03d42746a31729f3e3ddd4963e4eeaeed76714

creating: pipework-master/

extracting: pipework-master/.gitignore

inflating: pipework-master/LICENSE

inflating: pipework-master/README.md

inflating: pipework-master/docker-compose.yml

creating: pipework-master/doctoc/

inflating: pipework-master/doctoc/Dockerfile

inflating: pipework-master/pipework

inflating: pipework-master/pipework.spec

[root@docker-01 ~]# mv pipework-master /usr/src/

[root@docker-01 ~]# ln -s /usr/src/pipework-master/pipework /usr/local/bin/

[root@docker-01 ~]# which pipwork

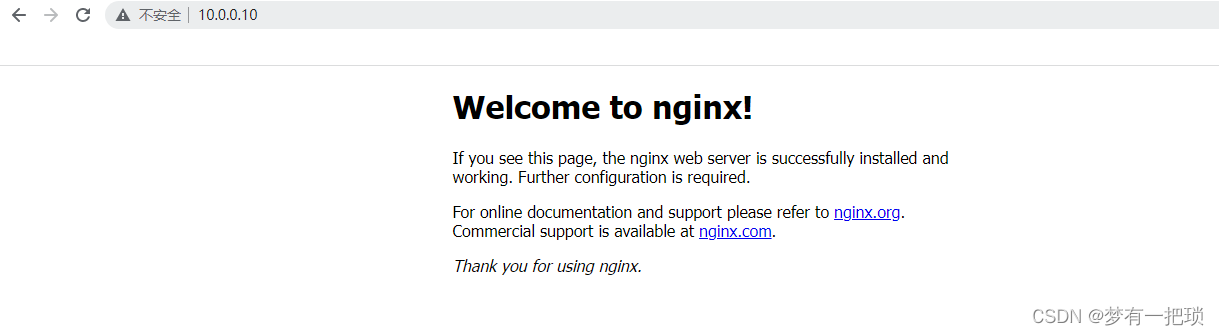

/usr/bin/which: no pipwork in (/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin)

[root@docker-01 ~]# pipework br0 festive_yalow 10.0.0.10/24@10.0.0.254

[root@docker-01 ~]# docker exec -it festive_yalow hostname -I

10.0.0.10

[root@docker-01 ~]#

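【10】docker-macvlan (communication between containers on different hosts)

- Drawbacks of macvlan: the host cannot reach its own containers, it is cumbersome to use at scale, and it does nothing to prevent IP conflicts

## docker-01: create the macvlan network

[root@docker-01 ~]# docker network ls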

NETWORK ID NAME DRIVER SCOPE

e8c1e9f4cb1b bridge bridge local

e5afa29cdc9d host host local

6dee573ffc75 none null local

[root@docker-01 ~]#

[root@docker-01 ~]# docker network create -d macvlan --subnet 10.0.0.0/24 --gateway 10.0.0.254 -o parent=eth0 macvlan-01

ef4be34e90d59c1966451b6e79b1e321d46bd3b7f9bbf8bc56ecf32fd5cd3419

[root@docker-01 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

e8c1e9f4cb1b bridge bridge local

e5afa29cdc9d host host local

ef4be34e90d5 macvlan-01 macvlan local

6dee573ffc75 none null local

## docker-02: create the macvlan network

[root@docker-02 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

e2dc92e248a6 bridge bridge local

8128c95017cd host host local

95f3f6f65a18 none null local

[root@docker-02 ~]#

[root@docker-02 ~]# docker network create -d macvlan --subnet 10.0.0.0/24 --gateway 10.0.0.254 -o parent=eth0 macvlan-01

32a1ca08b526e0651f9a53120e9d6e451076e1d270605f4c8a9c39b3ddd1d91c

[root@docker-02 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

e2dc92e248a6 bridge bridge local

8128c95017cd host host local

32a1ca08b526 macvlan-01 macvlan local

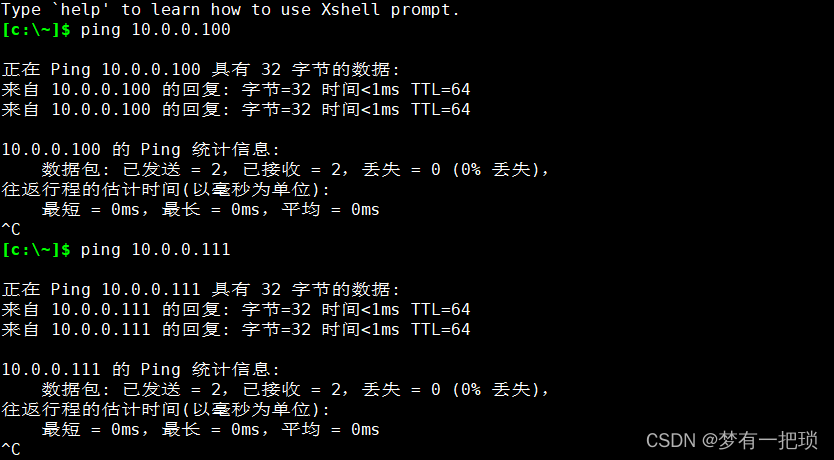

95f3f6f65a18 none null local

## docker-01: start a container with a fixed IP address

[root@docker-01 ~]# docker run -dit --network macvlan-01 --ip 10.0.0.100 alpine:latest

18cee62da7666e7348b7d43d45a087beed1786b1a60a32252cca71fc9412f411

[root@docker-01 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

18cee62da766 alpine:latest "/bin/sh" 6 seconds ago Up 4 seconds amazing_dhawan

[root@docker-01 ~]# docker inspect amazing_dhawan | grep -i ipaddr

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAddress": "10.0.0.100",

## docker-02: start a container with a fixed IP address

[root@docker-02 ~]# docker run -dit --network macvlan-01 --ip 10.0.0.111 alpine:latest

87ed8fe21caa986eb05581789ce8205e30b2b5f81fc2e5793fbb79210d7d7a01

[root@docker-02 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

87ed8fe21caa alpine:latest "/bin/sh" 5 seconds ago Up 3 seconds charming_khorana

[root@docker-02 ~]# docker inspect charming_khorana | grep -i ipaddr

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAddress": "10.0.0.111",

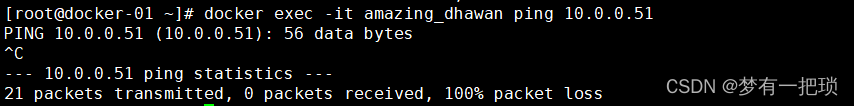

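Access test: reaching the containers from outside

Access test, macvlan drawback: in this setup the container cannot reach the outside network or the host

【11】docker-weave (cross-host networking, third-party network plugin)

Installing the weave plugin on docker-01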

[root@docker-01 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

1cbbe7acab5c bridge bridge local

e5afa29cdc9d host host local

6dee573ffc75 none null local

[root@docker-01 ~]# wget https://gitcode.net/mirrors/weaveworks/weave/-/archive/latest_release/weave-latest_release.tar.gz

[root@docker-01 ~]# tar xf weave-latest_release.tar.gz -C /usr/src/

[root@docker-01 ~]# cd /usr/src/weave-latest_release/

[root@docker-01 weave-latest_release]# cp weave /usr/local/bin/

[root@docker-01 weave-latest_release]# which weave

/usr/local/bin/weave

## Install weave

[root@docker-01 weave-latest_release]# weave version

weave script unreleased

Unable to find image 'weaveworks/weaveexec:latest' locally

latest: Pulling from weaveworks/weaveexec

21c83c524219: Pull complete

02ec35b6f627: Pull complete

c40f141adde9: Pull complete

a63db11be476: Pull complete

e8d3a1b4fb09: Pull complete

a32777c54c9c: Pull complete

62ae831e3996: Pull complete

4dce36b0e389: Pull complete

6f3464413eb4: Pull complete

Digest: sha256:847cdb3eb0d38ff6590b6066ec0f6b02ced47c1d76a78f3f93d8ca6145aecaa5

Status: Downloaded newer image for weaveworks/weaveexec:latest

weave git-34de0b10a69c

## Start weave

[root@docker-01 weave-latest_release]# weave launch

latest: Pulling from weaveworks/weave

latest: Pulling from weaveworks/weave

21c83c524219: Already exists

02ec35b6f627: Already exists

c40f141adde9: Already exists

a63db11be476: Already exists

e8d3a1b4fb09: Already exists

Digest: sha256:a4f1dd7b4fcd3a391c165f1ab20c5f72330c22fe0918c899be67763717bb2a28

Status: Downloaded newer image for weaveworks/weave:latest

docker.io/weaveworks/weave:latest

latest: Pulling from weaveworks/weavedb

a53a673d456f: Pull complete

Digest: sha256:69451a2121b288e09329241de9401af1aeddd05d93f145764bbb735a4ea05c76

Status: Downloaded newer image for weaveworks/weavedb:latest

docker.io/weaveworks/weavedb:latest

d0c0b0e24094755002c7338d101a656e5c511af932a55014a5cb8a3439530f17

[root@docker-01 weave-latest_release]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d0c0b0e24094 weaveworks/weave:latest "/home/weave/weaver …" 31 seconds ago Up 30 seconds weave

4dc0487ac570 weaveworks/weaveexec:latest "data-only" 31 seconds ago Created weavevolumes-latest

957be4362c63 weaveworks/weavedb:latest "data-only" 31 seconds ago Created weavedb

[root@docker-01 weave-latest_release]# docker network ls

NETWORK ID NAME DRIVER SCOPE

1cbbe7acab5c bridge bridge local

e5afa29cdc9d host host local

6dee573ffc75 none null local

b9fde5aa55c8 weave weavemesh local

Installing the weave plugin on docker-02

[root@docker-02 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

e2dc92e248a6 bridge bridge local

8128c95017cd host host local

95f3f6f65a18 none null local

[root@docker-02 ~]# wget https://gitcode.net/mirrors/weaveworks/weave/-/archive/latest_release/weave-latest_release.tar.gz

[root@docker-02 ~]# tar xf weave-latest_release.tar.gz -C /usr/src/

[root@docker-02 ~]# cd /usr/src/weave-latest_release/

[root@docker-02 weave-latest_release]# cp weave /usr/local/bin/

[root@docker-02 weave-latest_release]# which weave

/usr/local/bin/weave

## Install weave

[root@docker-02 weave-latest_release]# weave version

weave script unreleased

Unable to find image 'weaveworks/weaveexec:latest' locally

latest: Pulling from weaveworks/weaveexec

21c83c524219: Pull complete

02ec35b6f627: Pull complete

c40f141adde9: Pull complete

a63db11be476: Pull complete

e8d3a1b4fb09: Pull complete

a32777c54c9c: Pull complete

62ae831e3996: Pull complete

4dce36b0e389: Pull complete

6f3464413eb4: Pull complete

Digest: sha256:847cdb3eb0d38ff6590b6066ec0f6b02ced47c1d76a78f3f93d8ca6145aecaa5

Status: Downloaded newer image for weaveworks/weaveexec:latest

weave git-34de0b10a69c

## Start weave

[root@docker-02 weave-latest_release]# weave launch

latest: Pulling from weaveworks/weave

latest: Pulling from weaveworks/weave

21c83c524219: Already exists

02ec35b6f627: Already exists

c40f141adde9: Already exists

a63db11be476: Already exists

e8d3a1b4fb09: Already exists

Digest: sha256:a4f1dd7b4fcd3a391c165f1ab20c5f72330c22fe0918c899be67763717bb2a28

Status: Downloaded newer image for weaveworks/weave:latest

docker.io/weaveworks/weave:latest

latest: Pulling from weaveworks/weavedb

a53a673d456f: Pull complete

Digest: sha256:69451a2121b288e09329241de9401af1aeddd05d93f145764bbb735a4ea05c76

Status: Downloaded newer image for weaveworks/weavedb:latest

docker.io/weaveworks/weavedb:latest

1cf777979aaf506941f53e67ea0349458b8a285f745b9bdd116f06c98c81a0c9

[root@docker-02 weave-latest_release]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1cf777979aaf weaveworks/weave:latest "/home/weave/weaver …" 8 seconds ago Up 7 seconds weave

d1855505eee5 weaveworks/weaveexec:latest "data-only" 8 seconds ago Created weavevolumes-latest

c7830a306f15 weaveworks/weavedb:latest "data-only" 8 seconds ago Created weavedb

[root@docker-02 weave-latest_release]# docker network ls

NETWORK ID NAME DRIVER SCOPE

e2dc92e248a6 bridge bridge local

8128c95017cd host host local

95f3f6f65a18 none null local

f68122130ccc weave weavemesh local

Connect the weave routers on the hosts to each other

## Run on docker-01

[root@docker-01 weave-latest_release]# weave connect 10.0.0.52

## Run on docker-02

[root@docker-02 weave-latest_release]# weave connect 10.0.0.51

Start a container on docker-01 and assign it an address on the weave network

[root@docker-01 ~]# docker run -dit --name test-01 alpine:latest

b0f74c404f9011c35a8a1450c6c38100f7350e5f82cbceafe7eff352e2b296a9

[root@docker-01 ~]# weave attach 172.10.3.23/24 test-01

172.10.3.23

[root@docker-01 ~]# docker exec -it test-01 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

15: eth0@if16: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

17: ethwe@if18: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1376 qdisc noqueue state UP

link/ether 1a:7a:40:92:39:b4 brd ff:ff:ff:ff:ff:ff

inet 172.10.3.23/24 brd 172.10.3.255 scope global ethwe

valid_lft forever preferred_lft forever

Start a container on docker-02 and assign it an address on the weave network

[root@docker-02 ~]# docker run -dit --name test-02 alpine:latest

e140c3376180cc443d2ce23aae1b731124b3412f0550265a792df18ee3450c03

[root@docker-02 ~]# weave attach 172.10.3.24/24 test-02

172.10.3.24

[root@docker-02 ~]# docker exec -it test-02 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

15: eth0@if16: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

17: ethwe@if18: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1376 qdisc noqueue state UP

link/ether 92:ea:f5:1f:22:41 brd ff:ff:ff:ff:ff:ff

inet 172.10.3.24/24 brd 172.10.3.255 scope global ethwe

valid_lft forever preferred_lft forever

Verify that the containers can reach each other

## docker-01

[root@docker-01 ~]# docker exec -it test-01 ping 172.10.3.24

PING 172.10.3.24 (172.10.3.24): 56 data bytes

64 bytes from 172.10.3.24: seq=0 ttl=64 time=2.490 ms

64 bytes from 172.10.3.24: seq=1 ttl=64 time=1.111 ms

^C

--- 172.10.3.24 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 1.111/1.800/2.490 ms

## docker-02

[root@docker-02 ~]# docker exec -it test-02 ping 172.10.3.23

PING 172.10.3.23 (172.10.3.23): 56 data bytes

64 bytes from 172.10.3.23: seq=0 ttl=64 time=1.766 ms

64 bytes from 172.10.3.23: seq=1 ttl=64 time=1.385 ms

^C

--- 172.10.3.23 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 1.385/1.575/1.766 ms
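Both `weave attach` calls above use addresses in 172.10.3.0/24. As Weave Net's documentation describes it, containers attached to different subnets are isolated from each other, so it can help to confirm that two addresses share a network before attaching them. The following is a minimal sketch in plain shell; `ip_to_int` and `same_subnet` are hypothetical helper names introduced here and are not part of weave.

```shell
# Convert a dotted IPv4 address to a single integer.
ip_to_int() {
  local IFS=.
  # Split the address on dots into four positional parameters.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# same_subnet <ip/prefix> <ip>
# Succeeds when the second address lies in the first address's network.
same_subnet() {
  local a=${1%/*} prefix=${1#*/} b=${2%/*}
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$a") & mask )) -eq $(( $(ip_to_int "$b") & mask )) ]
}
```

For example, `same_subnet 172.10.3.23/24 172.10.3.24 && echo ok` prints `ok` for the two addresses used in this test.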

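【12】docker-overlay (cross-host networking)

The overlay driver is Docker's built-in solution for cross-host networking. It creates a virtual network that spans multiple Docker hosts; containers attached to it can talk to each other as if they were on the same host, with no manual configuration of network devices, routes or NAT.

001-Create the docker-swarm cluster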

## docker-01

[root@docker-01 ~]# docker swarm init --advertise-addr 10.0.0.51

Swarm initialized: current node (a7e5s8wciulvyrg4pgdv83lwu) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-2zhl0zla5y6nn2hrswec6tts5yvyjlq96numxewxpi900065qs-81pajrurl9khn194e9ql1f35w 10.0.0.51:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

[root@docker-01 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

a7e5s8wciulvyrg4pgdv83lwu * docker-01 Ready Active Leader 24.0.5

## Join docker-02 and docker-03 to the cluster

[root@docker-02 ~]# docker swarm join --token SWMTKN-1-2zhl0zla5y6nn2hrswec6tts5yvyjlq96numxewxpi900065qs-81pajrurl9khn194e9ql1f35w 10.0.0.51:2377

This node joined a swarm as a worker.

## List the cluster nodes on docker-01

[root@docker-01 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

a7e5s8wciulvyrg4pgdv83lwu * docker-01 Ready Active Leader 24.0.5

o6u5wepq22grh9n5vzv3ymiz3 docker-02 Ready Active 24.0.5

292u87wndtqjh5velgh2tngcy docker-03 Ready Active 24.0.5

002-Create the overlay network on the manager

[root@docker-01 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

1cbbe7acab5c bridge bridge local

bded221fbed9 docker_gwbridge bridge local

e5afa29cdc9d host host local

81vbjl2qgv5q ingress overlay swarm

6dee573ffc75 none null local

[root@docker-01 ~]# docker network create -d overlay --attachable my-overlay

yxnhx5v7sy8a93blqg5mnq2or

[root@docker-01 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

1cbbe7acab5c bridge bridge local

bded221fbed9 docker_gwbridge bridge local

e5afa29cdc9d host host local

81vbjl2qgv5q ingress overlay swarm

yxnhx5v7sy8a my-overlay overlay swarm

6dee573ffc75 none null local

003-Verify the network from docker-02 and docker-03

## Verify on docker-02

[root@docker-02 ~]# docker run -it --network=my-overlay --name test-02 alpine:latest

/ # ping test-03

PING test-03 (10.0.1.6): 56 data bytes

64 bytes from 10.0.1.6: seq=0 ttl=64 time=0.262 ms

64 bytes from 10.0.1.6: seq=1 ttl=64 time=0.319 ms

^C

--- test-03 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.262/0.290/0.319 ms

## Verify on docker-03

[root@docker-03 ~]# docker run -it --network=my-overlay --name test-03 alpine:latest

/ # ping test-02

PING test-02 (10.0.1.4): 56 data bytes

64 bytes from 10.0.1.4: seq=0 ttl=64 time=0.437 ms

64 bytes from 10.0.1.4: seq=1 ttl=64 time=0.625 ms

^C

--- test-02 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.437/0.531/0.625 ms

## Containers that are not attached to the my-overlay network cannot reach each other by hostname

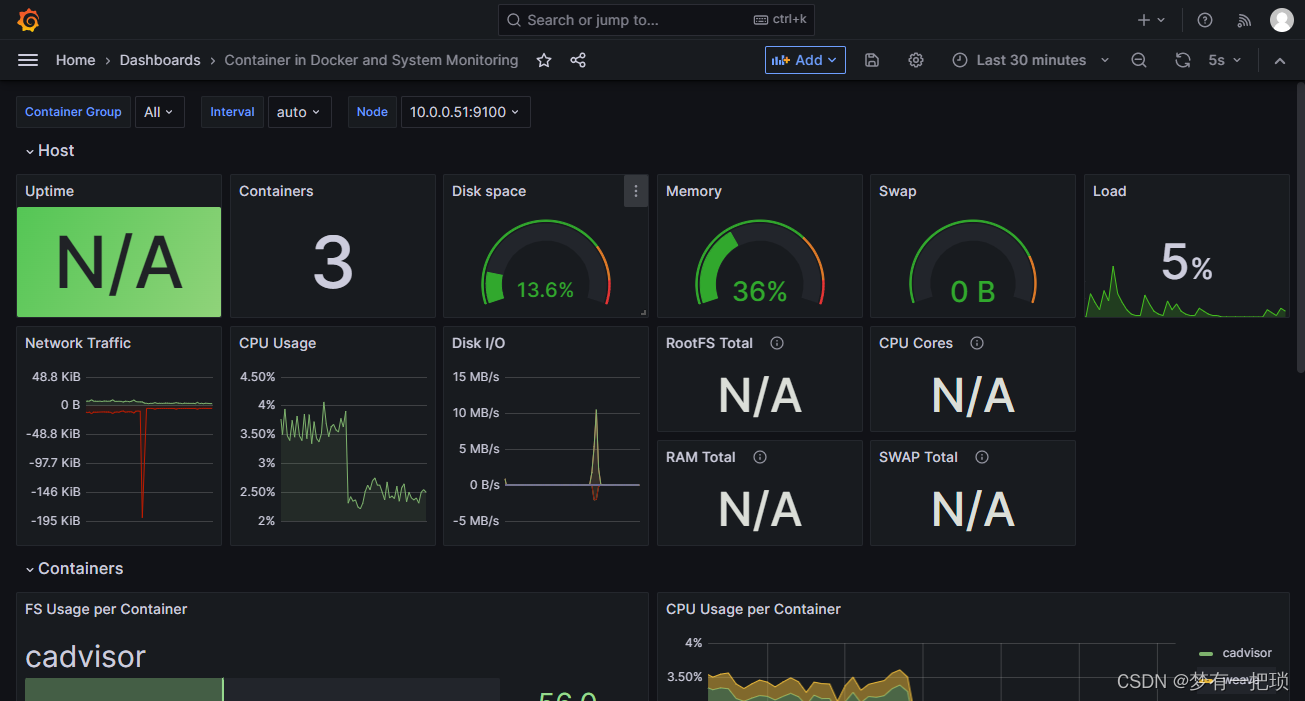

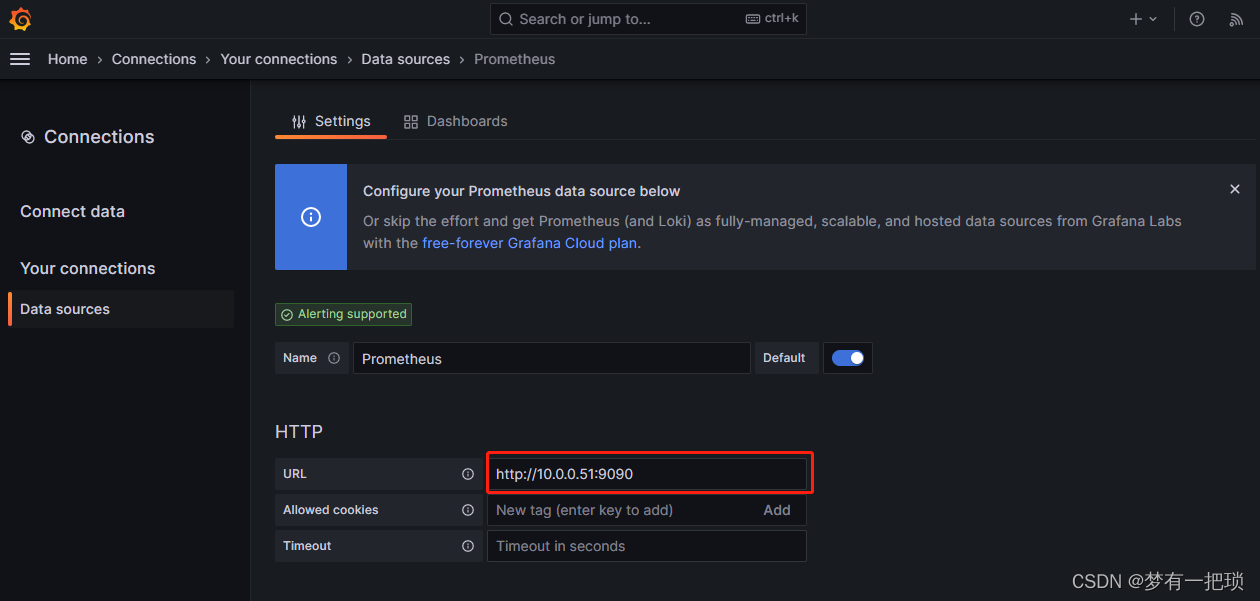
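The worker join in step 001 is the same command on every node, so it can be generated rather than pasted by hand. The sketch below only prints the commands for review and does not run them. The helper name `print_swarm_joins` and the use of `ssh root@<host>` are assumptions made for illustration; the port 2377 and the addresses come from the output above.

```shell
# print_swarm_joins <token> <manager-ip> <worker-ip>...
# Prints one join command per worker; nothing is executed.
print_swarm_joins() {
  local token=$1 manager=$2 host
  shift 2
  for host in "$@"; do
    printf 'ssh root@%s docker swarm join --token %s %s:2377\n' \
      "$host" "$token" "$manager"
  done
}
```

On the manager, `docker swarm join-token -q worker` prints only the worker token, so `print_swarm_joins "$(docker swarm join-token -q worker)" 10.0.0.51 10.0.0.52 10.0.0.53` would list the two commands for docker-02 and docker-03.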

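【13】prometheus: monitoring hosts and containers

- Exposing metrics: node-exporter, mysql-exporter, cadvisor

- Collecting metrics: job_name (declares the monitored targets and the alerting component)

- Storing data: the built-in local time-series database

- Alerting: Alertmanager (email, DingTalk)

- Visualization: grafana

- Pulling pushed data: pushgateway (Prometheus normally pulls metrics itself, but some programs cannot be scraped; they can push their metrics to the pushgateway first, and Prometheus then pulls them from there)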

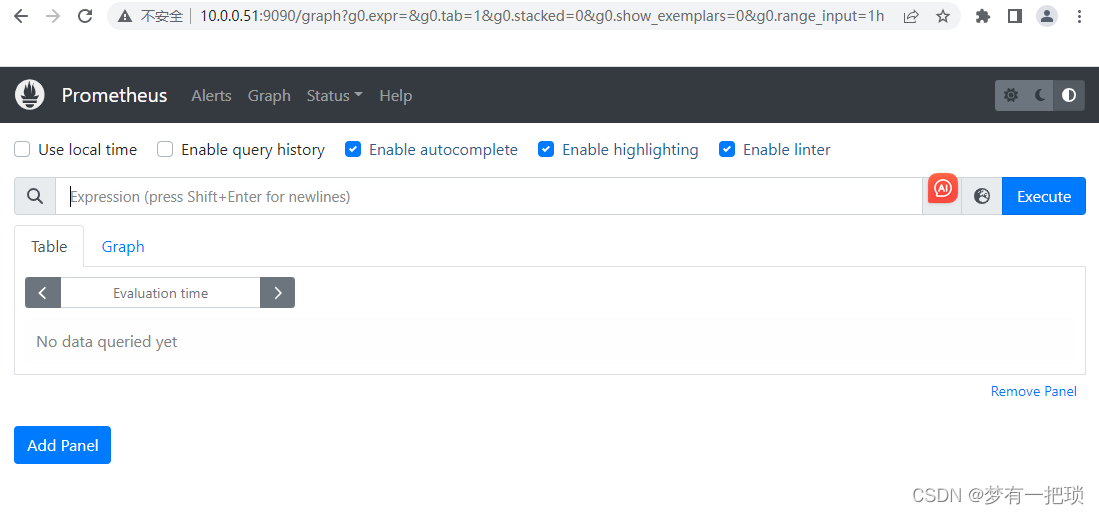

Install prometheus-server on docker-01 (9090)

- Download | Prometheus

- Release 2.46.0 / 2023-07-25 · prometheus/prometheus · GitHub

## docker-01

[root@docker-01 ~]# tar xf prometheus-2.46.0.linux-amd64.tar.gz -C /usr/src

[root@docker-01 ~]# cd /usr/src/

[root@docker-01 src]# mv prometheus-2.46.0.linux-amd64/ prometheus

## Start

[root@docker-01 src]# cd prometheus/

[root@docker-01 prometheus]# ./prometheus --config.file="prometheus.yml" &

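Common options

./prometheus -h

--config.file="prometheus.yml" # configuration file to use

--web.listen-address="0.0.0.0:9090" # listen address and port

--log.level=info # log level

--alertmanager.timeout=10s # timeout when talking to Alertmanager

--storage.tsdb.path="data/" # data directory

--storage.tsdb.retention.time=15d # how long data is kept; the default is 15 days

Managing the service with systemctl: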

[root@docker-01 prometheus]# vim /usr/lib/systemd/system/prometheus.service

[Unit]

Description=prometheus

[Service]

ExecStart=/usr/src/prometheus/prometheus --config.file=/usr/src/prometheus/prometheus.yml

ExecReload=/bin/kill -HUP $MAINPID

KillMode=process

Restart=on-failure

[Install]

WantedBy=multi-user.target

[root@docker-01 prometheus]# kill 10579

[root@docker-01 prometheus]# systemctl daemon-reload

[root@docker-01 prometheus]# systemctl start prometheus.service

[root@docker-01 prometheus]# systemctl enable prometheus.service

Created symlink from /etc/systemd/system/multi-user.target.wants/prometheus.service to /usr/lib/systemd/system/prometheus.service.

[root@docker-01 prometheus]# netstat -lntp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 172.17.0.1:53 0.0.0.0:* LISTEN 4674/weaver

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1898/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 2079/master

tcp 0 0 127.0.0.1:6782 0.0.0.0:* LISTEN 4674/weaver

tcp 0 0 127.0.0.1:6784 0.0.0.0:* LISTEN 4674/weaver

tcp6 0 0 :::7946 :::* LISTEN 2866/dockerd

tcp6 0 0 :::8080 :::* LISTEN 2866/dockerd

tcp6 0 0 :::22 :::* LISTEN 1898/sshd

tcp6 0 0 ::1:25 :::* LISTEN 2079/master

tcp6 0 0 :::6783 :::* LISTEN 4674/weaver

tcp6 0 0 :::9090 :::* LISTEN 10781/prometheus

tcp6 0 0 :::2377 :::* LISTEN 2866/dockerd

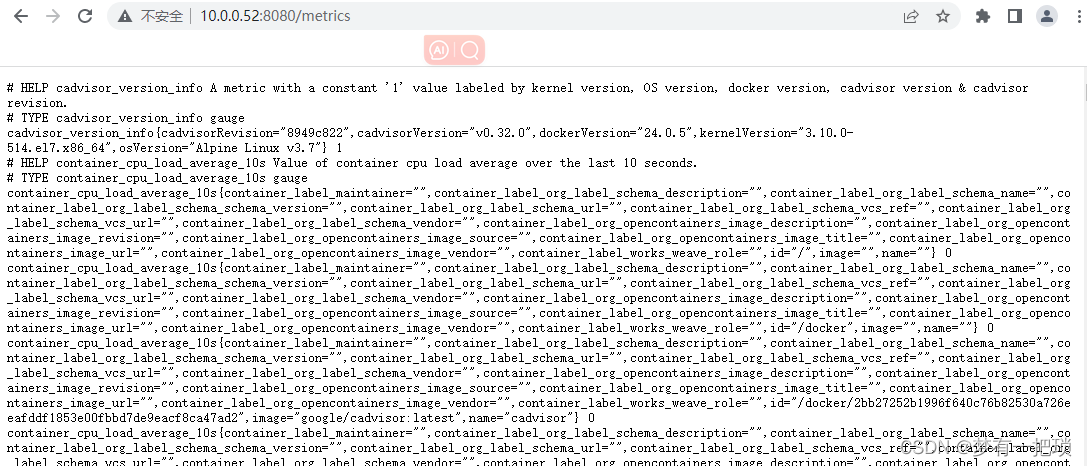

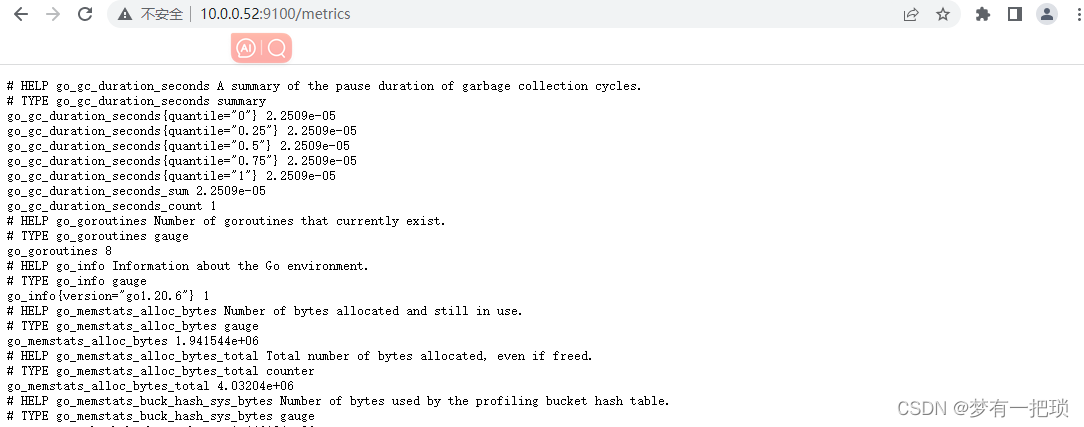
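The unit file above follows a fixed pattern in which only the description and the `ExecStart` line vary, so it can be generated from a small template. This is a minimal sketch; `render_unit` is a hypothetical helper name, and the directive values are copied from the unit shown above.

```shell
# render_unit <description> <ExecStart command line>
# Writes a minimal systemd unit to stdout.
render_unit() {
  cat <<EOF
[Unit]
Description=$1

[Service]
ExecStart=$2
ExecReload=/bin/kill -HUP \$MAINPID
KillMode=process
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}
```

A possible use is `render_unit prometheus "/usr/src/prometheus/prometheus --config.file=/usr/src/prometheus/prometheus.yml" > /usr/lib/systemd/system/prometheus.service`, followed by `systemctl daemon-reload`.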

Install node_exporter (9100) and cadvisor (8080) to monitor the nodes (hosts and containers)

- node-exporter: monitors the host (CPU, memory, disk, network, file descriptors, system load, system services)

- cadvisor: monitors all container metrics

## Install node_exporter on docker-01, docker-02 and docker-03

[root@docker-01 ~]# tar xf node_exporter-1.6.1.linux-amd64.tar.gz -C /usr/src/

[root@docker-01 ~]# cd /usr/src/

[root@docker-01 src]# mv node_exporter-1.6.1.linux-amd64/ node_exporter

[root@docker-01 src]# vim /usr/lib/systemd/system/node_exporter.service

[Unit]

Description=node_exporter

[Service]

ExecStart=/usr/src/node_exporter/node_exporter

ExecReload=/bin/kill -HUP $MAINPID

KillMode=process

Restart=on-failure

[Install]

WantedBy=multi-user.target

[root@docker-01 src]# systemctl daemon-reload

[root@docker-01 src]# systemctl start node_exporter.service

[root@docker-01 src]# systemctl enable node_exporter.service

Created symlink from /etc/systemd/system/multi-user.target.wants/node_exporter.service to /usr/lib/systemd/system/node_exporter.service.

[root@docker-01 src]# netstat -lntp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 172.17.0.1:53 0.0.0.0:* LISTEN 4674/weaver

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1898/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 2079/master

tcp 0 0 127.0.0.1:6782 0.0.0.0:* LISTEN 4674/weaver

tcp 0 0 127.0.0.1:6784 0.0.0.0:* LISTEN 4674/weaver

tcp6 0 0 :::7946 :::* LISTEN 2866/dockerd

tcp6 0 0 :::9100 :::* LISTEN 11022/node_exporter

tcp6 0 0 :::8080 :::* LISTEN 2866/dockerd

tcp6 0 0 :::22 :::* LISTEN 1898/sshd

tcp6 0 0 ::1:25 :::* LISTEN 2079/master

tcp6 0 0 :::6783 :::* LISTEN 4674/weaver

tcp6 0 0 :::9090 :::* LISTEN 10781/prometheus

tcp6 0 0 :::2377 :::* LISTEN 2866/dockerd

## Install cadvisor on docker-01, docker-02 and docker-03

[root@docker-01 src]# docker run -d --volume=/:/rootfs:ro --volume=/var/run:/var/run:rw --volume=/sys/:/sys:ro --volume=/var/lib/docker/:/var/lib/docker:ro -p 8080:8080 --restart=always --name=cadvisor google/cadvisor:latest

6384088b943acdeca3a945b120bcc896d9ab69f6b5ddf85205b152e60a5bdb34

[root@docker-01 src]# docker ps -a -l

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6384088b943a google/cadvisor:latest "/usr/bin/cadvisor -…" 20 seconds ago Up 20 seconds 0.0.0.0:8080->8080/tcp, :::8080->8080/tcp cadvisor

Access test: check that the metrics pages return data (cadvisor, node_exporter)

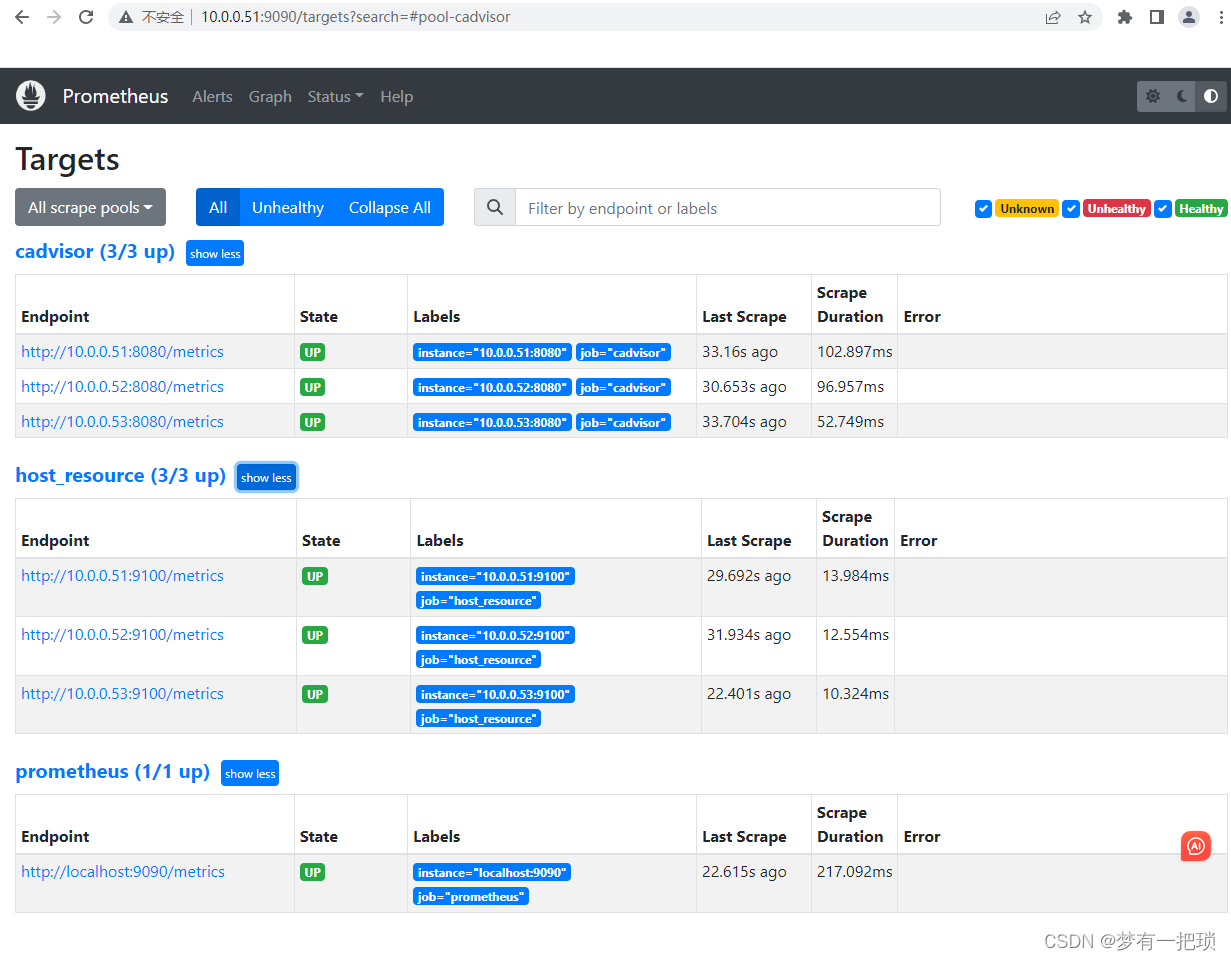
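The same check can be made from the command line, since both exporters serve plain-text metrics over HTTP. The sketch below reads that text format on stdin and prints the value of one metric. `metric_value` is a hypothetical helper name, and the sketch assumes samples carry no trailing timestamp, so the value is the last field on the line.

```shell
# metric_value <metric-name>
# Reads Prometheus text-format metrics on stdin and prints the value of
# the first sample whose name matches exactly. Comment lines are skipped.
metric_value() {
  awk -v name="$1" '
    /^#/ { next }
    {
      metric = $1
      sub(/[{].*/, "", metric)   # drop the label set, if any
      if (metric == name) { print $NF; exit }
    }'
}
```

For example, `curl -s http://10.0.0.51:9100/metrics | metric_value node_load1` would print the one-minute load average reported by node_exporter on docker-01; empty output means the metric was not found.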

docker-01: edit the main prometheus configuration file (scrape targets):

## docker-01

[root@docker-01 src]# vim prometheus/prometheus.yml

.......

.......

scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"
    static_configs:
      - targets: ["localhost:9090"]
  - job_name: "cadvisor"
    static_configs:
      - targets: ['10.0.0.51:8080','10.0.0.52:8080','10.0.0.53:8080']
  - job_name: "host_resource"
    static_configs:
      - targets: ['10.0.0.51:9100','10.0.0.52:9100','10.0.0.53:9100']

## A change to the configuration file requires a service restart; file-based target discovery avoids this

[root@docker-01 src]# systemctl restart prometheus.service

File-based target discovery: targets can change without restarting the service each time

[root@docker-01 src]# vim prometheus/prometheus.yml

......

......

scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"
    static_configs:
      - targets: ["localhost:9090"]
  - job_name: "cadvisor"
    file_sd_configs:
      - files:
          - /usr/src/prometheus/file_sd/cadvisor.json
        refresh_interval: 10s
  - job_name: "host_resource"
    file_sd_configs:
      - files:
          - /usr/src/prometheus/file_sd/host_resource.json
        refresh_interval: 10s

[root@docker-01 src]# mkdir prometheus/file_sd

[root@docker-01 src]# vim prometheus/file_sd/cadvisor.json

[{"targets": ["10.0.0.51:8080","10.0.0.52:8080","10.0.0.53:8080"]}

]

[root@docker-01 src]# vim prometheus/file_sd/host_resource.json

[{"targets": ["10.0.0.51:9100","10.0.0.52:9100","10.0.0.53:9100"]}

]

[root@docker-01 src]# systemctl restart prometheus.service
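The two target files above differ only in the port, so they can be generated from a host list. This is a minimal sketch; `render_file_sd` is a hypothetical helper name, and the output is a single-line version of the JSON shown above.

```shell
# render_file_sd <port> <host>...
# Prints a file_sd target list as JSON on stdout.
render_file_sd() {
  local port=$1 host targets=""
  shift
  for host in "$@"; do
    # Append a quoted "host:port" entry, comma-separated after the first.
    targets="${targets:+$targets,}\"${host}:${port}\""
  done
  printf '[{"targets": [%s]}]\n' "$targets"
}
```

For example, `render_file_sd 9100 10.0.0.51 10.0.0.52 10.0.0.53 > host_resource.json.tmp && mv host_resource.json.tmp host_resource.json`, run inside the `file_sd` directory, swaps the finished file into place in one step so that a half-written file is never picked up.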

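## When nodes are added later, append their IP addresses to these files; the prometheus service no longer needs a restart

【14】Email alerting with alertmanager (9093, 9094)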

- Download | Prometheus

## Install alertmanager on docker-01

[root@docker-01 ~]# tar xf alertmanager-0.25.0.linux-amd64.tar.gz -C /usr/src/

[root@docker-01 ~]# cd /usr/src/

[root@docker-01 src]# mv alertmanager-0.25.0.linux-amd64/ alertmanager

## Edit the configuration file

[root@docker-01 src]# cd alertmanager/

[root@docker-01 alertmanager]# cp alertmanager.yml{,.bak}

[root@docker-01 alertmanager]# vim alertmanager.yml

global:
  resolve_timeout: 5m
  smtp_from: '*********@qq.com'
  smtp_smarthost: 'smtp.qq.com:465'
  smtp_auth_username: '*********@qq.com'
  smtp_auth_password: 'vgoejqgxmrbtbcfg'
  smtp_require_tls: false
  smtp_hello: 'qq.com'

route:
  group_by: ['alertname']
  group_wait: 5s
  group_interval: 5s
  repeat_interval: 5m
  receiver: 'email'

receivers:
  - name: 'email'
    email_configs:
      - to: '************@163.com'

inhibit_rules:
  - source_match:
      severity: 'critical'
    target_match:
      severity: 'warning'
    equal: ['alertname', 'dev', 'instance']

## Start the service

[root@docker-01 alertmanager]# vim /usr/lib/systemd/system/alertmanager.service

[Unit]

Description=alertmanager

[Service]

ExecStart=/usr/src/alertmanager/alertmanager --config.file=/usr/src/alertmanager/alertmanager.yml

ExecReload=/bin/kill -HUP $MAINPID

KillMode=process

Restart=on-failure

[Install]

WantedBy=multi-user.target

[root@docker-01 alertmanager]# systemctl daemon-reload

[root@docker-01 alertmanager]# systemctl start alertmanager.service

[root@docker-01 alertmanager]# systemctl enable alertmanager.service

Created symlink from /etc/systemd/system/multi-user.target.wants/alertmanager.service to /usr/lib/systemd/system/alertmanager.service.

[root@docker-01 alertmanager]# netstat -lntp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:8080 0.0.0.0:* LISTEN 11287/docker-proxy

tcp 0 0 172.17.0.1:53 0.0.0.0:* LISTEN 4674/weaver

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1898/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 2079/master

tcp 0 0 127.0.0.1:6782 0.0.0.0:* LISTEN 4674/weaver

tcp 0 0 127.0.0.1:6784 0.0.0.0:* LISTEN 4674/weaver

tcp6 0 0 :::7946 :::* LISTEN 2866/dockerd

tcp6 0 0 :::9100 :::* LISTEN 11022/node_exporter

tcp6 0 0 :::8080 :::* LISTEN 11292/docker-proxy

tcp6 0 0 :::22 :::* LISTEN 1898/sshd

tcp6 0 0 ::1:25 :::* LISTEN 2079/master

tcp6 0 0 :::6783 :::* LISTEN 4674/weaver

tcp6 0 0 :::9090 :::* LISTEN 11933/prometheus

tcp6 0 0 :::9093 :::* LISTEN 12845/alertmanager

tcp6 0 0 :::9094 :::* LISTEN 12845/alertmanager

tcp6 0 0 :::2377 :::* LISTEN 2866/dockerd

Update the prometheus configuration

[root@docker-01 ~]# vim /usr/src/prometheus/prometheus.yml

......

......

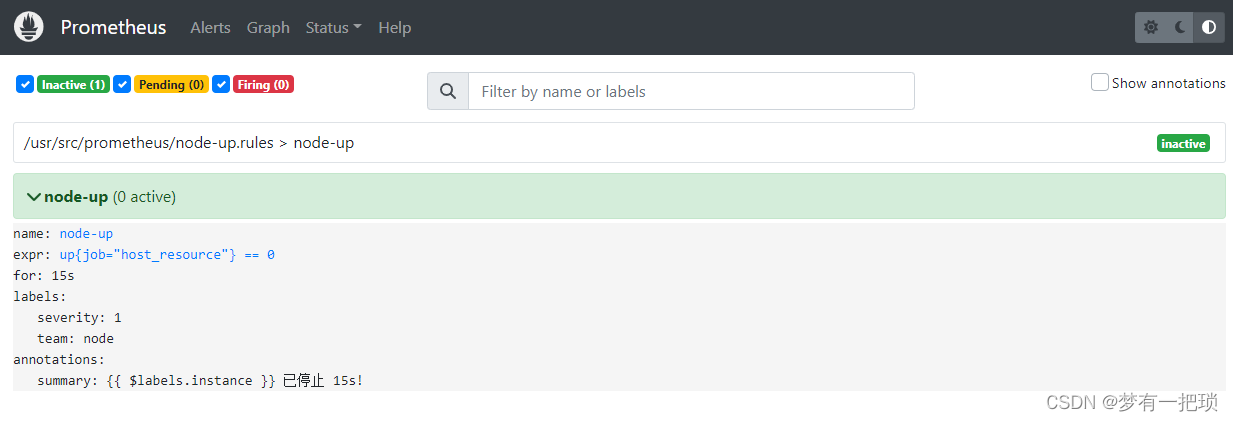

alerting:
  alertmanagers:
    - static_configs:
        - targets:
            - 10.0.0.51:9093

rule_files:
  - "node-up.rules"

......

......

## Edit the alerting rule

[root@docker-01 ~]# vim /usr/src/prometheus/node-up.rules

groups:
  - name: node-up
    rules:
      - alert: node-up
        expr: up{job="host_resource"} == 0
        for: 15s
        labels:
          severity: 1
          team: node
        annotations:
          summary: "{{ $labels.instance }} has been down for 15s!"

Restart the prometheus service

[root@docker-01 ~]# systemctl restart prometheus.service

[root@docker-01 ~]# netstat -lntp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:8080 0.0.0.0:* LISTEN 11287/docker-proxy

tcp 0 0 172.17.0.1:53 0.0.0.0:* LISTEN 4674/weaver

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1898/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 2079/master

tcp 0 0 127.0.0.1:6782 0.0.0.0:* LISTEN 4674/weaver

tcp 0 0 127.0.0.1:6784 0.0.0.0:* LISTEN 4674/weaver

tcp6 0 0 :::7946 :::* LISTEN 2866/dockerd

tcp6 0 0 :::9100 :::* LISTEN 11022/node_exporter

tcp6 0 0 :::8080 :::* LISTEN 11292/docker-proxy

tcp6 0 0 :::22 :::* LISTEN 1898/sshd

tcp6 0 0 ::1:25 :::* LISTEN 2079/master

tcp6 0 0 :::6783 :::* LISTEN 4674/weaver

tcp6 0 0 :::9090 :::* LISTEN 13410/prometheus

tcp6 0 0 :::9093 :::* LISTEN 12845/alertmanager

tcp6 0 0 :::9094 :::* LISTEN 12845/alertmanager

tcp6 0 0 :::2377 :::* LISTEN 2866/dockerd

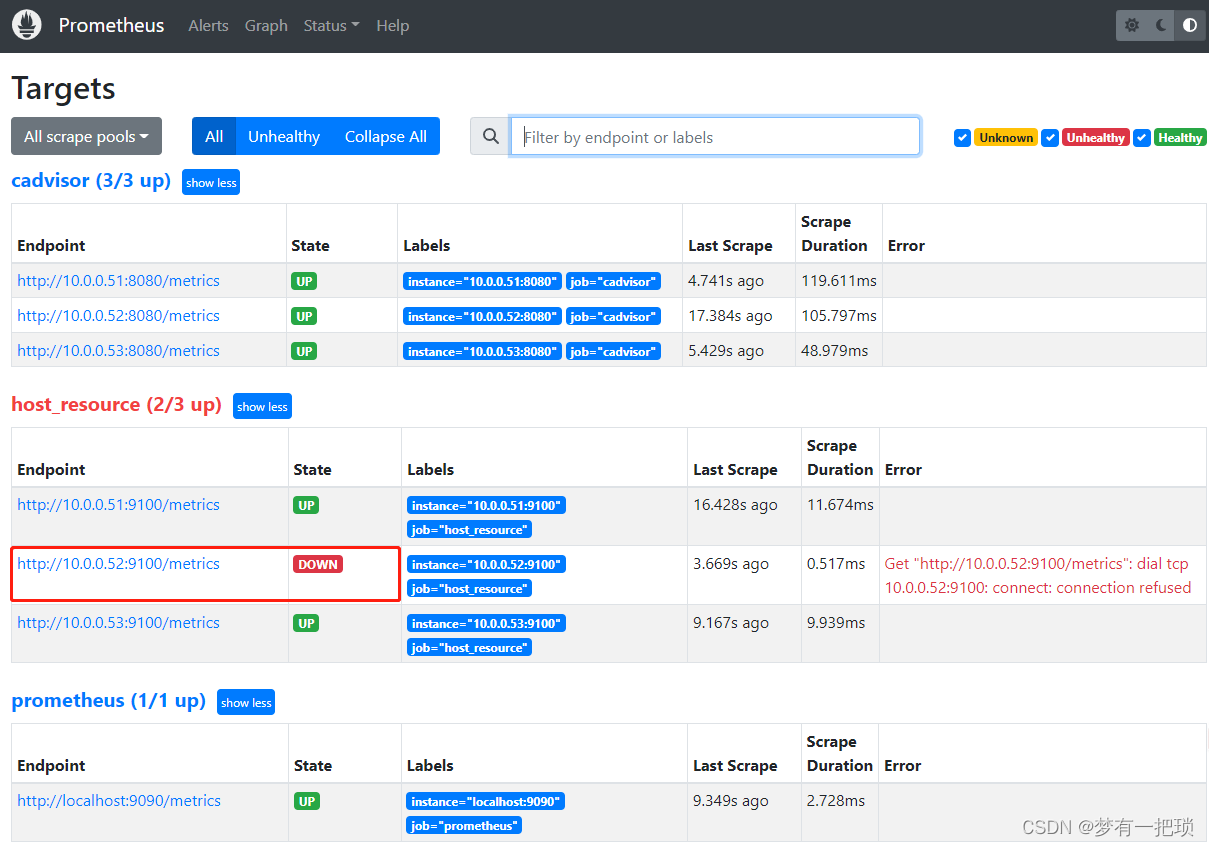

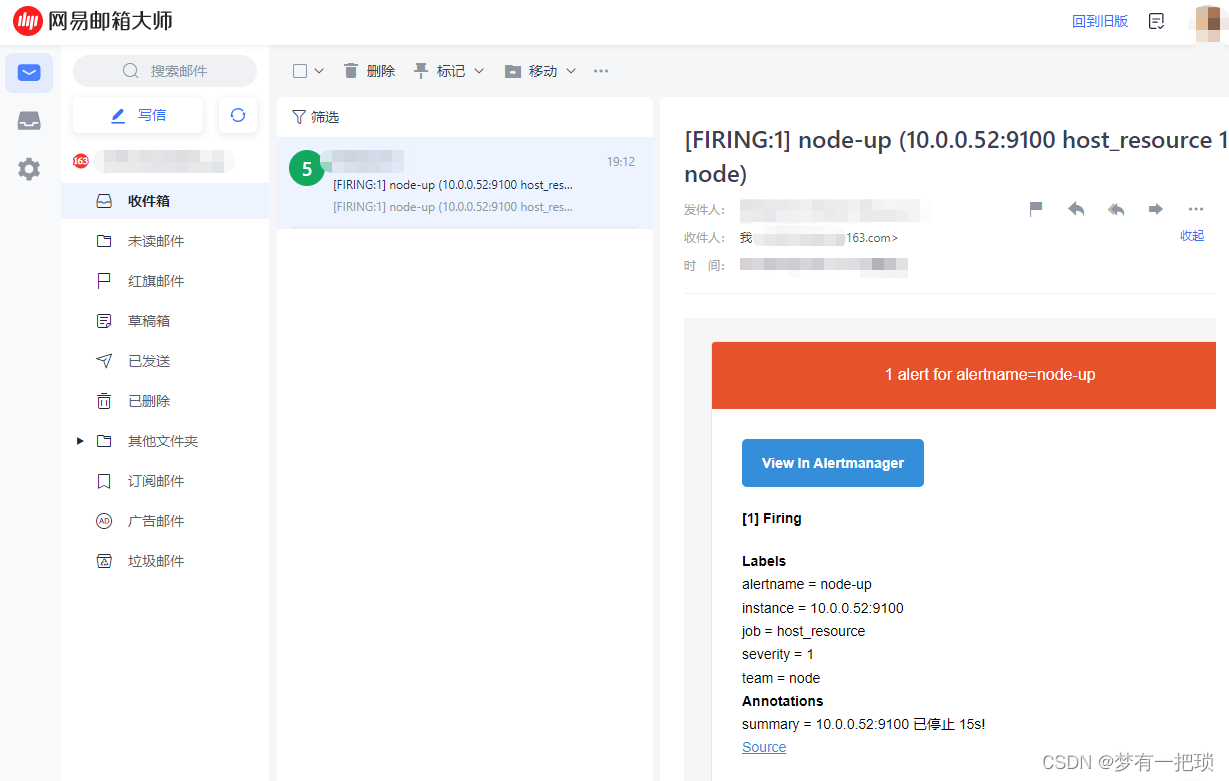

Test that alerting works

[root@docker-02 src]# systemctl stop node_exporter.service

【15】钉钉报警配置

global:resolve_timeout: 5msmtp_from: '*********@qq.com' # 发件人smtp_smarthost: 'smtp.qq.com:465'smtp_auth_username: '*********@qq.com'smtp_auth_password: 'vgoejqgxmrbtbcfg'smtp_require_tls: falsesmtp_hello: 'qq.com'

route:group_by: ['alertname']group_wait: 5sgroup_interval: 5srepeat_interval: 5mreceiver: 'dingding'

receivers:- name: 'dingding'dingding_configs:- url: localhost:80/dingtalk/webook/send # 钉钉的机器人地址

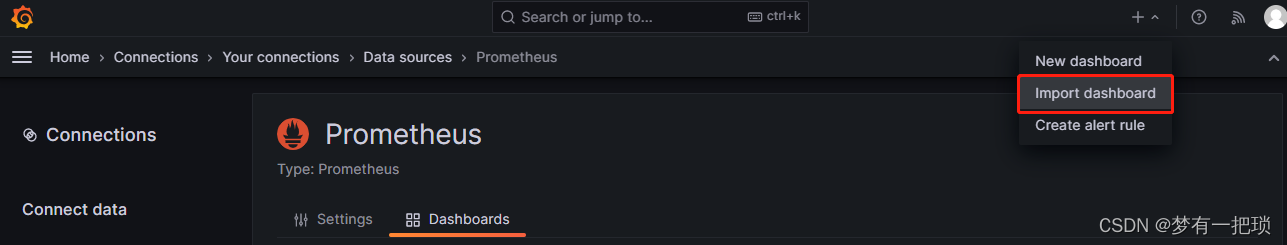

inhibit_rules:- source_match:severity: 'critical'target_match:severity: 'warning'equal: ['alertname', 'dev', 'instance']【16】接入grafan(3000)

- Index of /grafana/yum/rpm/Packages/ | 清华大学开源软件镜像站 | Tsinghua Open Source Mirror

## docker-02

[root@docker-02 ~]# wget http://mirror.tuna.tsinghua.edu.cn/grafana/yum/rpm/Packages/grafana-9.5.7-1.x86_64.rpm

[root@docker-02 ~]# yum -y localinstall grafana-9.5.7-1.x86_64.rpm

[root@docker-02 ~]# systemctl start grafana-server.service

[root@docker-02 ~]# systemctl enable grafana-server.service

Created symlink from /etc/systemd/system/multi-user.target.wants/grafana-server.service to /usr/lib/systemd/system/grafana-server.service.

[root@docker-02 ~]# netstat -lntp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 172.17.0.1:53 0.0.0.0:* LISTEN 3563/weaver

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1923/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 2023/master

tcp 0 0 127.0.0.1:6782 0.0.0.0:* LISTEN 3563/weaver

tcp 0 0 127.0.0.1:6784 0.0.0.0:* LISTEN 3563/weaver

tcp6 0 0 :::7946 :::* LISTEN 2082/dockerd

tcp6 0 0 :::9100 :::* LISTEN 10947/node_exporter

tcp6 0 0 :::22 :::* LISTEN 1923/sshd

tcp6 0 0 :::3000 :::* LISTEN 11412/grafana

tcp6 0 0 ::1:25 :::* LISTEN 2023/master

tcp6 0 0 :::6783 :::* LISTEN 3563/weaver

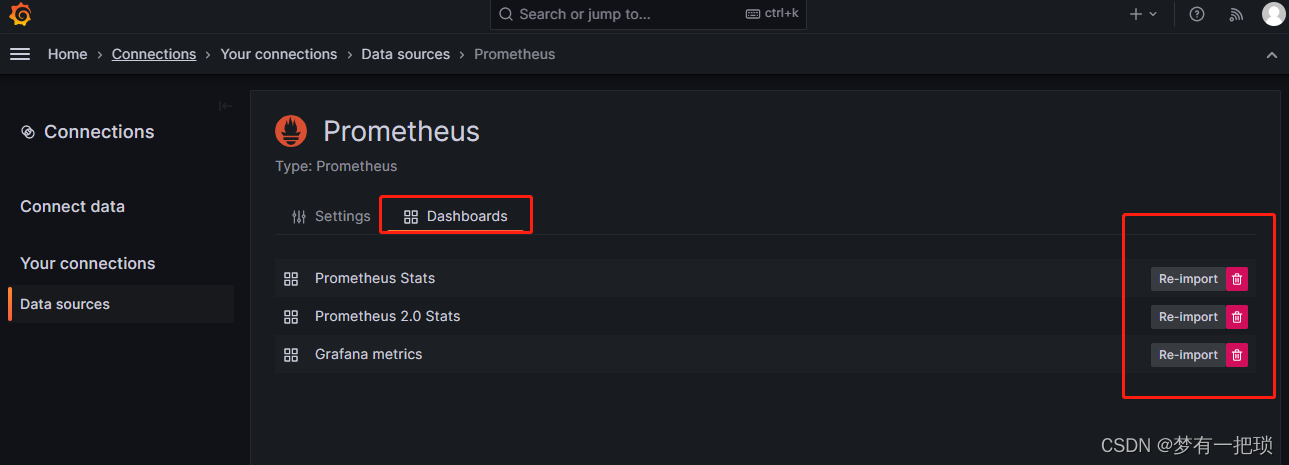

Install plugins, add the data source, and import dashboards

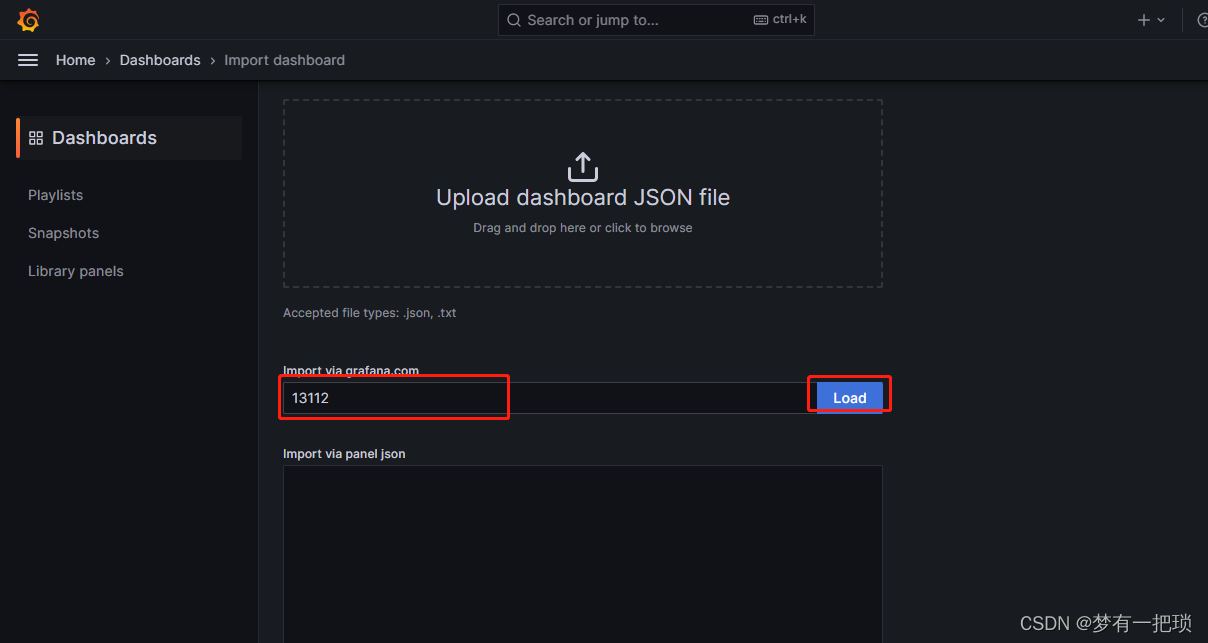

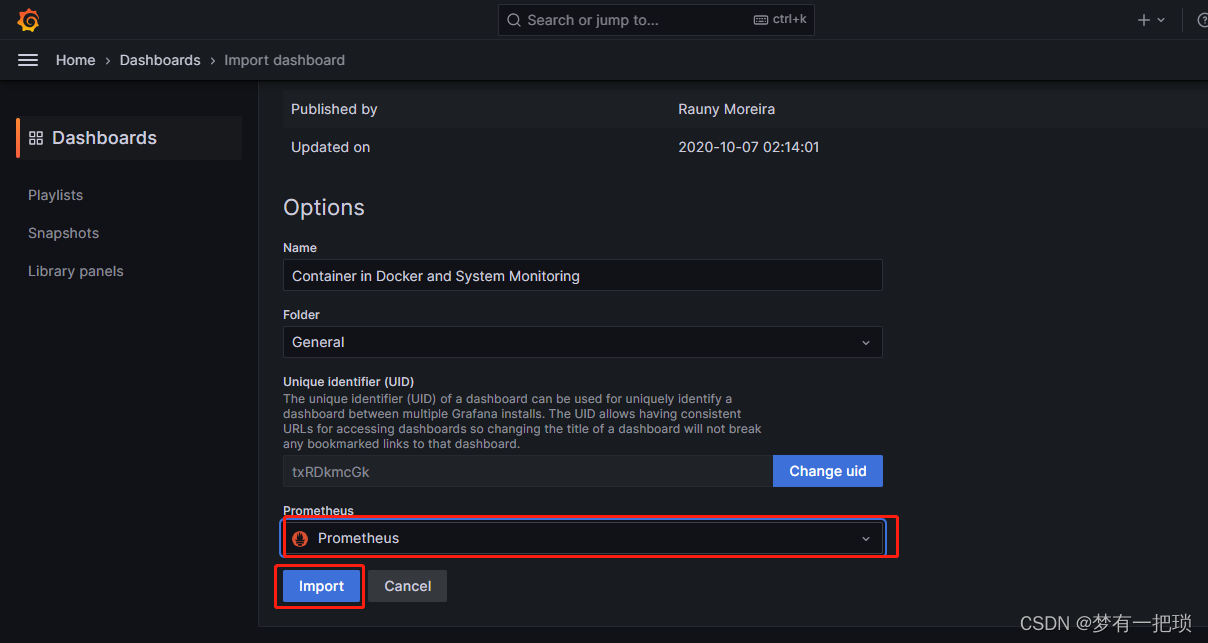

Dashboards for the docker hosts: node-exporter and cadvisor

- Dashboards | Grafana Labs

- Dashboard IDs: 10566, 13112