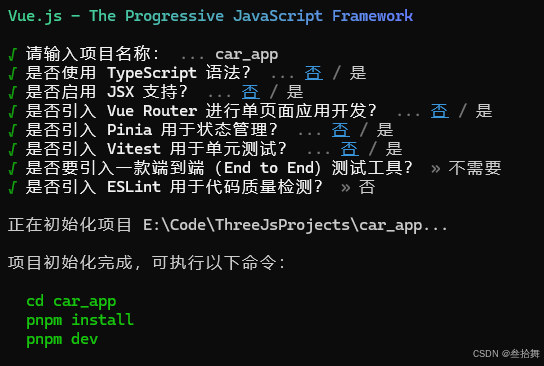

As the title says.

Problem

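In a Vue 3 Element Plus table, I added row drag-and-drop with sortablejs. The rows also contain input fields, and dragging inside one of these inputs triggers sortablejs's row drag as well.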

Solution

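Based on this behavior, I suspected that the drag event was not handled on the input, so it bubbled up to the row and triggered sortablejs's drag there. I therefore tried stopping the event from propagating.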
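To make the bubbling hypothesis concrete, here is a deliberately simplified TypeScript model of the bubble phase. It is not the browser's implementation and not sortablejs internals: an event dispatched on a node visits that node and then each ancestor, unless a listener calls `stopPropagation()`. The tree `tbody > tr > td > input`, the `ModelNode`/`ModelEvent` classes, and the row listener that stands in for the drag logic are all hypothetical.

```typescript
// Simplified model of DOM event bubbling, for illustration only:
// no capture phase, no default actions, no real DOM.
class ModelEvent {
  propagationStopped = false;
  constructor(public readonly type: string) {}

  stopPropagation(): void {
    this.propagationStopped = true;
  }
}

type Listener = (e: ModelEvent) => void;

class ModelNode {
  private listeners = new Map<string, Listener[]>();

  constructor(public readonly name: string, public readonly parent: ModelNode | null = null) {}

  addEventListener(type: string, fn: Listener): void {
    const list = this.listeners.get(type) ?? [];
    list.push(fn);
    this.listeners.set(type, list);
  }

  // Bubble phase: run this node's listeners, then each ancestor's, until a
  // listener calls stopPropagation(). As in the DOM, the remaining listeners
  // on the current node still run after stopPropagation().
  dispatchEvent(e: ModelEvent): void {
    for (let node: ModelNode | null = this; node !== null; node = node.parent) {
      for (const fn of node.listeners.get(e.type) ?? []) fn(e);
      if (e.propagationStopped) return;
    }
  }
}

// Hypothetical tree mirroring a table row: tbody > tr > td > input.
function simulateDragFromInput(stopOnInput: boolean): string[] {
  const log: string[] = [];
  const tbody = new ModelNode("tbody");
  const row = new ModelNode("tr", tbody);
  const input = new ModelNode("input", new ModelNode("td", row));

  // Stand-in for the row drag logic listening on an ancestor (hypothetical).
  row.addEventListener("dragstart", () => log.push("row drag started"));

  if (stopOnInput) {
    // Plays the role of @dragstart.stop on the input.
    input.addEventListener("dragstart", (e) => {
      log.push("input stopped dragstart");
      e.stopPropagation();
    });
  }

  input.dispatchEvent(new ModelEvent("dragstart"));
  return log;
}

console.log(simulateDragFromInput(false).join(", ")); // row drag started
console.log(simulateDragFromInput(true).join(", ")); // input stopped dragstart
```

Without a listener on the input, the event reaches the row and the row drag starts; with a listener that stops propagation, the row never sees it. The model ignores the capture phase: a listener registered for capture on an ancestor would still run before the input's listener.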

TSX version:

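```tsx
import { withModifiers } from 'vue'

// `scoped` is the column's slot scope; `xxx` is a placeholder field
<xq-input
  v-model={scoped.row.xxx}
  onClick={withModifiers(() => {}, ["stop", "prevent"])}
  draggable={true}
  onDragstart={withModifiers(() => {}, ["stop", "prevent"])}
></xq-input>
```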

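Note that `draggable` must be set to `true` here; otherwise the drag event is not bound on the input and its propagation cannot be stopped. A likely reason: `dragstart` is fired at the element being dragged, so if the input is not itself draggable, the drag source is the nearest draggable ancestor (the row) and the event never passes through the input's handler.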
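The handler passed to `withModifiers` in the TSX version is empty, so all the work is done by the modifiers. Vue's `withModifiers` returns a wrapped handler that, for `"stop"` and `"prevent"`, calls `event.stopPropagation()` and `event.preventDefault()` before invoking the original function. The sketch below re-creates only that part under a hypothetical name, `withStopPrevent`, with a minimal hypothetical `StoppableEvent` interface so it runs outside the browser; it is not Vue's source and omits the other modifiers.

```typescript
// Minimal event shape for this sketch (stand-in for the DOM Event interface).
interface StoppableEvent {
  stopPropagation(): void;
  preventDefault(): void;
}

type Modifier = "stop" | "prevent";

// Hypothetical re-creation of the "stop"/"prevent" part of Vue's
// withModifiers: apply each modifier in order, then call the handler.
function withStopPrevent<E extends StoppableEvent>(
  handler: (e: E) => void,
  modifiers: Modifier[],
): (e: E) => void {
  return (e: E) => {
    for (const m of modifiers) {
      if (m === "stop") e.stopPropagation(); // event no longer bubbles to ancestors
      else if (m === "prevent") e.preventDefault(); // cancels the default action
    }
    handler(e);
  };
}

// Usage: a fake event that records which methods were called.
const calls: string[] = [];
const fakeEvent: StoppableEvent = {
  stopPropagation: () => { calls.push("stopPropagation"); },
  preventDefault: () => { calls.push("preventDefault"); },
};

// Same shape as onDragstart={withModifiers(() => {}, ["stop", "prevent"])}
withStopPrevent(() => { calls.push("handler"); }, ["stop", "prevent"])(fakeEvent);
console.log(calls.join(" -> ")); // stopPropagation -> preventDefault -> handler
```

For `dragstart` on the now-draggable input, `preventDefault()` cancels the native drag the input would otherwise start, and `stopPropagation()` keeps the event from reaching the row.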

Template version:

```vue
<xq-input v-model="scoped.row.xxx" @click.stop draggable="true" @dragstart.stop></xq-input>
```